
Table of contents

- What is VMware vSAN?

- Understanding Availability and vSAN Storage Policies Failures to Tolerate (FTT)

- vSAN Blueprints for the SMB

- Blueprint #1 – VMware vSAN 2-node configuration

- Blueprint #2 – VMware vSAN 3-node configuration

- Blueprint #3 – VMware vSAN 4/5/6-node configuration

- Blueprint #4 – VMware vSAN Stretched Cluster

- Is vSAN the Future for Data Storage?

As many organizations enter a hardware refresh cycle, they must consider both the hardware and the technologies available as they plan new purchases and provision new environments. Many businesses now see software-defined storage as a first choice for the backing storage of their enterprise data center and virtualization solutions.

VMware has arguably maintained an edge over the competition across its portfolio of enterprise data center solutions, including software-defined storage. VMware vSAN is the premier software-defined storage solution available to organizations today and offers many excellent capabilities. It is well suited for organizations of all sizes, from small businesses and edge locations to large enterprises.

What is VMware vSAN?

Before looking at the configuration options for deploying VMware vSAN in different environments, let’s first look at the vSAN technology itself. What is it, and how does it work? VMware vSAN is a software-defined enterprise storage solution that enables businesses to implement what is known as hyper-converged infrastructure (HCI).

Instead of the traditional 3-2-1 configuration where organizations have (3) hypervisor hosts, (2) storage switches, and (1) storage area network device (SAN), VMware vSAN enables pooling locally attached storage in each VMware ESXi host as one logical volume. In this way, there is no separately attached storage device providing storage to the vSphere cluster.

VMware vSAN software-defined storage solution

This capability is key to hyper-converged infrastructure, where compute, storage, and networking are all “in the box.” This more modern model for virtualization provides many additional benefits, including the following:

- Control of storage within vSphere – With vSAN, VI admins can provision and manage storage from within the vSphere Client without involving the storage team. Traditional 3-2-1 architectures may rely on the storage team to configure and allocate storage for workloads, slowing down the workflow for turning up new resources.

- Easy scalability – With vSAN, businesses can easily scale up and scale out storage by adding disk groups and vSAN hosts.

- Automation – Using PowerCLI, organizations can fully automate vSAN storage alongside other automated tasks.

- vSphere policy-based management for storage – VMware’s software-defined storage policies allow storage to be controlled and governed in a granular way.

- Optimized for flash storage – VMware vSAN is optimized for flash. Using all-flash vSAN, businesses can run performance- and latency-sensitive workloads while benefiting from all the other vSAN capabilities.

- Easy stretched clusters for zero-data-loss failovers and failbacks – The vSAN Stretched Cluster is an excellent option for site-level resiliency and for workloads that require as little downtime as possible.

- Disaggregated compute and storage resources – Using vSAN HCI Mesh, compute and storage can be disaggregated so that free storage is not landlocked within a particular cluster. Other clusters can consume available storage from a different vSAN cluster.

- Integrated file services – Organizations can run critical file services on top of vSAN.

- iSCSI access to vSAN storage – VMware vSAN provides iSCSI storage that can be used for many different use cases, including Windows Server Failover Clusters.

- Two-node direct connect – Small businesses may have a limited budget for running virtualization clusters. The two-node direct connect option allows connecting the two vSAN hosts of a two-node configuration without a network switch in between. This configuration is also a great option for remote office/branch office (ROBO) and edge environments.

- vSAN Data Persistence platform for modern workloads – VMware vSAN provides the tools needed to allocate storage for modern workloads and includes a growing ecosystem of third-party plugins.

Pricing

VMware vSAN is a paid, licensed product from VMware. The licensed editions include:

- Standard

- Advanced – Adds support for deduplication and compression, RAID-5/6 erasure coding, and VMware vRealize Operations within vCenter

- Enterprise – Adds data-at-rest and data-in-transit encryption, stretched clusters with local failure protection, file services, VMware HCI Mesh, and the Data Persistence platform for modern stateful services

- Enterprise Plus – Adds vRealize Operations 8 Advanced

In addition to the paid licenses, VMware includes a vSAN license for free as part of other paid solutions, such as VMware Horizon Advanced and Enterprise.

Understanding Availability and vSAN Storage Policies Failures to Tolerate (FTT)

A primary requirement for designing your vSAN clusters is understanding how vSAN handles failures and maintains the availability of your data. vSAN relies on VM storage policies to determine these settings and uses the term Failures to Tolerate (FTT) for the number of failures a given configuration can handle while still maintaining access to your data.

Failures to tolerate defines the number of host and device failures that a virtual machine can tolerate. Customers can choose anything from no data redundancy up to RAID-6 erasure coding or RAID-1 mirroring with three failures to tolerate. VMware’s stance on mirroring vs. RAID-5/6 erasure coding is that mirroring provides better performance, while erasure coding provides more efficient space utilization.

- ***Note*** – It is essential to understand that a VM storage policy with FTT = 0 (No Data Redundancy) is not supported and can lead to data loss. This policy setting is not something you would configure for a production cluster.

There are two formulas to note for understanding the needed number of data copies and hosts with VMware vSAN VM storage policies and availability.

- n equals the number of failures to tolerate

- Number of data copies = n + 1

- Number of hosts contributing storage required = 2n + 1

Applying these formulas to RAID-1 mirroring, alongside the fixed host minimums for RAID-5/6 erasure coding, results in the following configurations:

| RAID Configuration | Failures to Tolerate (FTT) | Minimum Hosts Required |
|---|---|---|
| RAID-1 (Mirroring), the default setting | 1 | 3 (two data nodes plus the witness in a 2-node cluster) |
| RAID-5 (Erasure Coding) | 1 | 4 |
| RAID-1 (Mirroring) | 2 | 5 |
| RAID-6 (Erasure Coding) | 2 | 6 |
| RAID-1 (Mirroring) | 3 | 7 |
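The two formulas and the table above can be expressed as a small lookup. This is a minimal planning sketch, assuming the RAID-5/6 minimums are taken directly from the table rather than derived; the names `PolicyRequirements` and `requirements` are hypothetical and not part of any VMware API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PolicyRequirements:
    raid: str
    ftt: int
    data_copies: Optional[int]  # None for erasure coding: data is striped, not copied
    min_hosts: int


# Erasure-coding minimums as listed in the table (not derived from 2n + 1).
ERASURE_CODING_MIN_HOSTS = {("RAID-5", 1): 4, ("RAID-6", 2): 6}


def requirements(raid: str, ftt: int) -> PolicyRequirements:
    """Return data copies and minimum hosts for a hypothetical policy choice."""
    if raid == "RAID-1":
        # FTT=0 is excluded here because it is not meant for production.
        if not 1 <= ftt <= 3:
            raise ValueError("RAID-1 mirroring supports FTT 1 to 3 in this sketch")
        # n + 1 full data copies, 2n + 1 hosts (copies plus witness components)
        return PolicyRequirements(raid, ftt, ftt + 1, 2 * ftt + 1)
    if (raid, ftt) not in ERASURE_CODING_MIN_HOSTS:
        raise ValueError(f"unsupported combination: {raid} with FTT={ftt}")
    return PolicyRequirements(raid, ftt, None, ERASURE_CODING_MIN_HOSTS[(raid, ftt)])


for raid, ftt in [("RAID-1", 1), ("RAID-5", 1), ("RAID-1", 2), ("RAID-6", 2), ("RAID-1", 3)]:
    req = requirements(raid, ftt)
    print(f"{req.raid} FTT={req.ftt}: {req.min_hosts} hosts")
```

Comparing `min_hosts` with the number of hosts (fault domains) in a cluster shows whether a policy can be satisfied at all; a 3-node cluster, for instance, falls short of the RAID-5 entry.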

vSAN Blueprints for the SMB

With the various VM Storage Policies and host configurations, VMware vSAN provides a wealth of configuration options, scalability, and flexibility to satisfy many different use cases and customer needs from small to large. Let’s consider some different VMware vSAN blueprints for implementing VMware vSAN storage solutions and which configurations and blueprints fit individual use cases.

We will consider:

- VMware vSAN 2-node and direct connect configuration

- VMware vSAN 3-node configuration

- VMware vSAN 4/5/6-node configuration

- VMware vSAN stretched Clustering

Blueprint #1 – VMware vSAN 2-node configuration

As mentioned earlier, the VMware vSAN two-node configuration is an excellent option for organizations looking to satisfy specific use cases. The beauty of the VMware vSAN two-node configuration is its simplicity and efficient use of hardware resources, which allows businesses to run business-critical workloads in remote sites, ROBO configurations, and edge locations without needing a rack full of hardware resources.

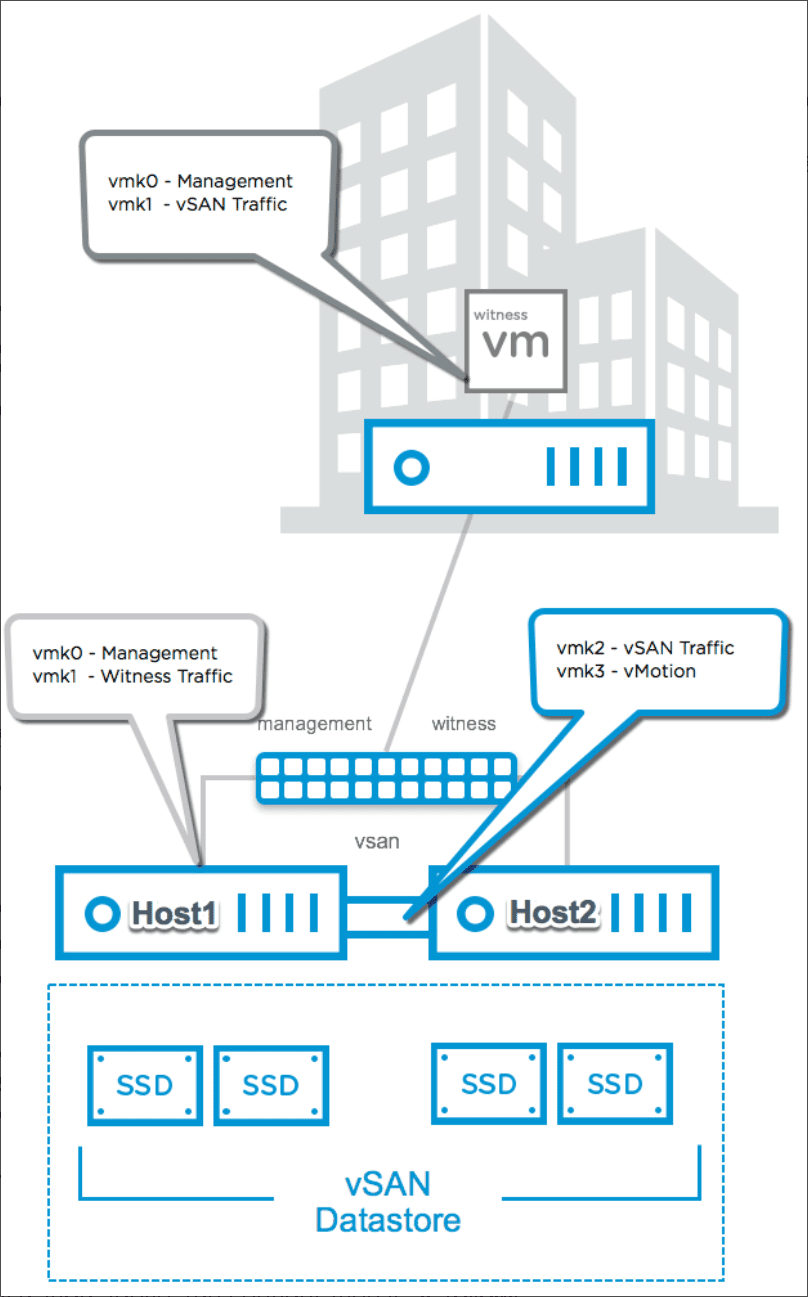

The VMware vSAN 2-node configuration is a specialized version of a vSAN stretched cluster with three fault domains. Each ESXi node in the 2-node configuration comprises a fault domain, and the witness host appliance, which runs as a virtual machine, comprises the third. As a side note, the ESXi witness host virtual machine appliance is the only production-supported nested installation of VMware ESXi.

The witness host is a specialized ESXi server running as a virtual machine in a different VMware vSphere environment. Currently, the witness host is supported only in another vSphere environment. However, there have been rumblings that VMware will support running the ESXi witness appliance in other environments, such as Amazon AWS, Microsoft Azure, or Google Cloud (GCP). If this happens in a future vSAN release, customers will be able to architect their 2-node configurations with even more flexibility.

One of the great new witness features, released in vSphere 7, is the ability to use a single witness appliance to house the witness components for multiple vSAN 2-node clusters. Because the witness can be shared between clusters, organizations can reduce the complexity and resources the witness components require. Up to 64 2-node clusters can share a single witness appliance.
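With a shared witness, sizing the number of witness appliances becomes simple arithmetic. The sketch below assumes the 64-cluster limit is the only constraint; in practice the witness appliance size and its component limits may also matter. `shared_witnesses_needed` is a hypothetical helper name.

```python
import math

CLUSTERS_PER_SHARED_WITNESS = 64  # limit stated in the text


def shared_witnesses_needed(two_node_clusters: int) -> int:
    """Witness appliances needed if each one serves up to 64 2-node clusters."""
    if two_node_clusters < 0:
        raise ValueError("cluster count cannot be negative")
    return math.ceil(two_node_clusters / CLUSTERS_PER_SHARED_WITNESS)


print(shared_witnesses_needed(150))  # 3
```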

Primary Failures to Tolerate (PFTT)

From a data perspective, the witness host never holds data objects, only witness components that are minimal in size since these are basically small metadata files. The two physical ESXi hosts that comprise the 2-node cluster store the data objects.

With the vSAN 2-node deployment, data is stored in a mirrored data protection configuration. It means that one copy of your data is stored on the first ESXi node, and another copy is stored on the second ESXi node in the 2-node Cluster. The witness component is, as described above, stored on the witness host appliance.

In vSAN 6.6 or higher 2-node clusters, PFTT may not be greater than 1. This is because 2-node clusters contain only three fault domains.
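The PFTT limit follows from the same 2n + 1 rule used for host counts, applied to fault domains: tolerating n failures with mirroring needs 2n + 1 fault domains. A minimal sketch, with `max_mirrored_ftt` as a hypothetical helper name:

```python
def max_mirrored_ftt(fault_domains: int) -> int:
    """Largest FTT that RAID-1 mirroring can satisfy with the given fault domains.

    Tolerating n failures needs 2n + 1 fault domains, so n = (fd - 1) // 2.
    """
    if fault_domains < 1:
        raise ValueError("at least one fault domain is required")
    return (fault_domains - 1) // 2


# 2-node cluster: node A, node B, and the witness appliance
print(max_mirrored_ftt(3))  # 1
```

Adding a fourth fault domain would not help; a fifth is needed before FTT=2 becomes possible.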

Enhancements with vSAN 7 Update 3

VMware vSAN 7 Update 3 introduces the ability to provide a secondary level of resilience in a two-node cluster. If each host runs more than one disk group, the cluster can tolerate multiple failures: with vSAN 7 Update 3, you can suffer a complete host failure, a subsequent failure of the witness, and a disk group failure. That is three major failures in a two-node cluster.

VMware vSAN 2-node configuration

Direct Connect

The two nodes are configured in a single site and, in most cases, are connected through a network switch. However, in the “Direct Connect” configuration, released with vSAN 6.5, the ESXi hosts that make up the 2-node vSAN cluster can be connected with a simple network cable, hence “directly connected.” This capability further reduces the required hardware footprint.

With new server hardware, organizations can potentially connect two nodes at 100 Gbit using the direct connect option and 100 Gbit network adapters, all without any network switching infrastructure in between. By default, the 2-node vSAN witness needs connectivity to each vSAN data node’s VMkernel interfaces tagged for vSAN traffic.

However, VMware introduced a specialized command-line tool that allows specifying an alternate VMkernel interface designated to carry traffic destined for the witness node, separate from the vSAN tagged VMkernel interface. This configuration allows for separate networks between node-to-node and node-to-witness traffic.

VMware vSAN 2-node Direct Connect configuration

Use case

The 2-node and 2-node Direct Connect configurations are excellent choices for small businesses that need to contain costs while maintaining a high level of availability. They are also a great fit for organizations of any size that need to place a vSAN cluster in an edge environment with minimal hardware.

Blueprint #2 – VMware vSAN 3-node configuration

The next configuration we want to cover is the 3-node vSAN cluster. It is a more traditional vSAN cluster design and provides an “entry-level” configuration, since it requires the least hardware of any design apart from the two-node configuration.

With a 3-node vSAN cluster design, you do not set up stretched Clustering. Instead, each host comes into the vSAN Cluster as a standalone host. Each is considered its own fault domain. With a 3-node vSAN configuration, you can tolerate only one host failure with the number of failures to tolerate set to 1. With this configuration, VMware vSAN saves two replicas to different hosts. Then, the witness component is saved to the third host.

Default fault domains in a 3-node vSAN cluster

If you attempt to configure a vSAN 3-node cluster with RAID-5/6 (erasure coding), you will see the following error when assigning the RAID-5 erasure coding policy:

- “Datastore does not match current VM policy. Policy specified requires 4 fault domains contributing all-flash storage, but only 3 found.”

Configuring the VM Storage Policy on a 3-node cluster to RAID-5 or RAID-6

The same is true if you switch to the 3 failures – RAID-1 (Mirroring) storage policy: once you select that policy, your vSAN datastore is not listed as compatible.

Viewing compatible datastores with the 3 failures – RAID-1 mirroring configuration

With only three hosts, this makes sense. Additional mirrored copies would have to share hosts, so they would add no resiliency: losing a single host would take several copies of the data with it.

Limitations of the 3-node vSAN cluster configuration

The 3-node vSAN cluster configuration has limitations that can constrain operations and expose you to data loss during a failure. These include:

-

- During a failure scenario of a host or other component, vSAN cannot rebuild data on another host or protect your data from another failure.

-

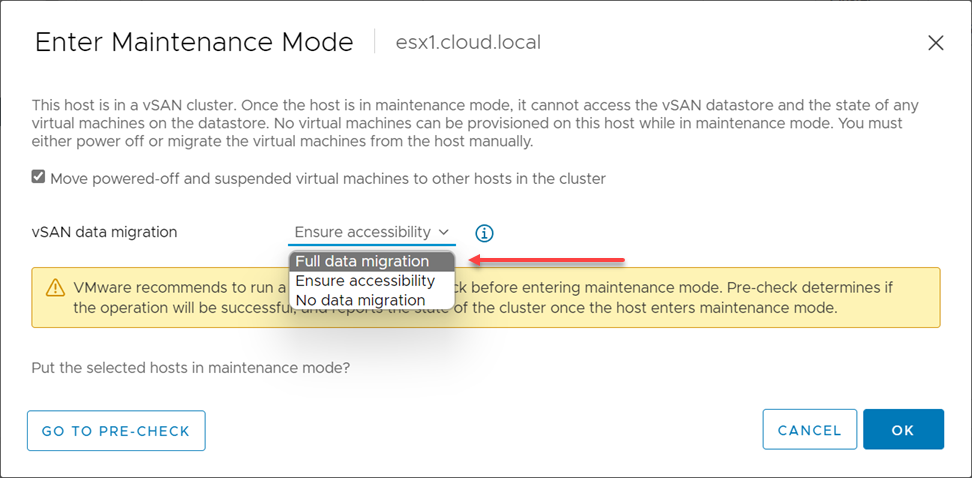

- Another consideration with the 3-node Cluster is when you need to take a host down for maintenance with a planned outage, vSAN cannot evacuate data from the host to maintain policy compliance due to the limited number of nodes. Therefore, when entering maintenance mode on a 3-node cluster, you can only set the Ensure data accessibility data evacuation option.

-

- If you are in a situation where you already have a 3-node cluster with an inaccessible host or disk group, and you have another failure, your VMs will be inaccessible.

-

- It is also worth considering you will not be able to create a new snapshot on virtual machines running in a 3-node vSAN cluster when a host is down, even for planned maintenance. Snapshots require the VM storage policy to be met, so you run into an issue where you don’t have all the components available to take a snapshot. It proves to be a “real-world” impact as even backup processes that attempt to run on a 3-node vSAN cluster with a host down will fail until the host is brought back online to provide the availability to all the data components.

Use case

What is the use case for the 3-node vSAN cluster? It provides an entry-level configuration that uses minimal resources while hosting both data components and the witness component within the same cluster. Furthermore, it does this without needing an external witness appliance.

However, with the 3-node cluster, you can’t use a “Direct Connect” configuration as you can with the 2-node cluster. So, while the 3-node vSAN cluster configuration is supported, it is generally not a recommended configuration. Outside of the 2-node option, VMware recommends the 4-node cluster, as it provides the additional resources needed to perform maintenance without falling out of policy compliance.

A 3-node cluster is still an excellent option for businesses that need to have the resiliency, flexibility, and capabilities offered by vSAN without large hardware investments. As long as customers understand the limitations of the 3-node configuration, it can run production workloads with a reasonable degree of high availability.

Blueprint #3 – VMware vSAN 4/5/6-node configuration

Moving past the 3-node vSAN cluster configuration, you get into the vSAN Cluster configurations that offer the most resiliency for your data, performance, and storage space efficiency.

4-node vSAN Cluster

Starting with the 4-node vSAN cluster, customers have VMware’s recommended configuration outside of the 2-node stretched cluster. As mentioned, the 3-node vSAN cluster is the minimum number of hosts for a standard (non-2-node) cluster. As detailed earlier, when a host or disk group is down in a 3-node configuration, vSAN operates in a degraded state in which you cannot withstand any other failures, and operations are impacted (backups can’t complete, etc.).

Moving to the 4-node configuration, you are not subject to the same constraints as the 3-node cluster. When a host or disk group is down, your data remains available as normal, and operationally you can still create snapshots, perform backups, and do other tasks.

In a 4-node vSAN Cluster, if you have a node or disk group fail or need to take a host down for maintenance, vSAN can perform “self-healing” and reprotect the data objects using the available disk space in the remaining three nodes of the Cluster. What do we mean by “self-healing?”

With an FTT=1 mirroring policy, you always have a primary copy of the data, a secondary copy of the data, and a witness component, each placed on a separate host. In a 4-node vSAN cluster, you have enough hosts that when a node or component fails, there is another host on which to rebuild the missing component. Whereas in a 3-node cluster you must wait for the failed host to become available again, in a 4-node cluster the extra host lets vSAN immediately begin rebuilding components, or migrating data to it in the case of a planned maintenance mode event.
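The self-healing difference comes down to whether a surviving host exists that does not already hold a component of the object. The sketch below is a deliberately simplified model: it ignores free capacity and vSAN’s rebuild timing, and `pick_rebuild_host` and the host names are hypothetical.

```python
from typing import Dict, List, Optional


def pick_rebuild_host(placement: Dict[str, str], hosts: List[str], failed: str) -> Optional[str]:
    """Return a surviving host that holds no component of this object, or None.

    placement maps component name -> host. None means there is nowhere to
    rebuild, so the object stays degraded until the failed host returns.
    """
    occupied = set(placement.values())
    for host in hosts:
        if host != failed and host not in occupied:
            return host
    return None


# FTT=1 mirror: two data copies and a witness, each on its own host
placement = {"copy-1": "esx01", "copy-2": "esx02", "witness": "esx03"}

print(pick_rebuild_host(placement, ["esx01", "esx02", "esx03"], failed="esx02"))           # None
print(pick_rebuild_host(placement, ["esx01", "esx02", "esx03", "esx04"], failed="esx02"))  # esx04
```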

In the 4-node configuration, you can also use the “Full data migration” option when placing hosts in maintenance mode, keeping the FTT=1 requirement met.

Choosing the vSAN data migration option

4-node vSAN introduces Erasure Coding

Another advantage of the 4-node vSAN cluster over the 3-node cluster is the ability to use erasure coding instead of data mirroring. What is erasure coding?

- Erasure coding protects data by fragmenting it across physical boundaries in a way that maintains access to the data even if part of it is lost. In the case of VMware vSAN, data is stored as object storage: vSAN stripes each object’s data, together with parity, across multiple hosts.

With RAID-5 erasure coding, data is written as a 3+1 stripe (three data fragments plus one parity fragment) across four components on four different hosts. For this reason, a 4-node vSAN cluster can use the RAID-5 erasure coding storage policy but not the RAID-6 policy, which requires at least 6 hosts.
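Single-parity erasure coding is commonly illustrated with XOR: the parity fragment is the byte-wise XOR of the data fragments, and XOR-ing the survivors rebuilds any one lost fragment. This is a sketch of that general technique, assuming a 3+1 stripe; vSAN’s internal encoding and on-disk layout are not shown here, and `xor_fragments` is a hypothetical helper.

```python
from functools import reduce
from typing import List


def xor_fragments(fragments: List[bytes]) -> bytes:
    """Byte-wise XOR of equal-length fragments (single-parity erasure code)."""
    if len({len(f) for f in fragments}) != 1:
        raise ValueError("fragments must all be the same length")
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*fragments))


# A hypothetical 3+1 stripe: three data fragments plus one parity fragment,
# each of which would live on a different host.
data = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_fragments(data)

# Lose the host holding data[1]; XOR of the survivors rebuilds it.
rebuilt = xor_fragments([data[0], data[2], parity])
print(rebuilt)  # b'BBBB'
```

Losing a second fragment from the same stripe would leave too little information to rebuild, which is why RAID-5 stops at FTT=1.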

RAID-5 data placement

What is the advantage of erasure coding over data mirroring? Erasure coding storage policies use capacity on the vSAN datastore more efficiently. Whereas RAID-1 mirroring with FTT=1 consumes twice the capacity of the data set, RAID-5 erasure coding consumes only 1.33x. This results in significant space savings compared to the mirroring policies.

- FTT=1

- 1.33x capacity, compared to 2x with RAID-1

- Requires 4 hosts
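The capacity multipliers quoted in this article translate directly into raw-capacity estimates. This minimal sketch ignores slack space, deduplication and compression, and metadata overhead; `raw_capacity_gb` is a hypothetical helper name.

```python
# Raw capacity consumed per unit of usable data, per the figures in this article.
CAPACITY_MULTIPLIER = {
    ("RAID-1", 1): 2.0,    # two full copies
    ("RAID-5", 1): 4 / 3,  # 3 data + 1 parity fragment
    ("RAID-1", 2): 3.0,    # three full copies
    ("RAID-6", 2): 1.5,    # 4 data + 2 parity fragments
}


def raw_capacity_gb(usable_gb: float, raid: str, ftt: int) -> float:
    """Estimate raw datastore capacity needed for usable_gb of VM data."""
    return usable_gb * CAPACITY_MULTIPLIER[(raid, ftt)]


for raid, ftt in CAPACITY_MULTIPLIER:
    print(f"{raid} FTT={ftt}: {raw_capacity_gb(1000, raid, ftt):.0f} GB raw for 1000 GB")
```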

However, there is a tradeoff between more efficient space utilization and performance. Erasure coding amplifies storage I/O on writes, not reads: the current data and parity must be read and merged, and the new parity must be written.
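The read-and-merge step can be counted with the textbook read-modify-write model for a small, partial-stripe write. This is an assumption-laden sketch: it shows why parity RAID amplifies writes, but vSAN’s actual I/O path (caching tier, and the vSAN 7.0 Update 2 optimizations) will not match these counts exactly. `backend_ios_per_small_write` is a hypothetical name.

```python
from typing import Tuple

PARITY_FRAGMENTS = {"RAID-5": 1, "RAID-6": 2}


def backend_ios_per_small_write(raid: str, ftt: int = 1) -> Tuple[int, int]:
    """Return (reads, writes) for one small guest write in a textbook model.

    RAID-1 writes every mirror copy (ftt only matters for RAID-1 here).
    RAID-5/6 do a read-modify-write: read the old data and old parity,
    then write the new data and new parity.
    """
    if raid == "RAID-1":
        return 0, ftt + 1
    if raid not in PARITY_FRAGMENTS:
        raise ValueError(f"unknown RAID level: {raid}")
    parity = PARITY_FRAGMENTS[raid]
    return 1 + parity, 1 + parity


for level in ("RAID-1", "RAID-5", "RAID-6"):
    reads, writes = backend_ios_per_small_write(level)
    print(f"{level}: {reads} reads + {writes} writes per small write")
```

The extra parity fragment is why RAID-6 amplifies writes more than RAID-5.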

RAID-5/6 Erasure Coding Improvements in vSAN 7.0 Update 2

VMware has been working hard to improve the performance across the board with each release of VMware vSAN. One of the improvements noted with the release of VMware vSAN 7.0 Update 2 is performance enhancements related to RAID-5/6 erasure coding.

As mentioned above, part of the performance penalty with erasure coding comes from the I/O amplification of writing parity information. The improvements in vSAN 7.0 Update 2 change how vSAN performs the parity calculation and optimize how it reads the old data and parity.

VMware optimized the calculations without changing the data structures used in vSAN’s erasure codes. With these optimizations, workloads using RAID-5 and RAID-6 storage policies benefit, particularly those with large sequential write bursts. In addition, CPU cycles per I/O are reduced, benefiting the virtual machines using the vSAN datastore. Finally, RAID-6 benefits even more than RAID-5 because of RAID-6’s greater write amplification.

Failures to tolerate (FTT)

As noted above, if you assign the RAID-5 erasure coding policy and one host in a 4-node vSAN cluster fails, you are in a degraded state with no opportunity for a “self-healing” rebuild. This is similar to a 3-node vSAN cluster using the mirroring policy: once a failure happens in a 4-node cluster running RAID-5 erasure coding, there is no spare hardware on which to recreate the missing components immediately.

Use Cases – 4-node vSAN Cluster

The 4-node vSAN Cluster is the recommended vSAN configuration for the FTT=1 mirror as it provides the ability to recover using self-healing. However, the 4-node Cluster is not the recommended configuration for RAID-5 erasure coding as you need at least a 5-node cluster for self-healing RAID-5 erasure coding.
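The recommendation boils down to a rule of thumb: self-healing needs at least one host more than the policy’s minimum. A minimal sketch, assuming host count is the only constraint (free capacity for the rebuild is ignored); `can_self_heal` is a hypothetical helper.

```python
# Minimum hosts per policy, as listed in the FTT table earlier in this article.
MIN_HOSTS = {("RAID-1", 1): 3, ("RAID-5", 1): 4, ("RAID-1", 2): 5, ("RAID-6", 2): 6}


def can_self_heal(cluster_hosts: int, raid: str, ftt: int) -> bool:
    """True if, after losing one host, enough hosts remain to restore compliance."""
    return cluster_hosts - 1 >= MIN_HOSTS[(raid, ftt)]


for hosts, raid, ftt in [(3, "RAID-1", 1), (4, "RAID-1", 1), (4, "RAID-5", 1), (5, "RAID-5", 1)]:
    outcome = "self-heals" if can_self_heal(hosts, raid, ftt) else "stays degraded"
    print(f"{hosts} hosts, {raid} FTT={ftt}: {outcome}")
```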

5-node vSAN Cluster

The 5-node vSAN Cluster adds the advantage of having the extra host needed to provide immediate “self-healing” with RAID-5 erasure coding. With the 5-node vSAN Cluster configuration, you still don’t have the number of nodes needed for RAID-6 erasure coding since RAID-6 requires a minimum 6-node vSAN configuration.

6-node vSAN Cluster

The 6-node vSAN cluster is required for RAID-6 erasure coding. With RAID-6 erasure coding, the failures to tolerate is 2, and it consumes 1.5x the capacity of the data, compared to 3x for RAID-1 mirroring at FTT=2. So, for FTT=2, it is much more cost-effective from a capacity perspective. However, RAID-6 amplifies storage I/O even more than RAID-5, since you are essentially writing “double parity.”

VMware vSAN RAID-6 erasure coding

Use cases for 6-node vSAN Cluster

The 6-node vSAN Cluster provides the ability to jump to the FTT=2 level of data resiliency, which is where most business-critical applications need to be. In addition, the 6-node vSAN Cluster allows taking advantage of the RAID-6 erasure coding storage policy with the resiliency benefits and space savings benefits from erasure coding. With the new improvements in vSAN 7.0 Update 2 from a performance perspective, RAID-5/6 erasure coding has become a more feasible option for performance-sensitive workloads.

Blueprint #4 – VMware vSAN Stretched Cluster

For the ultimate in resiliency and site-level protection, organizations can opt for the vSAN stretched Cluster. The Stretched Cluster functionality for VMware vSAN was introduced in vSAN 6.1 and is a specialized vSAN configuration targeting a specific use case – disaster/downtime avoidance.

VMware vSAN stretched clusters provide an active/active configuration in which identically configured ESXi hosts, distributed evenly between two sites, function as a single logical cluster, with each site configured as its own fault domain. Additionally, a Witness Host provides the witness component of the stretched cluster.

As we learned earlier, the 2-node vSAN cluster is a miniature stretched cluster: each node is a fault domain, and the Witness Host provides the witness components. A larger stretched cluster simply adds multiple ESXi hosts to each data-site fault domain.

One of the design considerations with the Stretched Cluster configuration is the need for a high bandwidth/low latency link connecting the two sites. The vSAN Stretched Cluster requires latency of no more than 5 ms RTT (Round Trip Time). This requirement can potentially be a “deal-breaker” when organizations consider using a Stretched Cluster configuration between two available sites if the latency requirements cannot be met.

A few things to note about the vSAN Stretched Cluster:

- X+Y+Z – This nomenclature describes the Stretched Cluster configuration, where X is the number of hosts in the first data site, Y is the number of hosts in the second data site, and Z is the number of witness hosts at site C

- Data sites are where virtual machines are deployed

- The minimum configuration is 1+1+1 (3 nodes)

- The maximum configuration as of vSAN 7 Update 2 is 20+20+1 (41 nodes)
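The X+Y+Z notation and its limits can be captured in a small validator. This sketch assumes the 1+1+1 minimum and 20+20+1 maximum listed above and a single witness host; `StretchedLayout` and `validate` are hypothetical names.

```python
from typing import NamedTuple


class StretchedLayout(NamedTuple):
    """X+Y+Z stretched cluster layout (illustrative)."""
    site_a: int   # X: data hosts in the first data site
    site_b: int   # Y: data hosts in the second data site
    witness: int  # Z: witness hosts at site C

    def total_nodes(self) -> int:
        return self.site_a + self.site_b + self.witness


MAX_HOSTS_PER_DATA_SITE = 20  # 20+20+1 maximum as of vSAN 7 Update 2


def validate(layout: StretchedLayout) -> None:
    """Raise ValueError if the layout falls outside the 1+1+1 to 20+20+1 range."""
    if layout.witness != 1:
        raise ValueError("this sketch expects exactly one witness host")
    for name, hosts in (("site A", layout.site_a), ("site B", layout.site_b)):
        if not 1 <= hosts <= MAX_HOSTS_PER_DATA_SITE:
            raise ValueError(f"{name} needs 1 to {MAX_HOSTS_PER_DATA_SITE} data hosts")


for layout in (StretchedLayout(1, 1, 1), StretchedLayout(4, 4, 1), StretchedLayout(20, 20, 1)):
    validate(layout)
    print(f"{layout.site_a}+{layout.site_b}+{layout.witness} = {layout.total_nodes()} nodes")
```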

The virtual machines in the first site have data components stored in each site and the witness component stored on the Witness Host. Organizations use the fault domains and affinity rules to keep virtual machines running in the preferred sites.

Even if an entire site goes down, each virtual machine still has a copy of its data in the remaining site, and because more than 50% of its components are still available, the VM remains accessible. The beauty of the Stretched Cluster is that customers minimize or eliminate data loss, and failover and failback (which are often underestimated) are greatly simplified. At failback time, DRS can simply move virtual machines back to the preferred site.
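The “more than 50% of components” rule can be checked with a simple vote count. This sketch gives every component one vote, which is a simplification, and models only the classic rule; it does not capture how vSAN 7 Update 3 keeps VMs available after a site loss followed by a witness outage. `object_accessible` and the location names are hypothetical.

```python
from typing import List, Set, Tuple

# (location, holds_data) for each component of one VM object.
# Simplified model: every component carries one vote.
Component = Tuple[str, bool]


def object_accessible(components: List[Component], down: Set[str]) -> bool:
    """Accessible if more than 50% of votes survive and a data copy survives."""
    alive = [c for c in components if c[0] not in down]
    has_quorum = len(alive) * 2 > len(components)
    has_data = any(holds_data for _, holds_data in alive)
    return has_quorum and has_data


vm_disk = [("site-a", True), ("site-b", True), ("witness", False)]
print(object_accessible(vm_disk, {"site-a"}))             # True: site-b copy + witness
print(object_accessible(vm_disk, {"site-a", "witness"}))  # False: 1 of 3 votes left
```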

Within each site, vSAN uses mirroring to create the redundant copies needed to protect data within the site, while the mirrored copies between the sites protect against data loss from a site-wide failure.
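Combining site-level and local mirroring multiplies the capacity cost. This sketch assumes RAID-1 inside each site, as described above, and ignores the witness (which holds only small metadata components) and slack space; `stretched_raw_capacity_gb` is a hypothetical helper.

```python
def stretched_raw_capacity_gb(usable_gb: float, local_ftt: int) -> float:
    """Raw capacity for a stretched cluster with RAID-1 inside each site.

    Each of the two data sites holds a full copy, and each site mirrors its
    copy local_ftt + 1 times. Witness components are ignored (metadata only).
    """
    if local_ftt < 0:
        raise ValueError("local_ftt cannot be negative")
    data_sites = 2
    copies_per_site = local_ftt + 1
    return usable_gb * data_sites * copies_per_site


print(stretched_raw_capacity_gb(1000, local_ftt=0))  # 2000 (site mirroring only)
print(stretched_raw_capacity_gb(1000, local_ftt=1))  # 4000 (plus local RAID-1)
```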

vSAN 7 Update 3 Stretched Cluster enhancements

vSAN 7 Update 3 brings major improvements to Stretched Cluster resiliency. If one of the data sites becomes unavailable and is followed by a planned or unplanned outage of the witness host, data and VMs remain available. This is a tremendous improvement over previous releases, where losing a site and then the Witness Host left the virtual machines in the remaining site inaccessible.

Use Cases for vSAN Stretched Clusters

The use case for vSAN Stretched Clusters is fairly obvious: they protect an organization and its data from the catastrophic loss of an entire site, such as a natural disaster that destroys a data center or another event that takes the site offline.

With Stretched Clustering, your RPO is effectively zero, and your RTO is essentially the time needed for the HA event that restarts virtual machines in the remaining data site. The important point is that the data is up to date and available: there is no data skew and no need to restore from backups.

This drastically reduces the administrative effort and time needed to bring services back online after a disaster. For businesses with mission-critical services that depend on real-time failover, no data loss, and the ability to get services back online in minutes, Stretched Clustering fits perfectly.

However, a Stretched Cluster is more expensive from a hardware perspective. As a best practice, you would run at least four vSAN hosts in each site (fault domain). This preserves self-healing in both locations if you lose a host or disk group on either side.

To protect your VMware environment, Altaro offers the ultimate VMware backup service to secure backup quickly and replicate your virtual machines. We work hard perpetually to give our customers confidence in their backup strategy.

Plus, you can visit our VMware blog to keep up with the latest articles and news on VMware.

Is vSAN the Future for Data Storage?

As hardware refresh cycles come around, organizations are looking at modern solutions. Software-defined storage is a great option for businesses looking to refresh their back-end storage along with their hypervisor hosts. VMware vSAN is a premier enterprise software-defined storage solution on the market today, providing many great features, capabilities, and benefits to businesses looking to modernize their data centers.

VMware vSAN allows customers to scale storage quickly and easily by adding more storage to each hypervisor host or adding more hosts to the cluster. In addition, they can automate software-defined storage without the complexity of provisioning storage on a traditional SAN device. VMware vSAN is managed from within the vSphere Client, alongside the other everyday tasks a VI admin performs. This simplifies change control requests and provisioning workflows, as VI admins can handle storage administration along with their everyday vSphere duties without involving the storage team.

As the configurations above show, VMware vSAN can be sized to fit just about any environment and use case. The 2-node Direct Connect vSAN cluster allows creating a minimal-hardware cluster that can house business-critical workloads in an edge environment, ROBO, or remote data center without expensive networking gear. The traditional 3-node vSAN cluster protects VMs with little hardware investment and scales easily into the 4/5/6-node vSAN cluster configurations.

With the 4/5/6-node vSAN configurations, organizations benefit from expanded storage policies, including mirroring and RAID-5/6 erasure coding, which helps minimize the capacity cost of mirroring data. In addition, the improvements made in vSAN 7 Update 2 have greatly improved RAID-5/6 erasure coding performance, helping to close the gap when choosing between mirroring and erasure coding for performance reasons.

The vSAN software-defined storage solution helps businesses modernize and scale their existing enterprise data center solutions to meet their business’s current and future demands and does so with many different options and capabilities. In addition, by leveraging software-defined technologies, organizations can solve technical challenges that have traditionally been difficult to solve using standard technologies.
