
The topic of quorum in Microsoft Failover Clustering often gets very little mention. Quorum guides the actions of surviving nodes when there are system-level failures. This technology has evolved substantially over the last few versions of Windows/Hyper-V Server and many are not aware of the changes.

The Purpose and Function of Quorum

Quorum has one basic purpose: to ensure that protected roles can always find their way to one, and only one, live host. If you’ve ever wondered why Hyper-V Replica doesn’t have any built-in automatic failover capability, this is why. It’s simple enough for the Replica host to know that it can’t reach the source host anymore, but that’s not enough to be certain that it isn’t still up. If it were to simply start the replicas, it’s entirely possible that the source and the replica machines would all be running simultaneously. This is known as a split-brain situation. In some cases that might not be a big problem, but in others it could be catastrophic. One example would be a database system in which some clients can see the source virtual machine while others have switched over to using the replica. Updates between these two systems would be difficult, if not impossible, to reconcile.

How to Think of Quorum

Oftentimes, quorum is explained and understood as a continual agreement made between the majority of nodes to exclude any that are not responding, sort of the way humans determine quorum at meetings. While there is some truth to this in the more recent versions, it does not tell the entire tale. It is more correct to think of quorum always being calculated from the perspective of each node on its own. If any given node does not believe that quorum is satisfied, it will voluntarily remove itself from the cluster. In so doing, it will take some action on the resources that it is running so that they can be taken over by other nodes. In the case of Hyper-V, it will perform the configured Cluster-Controlled offline action specific to each virtual machine. If other nodes still have quorum, they’ll be able to take control of those virtual machines.

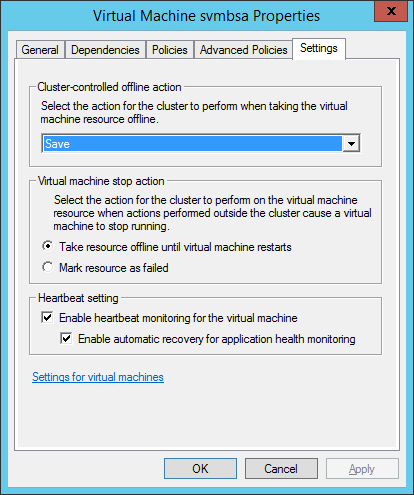

To configure the cluster-controlled offline action:

- Open Failover Cluster Manager, find the virtual machine to be configured, and highlight it.

- In the lower center pane, click the Resources tab. Double-click the item that appears under the Virtual Machine heading.

- When the dialog appears, switch to the Settings tab. Here, you can select the action you want to be taken when the node that the virtual machine is on removes itself from the cluster. The dialog as it appears on 2012 R2 is shown below:
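The same setting can also be inspected from PowerShell. What follows is a minimal sketch rather than a definitive procedure. It assumes the FailoverClusters module is available, that the virtual machine's cluster resource is named "Virtual Machine vm1" (a made-up name), and that the setting is exposed as the OfflineAction private property with the value mapping given in the comments. Verify that mapping against the dialog on your own version before changing anything.

```powershell
Import-Module FailoverClusters

# Hypothetical resource name; list your own with: Get-ClusterResource
$vmResource = Get-ClusterResource -Name 'Virtual Machine vm1'

# Read the current cluster-controlled offline action.
$vmResource | Get-ClusterParameter -Name OfflineAction

# Assumed value mapping: 0 = Turn Off, 1 = Save, 2 = Shut Down, 3 = Shut Down (forced).
# Set the action to Save.
$vmResource | Set-ClusterParameter -Name OfflineAction -Value 1
```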

As for quorum itself, there are multiple ways it can be configured. Each successive version of Windows/Hyper-V Server has introduced new options. To understand where we are, it helps to know where we have been. We’ll take a tour through the previous versions and end with the new features of 2012 R2.

Through 2008 R2

My personal experience starts with 2008 R2. I know that in very early versions, only disk witness options were available. By the time of 2008 R2, a number of new options were available. The complete list is:

- Disk Witness Only: This is the original version of quorum. One cluster disk is selected to serve as the witness. If any node cannot contact the witness, it assumes quorum has been lost and shuts down. The cluster will write some synchronizing information to the witness. It’s not usually more than a hundred megabytes or so, so it’s common to make this disk around 512MB as that is the smallest efficient disk size for NTFS. That format is required for the witness disk. Make sure you understand the requirements, as most deployments will use one.

- File Share Witness: This is very similar to the disk witness except that it works on a file share instead of a cluster disk.

- Node Majority: The node majority mode is fairly self-explanatory. If a node cannot see enough other nodes that, including itself, constitute a majority, it shuts itself down.

- Node Majority and Witness: This is just a combination of node majority and either of the two witness options. Node majority doesn’t work well when there is an even number of nodes, so the nodes also count the witness when determining if a majority is present.

Changes in 2012

Prior to 2012, quorum was static. Every voting element in the cluster has a vote. If the quorum mode is witness-only, then there is only one vote, and if it is lost, then the entire cluster and all nodes in it will stop. In node majority, each node gets a vote. So, for a four-node cluster in node majority plus disk witness, there are a total of five votes. As long as any given node can contact two other nodes or one node and the disk witness, it will remain online. The calculation for how many votes constitute a quorum is simple: more than half of the total, or 50% + 1 (rounded down to a whole vote when the total is odd). With five votes, that is three. Because of that +1 need, you always want your cluster to have an odd number of votes: a four-vote cluster also needs three, so it can afford to lose only one vote where a five-vote cluster can lose two.
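To make the vote arithmetic concrete, here is a minimal PowerShell sketch. The function name Get-QuorumThreshold is made up for illustration and is not part of the FailoverClusters module; it only applies the static "more than half" rule and knows nothing about dynamic quorum.

```powershell
# Hypothetical helper (not a FailoverClusters cmdlet): the smallest number of
# votes that is still a strict majority of the total.
function Get-QuorumThreshold {
    param([Parameter(Mandatory = $true)][int]$TotalVotes)
    [int][math]::Floor($TotalVotes / 2) + 1
}

# Compare four nodes without a witness (4 votes) to four nodes with one (5 votes).
foreach ($votes in 4, 5) {
    $needed = Get-QuorumThreshold -TotalVotes $votes
    '{0} votes: {1} needed, {2} can be lost' -f $votes, $needed, ($votes - $needed)
}
```

Both totals need three votes, which is why adding the witness buys one extra tolerated failure.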

New in 2012 is dynamic quorum. Now, the vote for each cluster node can be selectively removed without completely removing the node from the cluster. If a node is rebooted, for instance, all of its guests will undergo their configured shutdown process as usual and be taken over by other nodes. However, at the cluster’s discretion, the node’s vote might be rescinded and will no longer count toward quorum until it returns to the cluster. If the loss of that node makes the number of surviving votes even, the cluster may choose to dynamically remove a vote from another node.

The impact of dynamic quorum is interesting. If you run the wizard to automatically configure quorum for a two-node cluster in 2012, it will recommend that you choose node majority only, whereas 2008 R2 would have wanted node majority plus a witness. This is because if either node exits the cluster voluntarily, dynamic quorum will still understand that everything is OK. What wasn’t clear to me was exactly how this protected you from the split-brain problem. However, a node wouldn’t be able to take over virtual machines that the other was running because they would still be in use on that other node. I didn’t do a great deal of testing with this, but it seemed to do a good job of keeping guests running without problems when the hosts were isolated.

The other new feature that dynamic quorum brought to 2012 was the ability to directly manage which nodes were allowed to have a vote. While the cluster will dynamically remove votes from nodes that go offline, it will always want to assign them a vote while they are present. You can manually override this by preventing specific nodes from ever having a vote. This can be useful if you want to have fine control over how nodes will react in failure conditions.

Changes in 2012 R2

2012 R2 brought about almost as many improvements as the original introduction of dynamic quorum.

The witness disk is now assigned a dynamic vote as well. In 2012, only the nodes were dynamic. If a witness was present, it always had a vote. The big change with this is that it’s always recommended to have a witness regardless of the number of nodes in the cluster. The cluster can decide on the fly if that witness should have a vote or not.

Now that all entities have a dynamic vote, you can give the cluster hints on which nodes should have their vote taken away first rather than taking them away completely. Doing so allows for much more resiliency when a failure is less severe than the loss of an inter-site link. We’ll round out the post with a how-to.

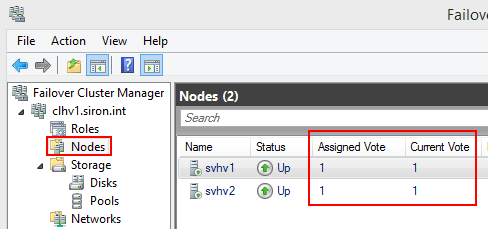

One sort-of nice thing about quorum in 2012 R2 is that you can see vote allocations right in Failover Cluster Manager:

You can see the quorum settings you chose for the nodes, either intentionally or by accepting defaults in the wizard (assigned vote) and what the cluster is doing to manage a node (current vote). What you don’t see is the vote status of witness disks or shares. Luckily, PowerShell can retrieve that for you:

(Get-Cluster).WitnessDynamicWeight

A returned 0 means the witness currently doesn’t have a vote; 1 means that it does.
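If you would rather see everything in one place from the console, a short sketch along these lines should work on a cluster node with the FailoverClusters module loaded. NodeWeight corresponds to the assigned vote and DynamicWeight to the current vote; WitnessDynamicWeight is only expected to be present on 2012 R2.

```powershell
Import-Module FailoverClusters

# 1 means dynamic quorum is enabled for the cluster, 0 means it is disabled.
(Get-Cluster).DynamicQuorum

# NodeWeight is the assigned vote; DynamicWeight is the vote the cluster
# is currently counting for that node.
Get-ClusterNode | Format-Table -AutoSize Name, State, NodeWeight, DynamicWeight

# 2012 R2: current vote of the witness (0 = no vote, 1 = vote).
(Get-Cluster).WitnessDynamicWeight
```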

Recovering from Failure

Sometimes, nodes fail. That’s one of the reasons you have a cluster in the first place. Quorum will do its job and shut down nodes that cannot maintain quorum. In the most generic sense, this will work as you expect. You’ve got three nodes in your one and only site and node 2 dies. Nodes 1 and 3 will pick up the pieces and proceed along. In 2008 R2, node 2 will still have its vote, so if node 1 or node 3 goes down, the cluster will go down with it. If you’re using 2012, you probably won’t have a disk witness, so the cluster may or may not remove node 2’s vote after a time. If it does, then it might be able to operate if node 1 or node 3 fails. It has its own calculations that it performs to decide all that, which I’m not privy to. If you’re using 2012 R2 and have a witness, then node 2’s vote will be removed after a time, meaning that the cluster will be able to partially survive the loss of a second node, provided that the failure doesn’t occur too quickly after the first failure. If the node can be recovered and comes back online, all the votes will return to their previous status.

If too many nodes fail and the entire cluster goes down, you’ll have a few more steps to take. First, you need enough nodes to maintain quorum. You can evict permanently failed nodes or fix them. Once fixed, you can use Failover Cluster Manager to start the cluster or the Start-Cluster PowerShell cmdlet.

That’s all pretty easy to understand and probably covers 90% of the clusters out there.

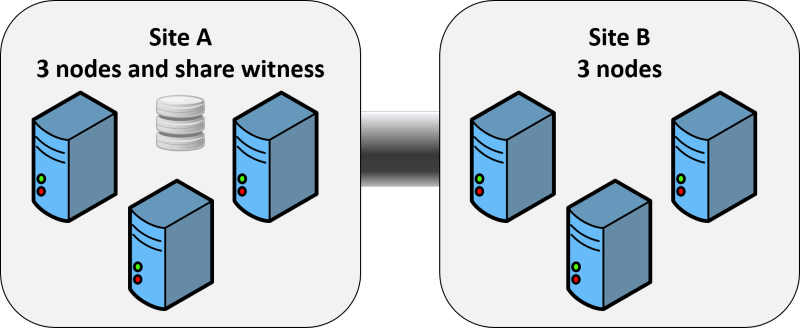

There are a lot of other possibilities, though. Consider the following build:

There’s a lot that goes into the design of a cluster like this, most of it far beyond what we want to talk about in this post. What we’re focusing on here is what happens when that inter-site link is dropped. There’s a witness in Site A, which gives that site 4 votes. Site B has only 3 votes, so all three of those hosts will gracefully shut down their guests and exit the cluster. However, you might have split the storage of the cluster so that this isn’t strictly necessary. You might have a number of virtual machines whose storage only lives in Site B, so there’s no harm running them in Site B even when the link is down. In the Microsoft documentation, Site B is considered a “partitioned” site (and if you look in the event logs for the cluster, you’ll find entries indicating that the networks are partitioned). You can manually get Site B back online while the link is down.
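The vote count for this build can be worked through in a few lines of PowerShell. This is only an illustration of the static arithmetic, using the numbers from the example (three nodes plus the witness in Site A, three nodes in Site B); it does not query a cluster and ignores any adjustments dynamic quorum might make.

```powershell
# Votes held by each side once the inter-site link drops.
$partitions = [ordered]@{ 'Site A' = 4; 'Site B' = 3 }

$totalVotes = ($partitions.Values | Measure-Object -Sum).Sum
$needed     = [int][math]::Floor($totalVotes / 2) + 1

foreach ($site in $partitions.Keys) {
    $state = if ($partitions[$site] -ge $needed) { 'keeps quorum' } else { 'loses quorum' }
    '{0}: {1} of {2} votes, {3} needed, {4}' -f $site, $partitions[$site], $totalVotes, $needed, $state
}
```

With seven votes in total, four are needed, so only the side holding the witness stays up.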

Note: When it comes to quorum, the PowerShell cmdlets for Failover Clustering are of questionable quality and the documentation is all over the place. You will see things here that are inconsistent with what you find in other locations. You may have experiences that are different from mine. I’ve written this piece from an attempt to reconcile what the documentation says against what I’ve actually seen happen.

Ordinarily, you could just go into Failover Cluster Manager and just start the nodes or start the cluster. That won’t work for Site B if it’s partitioned. What you can do is go onto a single node and run net start clussvc /fq. The /fq stands for “Fix Quorum”, which I don’t think explains at all what it does. It basically means “I know you won’t be able to establish proper quorum; start anyway”. Starting with 2012, you could use PowerShell: Start-ClusterNode -Name SiteB-Node1 -FixQuorum. Well, in 2012 R2, the same parameter is now -ForceQuorum, which makes a lot more sense.

But this is the point where the documentation just sort of abandons you. You can continue adding in nodes with /fq. Or, you can use /pq (prevent quorum). When I was first researching this subject (a while ago), the documentation made it sound like you needed to use /pq for each additional node you wanted to add in the same partitioned site so that they could see each other but wouldn’t damage quorum. On further research, that seems not to be the case. It seems that when the partitioned nodes are able to reconnect to the main cluster, you need to restart them with the /pq switch so that they don’t overwrite the quorum status for the non-partitioned nodes. Makes sense, right? Except that this document implies that you don’t need to do that. Notice the “Applies To”. Is it the case, then, that 2008 could handle this situation better than 2012? And what happens if all the nodes rejoin and the partitioned systems overwrite the quorum data for all the non-partitioned nodes anyway? Don’t they just sort of work it out? The 2008 document implies that they do.

Anyway, there seems to be a new feature in 2012 R2 that means you’ll never have to use /pq again. The real problem is that, during my testing, I never got to a place where it seemed necessary anyway, according to the documented usage. It made more sense to me to use it for nodes 2+ in the partitioned site while the site remained partitioned than anything else. But if you’re in doubt, you’re not exactly stuck. There seems to be a node property that tells you what to do:

(Get-ClusterNode -Name svhv2).NeedsPreventQuorum
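One way to act on that property is sketched below. Treat it as an assumption-laden example rather than documented procedure: it assumes a nonzero NeedsPreventQuorum means the node should be started with prevent quorum, that Start-ClusterNode on your version accepts -PreventQuorum as the counterpart of /pq, and that you run it from a node that is already part of the running cluster.

```powershell
Import-Module FailoverClusters

# 'svhv2' is the example node name used in this article.
$node = Get-ClusterNode -Name 'svhv2'

if ($node.NeedsPreventQuorum) {
    # Assumed PowerShell counterpart of: net start clussvc /pq
    Start-ClusterNode -Name $node.Name -PreventQuorum
}
else {
    # No special handling requested; start the node normally.
    Start-ClusterNode -Name $node.Name
}
```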

Configuring Quorum

There are PowerShell cmdlets available for basic quorum configuration in 2012+, but I’m just going to link to them (2012 here, 2012 R2 here). It’s better and easier to use Failover Cluster Manager. Right-click the cluster’s name at the top of the tree in the left pane, hover over More Actions, and click Configure Cluster Quorum settings to get started. In 2012 and beyond, you’ll get the option to let the cluster do it all for you (Default). In 2008 R2, you have to pick from the available options, although it will recommend one for you. I’d always recommend that you let the cluster figure out what to do unless you have a specific, identified need to deviate. There are a couple of settings that bear further scrutiny.

Disk Witness vs. Share Witness

If you’re using an even-node cluster in 2012 or earlier or any number of nodes in 2012 R2, using a witness of some sort is preferred.

- Use the disk witness when:

  - You have an available iSCSI or Fibre Channel system that all nodes can reach and have the ability to create the appropriate LUN (optimal size of 512MB formatted NTFS; larger is OK but wasteful)

  - All nodes are in the same site

- Use the share witness when:

  - You won’t be using FC or iSCSI, but will be hosting virtual machines on SMB 3 shares

  - Nodes are geographically dispersed and will be using disjointed storage systems

Overall, disk witnesses tend to be more reliable because they aren’t used for anything else. They also tend to be on the same storage units that host virtual machines, so the loss of the LUN likely means loss of other critical storage as well — having the cluster up doesn’t mean much if all the VMs’ storage is unavailable.

The benefit of the share witness is that you don’t require that kind of ubiquitously connected storage. In fact, it’s highly recommended that a share witness exist on something other than a resource related to the cluster. If you create LUNs that only exist in one site or another so that a geographically-dispersed cluster continues to work when inter-site links fail, remote sites can still access a file share that only exists in one site while the link is up.
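For anyone who does want to set the witness from PowerShell instead of the wizard, a minimal sketch follows. The disk resource name and the share path are placeholders, and the two Set-ClusterQuorum lines are alternatives: run only the one that matches the witness type you chose.

```powershell
Import-Module FailoverClusters

# Show the current quorum type and witness resource, if any.
Get-ClusterQuorum

# Alternative 1: node majority plus a disk witness.
# 'Cluster Disk 1' is a placeholder for your witness disk resource name.
Set-ClusterQuorum -NodeAndDiskMajority 'Cluster Disk 1'

# Alternative 2: node majority plus a file share witness.
# '\\fs1\witness' is a placeholder for a share outside the cluster.
Set-ClusterQuorum -NodeAndFileShareMajority '\\fs1\witness'
```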

Setting Votes

The purpose of manually managing votes is so that you can guide how loss of quorum will affect your hosts. This is most commonly done when you have a geographically dispersed cluster as shown in the image above. You are essentially choosing which site will always stay online in the event that the inter-site link fails. This is often done most effectively by judicious placement of a witness. However, it’s also helpful as a plan against multiple failures. For instance, if Site A above were to lose one of its hosts prior to the link going down, Dynamic Quorum would be choosing who to take a vote away from. By removing one or more votes from Site B in advance, you are maintaining control.

When you choose the Advanced option in 2012+, you can pick which nodes get a vote by un/checking them. You cannot decide if the witness gets a vote; by virtue of choosing a witness, it has a vote.

You can take a node’s vote away at any time like this:

(Get-ClusterNode -Name svhv2).NodeWeight = 0

Just make it a 1 to give its vote back.

In 2012 R2, rather than taking a vote away entirely, you can give the cluster service a hint as to which node you want to be first to lose its vote:

(Get-Cluster).LowerQuorumPriorityNodeID = (Get-ClusterNode -Name "svhv2").Id


6 thoughts on "Quorum in Microsoft Failover Clusters"

Very informative article. One thing to add:

FQ was changed from FixQuorum to ForceQuorum in 2008 edition itself. FixQuorum was just in 2003 platforms.
