Save to My DOJO

Windows Server Failover Clusters are becoming commonplace through the industry as the high-availability solution for virtual machines (VMs) and other enterprise applications. I’ve been writing about clustering since 2007 when I joined the engineering team at Microsoft (here is one of the most referenced online articles about quorum from 2011). Even today, one of the concepts that many users continue to misunderstand is a quorum. Most admins know that is has something to do with keeping a majority of servers running, but this blog post will give more insight into why it is important to understand how it works. We will focus on the newest type of quorum configuration known as a cloud witness which was introduced in Windows Server 2016. This solution is designed to support both on-premises clusters and multi-site clusters, along with the guest clusters which can run entirely in the Microsoft Azure public cloud.

Failover Clustering Quorum Fundamentals

NOTE: This post covers quorum for Windows Server 2016 and 2019. You can also info related to quorum on an older version of Windows Server.

Outside of IT, the term “quorum” is defined in business practices as “the number of members of a group or organization required to be present to transact business legally, usually a majority” (Source: Dictionary.com). For Windows Server Failover Clustering, it means that there must be a majority of “cluster voters” online and in communication with each other for the cluster to operate. A cluster voter is either a cluster node or a disk which contains a copy of the cluster database.

The cluster database is a file which defines registry settings that identify the state of every element within the cluster, including all nodes, storage, networks, virtual machines (VMs) and applications. It also keeps track of which node should the sole owner running each application and which node can write to each disk within the cluster’s shared storage. This is so important because it prevents a “split-brain” scenario which can cause corruption in a cluster’s database. A split-brain happens when there is a network partition between two sets of clusters nodes, and they both try to run the same application and write to the same disk in an uncoordinated fashion, which can lead to disk corruption. By designating one of these sets of cluster nodes as the authoritative servers, and forcing the secondary set to remain passive, it ensures that exactly one node runs each application and writes to each disk. The determination of which partition of clusters nodes stays online is based on which side of the partition has a majority of cluster voters, or which side has a quorum.

For this reason, you should always have an odd number of votes across your cluster, meaning 51% or more of voters. Here is a breakdown of the behavior based on the number of voting nodes or disks:

- 2 Votes: This configuration is never recommended because both voters must be active for the cluster to stay online. If you lose communicate between voters, the cluster stays passive and will not run any workloads until both voters (a majority) are operational and in communication with each other.

- 3 Votes: This works fine because one voter can be lost, and the cluster will remain operational, provided that two of the three voters are healthy.

- 4 Votes: This can only sustain the loss of one voter and three voters must be active. This is supported but requires extra hardware yet provides no additional availability benefit and a three-vote cluster.

- 5, 7, 9 … 65 Voters: An odd number of voters are recommended to maximize availability by allowing you to lose half (rounded down) of your voters. For example, in a nine-node cluster, you can lose four voters and it will continue to operate as five voters are active.

- 6, 8, 10 … 64 Voters: This is supported, yet you can only lose half minus one voter, so you are not maximizing your availability. In a ten-node cluster you can only four voters, so five must remain in communication with each other. This provides the same level of availability as the previous example with nine, yet requires an additional server.

Using a Disk Witness for a Quorum Vote

Based on Microsoft’s telemetry data, a majority of failover clusters around the world are deployed with two nodes, to minimize the hardware costs. Although these two nodes only provide two votes, a third vote is provided by a shared disk, known as a “disk witness”. This disk can be any dedicated drive on a shared storage configuration that is supported by the cluster and passes the Validate a Cluster tests. This disk will also contain a copy of the cluster’s database, and just like every other clustered disk, exactly one node will own access to it. It does so by creating an open file handle on that ClusDB file. In the event where there is a network partition between the two servers, then the partition that owns the disk witness will get the extra vote and run all workloads (since it has two of three votes for quorum), while the partition with a single vote will not run anything until it can communicate with the other nodes. This configuration has been supported for several releases, however, there is still a hardware cost to providing a shared storage infrastructure, which is why a cloud witness was introduced in Windows Server 2016.

Cloud Witness for a Failover Cluster

A cloud witness is designed to provide a vote to a Failover Cluster without requiring any physical hardware. It is a basically a disk running in Microsoft Azure which contains a copy of the ClusDB and is accessible by all cluster nodes. It uses Microsoft Azure Blob Storage, and a single Azure Storage Account can be used for multiple clusters, although each cluster requires it owns blob file. The cluster database file itself is very small, which means that the cost to operate this cloud-based storage is almost negligible. The configuration is fairly easy and well-documented by Microsoft in its guide to Deploy a Cloud Witness for a Failover Cluster.

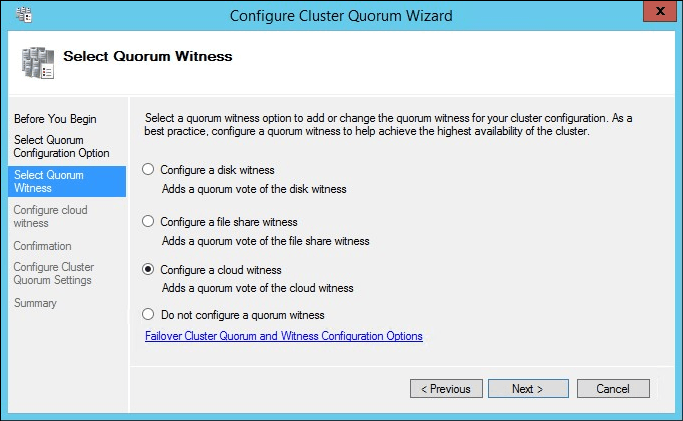

You will notice that the cloud witness is fully integrated within Failover Cluster Manager’s utility, Configure Cluster Quorum Witness, where you can Configure a cloud witness.

Selecting a Cloud Witness to use in the Configure Cluster Quorum Wizard

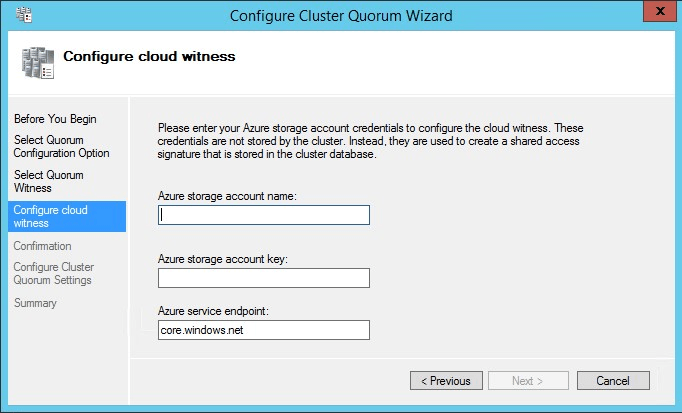

Next, you enter the Azure storage account name, key, and service endpoint.

Entering Cloud Witness details in Configure Cluster Quorum Wizard

Now you have added an extra vote to your failover cluster with much less effort and cost than creating and managing on-premises shared storage.

Failover Clustering Cloud Witness Scenarios

To conclude this blog post we’ll summarize the ideal scenarios for using the Cloud Witness:

- On-premises clusters with no shared storage – For any even-node clusters with no extra shared storage, then consider using a cloud witness as an odd vote to help you determine quorum. This configuration also works well with SQL Always-On clusters and Scale-Out File Server clusters which may have no shared storage.

- Multi-site clusters – If you have a multi-site cluster for disaster recovery, you will usually have two or more nodes at each site. If these balanced sites lose connectivity with each other, you still need a cluster voter to determine which side has quorum. By placing this arbitrating vote in a third site (a cloud witness in Microsoft Azure), it can serve as a tie-breaker to determine the authoritative cluster site.

- Azure Guest Clusters – Now that you can deploy a failover cluster entirely within Microsoft Azure using nested virtualization (also known as a “guest cluster”), you can utilize the cloud witness as an additional cluster vote. This provides you with an end-to-end high-availability solution in the cloud.

The cloud witness is a great solution provided by Microsoft to increase availability in Failover Clusters while reducing the cost to customers. It is now easy to operate a two-node cluster without having to pay for a third host or shared storage disk, whose only role is to provide a vote. Consider using the cloud witness for your cluster deployments and look for Microsoft to continue to integrate its on-premises Windows Server solutions with Microsoft Azure as the industry’s leading hybrid cloud provider.

Not a DOJO Member yet?

Join thousands of other IT pros and receive a weekly roundup email with the latest content & updates!