In this article, we’ll look at what vSphere Flash Read Cache does, why it was deprecated, and what options remain to accelerate workloads without it in vSphere 7.0 and above.

Host-side caching mechanisms such as vSphere Flash Read Cache accelerate workloads by using capacity on a faster medium installed locally in the server, such as SSD, NVMe or memory. vSphere Flash Read Cache, also known as vFRC or vFlash, is a now-retired feature released back in vSphere 5.5 that let you assign capacity from a caching device to accelerate the read operations of your virtual machines.

“vFRC enabled VMs are accelerated with every cache hit, relieving the backend storage from a number of IOs.”
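To make the idea of a cache hit concrete, here is a minimal sketch of a read-through cache in Python. It is a toy model and not how vFRC is implemented: the `ReadCache` class, the least-recently-used eviction policy and the block numbers are all assumptions made for illustration.

```python
from collections import OrderedDict


class ReadCache:
    """Toy read-through LRU cache sitting in front of a slow backend."""

    def __init__(self, capacity_blocks):
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()  # block id -> data, oldest first
        self.hits = 0
        self.backend_reads = 0

    def read(self, block_id):
        if block_id in self.blocks:
            # Cache hit: served locally, the backend is not touched.
            self.blocks.move_to_end(block_id)
            self.hits += 1
            return self.blocks[block_id]
        # Cache miss: fetch from the backend and keep a copy.
        self.backend_reads += 1
        data = f"data-{block_id}"  # stand-in for a real backend read
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)  # evict least recently used
        return data


cache = ReadCache(capacity_blocks=3)
for block in [1, 2, 3, 1, 2, 1, 4, 1]:
    cache.read(block)

print(f"hits={cache.hits}, backend reads={cache.backend_reads}")
```

In this made-up access pattern, half of the eight reads never reach the backend, which is the relief the quote above refers to.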

Local caching gives an extra performance boost to VMs stored on slower media without the need to replace your storage systems. Several companies developed such caching mechanisms. PernixData (later acquired by Nutanix) was one example back in the day, with a product that took some of the host’s RAM to create a caching device using a VIB installed on the host.

Why was vSphere Flash Read Cache deprecated?

Unfortunately, vSphere Flash Read Cache was deprecated in vSphere 7.0, due in part to the lack of customer engagement with the feature as well as the shift to full flash storage these last few years.

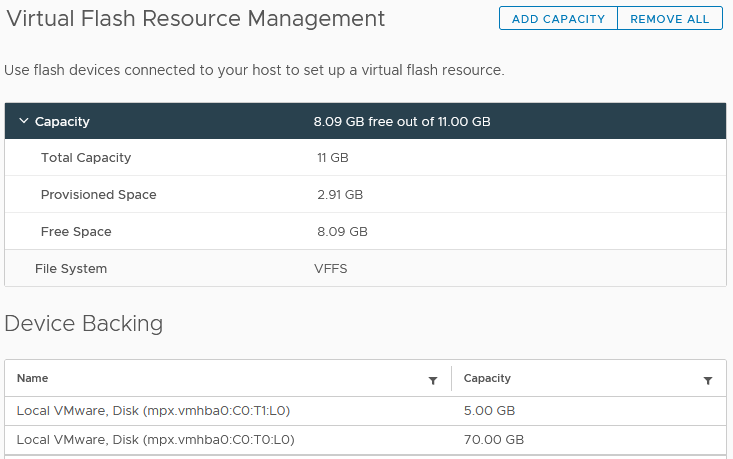

Note that you will still find the Virtual Flash Resource Management pane in the vSphere 7.0 web client. However, it now only serves the following purposes:

- Host swap caching: Storing VM swap files on flash devices to mitigate the impact of memory reclamation during resource contention.

- I/O Caching filters: Third-party software vendors can leverage the vSphere APIs for I/O Filtering (VAIO) to achieve VM disk caching on flash devices.

- VFFS, the file system used to format flash devices, is also used by default in vSphere 7’s new partitioning system to store OS data when ESXi is installed on a flash device. This is the reason why you might see your boot disk in there.

“Virtual Flash devices are now dedicated to Host swap caching and VM I/O Filtering.”

Some will say that host-side caching is history, and most big companies use the same lines these days: “SSD is getting cheaper and cheaper”, “Flash devices are a commodity”. Sure, flash is cheaper than it was 10 years ago, and we all know that full flash storage will leave any spindle-backed storage backend in the dust.

However, many small and medium-sized companies still have neither the budget nor the need for a full flash array. The same goes if you have petabytes of data lying around: chances are a move to full SSD will be far too big an investment.

Now you may be wondering: “Wait a minute, I was actually using this feature, does that mean I can’t upgrade to vSphere 7.0?!”

Well, you can upgrade. However, you will have to find another solution, as the vSphere Flash Read Cache actions have been removed from the vSphere client.

What are alternatives to vSphere Flash Read Cache?

Unfortunately, there is no single drop-in replacement for vFlash, as host-based caching isn’t as trendy as it used to be. Note that the following options are just that: options. The list isn’t an exhaustive representation of all that’s out there. Feel free to leave a comment to share other solutions you may know that apply to this context.

Now, when reading some of these, you might think “Hold on, that’s cheating!”. Well, the idea isn’t really to give one-to-one alternatives to vSphere Flash Read Cache, as the list would be very short. We try to approach the root issue (application performance) from different angles and offer different ways to tackle the problem. You may find that one of these options gives you more flexibility or opens the door to cost savings, or you may realize that it’s time for a new storage system.

It is also worth noting that you shouldn’t implement any of the following solutions unless you have positively identified your disk pool as the limiting factor in your infrastructure. First, make sure that you don’t have a bottleneck somewhere else (SAN, HBA, RAID caching…). esxtop can help here through its DAVG (device latency), KAVG (VMkernel latency) and GAVG (latency as seen by the guest) metrics.
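As a rough illustration of how those three metrics relate, the sketch below sorts a latency sample into "kernel side", "device side" or "healthy". GAVG is approximately DAVG plus KAVG. The `locate_latency` function and its default limits (25 ms for DAVG, 2 ms for KAVG) are assumptions based on commonly quoted rules of thumb, not official VMware thresholds, so tune them to your own environment.

```python
def locate_latency(davg_ms, kavg_ms, davg_limit=25.0, kavg_limit=2.0):
    """Return (gavg_ms, hint) for one esxtop latency sample.

    DAVG is device latency (array, fabric, HBA), KAVG is time spent in
    the VMkernel, and GAVG is what the guest sees (about DAVG + KAVG).
    The limits are rule-of-thumb assumptions, not official thresholds.
    """
    gavg_ms = davg_ms + kavg_ms
    if kavg_ms > kavg_limit:
        hint = "kernel side: check queue depths before adding a cache"
    elif davg_ms > davg_limit:
        hint = "device side: the array, fabric or HBA is slow"
    else:
        hint = "healthy: storage latency is probably not the bottleneck"
    return gavg_ms, hint


# Made-up samples, values in milliseconds.
for davg, kavg in [(4.0, 0.1), (38.0, 0.3), (6.0, 5.5)]:
    gavg, hint = locate_latency(davg, kavg)
    print(f"DAVG={davg} KAVG={kavg} GAVG={gavg:.1f} -> {hint}")
```

Note that a high DAVG alone does not tell you whether the disks, the fabric or the HBA is at fault. It only tells you the delay sits outside the hypervisor.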

Third-party software solutions (VAIO)

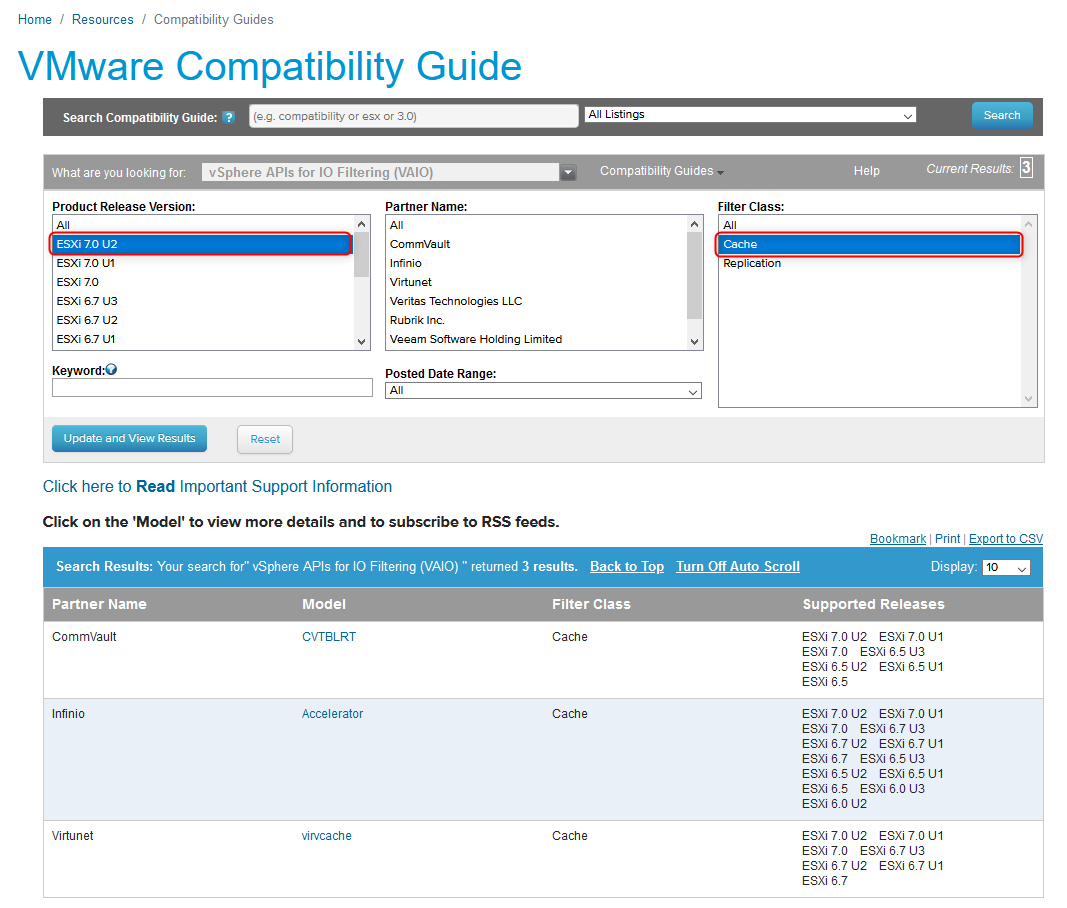

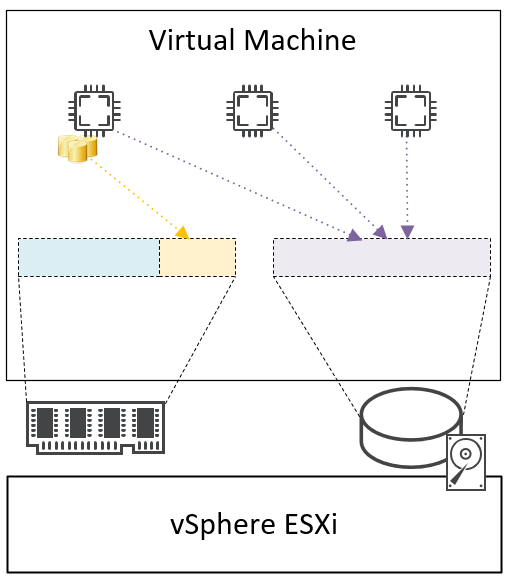

We briefly mentioned the vSphere APIs for I/O Filtering (VAIO) in a previous section. VAIO was introduced back in vSphere 6.0 Update 1. It is an API that third-party vendor software can leverage to manipulate (filter) the I/O path of a virtual machine for caching and/or replication purposes.

After installing the vSphere and vCenter components provided by the vendor, a VASA provider is created and the filter can be applied in the I/O path of a VM’s virtual disk using VM storage policies. This means it works regardless of the backend storage type, be it VMFS, NFS, vSAN or vVols.

“While processing the virtual machine read I/Os, the filter creates a virtual machine cache and places it on the VFFS volume.”

Anyway, enough with the behind-the-scenes stuff. You may be wondering how to actually use it. Well, you will have to turn to a third-party vendor that offers a solution built on the API.

You can find the list of compatible products in the VMware HCL in the “vSphere APIs for I/O Filtering (VAIO)” section. Select your version of vSphere, click on “Cache” and hit “Update the results”.

| Pros | Cons |
| --- | --- |
| Applies with any backend storage type | Third-party software to purchase and install |
| Flexible per-VM configuration | Local SSD required on each host |
| Fairly low-cost to implement | Unknown lifespan (vendors might drop it) |

“Compatible third-party products are listed in the VMware HCL for each vSphere version.”

As you can see, only 3 products are currently certified for vSphere 7.0 U2. The list grows if you switch to an older version of vSphere, which suggests that the golden years of host-based caching are probably behind us.

However, it is still a relevant and valuable piece of infrastructure for those that don’t want to or can’t yet afford the more expensive alternative.

Guest-based in-memory caching

One of the upsides of host-based caching is that it is managed at the hypervisor level and gives the vSphere administrator visibility on it. However, you can also achieve performance gains by leveraging in-memory caching in your guest operating system.

“Databases can leverage in-memory tables and RAM disks to store latency-sensitive data in RAM.”

Now this will very much depend on the application and which OS you are running. In-memory tables for databases are probably the most common use case. If you have enough memory on the host, you can store part of the database in memory and achieve great performance.
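As a small illustration of the principle, the sketch below copies an on-disk SQLite database into RAM using Python's standard `sqlite3` module, so that later reads are served from memory. It is only a toy example: the `orders` table and its contents are made up, and the in-memory features of production database engines work differently and have their own configuration.

```python
import os
import sqlite3
import tempfile

# Build a small on-disk database to stand in for the slow datastore.
path = os.path.join(tempfile.mkdtemp(), "orders.db")
disk = sqlite3.connect(path)
disk.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL)")
disk.executemany(
    "INSERT INTO orders (total) VALUES (?)",
    [(float(i),) for i in range(1000)],
)
disk.commit()

# Copy the whole database into RAM; later reads never touch the disk.
memory = sqlite3.connect(":memory:")
disk.backup(memory)
disk.close()

count, total = memory.execute(
    "SELECT COUNT(*), SUM(total) FROM orders"
).fetchone()
print(count, total)
```

The trade-off is the one listed in the table below: the whole working set has to fit in the VM's memory, and anything written only to the in-memory copy is lost on restart unless the application persists it.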

| Pros | Cons |
| --- | --- |
| Great app performance | Significant amount of memory required |
| No exotic infrastructure configuration | Dependent on the application and OS |
| No extra hardware or software required | Applies in few cases |

vSAN

I am aware that bringing up vSAN as an alternative is a bit cheeky, as it is a completely different storage system compared to traditional storage arrays, but bear with me. What makes it fit into this post is the fact that vSAN is natively built on a caching mechanism that offloads to a capacity tier. This makes it a great option, as all the workloads stored on the vSAN datastore will benefit from SSD acceleration.

While most vSAN implementations nowadays are full flash, it is possible to run it in hybrid mode with a spindle-backed capacity tier. This is usually cheaper and offers greater storage capacity. Even in hybrid mode, you will most likely see a significant improvement in I/O per second and maximum latency.

“vSAN can run in hybrid or full-flash modes.”
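To get a feel for why a flash cache tier in front of spinning disks helps, average read latency can be approximated as a weighted mix of the two tiers. The sketch below is back-of-the-envelope arithmetic only: the `effective_read_latency_ms` helper and the device latencies (0.2 ms for flash, 8 ms for spindles) are hypothetical figures, not vSAN measurements.

```python
def effective_read_latency_ms(hit_ratio, cache_ms, capacity_ms):
    """Average read latency for a given cache hit ratio (0.0 to 1.0)."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1.0 - hit_ratio) * capacity_ms


# Hypothetical device latencies: 0.2 ms for flash, 8 ms for spinning disks.
for ratio in (0.0, 0.5, 0.9):
    latency = effective_read_latency_ms(ratio, cache_ms=0.2, capacity_ms=8.0)
    print(f"hit ratio {ratio:.0%}: {latency:.2f} ms")
```

With these assumed numbers, a 90% hit ratio brings the average read from 8 ms down to just under 1 ms. Real-world gains depend on how well the working set fits in the cache tier.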

Note that you can now connect a traditional cluster to a remote vSAN datastore with no extra vSAN license required, thanks to the new vSAN HCI Mesh compute cluster feature introduced in vSphere 7.0 Update 2. This means an existing vSphere cluster can connect to the vSAN datastore of another cluster, which facilitates transitions and migrations to vSAN and avoids “big-bang” changes.

Alternatively, if you were using local flash devices for vFRC, you might be able to re-use them as a cache tier, add hard drives for capacity and convert your hosts into a vSAN cluster. However, you will need to check that all the components in your servers are supported in the vSAN HCL.

If you want to better understand how vSAN works and its different components, check out our blog dedicated to it.

| Pros | Cons |
| --- | --- |
| Great performance overall | Significant investment |
| All workloads benefit from caching | Only accelerates workloads stored on vSAN |
| Can be easily scaled up/out | Doesn’t integrate with existing infrastructure |

Wrap up

Host-side caching is a great and affordable way to improve virtual machine performance, and it fits in most environments with few infrastructure changes. However, the moving train of evolving technology waits for no one, and vSphere Flash Read Cache didn’t make the cut this time, with the rise of hyperconvergence as well as the drop in prices on the SSD market.

vSphere Flash Read Cache had been leading the charge on the vSphere front, and its deprecation is bad news for those who were relying on it in their production environments. While this is a bummer indeed, don’t get stuck on it: there are still plenty of options out there to accelerate your workloads. It might also be a good time to rethink your infrastructure needs and consider moving to a more converged and modern approach.
