
Fault Tolerance is a seldom-used feature even though it has been available since the days of VMware Infrastructure, the old name for what is known today as VMware vSphere: the software suite comprising vCenter Server and the ESXi hypervisor along with the various APIs, tools, and clients that complement it. If you’ve read my post on High Availability, you probably know that Fault Tolerance is a feature intrinsic to an HA-enabled cluster.

In today’s post, I’ll go over the new features introduced in vSphere 6.0 and how to enable FT on your VMs.

An Overview

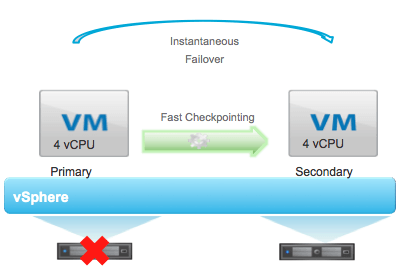

VMware Fault Tolerance (FT) is a process where a virtual machine, called the primary VM, replicates changes to a secondary VM created on an ESXi host other than the one hosting the primary VM. Think mirroring. In the event that the primary VM’s host fails, the secondary VM takes over immediately with zero interruption and downtime. Similarly, if the secondary VM’s ESXi host goes offline, a new secondary VM is created on yet another host, assuming one is available. For this reason, a 3-host cluster is the recommended minimum even though FT works just as well on clusters with just 2 nodes.

Figure 1 – FT architecture (Source: www.vmware.com)

The one benefit that immediately stands out is that Fault Tolerance raises the High Availability bar one notch higher. FT bolsters business continuity by guarding against data loss and application downtime, something that an HA cluster on its own cannot completely deliver. FT is also useful in scenarios where clustering solutions are impractical or too costly to implement.

Occasionally, you may come across the term On-Demand Fault Tolerance. Since FT is a resource-intensive process, you may decide to enable and disable it on a schedule, scripting being just one way of doing it. On-Demand FT protects business-critical VMs and the corresponding business processes, such as payroll applications and payroll runs, against data loss and service interruption. That said, keep in mind that FT protects only at the host level. It does not provide any application-level protection, meaning that manual intervention is still required if a VM experiences OS and/or application failures.
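To make the on-demand idea a little more concrete, here is a minimal Python sketch of the decision logic a scheduled job might use. It only decides whether FT should be on at a given moment; the actual call that turns FT on or off (via whichever vSphere scripting interface you use) is deliberately left out. The payroll window, the weekday set, and the function names are all assumptions made for illustration.

```python
from datetime import datetime, time

# Hypothetical protection window: payroll runs on weekdays between 18:00 and 23:00.
# Both the hours and the weekday set are illustrative assumptions.
FT_WINDOW_START = time(18, 0)
FT_WINDOW_END = time(23, 0)
FT_WEEKDAYS = {0, 1, 2, 3, 4}  # Monday=0 ... Friday=4


def ft_should_be_on(now: datetime) -> bool:
    """Return True if 'now' falls inside the scheduled FT window."""
    in_days = now.weekday() in FT_WEEKDAYS
    in_hours = FT_WINDOW_START <= now.time() < FT_WINDOW_END
    return in_days and in_hours


def plan_action(now: datetime, ft_currently_on: bool) -> str:
    """Decide what the scheduled job should do: 'turn_on', 'turn_off' or 'none'."""
    wanted = ft_should_be_on(now)
    if wanted and not ft_currently_on:
        return "turn_on"
    if not wanted and ft_currently_on:
        return "turn_off"
    return "none"
```

A job running every few minutes could call `plan_action(datetime.now(), current_state)` and act only when the result is not `"none"`, which keeps repeated runs harmless.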

New Features and Support

In the opening paragraph, I used the word seldom to indicate that FT has been generally overlooked, at least until vSphere 6 came along. The reason is FT’s lack of support for symmetric multiprocessing and for VMs sporting more than 1GB of RAM. In fact, prior to vSphere 6.0, FT could only be enabled on VMs configured with a maximum of 1 vCPU and 1GB of RAM. Needless to say, this limitation turned out to be a show stopper since current operating systems and applications require significantly more compute resources.

NOTE: FT is not available with vSphere Essentials and Essentials Plus licensed deployments.

The improvements that vSphere 6.0 brings to FT are as follows.

- Support for symmetric multiprocessor VMs

  - Max. 2 vCPUs – with vSphere Standard and Enterprise licenses

  - Max. 4 vCPUs – with a vSphere Enterprise Plus license

- Support for all types of VM disk provisioning

  - Thick Provision Lazy Zeroed

  - Thick Provision Eager Zeroed

  - Thin Provision

- FT VMs can now be backed up using VADP disk-only snapshots

- Support for VMs with up to 64GB of RAM and vmdk sizes of up to 2TB
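The limits in this list lend themselves to a quick pre-flight check. The sketch below is plain Python with no vSphere calls; `VmSpec`, the license keys, and the function name are hypothetical, and the numbers are simply the ones quoted above. Any license not in the table (Essentials, for example) is treated as not including FT.

```python
from dataclasses import dataclass

# Limits quoted in the list above for vSphere 6.0.
MAX_VCPUS_BY_LICENSE = {
    "standard": 2,
    "enterprise": 2,
    "enterprise_plus": 4,
}
MAX_RAM_GB = 64
MAX_VMDK_TB = 2


@dataclass
class VmSpec:
    """Hypothetical, simplified description of a VM."""
    vcpus: int
    ram_gb: int
    vmdk_sizes_tb: list


def ft_limit_violations(vm: VmSpec, license_key: str) -> list:
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    max_vcpus = MAX_VCPUS_BY_LICENSE.get(license_key)
    if max_vcpus is None:
        problems.append(f"license '{license_key}' does not include FT")
    elif vm.vcpus > max_vcpus:
        problems.append(f"{vm.vcpus} vCPUs exceeds the {max_vcpus} vCPU limit")
    if vm.ram_gb > MAX_RAM_GB:
        problems.append(f"{vm.ram_gb}GB RAM exceeds the {MAX_RAM_GB}GB limit")
    for size in vm.vmdk_sizes_tb:
        if size > MAX_VMDK_TB:
            problems.append(f"{size}TB vmdk exceeds the {MAX_VMDK_TB}TB limit")
    return problems
```

For instance, a 4 vCPU VM passes with `"enterprise_plus"` but yields one violation with `"standard"`.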

And here’s a list of VM and vCenter features that remain unsupported:

- CD-ROM or floppy virtual devices backed by a physical or remote device.

- USB, Sound devices and 3D enabled Video devices

- Hot-plugging devices, I/O filters, Serial or parallel ports and NIC pass-through

- N_Port ID Virtualization (NPIV)

- Virtual Machine Communication Interface (VMCI)

- Virtual EFI (Extensible Firmware Interface) firmware

- Physical Raw Disk mappings (RDM)

- VM snapshots (remove them before enabling FT on a VM)

- Linked Clones

- Storage vMotion (moving to an alternate datastore)

- Storage-based policy management

- Virtual SAN

- Virtual Volume Datastores

- VM Component Protection (see my HA post)
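If you keep an inventory of what each VM uses, the list above can be turned into a simple lookup. The following is a rough sketch only: the feature tags are made-up labels for the VM-level items in the list, not identifiers from any VMware API, and you would need to populate them from your own inventory data.

```python
# Hypothetical tags for VM-level items from the unsupported list above.
UNSUPPORTED_WITH_FT = {
    "physical_cdrom",
    "physical_floppy",
    "usb",
    "sound",
    "3d_video",
    "serial_port",
    "parallel_port",
    "nic_passthrough",
    "npiv",
    "vmci",
    "efi_firmware",
    "physical_rdm",
    "snapshots",
    "linked_clone",
}


def ft_blockers(vm_features: set) -> list:
    """Return, in sorted order, the features on this VM that FT does not support."""
    return sorted(vm_features & UNSUPPORTED_WITH_FT)
```

An empty result only means none of the tagged items were found; the validation checks vCenter runs when you turn FT on remain the final word.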

Legacy FT

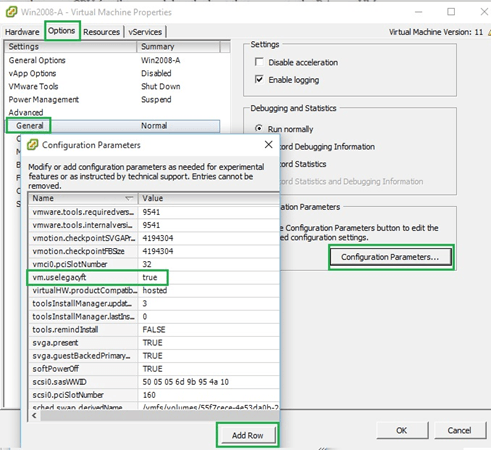

Before I move on, know that VMware uses the term Legacy FT to refer to FT implementations predating vSphere 6.0. If required, you can still enable Legacy FT by adding vm.uselegacyft to the list of advanced configuration parameters.

Figure 2 – Enabling Legacy FT

The Basic Requirements

At the time of writing, the most stringent requirement, in my opinion, is the dedicated 10Gbit network. This can be a hard sell to SMBs since, due to cost, it is something you usually find only in enterprise settings. Other than that, just ensure that the CPUs in your clusters support Hardware MMU virtualization and are vMotion ready. For Intel CPUs, anything from Sandy Bridge onwards is supported. For AMD, Bulldozer is the minimum supported micro-architecture.

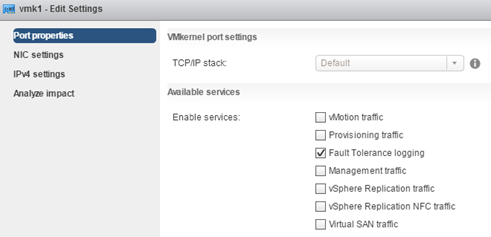

On the networking side, you need to configure at least one VMkernel adapter for Fault Tolerance Logging. At a minimum, each host should have a Gbit network card set aside for vMotion and another one for FT logging.

Figure 3 – Setting up a vmkernel for FT logging

Note: For DRS to work alongside FT, you must enable EVC. Apart from ensuring a consistent CPU profile, this allows DRS to select the optimal placement of FT-protected VMs.

Turning Fault Tolerance On

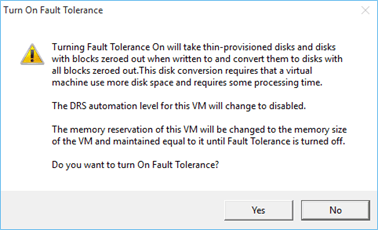

There are a few other requirements that must be met before you can enable FT on a VM. You need to ensure that the VM resides on shared storage (iSCSI, NFS, etc.) and that it has no unsupported devices; see the list above. Also, note that the disk provisioning type changes to Thick Provision Eager Zeroed after FT is turned on for a VM with thin disks. The conversion process may take a while for large vmdks.

FT can be turned on irrespective of the VM’s power state. However, a number of validation checks are carried out that might differ depending on whether the VM is on or not.

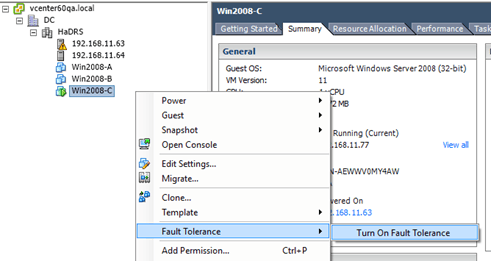

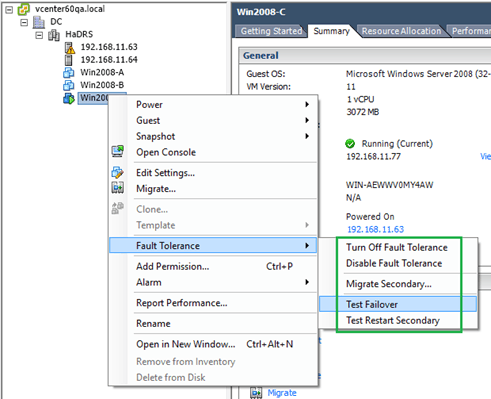

To turn on FT, just right-click on the VM and select Turn On Fault Tolerance. Use the same procedure to turn off FT.

Figure 4 – Turning on FT on a virtual machine

Figure 5 – Changes effected on a VM once FT is turned on

The next video shows how to turn FT on and off. I’m using a 2-node cluster sporting nested hypervisors so performance is what it is.

Figure 6 – vmdk scrubbing – changing provisioning type to thick eager zeroed

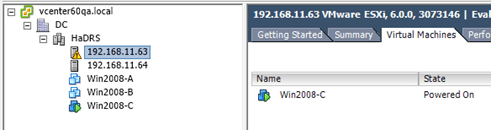

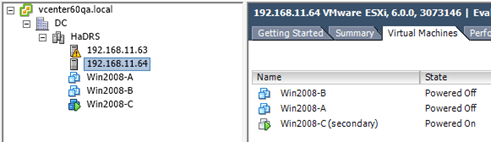

Figure 7 – Primary VM’s host

Figure 8 – Secondary VM’s host

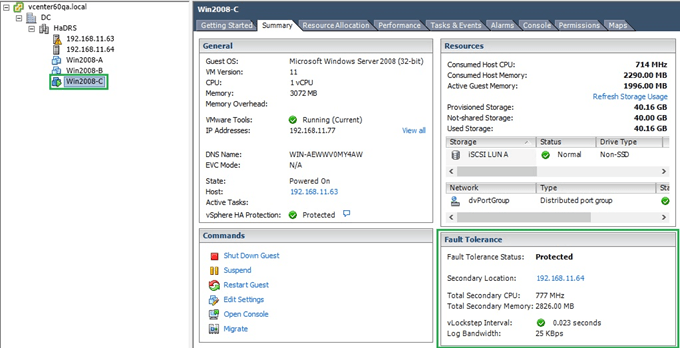

Next, select the VM for which you turned on FT. Under the Summary tab, you should now find a Fault Tolerance details window giving you a number of FT metrics for the selected VM (Fig. 9). Of particular interest is the vLockstep interval, which is a measure of how far the secondary VM lags behind the primary one in terms of changes that need to be replicated. Typically, the value should be less than 500ms. In the example shown below, the interval is 0.023s, or 23ms, which is within the acceptable range.
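As a small worked example of the arithmetic, the snippet below converts an interval reported in seconds to milliseconds and compares it against the 500ms guideline mentioned above. The function names are my own, and the threshold is simply the figure quoted in this post rather than a value read from vCenter.

```python
VLOCKSTEP_MAX_MS = 500.0  # guideline quoted in the text


def interval_ms(interval_seconds: float) -> float:
    """Convert a vLockstep interval from seconds to milliseconds."""
    return interval_seconds * 1000.0


def vlockstep_ok(interval_seconds: float) -> bool:
    """True when the secondary lags the primary by less than the guideline."""
    return interval_ms(interval_seconds) < VLOCKSTEP_MAX_MS
```

With the value from the example, `vlockstep_ok(0.023)` evaluates to `True`, whereas an interval of 0.75s would not pass.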

Another metric, Log Bandwidth, gives you the current network bandwidth used to replicate changes between the primary and secondary VMs. This can quickly spike when you have multiple FT-protected VMs (max. 4 VMs or 8 vCPUs per host), hence the dedicated 10Gbit network requirement for FT logging.
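The per-host ceiling quoted above (4 VMs or 8 vCPUs) can be expressed as a simple capacity check. This is a sketch under the assumption that you already know the vCPU count of every FT VM currently placed on the host; the function name and the list-based input are hypothetical.

```python
MAX_FT_VMS_PER_HOST = 4
MAX_FT_VCPUS_PER_HOST = 8


def host_can_take(existing_ft_vcpus: list, new_vm_vcpus: int) -> bool:
    """Check whether one more FT VM fits on a host.

    existing_ft_vcpus holds the vCPU count of each FT VM already on the host.
    Both limits must hold after the new VM is added.
    """
    vm_count = len(existing_ft_vcpus) + 1
    vcpu_count = sum(existing_ft_vcpus) + new_vm_vcpus
    return vm_count <= MAX_FT_VMS_PER_HOST and vcpu_count <= MAX_FT_VCPUS_PER_HOST
```

For example, a host already running two 2-vCPU FT VMs can still take a 4-vCPU one, while a host with two 4-vCPU FT VMs has no vCPU headroom left.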

Figure 9 – Fault Tolerance details for an FT-protected virtual machine

You can simulate a host failure by selecting Test Failover from the VM’s context menu. You can also simulate a secondary restart using the same menu. In both instances, the VM is unprotected while the test is in progress.

Figure 10 – Testing failover

In the next video, I show how to perform a failover and a secondary restart along with the following:

- Show where HA (and DRS) is enabled, which in turn lets you deploy FT-protected VMs.

- Verify that the primary and secondary VMs are hosted on different servers.

- Initiate a failover test while pinging an FT-protected VM’s IP address. Normally, you’d see a single packet loss. The 3-packet loss you see is due to the low-performing nested environment used.

- Simulate a secondary restart. Notice that the VM is unprotected for a brief instant but returns to being fully protected after the simulation completes.

Conclusion

Undoubtedly, the overhaul of Fault Tolerance in vSphere 6 makes it a valid tool for ensuring business continuity and, why not, fewer calls in the middle of the night. The dedicated 10Gbit network may prove too expensive a requirement for small businesses. That said, cheaper 10Gbit gear is available but, then again, if you’re using FT to protect mission-critical VMs, it’s best to go the extra mile and work with trusted providers and tried and tested hardware.

For a complete list of requirements and functionality, have a look at the two links.


8 thoughts on "VMware Fault Tolerance as implemented in vSphere 6.0"

Hi, if I need to add a new vmdk to my FT machine, I need to turn FT off and lose the copy, update the VM with my new vmdk, and recreate the copy by turning FT on again? Right? If I only disable FT, the machine doesn’t get updated, is that right?

Thanks in advance and a great post!

Hi,

Yes, to add a new disk, you need to TURN OFF FT (not suspend), add the disk and re-enable FT. This means you lose the original replica which is then recreated from scratch.

regards

Jason

This link says the other way around: I am now confused.

https://docs.vmware.com/en/VMware-vSphere/6.0/com.vmware.vsphere.avail.doc/GUID-57929CF0-DA9B-407A-BF2E-E7B72708D825.html

Hi Shanin,

Can you kindly be more specific so I can correct or clarify where applicable?

Jason

in the beginning you mention

” Max. 4 vCPUs (vSphere Standard and Enterprise licenses)

Max. 2 vCPUs (vSphere Enterprise Plus licenses)”

the vmware website says:

” vSphere Standard and Enterprise. Allows up to 2 vCPUs

vSphere Enterprise Plus. Allows up to 4 vCPUs”

Yep, you’re right. Well spotted. I corrected the post and thanks again for pointing it out.

Jason