
Modern virtualization technologies have matured greatly since the early days of virtualization. The focus is no longer simply on running virtual machines, as it was in days gone by. Now the focus is on applications. Ultimately, applications are what enable business productivity and power business-critical processes. Modern virtualization technologies must support, empower, and accelerate applications.

VMware vSphere 7 contains many new features and enhancements that help accelerate applications in your environment. In this post, we will take a look at application acceleration in vSphere 7 and see how these features help your organization achieve better, faster, and more efficient application delivery.

Application acceleration in vSphere 7

VMware vSphere 7 includes several new capabilities that help deliver applications to end users and business stakeholders with better performance and efficiency. What are these?

- Bitfusion

- Improvements in vMotion

- Improvements in Distributed Resource Scheduler (DRS)

- DRS Scalable shares

Let’s take a look at these new vSphere 7 enhancements to application acceleration and see what capabilities they bring to your applications with vSphere 7.

Bitfusion

VMware acquired Bitfusion, a company that pioneered the virtualization of hardware accelerators, with a strong emphasis on GPU technology. Bitfusion software provides a platform that decouples these physical devices from the servers they are attached to.

Hardware acceleration and especially GPUs are linked closely with two very powerful application technologies that are helping to change how businesses are able to use applications and data – artificial intelligence (AI) and machine learning (ML).

These two terms may sound like something out of a science fiction movie. However, AI and ML are evolving technologies that are now a reality, and both enable software to do things that were never possible before.

Artificial intelligence and machine learning are closely related. In fact, machine learning is a more specific discipline within the broader field of artificial intelligence. Both are concerned with computers solving problems in an intelligent way, without a human explicitly programming every step.

Most organizations today possess massive amounts of data. Data has been described as the new gold because it holds tremendous value, and these massive data sets are what have allowed AI and ML to become a reality; both need data to be effective. Using machine learning, software developers can apply advanced algorithms that allow programs to learn from ingested data and analyze it. Computers can filter through the data and find patterns, anomalies, and other points of interest that allow them to make decisions based on this analysis.

Besides data, the other important ingredient in the effectiveness of AI and ML is processing power. Filtering through massive amounts of data effectively and efficiently requires powerful processing hardware. Modern CPUs, and increasingly GPUs, provide the computing power needed by modern AI and ML applications. Doing this in a virtualized environment requires virtualizing hardware accelerators such as GPUs.

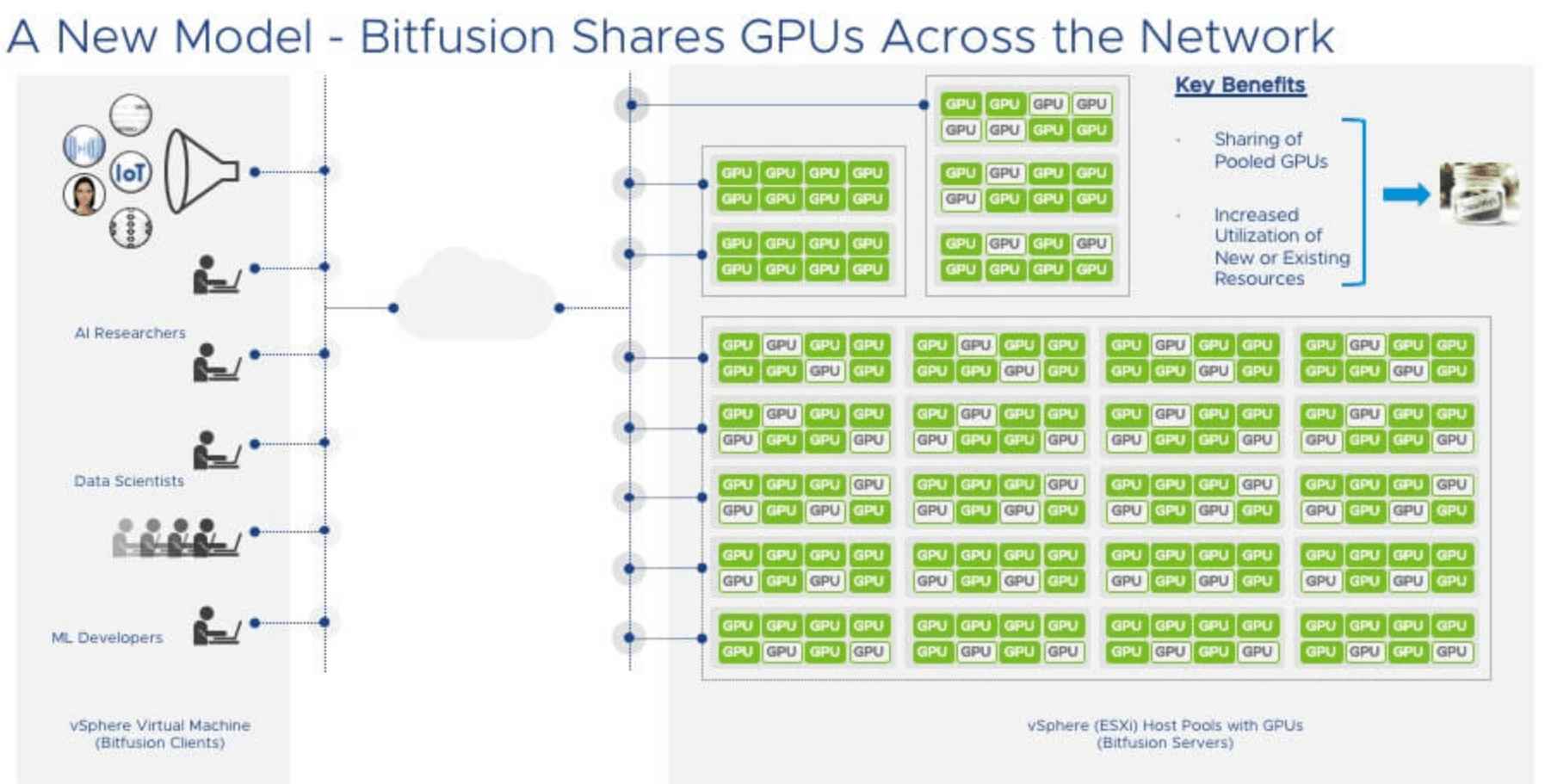

VMware vSphere Bitfusion is integrated natively into vSphere 7 and supports artificial intelligence (AI) and machine learning (ML) workloads by virtualizing hardware accelerators such as GPUs. How does vSphere Bitfusion empower AI/ML workloads? With vSphere Bitfusion, you can create pools of hardware accelerator resources. GPUs are by far the best-known accelerators and are used for many types of processing, including AI/ML. Using Bitfusion, vSphere 7 can create AI/ML cloud pools that can be consumed on demand, allowing GPU resources to be accessed across the network and used effectively and efficiently by intelligent workloads.

To put this into context, Bitfusion allows vSphere to share GPUs in much the same way it began sharing CPUs years ago. This eliminates “stranded” GPU resources that would otherwise sit underutilized in individual hosts. It benefits both customers and service providers, and it opens up new use cases such as service providers offering GPU-as-a-Service.
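To make the pooling idea concrete, here is a minimal sketch of a cluster-wide GPU pool, assuming whole-GPU allocation and a simple first-free placement policy. The `GPU` and `GpuPool` names, the host names, and the policy are all hypothetical; this is not the vSphere Bitfusion API, only an illustration of how a client VM can be handed GPUs that physically live on a different host.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GPU:
    host: str                     # ESXi host the physical GPU is installed in
    index: int                    # GPU slot on that host
    client: Optional[str] = None  # client VM currently using it (None = free)


class GpuPool:
    """Hypothetical cluster-wide GPU pool; NOT the vSphere Bitfusion API."""

    def __init__(self, gpus: List[GPU]) -> None:
        self.gpus = gpus

    def free(self) -> List[GPU]:
        """GPUs not assigned to any client, regardless of which host holds them."""
        return [g for g in self.gpus if g.client is None]

    def allocate(self, client: str, count: int) -> List[GPU]:
        """Assign `count` free GPUs to `client`; they may sit on any host in the pool."""
        available = self.free()
        if len(available) < count:
            raise RuntimeError(f"only {len(available)} GPUs free, {count} requested")
        chosen = available[:count]  # first-free policy (an assumption for this sketch)
        for gpu in chosen:
            gpu.client = client
        return chosen

    def release(self, client: str) -> None:
        """Return every GPU held by `client` to the pool so other workloads can use it."""
        for gpu in self.gpus:
            if gpu.client == client:
                gpu.client = None
```

In a pool of two GPUs on `esxi-01` and one on `esxi-02`, a training VM can take both `esxi-01` GPUs while an inference VM receives the `esxi-02` GPU; when the training VM releases its GPUs, they return to the pool instead of sitting idle on one host.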

Bitfusion allows effectively sharing GPU resources across the network for AI/ML workloads

VMware vSphere Bitfusion is an integrated feature of vSphere 7. Client VMs can run on vSphere 6.7 and higher, while the Bitfusion server side requires vSphere 7 or higher. As for packaging, vSphere Bitfusion is an add-on feature for the vSphere Enterprise Plus edition.

Improvements in vMotion

VMware vSphere vMotion is the feature many people cite as the one that first got them truly excited about virtualization. vMotion allows a running virtual machine to be migrated from one physical host to another without shutting it down. The technology has changed, evolved, and gained features and capabilities with each new release of VMware vSphere.

VMware vSphere 7 introduces several enhancements to the core vMotion feature, with a heavy emphasis on vMotion performance. This is especially true for the so-called “monster” VMs you may have running in your environment.

With vSphere 7, virtual machines can be configured with up to 256 vCPUs and 6 TB of memory (the configuration maximums). These specs certainly constitute a monster virtual machine. Although vMotion works well for the majority of workloads in earlier vSphere releases, customers running monster VMs have reported performance issues when migrating them prior to vSphere 7. Historically, this has been the result of the stun (switch-over) period for the workload.

vSphere 7 includes several improvements that help reduce the problems with vMotioning large virtual machines. These include:

- Loose page trace install

- Page table granularity

- Switch-over phase enhancements

Loose page trace install

One of the most important tasks in a vMotion operation is keeping track of changed memory pages. Because vMotion is carried out on a running virtual machine, the guest operating system continues to operate, and to write data to memory, throughout the process. The vMotion process must therefore keep track of which memory pages change and resend those pages.
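The copy, track, and resend cycle described above can be modeled as an iterative pre-copy loop. This is a minimal sketch under simplifying assumptions (a constant re-dirty rate, a fixed switch-over threshold, and a hypothetical `precopy` function); it is not VMware's vMotion algorithm, but it shows why tracking changed pages matters and how the copy converges.

```python
from typing import Tuple


def precopy(total_pages: int, redirty_pct: int, switchover_pages: int,
            max_rounds: int = 30) -> Tuple[int, int, int]:
    """Simplified model of iterative memory pre-copy during a live migration.

    redirty_pct: percentage of the pages sent in a round that the running guest
    writes to again while that round is in flight (assumed constant).
    Returns (rounds, pages sent while the VM kept running, pages left for switch-over).
    """
    to_send = total_pages  # round 1 copies all of the VM's memory
    sent = 0
    rounds = 0
    # Keep resending changed pages until the remainder is small enough to copy
    # while the VM is briefly stunned, or until we give up trying to converge.
    while to_send > switchover_pages and rounds < max_rounds:
        sent += to_send
        rounds += 1
        to_send = to_send * redirty_pct // 100  # pages dirtied during this round
    return rounds, sent, to_send
```

With a 10% re-dirty rate, `precopy(1_000_000, 10, 1_000)` converges after three rounds and leaves 1,000 pages for the switch-over. With a 100% rate the dirty set never shrinks, and the loop stops at `max_rounds` with the full 1,000,000 pages still outstanding.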

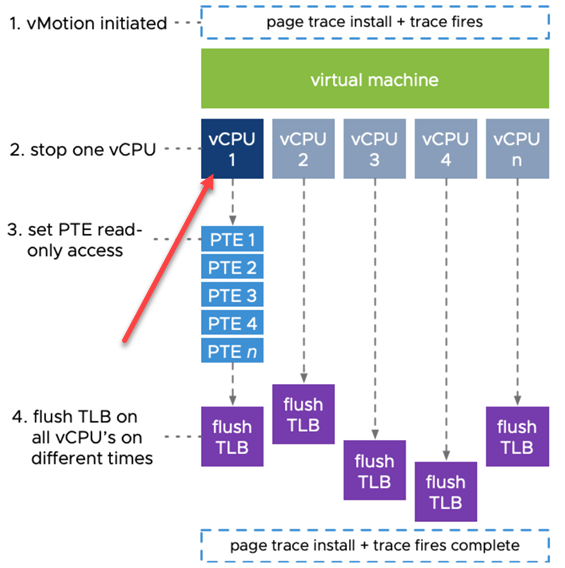

Prior to vSphere 7, this is done by installing a page tracer on every vCPU configured for the VM, which gives the vMotion process visibility into all changes to memory pages. While the page tracer is being installed, all vCPUs are stopped for a few microseconds. Even though this is an extremely brief period, it still disrupts the VM workload, and the more vCPUs allocated to a VM, the greater the disruption.

vSphere 7 optimizes this process with Loose Page Trace Install. The process itself is similar; however, only one vCPU is claimed to perform the tracing work instead of all vCPUs. All other vCPUs continue to run the workload without interruption, which makes the vMotion process in vSphere 7 much more efficient.

VMware vSphere 7 performs “loose page trace installs” involving only one vCPU
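The difference between the two approaches is simple arithmetic. The sketch below counts vCPU-microseconds of guest execution lost per tracer install; the 5 µs pause is a hypothetical value standing in for “a few microseconds”, and the model ignores everything except how many vCPUs are paused.

```python
def trace_install_cost(vcpus: int, pause_us: float, loose: bool) -> float:
    """vCPU-microseconds of guest execution lost while page tracers are installed.

    Simplified model: the traditional method pauses every vCPU for `pause_us`;
    the loose method claims a single vCPU while the others keep running.
    """
    paused = 1 if loose else vcpus
    return paused * pause_us
```

For a 256-vCPU monster VM and the assumed 5 µs pause, the traditional method costs 1,280 vCPU-microseconds per install versus 5 with a loose install, and the gap grows linearly with the vCPU count.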

Page Table Granularity

While the vCPU impact has been greatly reduced in vSphere 7, this was not the only enhancement. VMware also improved the way memory is set to read-only during the vMotion process. The new approach requires less work, which translates directly into greater efficiency.

Prior to vSphere 7, page granularity is 4 KB, and each individual 4 KB page must be set to read-only during the vMotion process. With vSphere 7 and higher, the Virtual Machine Monitor (VMM) sets the read-only flag at a much larger granularity of 1 GB. If memory within a 1 GB region is written during the process, that region is broken down into 2 MB and 4 KB pages. However, most of a VM's memory is typically not accessed during the vMotion process, so the larger granularity usually means far fewer flags to set and better performance.
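A quick calculation shows the scale of the savings for a VM at the 6 TB maximum. This is a minimal sketch; the splitting rule in `flags_with_splits` (each written 1 GB region becomes 512 × 2 MB entries, and one of those becomes 512 × 4 KB entries) is a simplifying assumption, not a description of the VMM's internals.

```python
KIB = 1024
MIB = 1024 * KIB
GIB = 1024 * MIB
TIB = 1024 * GIB


def readonly_flags(vm_memory: int, granularity: int) -> int:
    """Read-only flags needed to cover `vm_memory` bytes at a given page granularity."""
    return -(-vm_memory // granularity)  # ceiling division


def flags_with_splits(vm_memory: int, written_regions: int) -> int:
    """Flags at 1 GB granularity after some regions are written (simplified model).

    Assumes each written 1 GB region is split into 512 x 2 MB entries and that
    exactly one of those 2 MB entries is split again into 512 x 4 KB entries.
    """
    per_split = (GIB // (2 * MIB) - 1) + (2 * MIB // (4 * KIB) - 1)  # 511 + 511
    return readonly_flags(vm_memory, GIB) + written_regions * per_split
```

For 6 TB of memory, 4 KB granularity requires 1,610,612,736 flags, while 1 GB granularity requires 6,144. Even if 100 separate 1 GB regions are written and split under this model, the count only rises to 108,344.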

Switch-over phase enhancements

Another critically important part of the vMotion process is the switch-over phase. Once vMotion has been initiated and the memory copy gets close to convergence, the process is ready to switch over to the destination ESXi host. During this last phase, the VM on the source ESXi host is suspended and the final checkpoint data is sent to the destination host. The switch-over time is also known as the “stun time”, and ideally it is 1 second or less.

The memory bitmap tracks all of a VM's memory and records which pages have been overwritten. New in vSphere 7, the memory bitmap is compacted so that only the information that is actually needed is sent. This shrinks a bitmap that may be 192 MB in size down to just kilobytes, drastically reducing the stun time and helping keep it under the desired 1 second.
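The 192 MB figure corresponds to one bit per 4 KB page of a 6 TB VM. The sketch below computes that size and, to illustrate why compaction helps, encodes only the dirty pages as run-length pairs. The run-length format is a stand-in chosen for this example; this section does not describe the encoding vSphere 7 actually uses, and `transfer_seconds` ignores all protocol overhead.

```python
import struct
from typing import Iterable, List, Tuple

KIB = 1024
MIB = 1024 * KIB
TIB = 1024 * 1024 * MIB


def bitmap_bytes(vm_memory: int, page_size: int = 4 * KIB) -> int:
    """Size of a flat bitmap with one bit per memory page."""
    return vm_memory // page_size // 8


def dirty_runs(dirty_pages: Iterable[int]) -> List[Tuple[int, int]]:
    """Collapse dirty page numbers into (first_page, run_length) pairs."""
    runs: List[Tuple[int, int]] = []
    for page in sorted(set(dirty_pages)):
        if runs and runs[-1][0] + runs[-1][1] == page:
            runs[-1] = (runs[-1][0], runs[-1][1] + 1)  # extend the current run
        else:
            runs.append((page, 1))  # start a new run
    return runs


def encode_runs(runs: List[Tuple[int, int]]) -> bytes:
    """Serialize runs as little-endian 64-bit integers (16 bytes per run)."""
    flat = [value for run in runs for value in run]
    return struct.pack(f"<{len(flat)}Q", *flat)


def transfer_seconds(nbytes: int, gbit_per_s: float) -> float:
    """Time to send `nbytes` over a link of the given speed, ignoring overhead."""
    return nbytes * 8 / (gbit_per_s * 1e9)
```

Sending the full 192 MB bitmap over a 10 Gbit/s link takes about 0.16 seconds on its own under this idealized model, a sizeable share of a 1-second stun budget. By contrast, dirty pages 10, 11, 12, 500, 501, and 9000 collapse into three runs that encode to 48 bytes.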

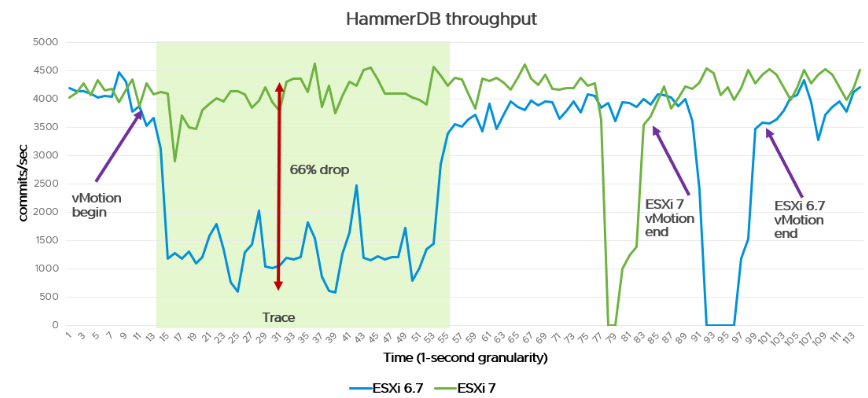

VMware published a comparison of vMotion operations in vSphere 6.7 and vSphere 7. As you can see below, the vSphere 7 enhancements greatly improve virtual machine performance during a vMotion operation.

VMware vSphere 7 vMotion performance is noticeably better than previous vSphere releases (image courtesy of VMware)

Improvements in Distributed Resource Scheduler (DRS)

One of the big advantages of running a vSphere cluster with multiple ESXi hosts is vSphere Distributed Resource Scheduler (DRS). vSphere DRS is a resource scheduling and load balancing solution that provides resource management capabilities, including load balancing virtual machines and selecting the optimal placement for virtual machines in the cluster. DRS also allows administrators to configure resource allocations at the cluster level.

The main purpose of DRS is to make sure that virtual machines running in the vSphere cluster have the resources they need to perform optimally and run efficiently. It has long been said that DRS provides the mechanism to “keep your VMs happy”. When virtual machines are running optimally, this translates into good application performance.

vSphere DRS has been around for a while. It was first introduced in 2006, and while there have been minor enhancements along the way, it has not changed much since. With vSphere 7, VMware has completely reworked DRS to better support today's modern workloads, including new DRS logic and enhancements to the DRS UI in the vSphere Client.

VMware designed the new DRS logic from a workload perspective instead of the cluster perspective used by the old DRS logic. This provides many benefits for the applications running on the virtual machines in a vSphere 7 cluster, including more fine-grained resource scheduling.

For years, the old DRS logic worked at the cluster level, focusing on resource usage on each ESXi host. If a particular ESXi host's resources were over-consumed, DRS would determine whether the balance of cluster resources could be improved and, if so, vMotion virtual machines from one ESXi host to another to improve the balance. However, as well as this process has worked, it does not consider the performance or “happiness” of the virtual machines themselves.

With vSphere 7, VMware has reworked both the logic and the focus of the DRS algorithms. vSphere 7 DRS no longer looks primarily at the balance of the cluster. Instead, it calculates a VM DRS score for each VM and evaluates the score the VM could achieve on each host; VMs are moved to the host that provides the highest VM DRS score.

The big difference is that DRS no longer simply tries to balance host load directly; it uses the VM DRS score metric instead. Another improvement is that the DRS scheduler now runs every minute, whereas in previous vSphere releases it ran every 5 minutes. DRS recommendations are therefore applied at much more frequent intervals, which benefits your VMs and, by extension, application performance.

With the new DRS logic, the obvious question is: how is the VM DRS score calculated? The first point to note is that the VM DRS score is not a health metric for the VM itself. In other words, it does not reflect problems with the guest operating system or configuration issues with the VM.

Rather, it measures the execution efficiency of the virtual machine based on resource contention on its current host. If the VM DRS score on the current host is low, DRS will look to move the VM to a host that can provide a higher score. What metrics are used to calculate the VM DRS score?

The VM DRS score uses metrics such as CPU %ready time, memory swap metrics, and CPU cache behaviour. ESXi host load has not been forgotten entirely: part of the score reflects the reserve resource capacity, or headroom, on the current ESXi host. Is there enough headroom for the virtual machine to consume more resources if needed? Do other ESXi hosts in the cluster have more headroom available? These are some of the questions the VM DRS score helps answer for the overall performance of your virtual machines and applications.
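To show how contention metrics and headroom could combine into a per-host score, here is a minimal sketch. VMware does not publish the formula in this post, so the `Placement` fields, the weights, and the 20% headroom threshold are all hypothetical; only the overall shape (penalize contention, prefer headroom, pick the host with the highest score) follows the description above.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Placement:
    """What a VM would experience on one host (hypothetical inputs, in percent)."""
    cpu_ready_pct: float   # time the VM's vCPUs wait for a physical CPU
    swap_pct: float        # share of the VM's memory being swapped
    cache_miss_pct: float  # stand-in for poor CPU cache behaviour
    headroom_pct: float    # spare capacity left on the host


def vm_drs_score(p: Placement) -> float:
    """Illustrative 0-100 efficiency score. The weights are assumptions, not VMware's formula."""
    penalty = (2.0 * p.cpu_ready_pct
               + 3.0 * p.swap_pct
               + 0.5 * p.cache_miss_pct
               + max(0.0, 20.0 - p.headroom_pct))  # penalize hosts with < 20% headroom
    return max(0.0, min(100.0, 100.0 - penalty))


def best_host(placements: Dict[str, Placement]) -> str:
    """Pick the host on which the VM would get the highest score."""
    return max(placements, key=lambda host: vm_drs_score(placements[host]))
```

For a VM that would see 10% CPU ready, 2% swapping, 10% cache misses, and 5% headroom on `esxi-01`, but 2%, 0%, 4%, and 40% on `esxi-02`, this model scores the hosts 54 and 94, so `best_host` picks `esxi-02`.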

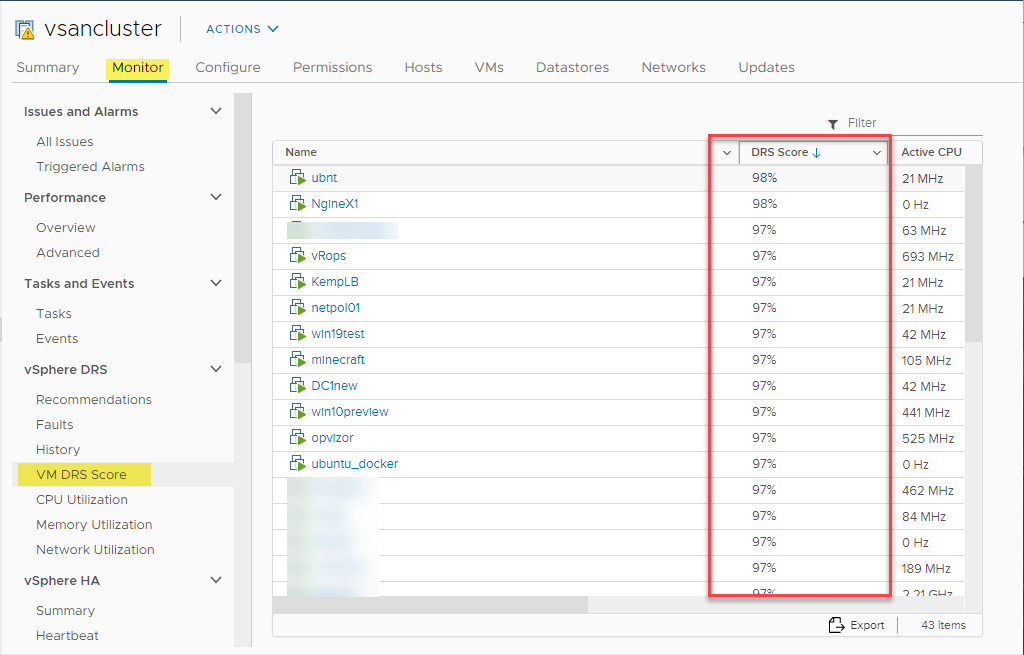

The vSphere Client includes very useful views for the VM DRS score. Under Monitor > vSphere DRS > VM DRS Score, you will see the VM DRS score for each VM running in the cluster. VM DRS scores in the 80-100% range indicate mild or no resource contention. VMware notes that a lower score does not necessarily mean a VM is performing worse, since many metrics influence the score.

Viewing the VM DRS Score for each virtual machine running in a vSphere 7 environment

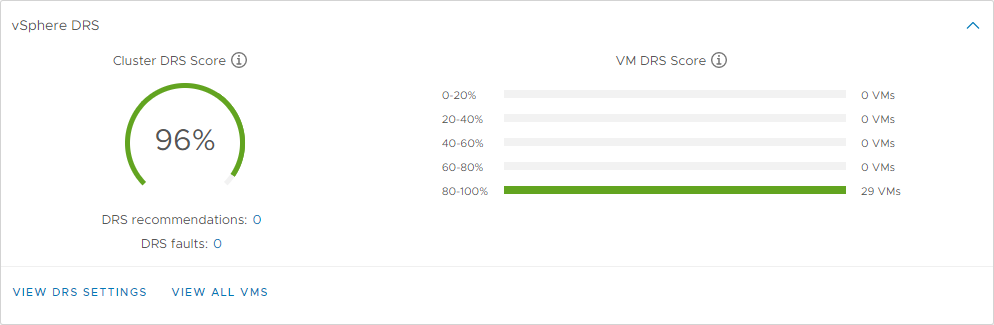

The vSphere 7 Client also displays a DRS score and dashboard for the cluster as a whole, showing how many virtual machines are running and which score range each falls into.

VMware vSphere 7 Cluster DRS score showing the relative happiness of VMs in a vSphere 7 cluster
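A cluster-level summary like this is essentially a histogram of per-VM scores. The sketch below assumes five 20-point ranges, consistent with the 80-100% range mentioned earlier; the range labels and function names are illustrative and are not taken from the vSphere Client or its API.

```python
from collections import Counter
from typing import Dict

RANGES = ["0-20%", "20-40%", "40-60%", "60-80%", "80-100%"]


def score_range(score: float) -> str:
    """Map a 0-100 VM DRS score to a 20-point range; 100 lands in the top range."""
    clamped = max(0.0, min(100.0, score))
    return RANGES[min(int(clamped // 20), len(RANGES) - 1)]


def cluster_summary(scores: Dict[str, float]) -> Dict[str, int]:
    """Count how many VMs fall into each score range."""
    counts = Counter(score_range(s) for s in scores.values())
    return {r: counts.get(r, 0) for r in RANGES}
```

For five VMs scoring 95, 82, 61, 35, and 100, the summary places three VMs in the 80-100% range and one each in the 60-80% and 20-40% ranges.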

DRS Scalable shares

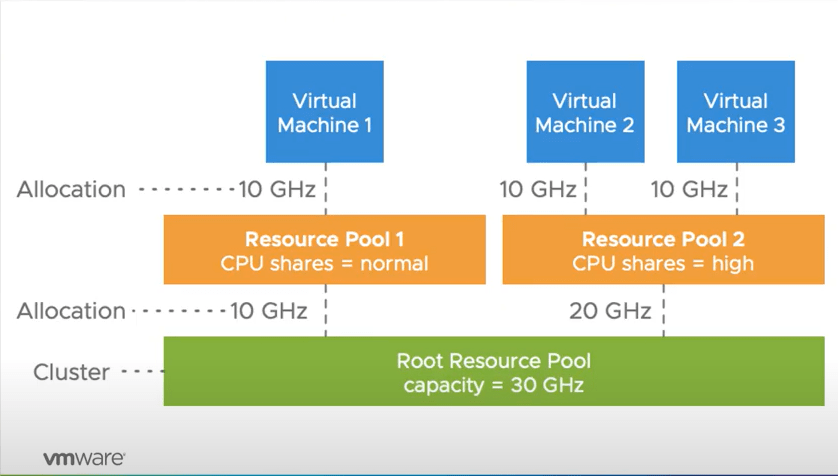

Before vSphere 7, resource pool shares were not scaled relative to the virtual machine workloads they contained. This means that with traditional vSphere DRS, assigning higher CPU shares did not necessarily guarantee a higher resource entitlement. VMware provides a high-level illustration of how resource entitlement worked before vSphere 7.

In previous vSphere releases, you could see resource entitlement similar to the following. A total of 30 GHz of CPU resources can be entitled. In the first resource pool, Resource Pool 1, there is a single virtual machine configured with normal shares. In Resource Pool 2, there are two virtual machines set to high CPU shares. You might expect the virtual machines with high CPU shares to receive a higher entitlement. However, despite the difference between normal and high shares, all the VMs in this scenario receive the same 10 GHz of CPU entitlement, as vSphere DRS simply divides the available resources among the three VMs.

Resource entitlement before vSphere 7

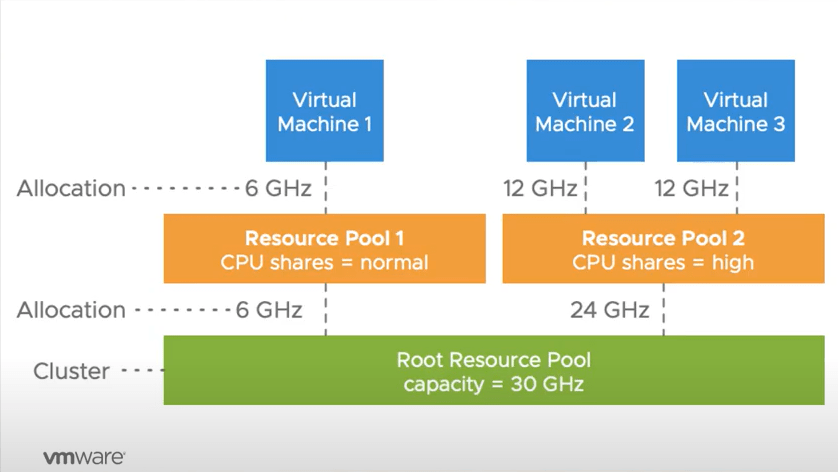

With vSphere 7 scalable shares, DRS can ensure that virtual machines configured with high CPU shares actually receive a higher entitlement. As you can see below, the total CPU capacity is still 30 GHz, but the CPU entitlements calculated for the two high-share virtual machines now reflect the high shares configured for them.

vSphere 7 scalable shares help to ensure high CPU resource entitlements when configured
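The effect of scalable shares can be approximated with proportional-share arithmetic. This minimal sketch assumes every VM has one vCPU with unlimited demand, no reservations or limits, and a pool's effective shares equal to the sum of its VMs' shares; it ignores the pool's own share setting, so its numbers come from this simplified model rather than from VMware's diagram. The per-vCPU values (500, 1000, 2000) are vSphere's default CPU shares for low, normal, and high.

```python
from typing import Dict

# vSphere's default CPU share values per vCPU for a VM at each share level.
VM_CPU_SHARES = {"low": 500, "normal": 1000, "high": 2000}


def scalable_entitlements(capacity_ghz: float,
                          pools: Dict[str, Dict[str, str]]) -> Dict[str, float]:
    """Per-VM CPU entitlement under a simplified scalable-shares model.

    pools maps a resource pool name to {vm name: share level}. Assumptions:
    every VM has 1 vCPU and unlimited demand, there are no reservations or
    limits, and a pool's effective shares equal the sum of its VMs' shares.
    """
    pool_shares = {name: sum(VM_CPU_SHARES[level] for level in vms.values())
                   for name, vms in pools.items()}
    total = sum(pool_shares.values())
    entitlements: Dict[str, float] = {}
    for name, vms in pools.items():
        pool_ghz = capacity_ghz * pool_shares[name] / total  # split capacity between pools
        for vm, level in vms.items():
            # split the pool's capacity among its VMs in proportion to their shares
            entitlements[vm] = pool_ghz * VM_CPU_SHARES[level] / pool_shares[name]
    return entitlements
```

Applied to the scenario above (30 GHz, one normal-share VM in Resource Pool 1, two high-share VMs in Resource Pool 2), the pools receive 1,000 and 4,000 effective shares, so the normal-share VM is entitled to 6 GHz and each high-share VM to 12 GHz.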

Enabling vSphere 7 Scalable Shares

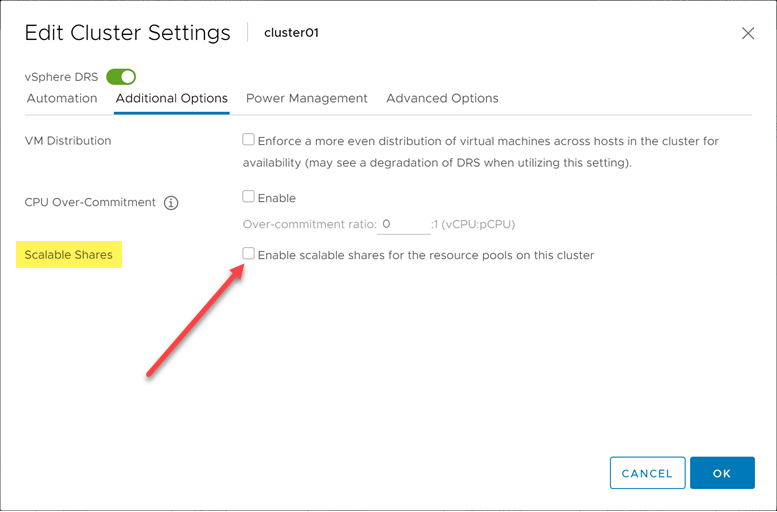

Note that vSphere 7 scalable shares are not enabled by default; you have to turn the feature on to take advantage of it. Scalable shares can be configured in two locations:

- Cluster DRS settings

- Resource pool settings

At the cluster level, enabling scalable shares is a simple checkbox: select Enable scalable shares for the resource pools on this cluster.

You can enable vSphere 7 scalable shares at the cluster-level

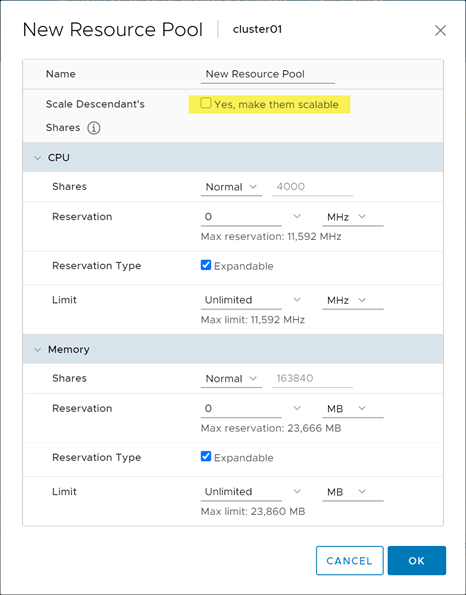

You can also enable scalable shares on each resource pool by selecting Yes, make them scalable for the Scale Descendant's setting.

Enable vSphere 7 scalable shares at the resource pool level

Wrapping Up and Final Thoughts

VMware vSphere has come a long way since the early days, with many new features that help bolster application performance. VMware is setting its sights on modern workloads: rather than simply creating features for VMs, the focus is on the applications those VMs host. Application acceleration is built into VMware vSphere 7 as part of the core product.

New features like Bitfusion help support next-generation workloads that apply artificial intelligence and machine learning algorithms. New vMotion performance mechanisms help minimize the performance impact on your applications when the underlying VMs move between hosts, and they ensure that even “monster VMs” can vMotion across ESXi hosts successfully.

VMware vSphere DRS has been rewritten with new algorithms that give it context about the virtual machines themselves instead of simply balancing the resources of the ESXi hosts in a cluster. This, in turn, helps keep application performance and resource allocation healthy. Scalable shares also make sure that resource pool configuration honours settings such as high shares for CPU entitlement.

The end result is that VMware vSphere 7 provides the best application performance of any vSphere release to date. It supports modern workloads in your environment and keeps the focus on the applications and the underlying VMs running them.
