
When most VMware administrators provision virtual machines, they simply add the requested CPU and memory and go on their merry way. However, it is possible to oversize a VM, and an oversized VM can run slower than it would with less CPU or memory assigned to it. Nowadays we are starting to see large VMs with 24 vCPUs for big databases. Lately I've been getting a lot of large VM requests for SQL clusters and for GDPR software (which is becoming a big thing now!). We need to be especially careful about how we provision these VMs, because an optimized configuration prevents many of the “slowness” issues we would otherwise run into down the road. When provisioning large VMs, there are two big concepts you need to understand well: sizing a VM larger than the host's NUMA node size, and assigning too many vCPUs to a VM that is not the only guest on its host (CPU ready, or RDY, time). Let's go more in-depth on each.

Sizing VMs Larger than the vNUMA Node Size

When a VM is allocated more resources than fit in one of the ESXi host's physical NUMA nodes, ESXi presents a virtual NUMA (vNUMA) topology to the VM (by default, for VMs with more than 8 vCPUs) so that the VM can still benefit from NUMA on the physical host. What is NUMA? It stands for Non-Uniform Memory Access, a memory architecture used in systems with multiple processors. In a server with 2 physical processors, the memory is split between the CPUs according to the memory slots each CPU owns on the motherboard, and each CPU together with its local memory forms a NUMA node. A CPU accesses its own local memory quickly; it can also access memory attached to the other CPU when it needs more, but that remote access is slower. Before ESXi 6.5, the vNUMA topology wasn't always optimal, and we often had to experiment with the sockets vs. cores setting. In vSphere 6.5 and 6.7, vNUMA is smarter: it is no longer controlled by the VM's Cores per Socket setting, and the host automatically presents the most optimized vNUMA topology to the VM unless advanced settings override it. We still need to be conscious of memory, though. If the memory assigned to a VM is larger than the NUMA node size, we need to split up the sockets vs. cores accordingly.
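The sizing logic above can be sketched in Python. This is a simplified model, not VMware's placement algorithm: it assumes a VM needs as many NUMA nodes as it takes to hold both its vCPUs and its memory locally, and it ignores ESXi memory overhead, hyperthreading and advanced settings. The function names and the host figures (12 cores and 28 GB per node) are hypothetical.

```python
import math

def numa_nodes_needed(vcpus: int, mem_gb: float,
                      cores_per_node: int, mem_per_node_gb: float) -> int:
    """Smallest number of physical NUMA nodes that can hold both the VM's
    vCPUs and its memory locally (simplified: ignores overhead and HT)."""
    by_cpu = math.ceil(vcpus / cores_per_node)
    by_mem = math.ceil(mem_gb / mem_per_node_gb)
    return max(by_cpu, by_mem)

def suggest_sockets(vcpus: int, mem_gb: float,
                    cores_per_node: int, mem_per_node_gb: float) -> tuple[int, int]:
    """Return (sockets, cores_per_socket): one socket per NUMA node needed,
    bumped up until the vCPUs divide evenly across the sockets."""
    nodes = numa_nodes_needed(vcpus, mem_gb, cores_per_node, mem_per_node_gb)
    sockets = min(nodes, vcpus)  # never more sockets than vCPUs
    while vcpus % sockets != 0:
        sockets += 1
    return sockets, vcpus // sockets

# Hypothetical host: 12 cores and 28 GB of memory per NUMA node.
print(suggest_sockets(10, 36, 12, 28))  # (2, 5)
print(suggest_sockets(10, 16, 12, 28))  # (1, 10)
```

A 10-vCPU VM fits one node by CPU count, but 36 GB of memory does not, so the sketch suggests two sockets; with 16 GB everything fits locally and one socket is enough.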

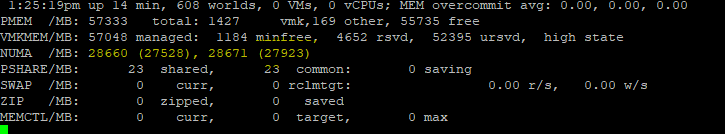

How do I find my host's NUMA node size? Easy: open an SSH session to your host and type the following command to start ESXTOP:

esxtop

Then press “m” to switch to the memory view.

On my host, I can see that I have 2 NUMA nodes, each about 28GB in size; ESXTOP lists the nodes separated by commas. So what happens if we allocate 36GB of memory to a VM with 1 socket and 10 cores? Because the VM's memory doesn't fit in a single node, part of it has to come from the second NUMA node. That memory is accessed remotely, since we are borrowing it from the neighboring NUMA node, and the extra latency can hurt the VM's performance. In this scenario, it is recommended to split the VM across sockets so that a vNUMA node is created for each socket: a configuration of 2 sockets with 5 cores each (18GB of memory per node, well under the 28GB node size) gives a much better-optimized VM.
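If you want to check node sizes in a script rather than by eye, the NUMA line from the esxtop memory view can be parsed. This is a minimal sketch under assumptions: the sample line is hypothetical, and it assumes each node is shown as "total (free)" in MB, separated by commas. The exact formatting may differ between ESXi versions, so verify the layout and the regular expression against your own host. The helper names are hypothetical.

```python
import re

# Hypothetical NUMA line from the esxtop memory view. Assumed layout:
# "total (free)" in MB for each node, with nodes separated by commas.
SAMPLE = "NUMA /MB: 28662 ( 1203), 28672 ( 3045)"

def parse_numa_line(line: str) -> list[tuple[int, int]]:
    """Return a (total_mb, free_mb) pair for each NUMA node in the line."""
    _, _, values = line.partition(":")
    return [(int(total), int(free))
            for total, free in re.findall(r"(\d+)\s*\(\s*(\d+)\s*\)", values)]

def remote_memory_gb(vm_mem_gb: float, node_total_mb: int) -> float:
    """GB of VM memory that cannot be local if the whole VM sits on one node
    (simplified: assumes the entire node is available to this VM)."""
    return max(0.0, vm_mem_gb - node_total_mb / 1024)

nodes = parse_numa_line(SAMPLE)
print([round(total / 1024, 1) for total, _ in nodes])  # [28.0, 28.0]
print(round(remote_memory_gb(36, nodes[0][0]), 1))     # 8.0
print(remote_memory_gb(18, nodes[0][0]))               # 0.0
```

With about 28GB per node, a single-node 36GB VM would need roughly 8GB of remote memory, while each 18GB half of a 2-socket configuration fits locally.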

Over-sizing CPU

The more vCPUs a VM has, the harder it is for the ESXi scheduler to find enough free physical cores to run them, even if the guest isn't actually using all of them. ESXi uses relaxed co-scheduling, so it does not strictly wait for every vCPU to be free at the same moment, but it still has to keep a VM's vCPUs progressing roughly together. As a result, a wide VM can lag when other VMs are using the host CPU at the same time. A good rule of thumb when sizing a VM: if the guest's CPU utilization stays below 20% on average, reduce the number of vCPUs; if the VM sits at 100% CPU utilization all the time, increase the vCPU count. Right-sizing like this helps each VM get the best CPU performance it can.
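The rule of thumb above can be expressed as a small function. This is a sketch, assuming you already have guest CPU utilization samples (in percent) collected over a representative period from whatever monitoring you use; the function name and the interpretation of "at all times" as "every sample" are assumptions.

```python
from statistics import mean

def vcpu_advice(utilization_pct: list[float],
                low: float = 20.0, high: float = 100.0) -> str:
    """Rule of thumb: shrink if the average stays under `low`,
    grow if every sample is pinned at `high`, otherwise keep."""
    if not utilization_pct:
        raise ValueError("need at least one utilization sample")
    if mean(utilization_pct) < low:
        return "reduce vCPUs"
    if min(utilization_pct) >= high:
        return "add vCPUs"
    return "keep current vCPU count"

print(vcpu_advice([8, 12, 15, 10]))   # reduce vCPUs
print(vcpu_advice([100, 100, 100]))   # add vCPUs
print(vcpu_advice([35, 60, 90]))      # keep current vCPU count
```

In practice you may prefer a slightly lower `high` value (for example 95) so that a VM that is almost always pegged still counts as undersized.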

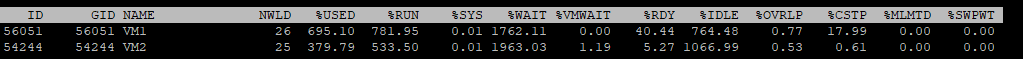

Another metric to watch when provisioning resources is CPU Ready (RDY) time. This is the metric vSphere uses to record how long a VM's vCPU was ready to run but had to wait for a physical CPU to become available. The threshold to look for is usually 10% per vCPU; a VM above that will usually be experiencing some slowness. How do I find the CPU RDY time? Easy: just like the NUMA node example above, we use ESXTOP. SSH into a host and type the following:

esxtop

Then press “c” to switch to the CPU view, where you will see the %RDY column. In my example, I have two VMs to which I allocated large numbers of vCPUs, and I ran a CPU benchmark program to generate a heavy CPU workload. In the %RDY column we can see that VM1 is experiencing performance issues, with a value above 10%.
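The arithmetic behind %RDY can be sketched in Python. %RDY is the ready time divided by the sample period; 5 seconds is esxtop's default refresh interval. In esxtop's default grouped view, a VM's %RDY is the sum over all of its worlds, so on a multi-vCPU VM it can exceed 100%; dividing by the vCPU count gives an approximate per-vCPU value to compare with the 10% guideline (pressing “e” and entering the VM's group ID shows the per-vCPU values directly). The readings and helper names below are hypothetical, not the values from my screenshot.

```python
def ready_percent(ready_ms: float, sample_seconds: float = 5.0) -> float:
    """%RDY: time spent ready-but-waiting as a share of the sample period."""
    return ready_ms * 100 / (sample_seconds * 1000)

def per_vcpu_ready(vm_rdy_pct: float, vcpus: int) -> float:
    """Approximate %RDY per vCPU. In esxtop's grouped view a VM's %RDY is
    summed over its worlds, so it can exceed 100% on a multi-vCPU VM."""
    if vcpus < 1:
        raise ValueError("vcpus must be at least 1")
    return vm_rdy_pct / vcpus

def flag_vms(readings: dict[str, tuple[float, int]],
             threshold: float = 10.0) -> list[str]:
    """Names of VMs whose per-vCPU ready time exceeds the threshold."""
    return sorted(name for name, (rdy, vcpus) in readings.items()
                  if per_vcpu_ready(rdy, vcpus) > threshold)

print(ready_percent(500))  # 10.0 (500 ms of ready time in a 5 s sample)

# Hypothetical readings: (VM-level %RDY, vCPU count).
readings = {"VM1": (96.0, 8), "VM2": (24.0, 8)}
print(flag_vms(readings))  # ['VM1']
```

Pass `sample_seconds` explicitly if you changed the esxtop refresh interval or are converting ready time collected over a different interval.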

Wrap-Up

It's very important to watch for these two performance “gotchas” when provisioning large VMs, especially since many of these VMs serve databases that are the back-ends of primary business applications and cannot afford slow-downs. So do your homework and know your VMware infrastructure's NUMA node sizes, so that you can develop processes for provisioning large VMs. It is also a good idea to keep all VMware hosts on the same hardware and resource specs as much as possible, so that a VM sized for one host's NUMA layout is also a good fit on the others.

Have you ever been bitten by this? Have you seen a misconfiguration with one of the above items cause issues? How did you end up fixing it? Let us know in the comments section below!

Thanks for reading!


9 thoughts on "These vSphere Configurations Will Slow Down Your VMs"

Luke Orellana,

Great topic! Can you explain a little more about NUMA when configuring small VMs?

My boss usually configures the VMs on a VMware host to use only the host's existing CPUs.

Example:

Host configuration: 12 CPUs and 32 GB of RAM

First VM: 2 CPUs and 4 GB of RAM

Second VM: 4 CPUs and 8 GB of RAM

Third VM: 4 CPUs and 16 GB of RAM

Fourth VM: 2 CPUs and 4 GB of RAM

So she assigns exactly the host's number of CPUs and amount of RAM. But a colleague says she is doing it wrong: we could configure, say, 4 CPUs in the first VM, and even with 14 CPUs in total, VMware will know how to handle it.

Can you shed a light here please?

Hi, this sounds like a great future post. Thanks for the idea.

Looking forward to it! Thanks!!!

Thanks for sharing