
When it comes to Hyper-V, storage is a massive topic. There are enough possible ways to configure storage that it could almost get its own book, and even then something would likely get forgotten. This post is the first of a multi-part series that will try to cover just about everything there is to know about storage. I won’t promise that it won’t miss something, but the intent is to make it all-inclusive.

Part 1 – Hyper-V storage fundamentals

Part 2 – Drive Combinations

Part 3 – Connectivity

Part 4 – Formatting and file systems

Part 5 – Practical Storage Designs

Part 6 – How To Connect Storage

Part 7 – Actual Storage Performance

The Beginning

This series was actually inspired by MVP Alessandro Cardoso, whom you can catch up with on his blog over at CloudTidings.com. He was advising me on another project in which I was spending a lot of time and effort on the very basics of storage. Realistically, it was too in-depth for that project. His advice was simply to move it here. Who am I to argue with an MVP?

This first entry won’t deal with anything that is directly related to Hyper-V. It will introduce you to some very nuts-and-bolts aspects of storage. For many of you, a lot of this material is going to be pretty remedial. Later entries in this series will build upon the contents of this post as though everyone is perfectly familiar with them.

Intro

In the morass of storage options, one thing remains constant: you need drives. You might have a bunch of drives in a RAID array. You might stick a bunch of drives in a JBOD enclosure. You might rely on disks inside your host. Whatever you do, it will need drives. These are the major must-haves of any storage system, so it is with drives that we will kick off our discussion.

Spinning Disks

Hard disks with spinning platters are the venerated workhorses of the datacenter and are a solid choice. Their pros are:

- The technology is advanced and mature

- Administrators are comfortable with them

- They provide the lowest cost per gigabyte of space

- They offer acceptable performance for most workloads

Cons are:

- Their reliance on moving parts makes them some of the most failure-prone equipment in modern computing

- Their reliance on moving parts makes them some of the slowest equipment in modern computing

Spinning disks come in many forms and there are a lot of things to consider.

Rotational Speed and Seek Time

Together, rotational speed and seek time are the primary determinants of how quickly a hard drive performs. As the disk spins, the drive head is moved to the track that contains the location it needs to read or write and, as that location passes by, performs the necessary operation.

How quickly the disk spins is measured in revolutions per minute (RPM). Typical disk speeds range from 5,400 RPM to 15,000 RPM. For most server-class workloads, especially an active virtualization system, drives of 10,000 RPM and higher are recommended. Drives spinning at 7,200 RPM may be acceptable in low-load systems. Slower drives should be restricted to laptops, desktops, and storage systems for backup.

The second primary metric is seek time, occasionally (if loosely) called access time; strictly speaking, access time also includes the wait for the platter to rotate. Seek time is the amount of time it takes for the drive’s read/write head to travel from its current track to the next track needed for a read/write operation. Manufacturers usually publish a drive’s average seek time, which is determined by measuring seeks using a random pattern. Sometimes its maximum seek time will also be given, which measures how long it takes the head to travel across the entire platter. Lower seek times are better.

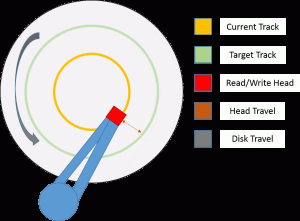

Consider the following diagram (I apologize for the crudity of this drawing, I’m a pretty good sysadmin for an artist and the original plan was to have an actual artist redo this):

Basically, the platter is always spinning at full speed in the direction indicated by its arrow. The read/write head moves on its arm in the direction indicated by the other arrow. What it has to do is move to the track where it needs to read or write data, then wait for the disk to spin around to the necessary spot. If you’ve ever seen a video of a train picking up mail from a hook as it went past, it’s sort of like that, only at much, much, much higher speeds. The electronics in the drive tell it the precise moment when it needs to perform its read or write operation.
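The “move to the track, then wait for the spot to come around” routine can be put into rough numbers. The Python sketch below is a simplified model, not a manufacturer formula: it assumes that, on average, the head arrives at the right track half a revolution away from its target, and the function names are hypothetical. The seek time you feed it would come from a drive’s datasheet.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average wait, in milliseconds, for the target spot to rotate under the head.

    One revolution takes 60,000 ms / RPM. On average the target is half a
    revolution away when the head reaches the track, so we wait half of that.
    """
    full_revolution_ms = 60_000 / rpm
    return full_revolution_ms / 2


def avg_access_time_ms(rpm: float, avg_seek_ms: float) -> float:
    """Rough average time to reach a random location: seek to the track, then
    wait for the rotation. Ignores controller overhead and data transfer time."""
    return avg_seek_ms + avg_rotational_latency_ms(rpm)


if __name__ == "__main__":
    for rpm in (5_400, 7_200, 10_000, 15_000):
        latency = avg_rotational_latency_ms(rpm)
        print(f"{rpm:>6} RPM: {latency:.2f} ms average rotational latency")
```

This works out to roughly 5.56 ms at 5,400 RPM, 4.17 ms at 7,200 RPM, 3.00 ms at 10,000 RPM, and 2.00 ms at 15,000 RPM, on top of whatever the seek itself costs.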

Cache

Drives have a small amount of onboard cache memory, which is typically used only for reads. If the computer requests data that happens to be in the cache, the drive sends it immediately without waiting for an actual read operation, generally at the maximum speed of the computer-to-drive bus. Most drives will not use their onboard cache to hold writes, because data that had already been reported to the computer as written would be lost during a power outage. Mechanisms that save writes in the cache (for subsequent reads) and also directly on the platter are called “write-through” caches. “Write-back” caches place writes in the cache and return to the operating system immediately, allowing the disk to commit them to the platter when it gets around to it. Write-back caches should be battery-backed so that acknowledged writes survive a power loss. In general, the larger the cache, the better the overall performance.
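To make the difference between the two cache modes concrete, here is a toy Python model. It is a hypothetical illustration, not how any real drive firmware works: the `CachedDrive` class and its methods are invented for this example, and “power loss” simply means the volatile cache is wiped with no battery to preserve it.

```python
from typing import Dict, Optional, Set


class CachedDrive:
    """Toy model of a drive's onboard cache (hypothetical, for illustration).

    "write-through": each write goes to the cache *and* the platter before
    the drive acknowledges it.
    "write-back": each write goes only to the cache and is acknowledged
    immediately; it reaches the platter later, when flush() runs.
    """

    def __init__(self, mode: str) -> None:
        if mode not in ("write-through", "write-back"):
            raise ValueError(f"unknown cache mode: {mode!r}")
        self.mode = mode
        self.cache: Dict[int, bytes] = {}    # block -> data, volatile
        self.platter: Dict[int, bytes] = {}  # block -> data, survives power loss
        self.dirty: Set[int] = set()         # cached blocks not yet on the platter

    def write(self, block: int, data: bytes) -> None:
        self.cache[block] = data
        if self.mode == "write-through":
            self.platter[block] = data       # persisted before returning
        else:
            self.dirty.add(block)            # acknowledged, but only in cache

    def read(self, block: int) -> Optional[bytes]:
        if block in self.cache:              # cache hit: no platter access needed
            return self.cache[block]
        return self.platter.get(block)

    def flush(self) -> None:
        """Commit every pending write-back block to the platter."""
        for block in self.dirty:
            self.platter[block] = self.cache[block]
        self.dirty.clear()

    def power_loss(self) -> None:
        """Simulate an outage with no battery backup: cache contents vanish."""
        self.cache.clear()
        self.dirty.clear()


if __name__ == "__main__":
    for mode in ("write-through", "write-back"):
        drive = CachedDrive(mode)
        drive.write(7, b"payroll")   # the OS is told this write succeeded
        drive.power_loss()
        print(f"{mode}: block 7 after power loss -> {drive.read(7)!r}")
```

The write-through drive still returns `b'payroll'` after the outage, while the write-back drive returns `None`: the write was acknowledged but never reached the platter. A battery-backed write-back cache exists precisely to close that gap.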

IOPS

Input/output operations per second (IOPS) is sort of a catch-all metric that encompasses all possible operation types on the hard drive, measured using a variety of tests. Due to the unpredictable nature of the loads on shared storage in a virtualized environment, the metric of most value is usually random IOPS, although you will also find more specific metrics such as sequential write IOPS and random read IOPS. If the manufacturer publishes separate read and write IOPS figures, keep in mind that most server loads, including virtualization, are heavier on reads than on writes. Higher IOPS is better.
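For a spinning disk, a ballpark random IOPS figure falls out of rotational speed and seek time: each random I/O costs roughly one average seek plus half a revolution. The sketch below is a common rule of thumb rather than how vendors measure IOPS; the function name is hypothetical, and the seek times in the example are illustrative placeholders, not figures for any real drive model.

```python
def estimated_random_iops(rpm: float, avg_seek_ms: float) -> float:
    """Rule-of-thumb random IOPS for a single spinning disk.

    Each random I/O costs roughly one average seek plus half a revolution of
    rotational latency. Data transfer time, onboard cache hits, and command
    queuing are ignored, so treat the result as a ballpark figure.
    """
    rotational_latency_ms = (60_000 / rpm) / 2
    service_time_ms = avg_seek_ms + rotational_latency_ms
    return 1_000 / service_time_ms


if __name__ == "__main__":
    # Seek times here are illustrative placeholders, not figures for any real model.
    for rpm, seek_ms in ((7_200, 8.5), (10_000, 4.5), (15_000, 3.5)):
        print(f"{rpm:>6} RPM, {seek_ms} ms seek: ~{estimated_random_iops(rpm, seek_ms):.0f} IOPS")
```

With those placeholder seek times the estimates come out at roughly 79, 133, and 182 IOPS, which shows why a single spinning disk sits in the low hundreds at best for random work.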

Sustained transfer rate is effectively the rate at which the drive can continuously transfer data over an extended operation, without help from its cache, usually measured in megabytes per second. There are a variety of ways to ascertain this speed, so you should assume that manufacturers are publishing optimistic numbers. A higher number for sustained transfer rate is better.

Form Factor

Spinning disks come in two common sizes: 2.5-inch and 3.5-inch. The smaller size limits the platter radius, which limits the distance that the drive head can possibly cover. This automatically reduces the maximum seek time and, as a result, lowers the average seek time as well. The drawback is that the surface area of the platters is reduced. So, 2.5-inch drives tend to be faster, but they do not come in the same capacities as their 3.5-inch cousins.

4 Kilobyte Sector Sizes

Update 9/20/2013: A note from reader Max pointed out some serious flaws in this section that have now been corrected.

A recent advance in hard drive technology has been the move to 4-kilobyte sector sizes. A sector is a logical division of a hard drive track that is designated to hold a specific number of bytes. Historically, a sector has been almost universally defined as being 512 bytes in size. Eventually, however, this became an obstacle: expanding hard drive capacities by further increasing bit density was no longer practical with 512-byte sectors. By increasing sector sizes to 4,096 bytes (4k), superior error-correction methods can be employed to help protect data from the inevitable degradation of the magnetic state of a sector (fondly known as “bit rot”).

The drawback with 4k sectors is that not all operating systems support them. So, in the interim, many “Advanced Format” drives use emulation to present themselves as 512-byte-per-sector drives. These are noted as 512e (for emulated) drives, as opposed to 512n (for native) drives. This emulation incurs a substantial performance penalty during write operations: any write that does not cover whole, aligned 4k physical sectors forces the drive to read the physical sector, modify it, and write it back. Because Windows Server 2012, and by extension Hyper-V Server 2012, can work directly with 4k sector drives, it is recommended that you choose either 512n or 4k sector drives.
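The write penalty on 512e drives comes from partial physical sectors. The Python sketch below, a simplified model with hypothetical function names, maps a write expressed in 512-byte logical sectors onto 4k physical sectors and reports whether the drive would have to read-modify-write. The example uses a partition starting at logical sector 63, the offset older versions of Windows used by default, to show how a misaligned 4k write straddles two physical sectors.

```python
LOGICAL_SECTOR = 512    # sector size a 512e drive reports to the operating system
PHYSICAL_SECTOR = 4096  # sector size the drive actually reads and writes


def lba_to_offset(lba: int) -> int:
    """Byte offset of a logical block address on a 512e drive."""
    return lba * LOGICAL_SECTOR


def physical_sectors_touched(offset: int, length: int) -> range:
    """Indices of the 4k physical sectors that a write of `length` bytes overlaps."""
    first = offset // PHYSICAL_SECTOR
    last = (offset + length - 1) // PHYSICAL_SECTOR
    return range(first, last + 1)


def needs_read_modify_write(offset: int, length: int) -> bool:
    """True if the write only partly covers at least one physical sector.

    A partly covered 4k sector cannot simply be overwritten: the drive must read
    it, merge in the new 512-byte pieces, and write the whole sector back.
    """
    starts_aligned = offset % PHYSICAL_SECTOR == 0
    ends_aligned = (offset + length) % PHYSICAL_SECTOR == 0
    return not (starts_aligned and ends_aligned)


if __name__ == "__main__":
    # A 4k write to an aligned location vs. one shifted to LBA 63.
    for lba in (0, 63):
        offset = lba_to_offset(lba)
        print(
            f"4k write at LBA {lba}: sectors {list(physical_sectors_touched(offset, 4096))}, "
            f"read-modify-write={needs_read_modify_write(offset, 4096)}"
        )
```

The aligned write touches only physical sector 0 and needs no extra work; the same 4k write starting at LBA 63 lands across sectors 7 and 8 and forces a read-modify-write on both.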

Solid-State Storage

Solid-state storage, generally found in the form of Solid-State Drives (SSDs), uses flash memory technology. Because they have no moving parts, SSDs have none of the seek or rotational latency issues of spinning hard drives, and they consume much less power in operation. Their true attractiveness is speed; SSD sustained data transfer rates are an order of magnitude faster than those of conventional drives. However, they are much more expensive than spinning disks.

Another consideration with SSDs is that every write operation causes irreversible deterioration to their flash memory, such that the drive eventually becomes unusable. As a result of this deterioration, early SSDs had a fairly short life span. Manufacturers have since made substantial improvements that extend the overall lifespan of SSDs. There are two important points to remember about this deterioration:

- A decent SSD will be able to process far more write operations in its lifetime than a spinning disk

- The wearing effects of data writes on an SSD are measurable, so the amount of remaining life for an SSD is far more predictable than for a spinning disk

The counterbalance is that SSDs are so much faster than spinning disks that they can also pile up write operations, and the wear that comes with them, at a much higher rate. Every workload is unique, so there is no certain way to know just how long an SSD will last in your environment. Typical workloads are much heavier on reads than on writes, and read operations do not have the wearing effect of writes. Most installations can reasonably expect their SSDs to last at least five years. Just watch out for that imbalanced administrator who needs to defragment something every few minutes because he’s convinced that someone was almost able to detect a slowdown. He’s going to need to be kept far away from your SSDs.
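Because SSD wear is measurable, lifespan can be roughed out with simple arithmetic once you know the drive’s rated write endurance (often published as total terabytes written, TBW) and how much your workload writes. The sketch below is a back-of-the-envelope model, not a vendor formula: the function name is hypothetical, the example figures are made up, and `write_amplification` (the extra internal writes an SSD performs for each host write) is an assumed input you would have to estimate for your own drive and workload.

```python
def estimated_ssd_lifespan_years(
    endurance_tbw: float,
    host_writes_gb_per_day: float,
    write_amplification: float = 1.0,
) -> float:
    """Back-of-the-envelope SSD lifespan from a rated write endurance.

    endurance_tbw: rated total terabytes written before the flash is worn out.
    host_writes_gb_per_day: average data the host writes per day.
    write_amplification: internal flash writes per host write (1.0 = none).
    Reads are left out on purpose: they do not wear flash cells.
    """
    if host_writes_gb_per_day <= 0:
        raise ValueError("host_writes_gb_per_day must be positive")
    flash_tb_written_per_day = host_writes_gb_per_day * write_amplification / 1_000
    return endurance_tbw / flash_tb_written_per_day / 365


if __name__ == "__main__":
    # Hypothetical drive rated for 3,650 TBW; the workloads are illustrative only.
    for gb_per_day, wa in ((1_000, 1.0), (1_000, 2.0), (200, 1.5)):
        years = estimated_ssd_lifespan_years(3_650, gb_per_day, wa)
        print(f"{gb_per_day} GB/day, WA {wa}: ~{years:.1f} years")
```

For example, a hypothetical drive rated for 3,650 TBW absorbing 1,000 GB of host writes per day would last about 10 years at a write amplification of 1.0, or 5 years at 2.0. That same arithmetic is why the compulsive defragmenter is such a problem: every unnecessary write comes straight out of the endurance budget.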

There are two general types of SSD: single-level cell (SLC) and multi-level cell (MLC). A single-level cell is binary: it’s either charged or it isn’t. This means that SLC can hold one bit of information per cell. An MLC cell distinguishes between multiple charge states, so each cell can hold more than a single bit of data. MLC has a clear advantage in density, so you can find these drives at a lower cost per gigabyte. However, SLC is much more durable. For this reason alone, SLC is almost exclusively the SSD of choice in server-class systems where data integrity is a priority. As added bonuses, SLC has superior write performance and uses less energy to operate.
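The density trade-off follows from simple arithmetic: a cell that stores n bits must reliably distinguish 2^n charge levels. The simplified sketch below (hypothetical function names, raw capacity only, ignoring spare area and error correction) shows MLC getting twice the capacity out of the same cells as SLC, at the cost of squeezing four charge levels into roughly the same voltage range, which leaves less margin for the wear-induced drift that eventually makes a cell unreadable.

```python
def charge_levels(bits_per_cell: int) -> int:
    """Distinct charge states a flash cell must reliably tell apart."""
    return 2 ** bits_per_cell


def raw_capacity_bytes(cells: int, bits_per_cell: int) -> int:
    """Raw capacity of `cells` flash cells, ignoring spare area and ECC."""
    return cells * bits_per_cell // 8


if __name__ == "__main__":
    cells = 8 * 1024**3  # an arbitrary number of cells for comparison
    for name, bits in (("SLC", 1), ("MLC", 2)):
        gib = raw_capacity_bytes(cells, bits) / 1024**3
        print(f"{name}: {charge_levels(bits)} charge levels per cell, {gib:.0f} GiB from the same cells")
```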

Performance measurements for SSDs can vary widely, but they are capable of read IOPS in the tens of thousands, write IOPS in the thousands, and transfer rates of hundreds of megabytes per second.

Drive Bus

The drive bus is the technology used to connect a drive to its controller. If you’re thinking about using shared storage, don’t confuse this connection with the external host-to-storage connector. The controller being covered in this section is inside the computer or device that directly connects to the drive(s). As an example, you can connect an iSCSI link to a storage device whose drives are connected to a SAS bus.

The two most common drive bus types used today are Serial-Attached SCSI (SAS) and Serial ATA (SATA). Fibre Channel drives are also available, but they have largely fallen out of favor as a result of advances in SAS technology.

SAS is an iteration of the SCSI bus that uses serial connections instead of parallel ones. SCSI controllers are designed with the limitations of hard drives in mind, so requests for drive access are quite well-optimized. SAS is most commonly found in server-class computing devices.

SATA is an improvement upon the earlier PATA bus. Like SAS to SCSI, it signaled a switch from parallel connectivity to serial. Beyond that, an early iteration of the SATA bus also introduced a very important new feature. Whereas older ATA drives processed I/O requests as they came in (a model known as FIFO: first-in, first-out), SATA was enhanced with native command queuing (NCQ). NCQ allows the drive to plan its platter access in a fashion that minimizes read head travel. This helped SATA close some of the gap with the optimizations built into the electronics of SCSI drives. SATA drives are most commonly found in desktop and laptop computers, although they are becoming more common in entry-level and even mid-tier NAS and SAN devices.
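The benefit of letting the drive reorder its queue can be seen by counting how far the head travels. The Python sketch below compares FIFO ordering with a shortest-seek-first ordering, used here as a simplified stand-in for command queuing: real NCQ firmware also weighs rotational position, which this model ignores. The function names and track numbers are hypothetical.

```python
from typing import List


def total_head_travel(start_track: int, order: List[int]) -> int:
    """Tracks crossed by the head when servicing requests in the given order."""
    travel, position = 0, start_track
    for track in order:
        travel += abs(track - position)
        position = track
    return travel


def fifo_order(requests: List[int]) -> List[int]:
    """Older ATA behavior: service requests exactly as they arrived."""
    return list(requests)


def nearest_first_order(start_track: int, requests: List[int]) -> List[int]:
    """Simplified queuing: always move to the closest pending track next
    (shortest-seek-first). Rotational position is ignored."""
    pending, position, order = list(requests), start_track, []
    while pending:
        nxt = min(pending, key=lambda t: abs(t - position))
        pending.remove(nxt)
        order.append(nxt)
        position = nxt
    return order


if __name__ == "__main__":
    head, queue = 50, [90, 10, 80, 20, 70]  # hypothetical track numbers
    fifo = fifo_order(queue)
    queued = nearest_first_order(head, queue)
    print("FIFO:", fifo, "travel =", total_head_travel(head, fifo))
    print("Queued:", queued, "travel =", total_head_travel(head, queued))
```

For this queue, FIFO moves the head across 300 tracks, while the reordered sequence `[70, 80, 90, 20, 10]` needs only 120. Pure shortest-seek-first can leave a far-away request waiting if nearer ones keep arriving, which is one reason real queuing logic is more sophisticated than this sketch.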

SAS drives are available in speeds up to 15,000 RPM, whereas SATA tops out at 10,000 RPM. SAS drives usually have superior seek times to SATA. They also tend to be more reliable, but this has less to do with any particular strengths and weaknesses of either bus type and more to do with how manufacturers focus on build quality. Of course, all this translates directly to cost. Quality aside, SATA’s one benefit over SAS is that it comes in higher maximum capacities. Both bus types currently have a maximum speed of six gigabits per second, but given the seek and rotational delays described above, it is not realistic to expect any one spinning drive to reach that speed on a regular basis.
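The six gigabits per second figure is a line rate, not a data rate. The sketch below converts a serial link’s line rate into payload bandwidth, assuming the 8b/10b encoding used by 6 Gb/s SATA and SAS and ignoring protocol framing; the function names and the 200 MB/s drive figure are hypothetical.

```python
def usable_mb_per_s(line_rate_gbps: float, data_bits: int = 8, coded_bits: int = 10) -> float:
    """Payload bandwidth, in MB/s, of a serial link after line encoding.

    With 8b/10b encoding, every 8 data bits are sent as 10 bits on the wire.
    Protocol framing and command overhead are ignored.
    """
    payload_bits_per_s = line_rate_gbps * 1e9 * data_bits / coded_bits
    return payload_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


def link_utilization(drive_mb_per_s: float, line_rate_gbps: float) -> float:
    """Fraction of the link's payload bandwidth one drive's transfer rate would use."""
    return drive_mb_per_s / usable_mb_per_s(line_rate_gbps)


if __name__ == "__main__":
    print(f"6 Gb/s link: {usable_mb_per_s(6):.0f} MB/s of payload")
    # 200 MB/s is a hypothetical best-case sustained rate for one spinning disk.
    print(f"Hypothetical 200 MB/s drive uses {link_utilization(200, 6):.0%} of it")
```

A 6 Gb/s link carries about 600 MB/s of payload, so even a drive sustaining a hypothetical 200 MB/s on its best day fills only a third of it, and random I/O brings that figure far lower.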

You will sometimes find hard drive systems labelled as near-line. Unfortunately, this isn’t a tightly controlled term, so you may find it used in various ways, but for a pure hard drive system it most properly refers to a SATA drive that can be plugged into a SAS system. This is intended mainly for mixed-drive systems where some drives need the higher speed of SAS and others need the higher capacity of SATA.

SSDs can use either bus type. Because they have no moving parts and therefore none of the performance issues of spinning disks, the differences between SAS and SATA SSDs are largely unnoticeable.

External Connections

If you’re going to use external storage, your choices are actually pretty simple. You can use a direct-connect technology, usually something with an external SAS cable. You can use Fibre Channel, which can direct-connect or go through an FC switch. And, you can use iSCSI, which travels over regular Ethernet hardware and cables. Pretty straightforward. The issue here is typically cost. FC is lossless and gets you the maximum speed of the wire… assuming your disks can actually push/pull that much data. iSCSI has the standard TCP overhead. It can be reduced using jumbo frames, but it’s still there. However, if you’ve got 10GbE connections, then the overhead is probably of little concern. Another option is SMB 3.0. Like iSCSI, this works over regular Ethernet.
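To put a rough number on that TCP overhead and on what jumbo frames buy you, the sketch below computes how much of each full-sized Ethernet frame on the wire is TCP payload, which is where iSCSI data rides. It is a simplification: it assumes untagged frames, IPv4 and TCP headers without options, and 9,000 bytes as a typical jumbo MTU, and it ignores iSCSI’s own PDU headers and the acknowledgment traffic flowing back. The function name is hypothetical.

```python
ETHERNET_WIRE_OVERHEAD = 38  # preamble+SFD 8, MAC header 14, FCS 4, inter-frame gap 12
IPV4_HEADER = 20             # IPv4 header without options
TCP_HEADER = 20              # TCP header without options


def tcp_payload_efficiency(mtu: int) -> float:
    """Fraction of Ethernet wire time that carries TCP payload (e.g. iSCSI data)
    for full-sized frames. Ignores iSCSI PDU headers, ACKs, and TCP options."""
    payload = mtu - IPV4_HEADER - TCP_HEADER
    on_wire = mtu + ETHERNET_WIRE_OVERHEAD
    return payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: {tcp_payload_efficiency(mtu):.1%} of wire time is TCP payload")
```

Under these assumptions a standard 1,500-byte MTU puts about 94.9% of the wire to work on payload, and a 9,000-byte jumbo MTU about 99.1%. The overhead shrinks, but as noted above, it is still there.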

Why This is All So Important

So, why have a whole blog post dedicated to really basic material? Well, if you truly know all these things, then I apologize, as I probably wasted your time. But even though this all seems like common knowledge, many administrators come into this field midstream and have to piece it all together through osmosis, and a lot of them come out understanding a lot less than they think they do.

For instance, I once watched a couple of “storage experts” insist on connecting an array using a pair of 8Gb Fibre Channel connectors. Their reasoning was that the links would aggregate and get 16Gbps of throughput in and out of the drives. That’s all well and good, except that there were only 5 drives in the array, and they were 10k. Know when those drives are going to be able to push 16Gbps? Never. Of course, the second link does provide redundancy, since there’s some chance that one of the lines could fail. Know when those 5 drives are going to reach 8Gbps? Also never. “Cache!” they exclaimed. Sure, but at 8Gbps the entire cache would be transferred so quickly that the link still wouldn’t really see 8Gbps. “Expansion!” they cried. OK, but the system had been in place for a decade and experienced about 5% data growth annually, and only 30% of the presented capacity of these 5 drives was being used. So, the drives and device would be long out of warranty by the time a sixth drive needed to be added. Even a sixth drive wouldn’t put the array in a place where it could use 8Gbps.
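Both “nevers” in that story can be checked with a little arithmetic. The Python sketch below uses hypothetical inputs that do not come from the array in question: roughly 133 random IOPS per 10k drive, 64 KiB per I/O, and “5% data growth” read as compound annual growth. It focuses on random I/O, the usual pattern for virtualization, and the function names are invented for illustration.

```python
def random_throughput_gbps(drives: int, iops_per_drive: float, io_size_kib: float) -> float:
    """Aggregate random-I/O throughput of an array, in gigabits per second.

    Ignores RAID write penalties, cache hits, and sequential streaming.
    """
    bytes_per_s = drives * iops_per_drive * io_size_kib * 1024
    return bytes_per_s * 8 / 1e9


def years_until_full(used_fraction: float, annual_growth: float) -> int:
    """Whole years of compound data growth until the array reaches 100% used."""
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive or the array never fills")
    years = 0
    while used_fraction < 1.0:
        used_fraction *= 1 + annual_growth
        years += 1
    return years


if __name__ == "__main__":
    # Hypothetical inputs: ~133 random IOPS per 10k drive, 64 KiB per I/O.
    gbps = random_throughput_gbps(drives=5, iops_per_drive=133, io_size_kib=64)
    print(f"5 x 10k drives, random I/O: ~{gbps:.2f} Gbps against an 8 Gbps link")
    print(f"30% used at 5% yearly growth: full in {years_until_full(0.30, 0.05)} years")
```

Under those assumptions the array manages roughly 0.35 Gbps of random I/O, and it would take about 25 years of 5% compound growth to fill the existing drives, comfortably beyond any warranty period.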

This was a discussion I was having with experts. People paid to do nothing but sit around and think about storage all day. People who will be consulting for you when you want to put storage in your systems. Look around a bit, and you’ll see all sorts of people making mistakes like this all the time. Remedial or not, this information is important, and a lot further from the realm of common knowledge than you might think.

What’s Next

The next part of this series is going to look at how you combine drives to offset their performance and reliability issues. It will then pull all of this together to start down the path of architecting a good storage solution for your Hyper-V system.


7 thoughts on "Storage and Hyper-V Part 1: Fundamentals"

Great overview of the very basics any storage infrastructure builds upon.

From my point of view it absolutely makes sense to make sure all readers are at least on the same page with the very basics.

Just to add my 2 cents in regards to “experts”:

I was visiting a customer whose employees were suffering from slow ERP application performance. While they have their own IT guys, they were not able to pinpoint the issue. Since I was there for a project meeting about ERP functionality and wasn’t really there to fix those infrastructure issues, I told the local IT team to look into the issue, since I know for a fact that this ERP software can run a lot faster on a lot slower hardware.

I also told them that CPU and RAM usage rarely seemed to get over 20%, so the VMs seemed oversized. My suggestion was to put the SQL Server and ERP Server on the same ESX host and, if that didn’t help, to check SQL Server performance or storage in general.

I was there again yesterday, and guess what?

They doubled the vCPUs to 8 and RAM to 16GB.

Guess some more… it’s still slow; nothing changed.

I have customers running that software with 5 times their load on 4 vCores and 6GB of RAM, and it runs at least twice as fast.

They now have a hugely oversized VM that is underperforming because some “experts” probably have no clue about storage, and especially about how to measure its performance.

So, knowing the basics can’t be wrong.

Regards, Juri

I agree with Juri: great overview. Especially of the constraints that you’re going to be banging your head against in real life, which, to me, is the core of what engineering is all about.

Thanks for the great post!

Very informative and helpful for what is a very headache-inducing topic. I’ve had many discussions with IT regarding storage, and every day there seems to be a different answer.

My only suggestion would be to flesh out the “External Connections” section a bit more. Direct, FC, iSCSI, and SMBv3 are all important parts to designing a complete storage solution. Adding the same detail to this section that is found in the others would be awesome.

Thanks Eric!

Nice article

One thing, though. Your view on sector size is extremely outdated. Drives stopped using a constant number of sectors per track in the late ’90s, if not earlier. Otherwise, it’s a waste of density and capacity; all modern drives use a variable number of sectors per track to maintain a mostly constant linear bit density (bits per linear mm of the track) instead of a constant angular density (bits per angle of rotation) across the whole platter.

I stand corrected. As I researched your comment, I found many terms that I recall. It appears that this was something I knew about, then forgot. I’ll update the article. Thanks!

Great overview and well constructed. I understood certain parts before but this pieced it together very well and gave a good grounding.