
Table of contents

- What is the Hyper-V Virtual Switch?

- What are Virtual Network Adapters?

- Modes for the Hyper-V Virtual Switch

- Conceptualizing the External Virtual Switch

- What are the Features of the Hyper-V Virtual Switch?

- Why Would I Use an Internal or Private Virtual Switch?

- How Does Teaming Impact the Virtual Switch?

- What About the Hyper-V Virtual Switch and Clustering?

- Should I Use Multiple Hyper-V Virtual Switches?

- What About VMQ on the Hyper-V Virtual Switch?

Note: This article was originally published in June 2018. It has been fully updated to be current as of September 2019.

Networking in Hyper-V commonly confuses newcomers, even those with experience in other hypervisors. The Hyper-V virtual switch presents one of the product’s steeper initial conceptual hurdles. Fortunately, once you invest the time to learn about it, you will find it quite simple. Digesting this article will provide the necessary knowledge to properly plan a Hyper-V virtual switch and understand how it will operate in production. If you already know all about the Hyper-V virtual switch, you can skip ahead to a guide on how to create one.

For an overall guide to Hyper-V networking, read my post titled “The Complete Guide to Hyper-V Networking”.

A side note on System Center Virtual Machine Manager: I will not spend any time in this article on network configuration for SCVMM. Because that product needlessly over-complicates the situation with multiple pointless layers, the solid grounding on the Hyper-V virtual switch that can be obtained from this article is absolutely critical if you don’t want to be hopelessly lost in VMM.

What is the Hyper-V Virtual Switch?

The very first thing that you must understand is that Hyper-V’s virtual switch is truly a virtual switch. That is to say, it is a software construct operating within the active memory of a Hyper-V host that performs Ethernet frame switching functionality. It can use single or teamed physical network adapters to serve as uplinks to a physical switch in order to communicate with other computers on the physical network. Hyper-V provides virtual network adapters to its virtual machines, and those communicate directly with the virtual switch.
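To make "performs Ethernet frame switching" concrete, here is a toy model of the forwarding decision. It is a minimal Python sketch under simplifying assumptions: no broadcast or multicast flooding, no VLANs, and frames for any MAC address not attached to the switch are assumed to go out the uplink when one exists. The class and method names are invented for illustration; this is not how Hyper-V is implemented internally.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Frame:
    src_mac: str
    dst_mac: str


@dataclass
class ToyVirtualSwitch:
    """Hypothetical layer-2 model; not Hyper-V's actual implementation."""
    has_uplink: bool  # True when bound to a physical adapter or team (external switch)
    ports: Dict[str, str] = field(default_factory=dict)  # MAC -> attached virtual adapter

    def attach(self, adapter: str, mac: str) -> None:
        # The switch knows every attached virtual adapter's MAC address.
        self.ports[mac.lower()] = adapter

    def forward(self, frame: Frame) -> Optional[str]:
        """Return the destination adapter, "uplink", or None if the frame is dropped."""
        dst = frame.dst_mac.lower()
        if dst in self.ports:
            return self.ports[dst]  # delivered in memory; the physical NIC is not involved
        if self.has_uplink:
            return "uplink"  # assumed to live somewhere on the physical network
        return None  # no uplink, so there is nowhere else for the frame to go


vswitch = ToyVirtualSwitch(has_uplink=True)
vswitch.attach("VM1", "00-15-5D-00-00-01")
vswitch.attach("VM2", "00-15-5D-00-00-02")
print(vswitch.forward(Frame("00-15-5D-00-00-01", "00-15-5D-00-00-02")))  # VM2
print(vswitch.forward(Frame("00-15-5D-00-00-01", "AA-BB-CC-DD-EE-FF")))  # uplink
```

The MAC addresses are made up. The key point the model captures is that a frame between two adapters on the same switch never needs the physical adapter at all.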


What are Virtual Network Adapters?

Like the Hyper-V virtual switch, virtual network adapters are mostly self-explanatory. In more detail, they are software constructs that are responsible for receiving and transmitting Ethernet frames into and out of their assigned virtual machine or the management operating system. This article focuses on the virtual switch, so I will only be giving the virtual adapters enough attention to ensure understanding of the switch.

Virtual Machine Network Adapters

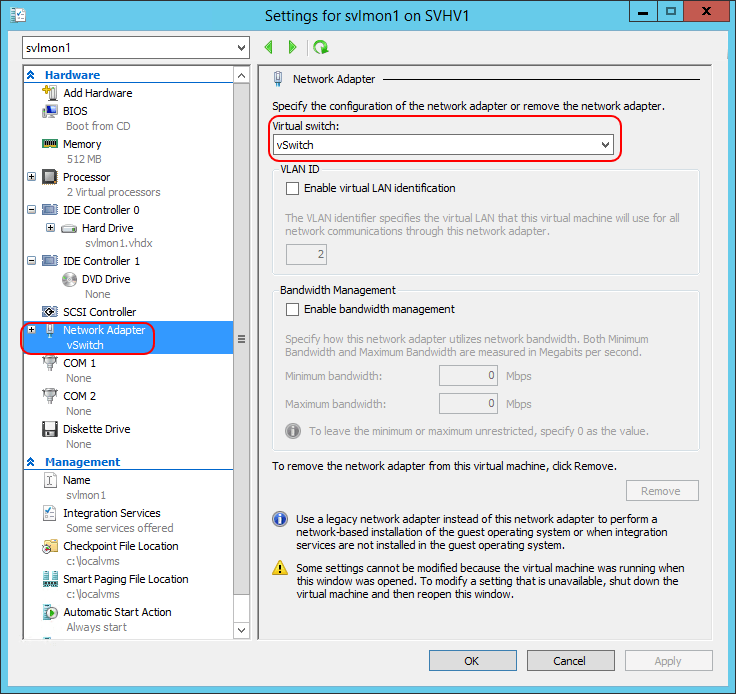

The most common virtual network adapters belong to virtual machines. They can be seen in both PowerShell (Get-VMNetworkAdapter) and in Hyper-V Manager’s GUI. The screenshot below is an example:

Example Virtual Adapter

I have drawn a red box on the left where the adapter appears in the hardware list. On the right, I have drawn another to show the virtual switch that this particular adapter connects to. You can change it at any time to any other virtual switch on the host or “Not Connected”, which is the virtual equivalent of leaving the adapter unplugged. There is no virtual equivalent of a “crossover” cable, so you cannot directly connect one virtual adapter to another.

Within a guest, virtual adapters appear in all the same places as a physical adapter.

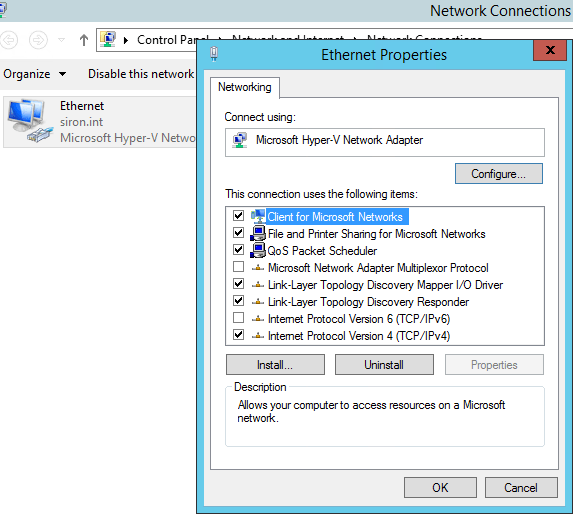

Virtual Adapter from Within a Guest

Management Operating System Virtual Adapters

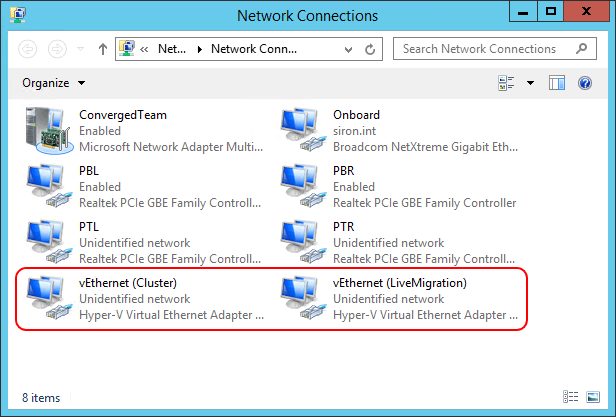

You can also create virtual adapters for use by the management operating system. You can see them in PowerShell and in the same locations that you’d find a physical adapter. The system will assign them names that follow the pattern vEthernet (<name>).

Virtual Adapters in the Management Operating System

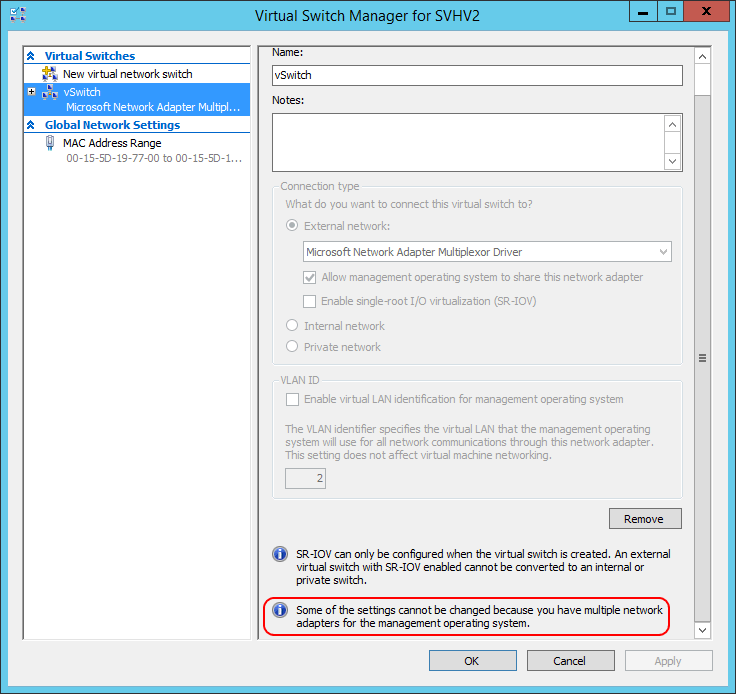

In contrast to virtual adapters for virtual machines, your options for managing virtual adapters in the management operating system are a bit limited. If you only have one, you can use Hyper-V Manager’s virtual network manager to set the VLAN. If you have multiple, as I do, you can’t even do that in the GUI:

Virtual Switch Manager with Multiple Host Virtual Network Adapters

PowerShell is the only option for control in this case. PowerShell is also the only way to view or modify a number of management OS virtual adapter settings. All of that, however, is a topic for another article.

Modes for the Hyper-V Virtual Switch

The Hyper-V virtual switch presents three different operational modes.

Private Virtual Switch

A Hyper-V virtual switch in private mode allows communications only between virtual adapters connected to virtual machines.

Internal Virtual Switch

A Hyper-V virtual switch in internal mode allows communications only between virtual adapters connected to virtual machines and the management operating system.

External Virtual Switch

A Hyper-V virtual switch in external mode allows communications between virtual adapters connected to virtual machines and the management operating system. It uses single or teamed physical adapters to connect to a physical switch, thereby allowing communications with other systems.

Deeper Explanation of the Hyper-V Switch Modes

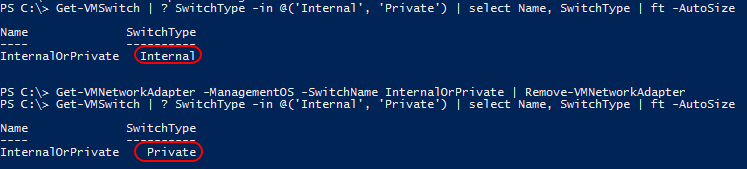

The private and internal switch types only differ by the absence or presence of a virtual adapter for the management operating system, respectively. In fact, you can turn an internal switch into a private switch just by removing any virtual adapters for the management operating system and vice versa:

Convert Internal Virtual Switch to Private

With both the Internal and Private virtual switches, adapters can only communicate with other adapters on the same switch. If you need them to be able to talk to adapters on other switches, one of the operating systems will need to have adapters on other switches and be configured as a router.

The external virtual switch relies on one or more physical adapters. These adapters act as an uplink to the rest of your physical network. Like the internal and private switches, virtual adapters on an external switch cannot directly communicate with adapters on any other virtual switch.
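The rules above are simple enough to write down directly. The following Python sketch is a conceptual model of this description, not the way Hyper-V stores a switch's type; the SwitchLayout class and both helper functions are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field
from typing import Iterable, Set


@dataclass
class SwitchLayout:
    """Hypothetical description of one virtual switch and what is attached to it."""
    name: str
    physical_uplink: bool      # bound to a physical adapter or team?
    management_os_vnics: int   # vEthernet adapters for the management OS
    vm_adapters: Set[str] = field(default_factory=set)


def switch_mode(sw: SwitchLayout) -> str:
    # A physical uplink is the only thing that makes a switch External.
    if sw.physical_uplink:
        return "External"
    # Without one, Internal and Private differ only by a management OS vNIC.
    return "Internal" if sw.management_os_vnics > 0 else "Private"


def same_switch(a: str, b: str, switches: Iterable[SwitchLayout]) -> bool:
    # Adapters talk directly only when both sit on the same switch;
    # anything else needs a router with a presence on both switches.
    return any(a in sw.vm_adapters and b in sw.vm_adapters for sw in switches)


lab = SwitchLayout("Lab", physical_uplink=False, management_os_vnics=1, vm_adapters={"VM1", "VM2"})
dmz = SwitchLayout("DMZ", physical_uplink=True, management_os_vnics=0, vm_adapters={"VM3"})
print(switch_mode(lab), switch_mode(dmz))     # Internal External
print(same_switch("VM1", "VM2", [lab, dmz]))  # True
print(same_switch("VM1", "VM3", [lab, dmz]))  # False
```

Note that removing the management OS vNIC from "Lab" would flip it to Private without touching anything else, which is exactly the conversion described above.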

Important Note: the terms Private and External for the Hyper-V switch are commonly confused with private and public IP addresses. They have nothing in common.


Conceptualizing the External Virtual Switch

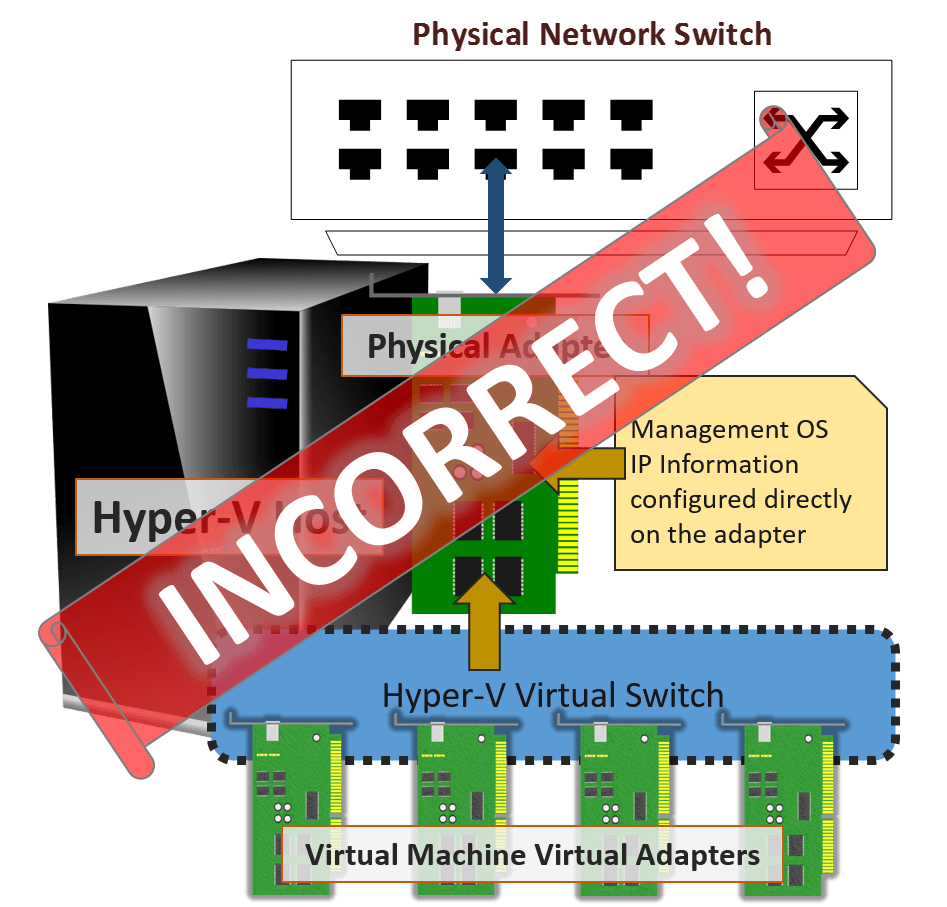

Part of what makes understanding the external virtual switch difficult is the way that the related settings are worded. In the Hyper-V Manager GUI, it says Allow management operating system to share this network adapter. In the PowerShell New-VMSwitch, there’s an -AllowManagementOS boolean parameter which is no better, and its description — “Specifies whether the parent partition (i.e. the management operating system) is to have access to the physical NIC bound to the virtual switch to be created.” — makes it worse. What happens far too often is that people read these and think of them like this:

Incorrect Visualization of the Hyper-V Virtual Switch

The single most important thing to understand is that a physical adapter or team used by a Hyper-V virtual switch is not, and cannot be, used for anything else. The adapter is not “shared” with anything. You cannot configure TCP/IP information on it. After the Hyper-V virtual switch is bound to an adapter or team (the binding appears in the adapter’s properties as Hyper-V Extensible Virtual Switch), tinkering with any other clients, protocols, or services on that adapter will at best have no effect and at worst break your virtual switch.

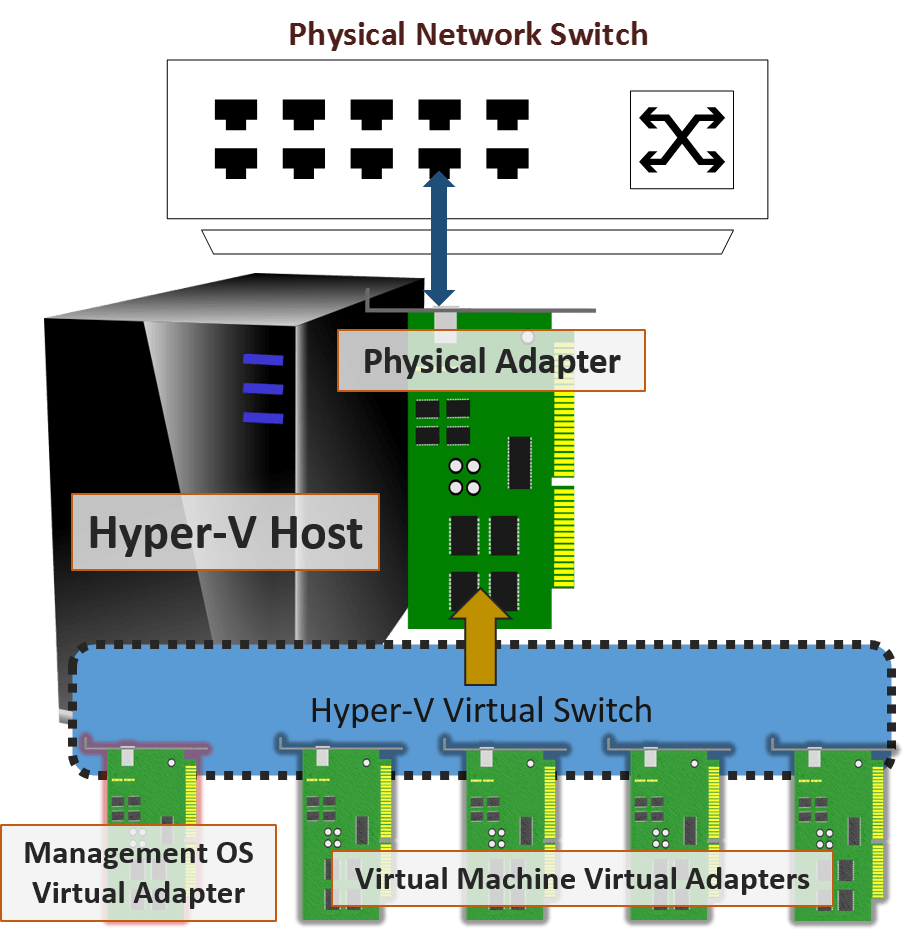

Instead of the above, what really happens when you “share” the adapter is this:

Correct Visualization of the External Hyper-V Virtual Switch

The so-called “sharing” happens by creating a virtual adapter for the management operating system and attaching it to the same virtual switch that the virtual machines will use. You can add or remove this adapter at any time without impacting the virtual switch at all. I see many, many people creating virtual adapters for the management operating system by clicking that Allow management operating system to share this network adapter checkbox because they believe it’s the only way to get the virtual switch to participate in the network for the virtual machines. If that’s you, don’t feel bad. I did the very same thing on my first 2008 R2 deployment. It’s OK to blame the crummy wording in the tools because that is exactly what threw me off as well. The only reason to check the box is if you need the management operating system to be able to communicate directly with the virtual machines on the created virtual switch or with the physical network connected to the particular physical adapter or team that hosts the virtual switch. If you’re going to use a separate, dedicated physical adapter or team just for management traffic, then don’t use the “share” option. If you’re going to use network convergence, that’s when you want to “share” it.

If you’re not certain what to do in the beginning, it doesn’t really matter. You can always add or remove virtual adapters after the switch is created. Personally, I never create an adapter on the virtual switch by using the Allow… checkbox or the -AllowManagementOS parameter. I always use PowerShell to create any necessary virtual adapters afterward. I have my own reasons for doing so, but it also helps with any conceptual issues.

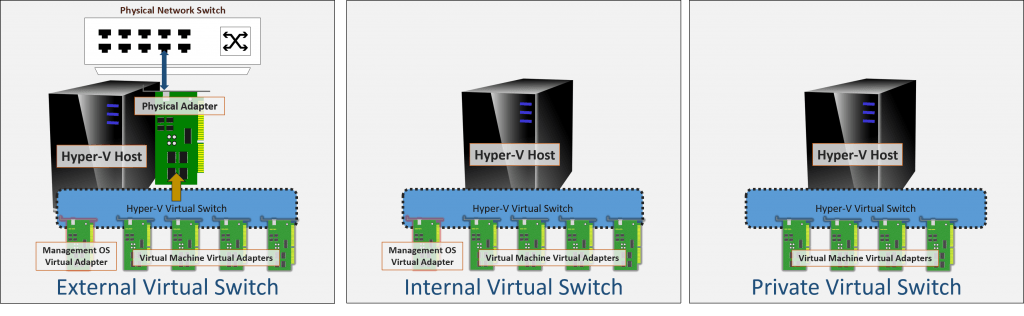

Once you understand the above, you can easily see that the differences even between the external and internal switch types are not very large. Look at all three types visualized side-by-side:

Side-by-side Visualization of All Switch Modes

I don’t recommend it, but it is possible to convert any Hyper-V virtual switch to/from the external type by adding or removing the physical adapter/team.

What are the Features of the Hyper-V Virtual Switch?

The Hyper-V virtual switch exposes several features natively.

- Ethernet Frame Switching

The Hyper-V virtual switch is able to read the MAC addresses in an Ethernet frame and deliver the frame to the correct destination if that destination is present on the virtual switch. It is aware of the MAC addresses of all virtual network adapters attached to it. An external virtual switch also knows about the MAC addresses on any layer-2 networks that it has visibility to via its assigned physical adapter or team. The Hyper-V virtual switch does not have any native routing (layer 3) capability. You will need to provide a hardware or software router if you need that type of functionality. Windows 10 and Server 2016 did introduce some NAT capabilities; Microsoft’s documentation site explains how to get started with them.

- 802.1Q VLAN, Access Mode

Virtual adapters for both the management operating system and virtual machines can be assigned to a VLAN. The virtual switch will only deliver Ethernet frames to virtual adapters within the same VLAN, just like a physical switch. If trunking is properly configured on the connected physical switch port, VLAN traffic will extend to the physical network as expected. You do NOT need to configure multiple virtual switches; every Hyper-V virtual switch automatically allows untagged frames and all valid VLAN IDs (1 through 4094; 802.1Q reserves 0 and 4095).

- 802.1Q VLAN, Trunk Mode

First, I want to point out that more than 90% of the people who try to configure Hyper-V in trunk mode do not need trunk mode. This setting applies only to individual virtual network adapters. When you configure a virtual adapter in trunk mode, Hyper-V will pass allowed frames with the 802.1Q tag intact. If software in the virtual machine does not know how to process frames with those tags, the virtual machine’s operating system will treat the frames as malformed and drop them. Very few software applications can even interact with the network adapters at a point where they see the tag. Not even Microsoft’s Routing and Remote Access Service can do it. If you want a virtual machine to have a layer 3 endpoint presence in multiple VLANs, then you need to use individual adapters in access mode, not trunk mode.

- 802.1p Quality of Service

802.1p uses a 3-bit priority field (the Priority Code Point) inside the 802.1Q tag of the Ethernet frame to mark traffic as belonging to a particular priority group. All switches along the path that understand 802.1p will then prioritize it appropriately.

- Hyper-V Quality of Service

Hyper-V has its own quality of service for its virtual switch, but, unlike 802.1p, it does not extend to the physical network. You can guarantee a minimum and/or cap the maximum outbound speed of a virtual adapter. When your virtual switch is in Absolute mode, the minimum is expressed as an absolute bandwidth figure; when it is in Weight mode, the minimum is expressed as a relative weight. The mode must be selected when the virtual switch is created. Use this when you need some level of QoS but your physical infrastructure does not support 802.1p.

- SR-IOV (Single Root I/O Virtualization)

SR-IOV requires compatible hardware, both on your motherboard and physical network adapter(s). When enabled, you will have the option to connect a limited number of virtual adapters directly to Virtual Functions, which are special constructs exposed by your physical network adapters. The Hyper-V virtual switch has only very minimal participation in any IOV functions, meaning that you will have access to very nearly the full speed of the hardware. This performance boost does come at a cost, however: SR-IOV network adapters cannot function if the virtual switch is assigned to an LBFO adapter team. SR-IOV does work with the Switch Embedded Teaming (SET) feature that shipped with 2016, although not with all manufacturers or all adapters.

- Extensibility

Microsoft publishes an API that anyone can use to make their own filter drivers for the Hyper-V virtual switch. For instance, System Center Virtual Machine Manager provides a driver that enables Hyper-V Network Virtualization (HNV). Other possibilities include network scanning tools.
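The access-versus-trunk distinction in the VLAN items above comes down to two questions per frame: is it delivered to this adapter, and does the guest see the 802.1Q tag? The following minimal Python sketch models only that rule. It is an illustration under simplifying assumptions (no native VLAN handling, no QoS), and the class and field names are hypothetical. In Hyper-V itself, these settings are applied with the Set-VMNetworkAdapterVlan cmdlet, using its -Access or -Trunk parameters.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional, Tuple


def valid_vlan(vlan_id: int) -> bool:
    # 802.1Q carries a 12-bit VLAN ID; 0 and 4095 are reserved, leaving 1-4094.
    return 1 <= vlan_id <= 4094


@dataclass(frozen=True)
class AdapterVlan:
    """Hypothetical per-adapter VLAN setting; field names are illustrative."""
    mode: str = "untagged"                 # "untagged", "access", or "trunk"
    access_vlan: Optional[int] = None      # used in access mode
    allowed: FrozenSet[int] = frozenset()  # used in trunk mode


def deliver(frame_vlan: Optional[int], cfg: AdapterVlan) -> Tuple[bool, Optional[int]]:
    """Return (delivered?, tag the guest sees). frame_vlan=None means untagged."""
    if frame_vlan is not None and not valid_vlan(frame_vlan):
        return (False, None)
    if cfg.mode == "untagged":
        return (frame_vlan is None, None)
    if cfg.mode == "access":
        # Only the adapter's own VLAN; the tag is removed before the guest sees it.
        return (frame_vlan == cfg.access_vlan, None)
    if cfg.mode == "trunk":
        # Allowed VLANs arrive with the tag intact, so guest software must handle it.
        # Native/untagged handling is deliberately left out of this sketch.
        return (True, frame_vlan) if frame_vlan in cfg.allowed else (False, None)
    raise ValueError(f"unknown mode: {cfg.mode}")


web = AdapterVlan("access", access_vlan=10)
router = AdapterVlan("trunk", allowed=frozenset({10, 20}))
print(deliver(10, web))     # (True, None)
print(deliver(20, web))     # (False, None)
print(deliver(20, router))  # (True, 20)
```

The third line is the trunk-mode trap described above: the frame arrives, but still tagged, and a guest that cannot handle the tag will drop it.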

Why Would I Use an Internal or Private Virtual Switch?

There is exactly one reason to use an internal or private virtual switch: isolation. You can be absolutely certain that no traffic that moves on an internal or private switch will ever leave the host. You can partially isolate guests by placing a VM with routing capabilities on the isolation network(s) and an external switch. You can look at the first diagram on my software router post to get an idea of what I’m talking about.

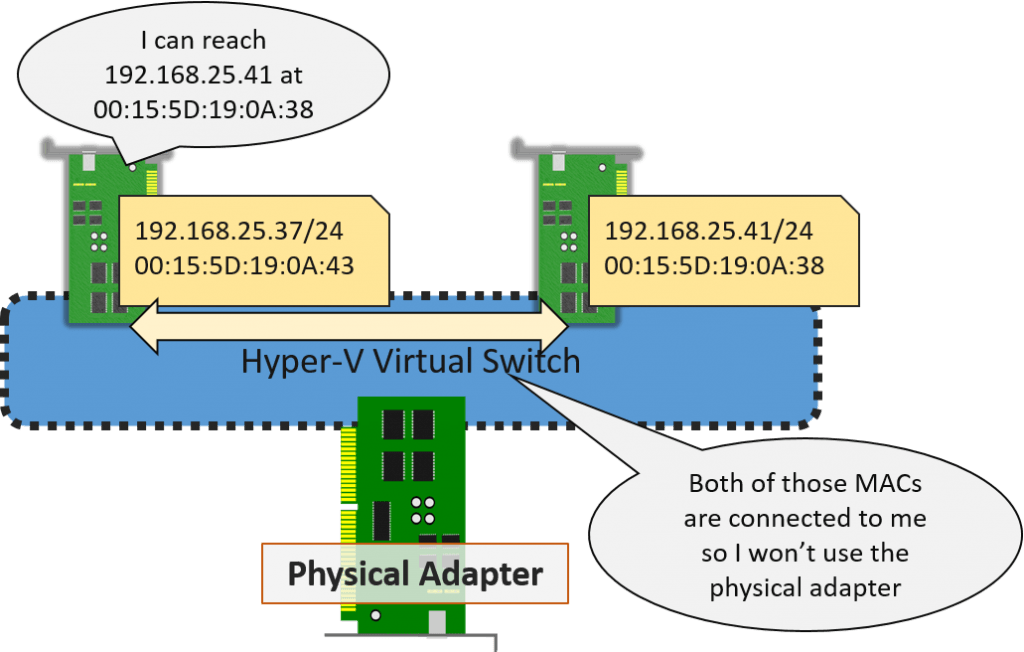

Internal and private virtual switches do not provide a performance boost over the external virtual switch. This is because the virtual switch is smart enough to not use the physical network when delivering packets from one virtual adapter to the MAC address of another virtual adapter on the same virtual switch. However, if layer 3 traffic requires traffic to pass through an external router, traffic might leave the host before returning.

The following illustrates communications between two virtual adapters on the same external virtual switch within the same subnet:

Virtual Network Adapters on the same Subnet

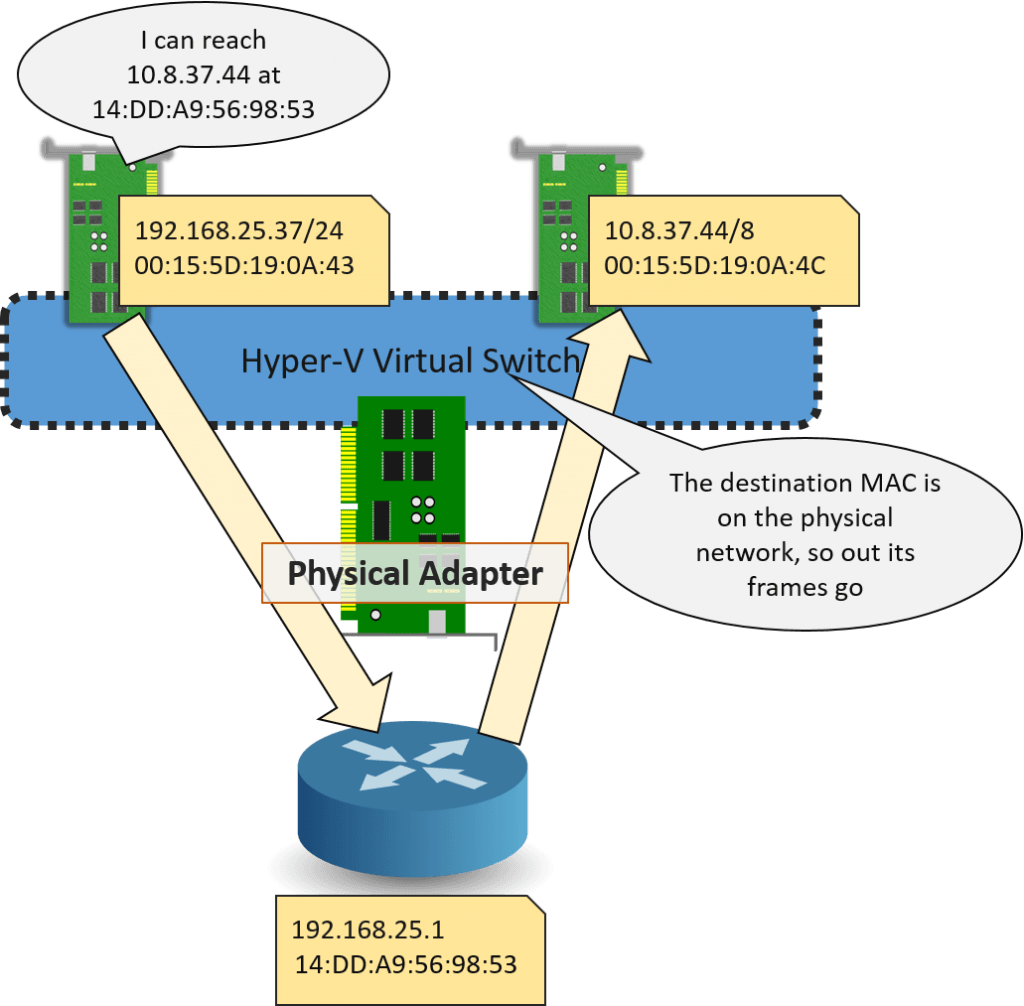

If the virtual adapters are on different subnets and the router sits on the physical network, this is what happens:

Virtual Adapters on Different Subnets

What’s happened in this second scenario is that the virtual adapters are using IP addresses that belong to different subnets. Because of the way that TCP/IP works (not the virtual switch!), packets between these two adapters must be transmitted through a router. Remember that the Hyper-V virtual switch is a layer 2 device that does not perform routing; it is not aware of IP addresses. If the way that Ethernet and IP work is new to you, or you need a refresher, I’ve got an article about it.
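The decision that sends traffic to the router is made by the sending operating system’s TCP/IP stack, not by the virtual switch. Here is a minimal Python sketch of that host-side decision using the standard ipaddress module and made-up addresses; it assumes a single default gateway, whereas real stacks consult a full routing table.

```python
import ipaddress


def next_hop(src_interface: str, dst_ip: str, default_gateway: str) -> str:
    """Return the IP address whose MAC the sender will put in the Ethernet frame."""
    iface = ipaddress.ip_interface(src_interface)  # address plus prefix, e.g. "192.168.10.5/24"
    if ipaddress.ip_address(dst_ip) in iface.network:
        return dst_ip          # on-link: ARP for the destination itself
    return default_gateway     # off-link: hand the packet to the router


print(next_hop("192.168.10.5/24", "192.168.10.6", "192.168.10.1"))  # 192.168.10.6
print(next_hop("192.168.10.5/24", "192.168.20.6", "192.168.10.1"))  # 192.168.10.1
```

In the second case the frame is addressed to the router’s MAC address, so if that router lives on the physical network, the frame leaves through the uplink even though the destination VM sits on the same virtual switch.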

How Does Teaming Impact the Virtual Switch?

There are a great many ifs, ands, and buts involved when discussing the virtual switch and network adapter teams. The most important points:

- Bandwidth aggregation does not occur the way that most people think it does.

I see this sort of complaint a lot: “I teamed 6 1GbE NICs for my Hyper-V team and then copied a file from a virtual machine to my file server and it didn’t go at 6Gbps and now I’m really mad at Hyper-V!” There are three problems. First, file copy is not a network speed testing tool in any sense. Second, that individual probably doesn’t have a hard disk subsystem at one end or the other that can sustain 6Gbps anyway. Third, Ethernet and TCP/IP don’t work that way, never mind the speed of the disks or the Hyper-V virtual switch. If you want a visualization for why teaming won’t make a file copy go faster, this older post has a nice explanatory picture. I have a more recent article with a more in-depth technical description. The TL;DR summary: using adapter teaming for the Hyper-V virtual switch improves performance for all virtual adapters in aggregate, not at an individual level.

- Almost everyone overestimates how much network performance they need. Seriously, file copy isn’t just a bad testing tool, it also sets unrealistic expectations. Your average user doesn’t sit around copying multi-gigabyte files all day long. They mostly move a few bits here and there and watch streaming 1.2 Mbps videos of cats.

- Using faster adapters gives better results than using bigger teams. If you really need performance (I can help you figure out if that’s you), a few fast adapters will serve you better than a big team of slow ones. I am as exasperated as anyone at how often 10GbE is oversold to institutions that can barely stress a 100Mbps network, but I am equally exasperated at people who try to get the equivalent of 10GbE out of ten 1GbE connections.

- SR-IOV doesn’t work in LBFO, but it might with SET. I mentioned this above, but it’s worth reiterating.
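Why doesn’t one file copy go faster over a team? Teams distribute traffic by assigning each flow (or, in Hyper-V port mode, each virtual adapter) to a single member. The Python sketch below uses an arbitrary SHA-256 hash of a connection’s addresses and ports as a stand-in; that hash is an assumption for illustration, not the actual LBFO or SET algorithm, whose behavior depends on the load-balancing mode you choose.

```python
import hashlib
from collections import Counter
from typing import Sequence, Tuple

Flow = Tuple[str, str, int, int, str]  # src IP, dst IP, src port, dst port, protocol


def pick_member(flow: Flow, members: Sequence[str]) -> str:
    """Hash a flow onto one team member (illustrative stand-in for real algorithms)."""
    key = "|".join(map(str, flow)).encode()
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:4], "big")
    return members[bucket % len(members)]


team = [f"NIC{i}" for i in range(1, 7)]  # six hypothetical 1GbE members

# A single file copy is typically one flow: every packet hashes to the same member.
copy = ("10.0.0.5", "10.0.0.9", 50000, 445, "tcp")
print({pick_member(copy, team) for _ in range(1000)})  # a set with exactly one NIC

# Many flows (many VMs or users) spread across members, so aggregate throughput rises.
flows = [("10.0.0.5", "10.0.0.9", port, 445, "tcp") for port in range(50000, 50600)]
print(Counter(pick_member(f, team) for f in flows))
```

The single flow is capped at the speed of one member no matter how many members exist, which is the TL;DR above in executable form.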

What About the Hyper-V Virtual Switch and Clustering?

The simplest way to explain the relationship between the Hyper-V virtual switch and failover clustering is that the Hyper-V virtual switch is not a clustered role. The cluster is completely unaware of any virtual switches whatsoever. Hyper-V, of course, is very aware of them. When you attempt to migrate a virtual machine from one cluster node to another, Hyper-V will perform a sort of “pre-flight” check. One of those checks involves looking for a virtual switch on the destination host with the same name as every virtual switch that the migrating virtual machine connects to. If the destination host does not have a virtual switch with a matching name, Hyper-V will not migrate the virtual machine. With versions 2012 and later, you have the option to use “resource pools” of virtual switches, and in that case it will attempt to match the name of the resource pool instead of the switch, but the same name-matching rule applies.

Starting with the 2012 version, you cannot Live Migrate a clustered virtual machine if it is connected to an internal or a private virtual switch. That’s true even if the target host has a switch with the same name. The “pre-flight” check will fail. I have not tried it with a Shared Nothing Live Migration.
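The two pre-flight rules described above can be expressed as a short check. This is a hypothetical Python sketch, not Hyper-V’s actual validation logic; it ignores resource pools and every other pre-flight test, and the function and parameter names are invented for illustration.

```python
from typing import Dict, Iterable, List, Set


def preflight(vm_switches: Iterable[str],
              source_switch_types: Dict[str, str],
              destination_switches: Set[str]) -> List[str]:
    """Hypothetical check mirroring the two rules above; returns blocking problems."""
    problems = []
    for name in vm_switches:
        kind = source_switch_types.get(name, "Unknown")
        if kind in ("Internal", "Private"):
            problems.append(f"{name}: {kind} switches block Live Migration of clustered VMs")
        # This sketch compares names exactly; Hyper-V's own comparison rules are not modeled.
        if name not in destination_switches:
            problems.append(f"{name}: no virtual switch with this name on the destination")
    return problems


print(preflight(["vSwitch"], {"vSwitch": "External"}, {"vSwitch"}))  # []
print(preflight(["vSwitch"], {"vSwitch": "External"}, {"VMSwitch"}))
```

The practical takeaway is the same as in the text: give the external switch an identical name on every node.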

When a clustered virtual machine is Live Migrated, there is the potential for a minor service outage. The MAC address(es) of the virtual machine’s virtual adapter(s) must be unregistered from the source virtual switch (and therefore its attached physical switch) and re-registered at the destination. If you are using dynamic MAC addresses, then the MACs may be returned to the source host’s pool and replaced with new MACs on the destination host, in which case a similar de-registration and registration sequence will occur. All of this happens easily within the standard TCP timeout window, so in-flight TCP communications should succeed with a brief and potentially detectable hiccup. UDP and all other traffic without built-in retransmission (including ICMP and IGMP traffic; a ping is a typical example) that is in transit during this window will be lost. Hyper-V performs the de-registration and registration extremely quickly; the duration of the delay depends upon the amount of time necessary to propagate the MAC changes throughout the network.

Should I Use Multiple Hyper-V Virtual Switches?

In a word: no. That’s not a rule, but a very powerful guideline. Multiple virtual switches can cause quite a bit of processing overhead and rarely provide any benefit.

The exception would be if you really need to physically isolate network traffic. For instance, you might have a virtualized web server living in a DMZ and you don’t want any physical overlap between that DMZ and your internal networks. You’ll need to use multiple physical network adapters and multiple virtual switches to make that happen. Truthfully, VLANs should provide sufficient security and isolation.

You should definitely not create multiple virtual switches just to separate roles. For instance, don’t make one virtual switch for management operating system traffic and another for virtual machine traffic. The processing overhead outweighs any possible benefits. Create a team of all the adapters and converge as much traffic on it as possible. If you detect an issue, implement QoS.

What About VMQ on the Hyper-V Virtual Switch?

VMQ is a more advanced topic than I want to spend a lot of time on in this article, so we’re only going to touch on it briefly. VMQ allows incoming data for a virtual adapter to be processed on a CPU core other than the first physical core of the first physical CPU (0:0). When VMQ is not in effect, all inbound traffic is processed on 0:0. Keep these points in mind:

- If you are using gigabit adapters, VMQ is pointless. CPU 0:0 can handle many 1GbE adapters. Disable VMQ if your 1GbE adapters support it. Most of them do not implement VMQ properly and will cause a traffic slowdown.

- Not all 10GbE adapters implement VMQ properly. If your virtual machines seem to struggle to communicate, begin troubleshooting by turning off VMQ.

- If you disable VMQ on your physical adapters and you have teamed them, make sure that you also disable VMQ on the logical team adapter.
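The core idea behind VMQ is where inbound processing happens. The toy Python model below contrasts the two cases; the round-robin placement and the base_core parameter are assumptions for illustration only. Real VMQ placement is governed by the driver and the adapter’s VMQ settings (for example, the base processor number and maximum processor count that the Set-NetAdapterVmq cmdlet exposes).

```python
from typing import Dict, List


def inbound_cores(vnics: List[str], logical_cores: int, vmq_enabled: bool,
                  base_core: int = 1) -> Dict[str, int]:
    """Toy mapping of each vNIC's inbound processing to a CPU core."""
    if not vmq_enabled:
        # Without VMQ, all inbound traffic lands on core 0 (0:0 in the text above).
        return {nic: 0 for nic in vnics}
    # With VMQ, each vNIC gets its own queue; here queues are spread round-robin
    # over the cores from base_core upward (a simplifying assumption).
    usable = list(range(base_core, logical_cores))
    if not usable:
        raise ValueError("base_core leaves no cores for VMQ queues")
    return {nic: usable[i % len(usable)] for i, nic in enumerate(vnics)}


vms = ["VM1", "VM2", "VM3", "VM4"]
print(inbound_cores(vms, logical_cores=4, vmq_enabled=False))  # {'VM1': 0, 'VM2': 0, 'VM3': 0, 'VM4': 0}
print(inbound_cores(vms, logical_cores=4, vmq_enabled=True))   # {'VM1': 1, 'VM2': 2, 'VM3': 3, 'VM4': 1}
```

With 1GbE adapters, the first result is rarely a bottleneck, which is why the advice above is to simply disable VMQ on them.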


395 thoughts on "What is the Hyper-V Virtual Switch and How Does it Work?"

Very nice article, clear (very clear) and concise. Extremely helpful in demystifying the Hyper-V virtual switch, which has been a headache for me.

Thanks!

Go Ahead !!!!!

Hello,

Have you tried setting up a DHCP server on an Internal or Private virtual switch, as you wrote in the advanced configuration chapter? I tried it on a 2012 cluster, and it does not work. The Internal or Private adapter is in a disconnected state if it does not have a manually assigned IP address. I think this is not an error; it is by design.

Hi,

No, I haven’t personally tried it yet, although it’s something I intend to try in the near future. However, I didn’t invent the idea. I’ve seen material from several others indicating that they have successfully implemented this. Also, I am currently using internal adapters in DHCP client mode and they are receiving APIPA addresses and have a “Limited” status, as expected. A “disconnected” status indicates a media sense failure, which normally means that the adapter is not connected to any network at all.

Hi,

The resolution is simple: your article is correct, and you are right. I read a bad translation of another article, which made it seem like DHCP is generally blocked on Internal and Private virtual switches. That is not true. The correct information is that Internal and Private virtual switches do not have their own built-in DHCP server on the host side. DHCP works normally.

My problem is related only to configuration errors:

1. Guest client machines must be on the same node as the guest DHCP server. An internal virtual switch can ONLY connect guests to their own host and that host’s other guests (the host has connectivity only if it has a good IP configuration on the virtual adapter associated with the internal virtual switch). Guest machines connected to an internal virtual switch cannot communicate with other cluster hosts or the guests on those hosts. In short, a clustered internal virtual switch is not really “clustered” XD; in this case, clustered only means that it can be used in the configuration of a clustered resource.

2. It is not a good idea to enable NAP on the scope without configuring NPS on the same server as the DHCP service.

After resolving these two problems, everything works fine in my lab.

Glad you got your issue fixed.

1) This is a common point of confusion. Internal and private network traffic is always limited to the physical host it occurs on. This does not change in a clustered environment because the virtual switch is not a clustered resource.

2) That’s good information. Hopefully, this will help someone else.

Hello Eric,

Thanks a lot for enlightening newbies like us. Please shed some light on an issue I have been facing for almost a week.

SCOPE: I am trying to set up a lab using Server 2012 and Hyper-V, where the first thing I want to achieve is for my virtual machines to obtain IP addresses from the DHCP server, which happens to be the HOST machine.

I’ll start off with two VMs (Windows 8 and Windows 7) and slowly grow my network by installing more VMs, such as a server, an Exchange Server, etc.

Here are the details of the network and hardware, and all the work I have done, step by step:

Hardware: a machine with an i5 processor and 8 GB RAM, not connected to any router or switch.

I could not get the wireless to work, but it connects to the home network using the Ethernet card: a Realtek 1Gb card.

Software: Windows Server 2012 R2

Steps I have taken so far:

1. Ran Add Roles and Features and installed DHCP, DNS, and Hyper-V.

2. Started Hyper-V, added an internal virtual switch, then added two new VMs (Windows 8) and connected them to the internal virtual switch.

3. Gave static IPs to the internal virtual switch adapter on the host and to the two VMs, all in the same subnet.

Disabled the firewall on all the machines.

RESULT: All the VMs and the host machine are able to ping each other.

However, if I give a static IP to the internal switch adapter on the HOST and leave the VMs on DHCP, the VMs do not get IP addresses.

With that said, what am I missing in order to achieve that?

HERE IS THE WINNING FORMULA:

My friends, after 5 days of research, trial and error, and 1 pack of Marlboro… lol

I finally got my lab up and running.

I went into the DHCP stats and saw there were no DHCP requests from the client VMs.

That got me thinking, and……. I decided to configure the DHCP scope on my host machine.

Stumbled across this video:

https://www.youtube.com/watch?v=OhnOwbKpO-w

Boom, I checked the DHCP stats and the VM had an IP address from the HOST MACHINE.

It’s 3 am and I am a happy camper…

Hi,

Great article but hopefully you can clear something up for me.

If I follow the steps, I can create the VM-Switch and VM-NetworkAdapter. After assigning an IP to the adapter and setting the correct VLAN, I can ping out from the host and add it to a domain. However, I get Kerberos resolution errors when attempting to connect to the host using Server Manager. If I remove the adapter and replace it with an LBFOTeamNic, then set the IP and VLAN, there are no errors!

Am I missing something?

Cheers

Martin

Do you have any problems of any kind with any other traffic class?

I’ve had to disable VMQ on the team adapter in some cases to keep odd things from happening, but that wouldn’t pick on a particular traffic type.

I am just building a server for POC so there is nothing else running on it yet and I am unable to answer your question.

So can I assume that the LBFOTeamNic is not the supported/optimal/best practice way?

If so, I do some more digging into my issue

Cheers

There’s nothing wrong with using LBFO, as long as you can get it to work. What’s your load balancing algorithm?

Sorry about the slow reply – been off for a few days.

Sorted out the Kerberos errors by logging on with the correct account.

One last question, if I may.

I am still trying to understand the difference in purpose between the VM network adapter and an LBFO team NIC interface. Both seem to achieve the same function/result, but is one more suited to a specific use than the other?

Sorry, I just reread what I wrote. I meant to ask: what is the difference between a VM network adapter and a team interface?

The big differences are that you can use RSS and some other advanced features on a team interface but not on a virtual adapter. The Hyper-V role must be installed and a virtual switch created to use a virtual adapter, but a team interface just needs 2012. You can have multiple team interfaces, but only one per VLAN; you can have as many virtual adapters in the same VLAN as you want. You can only use policy-based QoS for a team interface, but a virtual adapter can also be controlled with Hyper-V QoS.

I must be missing something (it’s happened before…). I set up Windows Server 2012 as a host with two NICs. I then created two VMs, also Windows Server 2012. I use one NIC exclusively for the host and created two virtual network switches, one Internal and one External. I assigned the external network 192.168.181.0/24 and the internal 192.168.182.0/24. All three systems (host, vm1, and vm2) get an IP configured for each network: 192.168.182.200, .201, .202, and 192.168.181.200, .201, .202. Logically, I should be able to ping between the VMs on either network. However, I can’t do it on either one. Weird. From each VM I can ping the default gateway on the external network, and I can ping the host system, but not each other. Looking around for clues, I haven’t found anything to help. It’s pretty straightforward to set up, it seems logical to me, and IP is correctly configured, but the VMs cannot connect to each other. Any ideas?

I may not be entirely understanding your issue, but as I understand your setup, you won’t be able to communicate from one network to the other without installing a gateway that has a presence in both networks. Is that the direction you need to be sent in? Or you can’t ping across VMs in the same network? The only thing I can think of on that offhand is default firewall rules that block ICMP.

Your article helped me a lot. I had a problem with the difference between private and internal, but I’m cool now.

I think you may have reversed “external” and “internal” in the following:

“if you wanted to use a private or external switch, you’d use the -SwitchType variable with one of those two options. That parameter is not used in the above because the inclusion of the -NetAdapterName parameter automatically makes the switch internal.”

Help on New-VMSwitch -SwitchType says:

“Allowed values are Internal and Private. To create an External virtual switch, specify either the NetAdapterInterfaceDescription or the NetAdapterName parameter, which implicitly set the type of the virtual switch to External.”

Ah, so I did. Good catch. I’ll fix the article. Thanks!

Hi Eric,

So what should I do for same subnet situation?

I have two Hyper-V hosts. On each, I assigned one dedicated NIC for management of the Hyper-V host and another for VM production (VMs connecting to the outside world).

The Hyper-V host’s dedicated NIC has a static IP address (192.168.1.11), and the VM production NIC gets an IP address via DHCP (same subnet, 192.168.1.xxx).

Cluster configuration network validation is giving a warning about the same subnet.

For the VM production NIC, I created a virtual switch (so it created a vEthernet adapter) that my VMs can use to communicate with the production network (192.168.1.xxx) and the internet. Should I allow the management operating system to share this network adapter?

For the management NIC, should I leave it alone with a static IP? Do I need to put a gateway and DNS IP address on it?

Please advise.

Thanks,

Jojo

The management adapter needs a static IP to keep your cluster happy. If you want it to be able to access the network for things like Windows Update, then it needs a gateway and DNS.

Hyper-V cluster nodes are also supposed to have at least one other NIC to use for Live Migration. If you created a vNIC in the management operating system, then move it to another subnet and use that for Live Migration.
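Here is a minimal sketch of that addressing in PowerShell. It assumes the adapters are already named 'Management' and 'vEthernet (LiveMigration)' and that neither carries an existing static address. The gateway (192.168.1.1), the DNS server (192.168.1.10), and the 192.168.8.x Live Migration subnet are hypothetical examples.

```powershell
# Hypothetical interface aliases, gateway, DNS server, and Live Migration subnet.

# Management adapter: static IP plus a gateway and DNS, so the host can reach
# things like Windows Update.
Set-NetIPInterface -InterfaceAlias 'Management' -AddressFamily IPv4 -Dhcp Disabled
New-NetIPAddress -InterfaceAlias 'Management' -IPAddress 192.168.1.11 -PrefixLength 24 -DefaultGateway 192.168.1.1
Set-DnsClientServerAddress -InterfaceAlias 'Management' -ServerAddresses 192.168.1.10

# Live Migration vNIC: its own subnet, with no gateway and no DNS servers.
Set-NetIPInterface -InterfaceAlias 'vEthernet (LiveMigration)' -AddressFamily IPv4 -Dhcp Disabled
New-NetIPAddress -InterfaceAlias 'vEthernet (LiveMigration)' -IPAddress 192.168.8.11 -PrefixLength 24
```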

Can you tell me what the difference is between the “management adapter” that needs a static IP in the first sentence and the “vNIC” in the last sentence, as well as how it all relates to Hyper-V backups that use the management NIC (or so I’ve been led to believe)?

The management adapter just means the “adapter that the computer uses for its normal business”. So, if it was just a regular computer with one NIC, that would be the management adapter. All the other adapters that get added have other duties, like cluster adapters, Live Migration adapters, etc. Backup occurs through the management adapter.

When you create a virtual switch, you have the option to create virtual adapters (vNICs) on it that the management operating system can use. The management operating system will see them the same way that it sees physical NICs. vNICs can be used for management or any other role that a physical NIC would be.

Here is where the confusion lies for me. If the management NIC for the host OS is a vNIC (i.e. sharing with the host OS is checked, so you get the vEthernet network adapter that you put your IP, etc. on), then what happens if the hypervisor goes down?

The management operating system is a special-case virtual machine. Therefore, a hypervisor crash means a management operating system crash, too. You’ll have bigger problems than the status of the management adapter vNIC if that happens.

A more likely scenario is that your virtual switch gets deleted, in which case any and all vNICs for the management operating system will also be deleted. This is one reason why I save my virtual switch and management OS virtual NIC creation routines in a .ps1 file on the local system.
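A saved routine along those lines might look like the sketch below. The switch name, team name, and vNIC names are hypothetical, and the script assumes the team already exists. Per-vNIC IP configuration would follow, using New-NetIPAddress as for any other adapter.

```powershell
# Rebuild-VirtualSwitch.ps1 (hypothetical name): recreates the virtual switch
# and the management OS vNICs if the switch is ever deleted.
$switchName = 'vSwitch'   # hypothetical virtual switch name
$uplink     = 'Team1'     # hypothetical physical adapter or team name

# Create the external switch without the automatic management OS vNIC.
New-VMSwitch -Name $switchName -NetAdapterName $uplink -AllowManagementOS $false

# Add named vNICs for the management OS; Windows shows each one as
# "vEthernet (<Name>)" in the host's adapter list.
foreach ($vnic in 'Management', 'LiveMigration', 'Cluster') {
    Add-VMNetworkAdapter -ManagementOS -Name $vnic -SwitchName $switchName
}
```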

Hi Eric,

Thank you for your advice.

Here is my configuration for one of my Hyper-V hosts; each host has a dedicated NIC for each of these roles.

Management – static 192.168.1.11

VM production – DHCP – same subnet as management – Cluster and Client

Live Migration – 192.168.8.11 – Cluster only

Heartbeat – 192.168.7.11 – Cluster only

iSCSI – 192.168.5.1 – None

Backup – 172.16.5.11 – Cluster and Client

What I’m trying to avoid is a multihomed network, so that VM production network access stays on the VM production NIC and doesn’t cross over to the management NIC. If I uncheck “Allow management operating system to share this network adapter” on the VM production virtual switch, is that the correct action?

I’m also trying to back up the Hyper-V hosts and the VMs, and I created a 172 subnet to be used for that. Unfortunately, I can’t find any documentation on the Internet on how to implement this. What I’m trying to accomplish is backing up even during business hours. My backup device (NAS) has a dedicated NIC for backup, and so do my Hyper-V hosts.

Thanks,

Jojo

Yes, remove the Allow. That vNIC isn’t doing anything for you.

To avoid multihoming issues, make sure that the management adapter is the only one with a gateway and that none of the others are registering in DNS.
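Here is a quick way to audit both conditions. This is a sketch that assumes the non-management adapters carry the hypothetical aliases shown; substitute the names that Get-NetAdapter reports on your host.

```powershell
# Every interface that has an IPv4 default gateway. Ideally this lists only
# the management adapter.
Get-NetIPConfiguration |
    Where-Object { $_.IPv4DefaultGateway } |
    Select-Object InterfaceAlias,
        @{ Name = 'Gateway'; Expression = { $_.IPv4DefaultGateway.NextHop -join ', ' } }

# Stop the non-management adapters from registering themselves in DNS.
# The interface aliases are hypothetical; substitute your own.
foreach ($alias in 'Live Migration', 'Heartbeat', 'iSCSI', 'Backup') {
    Set-DnsClient -InterfaceAlias $alias -RegisterThisConnectionsAddress $false
}

# A stray gateway on another adapter could be removed with, for example:
#   Remove-NetRoute -InterfaceAlias 'Backup' -DestinationPrefix '0.0.0.0/0'
```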

If you’re backing up from the hypervisor across the network, it will use the management adapter by default. That should be a low-traffic network otherwise so that may be OK.

If you want to force it to use a specific NIC, then the easiest way is just to have it target a system that’s on the same subnet as that NIC. So, if you want to use a NIC that has an IP address of 172.16.5.11/16, then make sure the target system is using an IP in the 172.16.0.0/16 network. When deciding how to send traffic, the Windows TCP/IP stack will always choose the path that doesn’t require a gateway if such a path exists.
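The on-link check at the heart of that rule can be sketched in PowerShell. Test-SameIPv4Subnet is a hypothetical helper written for illustration, not a built-in cmdlet. It only compares network prefixes, whereas Windows' real route selection also weighs route metrics and more specific routes.

```powershell
# Hypothetical helper: returns $true when two IPv4 addresses share a network
# prefix, i.e. the remote host is on-link and needs no gateway.
function Test-SameIPv4Subnet {
    param(
        [Parameter(Mandatory)][string]$LocalAddress,
        [Parameter(Mandatory)][string]$RemoteAddress,
        [Parameter(Mandatory)][ValidateRange(0, 32)][int]$PrefixLength
    )

    # Convert a dotted-quad string to its 32-bit value (held as Int64 for safe bitwise math).
    function ConvertTo-IPv4Number([string]$Address) {
        $ip = [System.Net.IPAddress]::Parse($Address)
        if ($ip.AddressFamily -ne [System.Net.Sockets.AddressFamily]::InterNetwork) {
            throw "'$Address' is not an IPv4 address."
        }
        $bytes = $ip.GetAddressBytes()   # network (big-endian) byte order
        if ([BitConverter]::IsLittleEndian) { [Array]::Reverse($bytes) }
        [int64][BitConverter]::ToUInt32($bytes, 0)
    }

    # Subnet mask as a number, e.g. /16 -> 0xFFFF0000 (4294901760).
    $mask = [int64]([math]::Pow(2, 32) - [math]::Pow(2, 32 - $PrefixLength))

    $local  = ConvertTo-IPv4Number $LocalAddress
    $remote = ConvertTo-IPv4Number $RemoteAddress
    ($local -band $mask) -eq ($remote -band $mask)
}
```

For example, `Test-SameIPv4Subnet -LocalAddress 172.16.5.11 -RemoteAddress 172.16.200.3 -PrefixLength 16` returns True, so that traffic can stay on the backup NIC. On a live host, `Find-NetRoute -RemoteIPAddress <target>` asks the TCP/IP stack directly which interface and source address it would use.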

Eric,

Great advice. Thanks!

How do I force the backup traffic onto the 172 network, or is it automatic? Is it correct to set the backup network to Cluster and Client, or should I make a virtual switch for the 172 network?

You should write a book on Hyper-V. I’ve read a couple of Hyper-V books (I won’t mention the authors), but they don’t cover this part of the setup. I believe that explaining the basics with a real-world implementation is the best way to learn. After learning the basics, moving on to the advanced material is the next phase of learning.

I also like that you respond quickly, unlike the Microsoft evangelists who preach what they want readers to hear but never reply. Either they don’t know the answer or they just ignore my questions, maybe both.

Thanks,

Jojo

It’s automatic if the destination is in the same subnet. That’s why the cluster’s subnets work without any particular magic. You can also create a special routing rule, but those are a pain to manage and I avoid them unless I have no choice. Refer to the chart in this post to see how Windows determines where to send traffic.

I haven’t read any books about Hyper-V, but my assumption is that those authors focus on Hyper-V as Hyper-V, not Hyper-V in a failover cluster. It’s odd that you would mention me writing a book…

Eric,

It’s not odd. You seem to have a firm, logical grasp of Hyper-V and its internal workings, and you explain it in a way that works especially well for Hyper-V beginners like me.

The authors have sections on Hyper-V networking and clustering, but they don’t explain the impact of choosing a particular option.

Thanks,

Jojo

First, I want to acknowledge that you’re saying very kind things to me and that I very much appreciate that.

I think what you’re highlighting here is the difference between the book format and the blog format. Print book publishers are really concerned about page counts, flow, deadlines, etc. So, a book author has to draw much thicker lines about what a potential reader needs to know and what sort of content belongs. If you’re really steeped in TCP/IP, then some of this stuff is almost second-nature. But, in-depth TCP/IP training is usually reserved for network admins, not system admins. So, on the one hand, there’s a case for explaining it well. On the other hand, TCP/IP is not Hyper-V-specific technology. In a blog, I can just telescope out things into comments if it’s quick or new posts if it’s not. In a book… well… you can only do so much.

On the other subject, I clearly remember having questions and no one to ask them of that would respond, so I feel your pain. I do sometimes wish the experts would be a little more user-friendly, but I imagine that after a while, being a big-time expert becomes its own burden. Right now, the volume of questions I get is mostly manageable, so I respond whenever I can.

Eric,

Thanks for your great advice. I unchecked the Allow management setting per your suggestion. What’s weird is that the adapter disappeared from the host’s NIC list; I presume this is the effect of the hypervisor controlling it now. I didn’t lose my connectivity, so it seems to be working.

I also edited the hosts file on the backup server (NAS) for the 172.16.5.11/12 addresses of the Hyper-V hosts, to force it to use the backup network.

Thanks,

Jojo

Not weird, that’s exactly what it’s supposed to do. When you create a Hyper-V switch on an adapter, that adapter is not visible in the host’s NIC list. What you were seeing was a virtual NIC all along.

Is there a way to change the IP address of an external switch from Hyper-V Manager?

Hyper-v virtual switches do not have IP addresses.

Hi Eric,

This is an absolutely awesome collection of articles. THANK YOU.

Your article is INCORRECT in my case. On a powerfully equipped Win8.0 x64Ent (AD domain member), logged into AD User Acct (member of local admins), when I add an external vSwitch, it simply does not matter if I check ‘Share with Mgmt. OS’ or not, in any combination with/without VLAN Tagging. The vNIC for the host is never created.

If I didn’t have over 100 bit apps (SQL Server instances, etc.), I’d blow it away for Win8.1 (the only path from W8Ent), but at this point, I’m still experimenting, considering some cmdlets next. Rebooting and starting from scratch also don’t make any difference (literally deleting all other adapters, so only the primary Ethernet adapter remains).

Any ideas?

Thanks,

Paul

I haven’t worked much with Client Hyper-V so I can’t say anything to your symptoms. I haven’t heard of that happening before. If you haven’t tried the PowerShell route I would definitely do that next.

Eric,

First, I want to apologize, and admit that I was wrong. I think there were other factors that were causing some unusual behavior (at least, I could find no other information on the internet, which was similar to what I was attempting and seeing).

Thus it seemed to me at the time that I was encountering a corner case, or that I had some other software/driver that was interfering, or I may have just been confused still. I was resetting TCP/IP (netsh), uninstalling any software that just looked a little suspicious and continued to research the situation, with no success.

In fact, there were several complicating factors, including prior installs of vSphere/VirtualBox/QEMU software and components (yes, all of them), a prior third-party firewall (ICS sharing is still not possible, due to domain membership), and other security hurdles (on each port of our fully segmented network). So I surrendered and installed another physical adapter (actually, a USB dongle NIC 😉), and I’m back on track now, I think.

~p

Hi Eric,

We have a change in VLANs, and we are forced to change the IP addresses of our existing two-node Hyper-V cluster running the 2012 Hyper-V Core OS.

What precautions should I take before changing the IP addresses?

Currently it is all configured on a single VLAN:

MGMT: Host

HB:

Team NIC for Trunk: For Guest VMs

SAN is connected via FC.

Many thanks in advance.

Regards

Natesh

Hi Natesh,

There is no major risk in this process and it’s easily reverted if something does go wrong. Just make sure there’s nothing going on that relies on any of the host IPs or the CNO IP, like an over-the-network backup or something.

Hi,

Nice article. I have one question. I have 2 physical NICs, each hosting a virtual switch. I have added virtual network adapters to each switch.

I know which adapter is on which switch, but is there a command I can run to find out which adapter is on which switch?

[I have looked 🙁 ]

Thanks,

David

Hi David,

Any particular reason you’re using separate switches?

The command you’re looking for is “Get-VMNetworkAdapter -VMName *”. It will show the virtual machine name and the switch name for each virtual adapter.
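Here is a minimal sketch of that command, extended to also cover the host's own vNICs. It only adds column selection and sorting, and must be run on the Hyper-V host itself.

```powershell
# Every virtual machine vNIC and the virtual switch it is connected to.
Get-VMNetworkAdapter -VMName * |
    Sort-Object SwitchName, VMName |
    Format-Table SwitchName, VMName, Name, MacAddress -AutoSize

# The management operating system's own vNICs and their switches.
Get-VMNetworkAdapter -ManagementOS |
    Format-Table SwitchName, Name, MacAddress -AutoSize
```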

Hi. I’m attempting to configure a similar environment and having difficulty understanding how to set up a dual-presence VM using Routing and Remote Access Service. Are you considering additional articles (part 3) on how to create a dual-presence VM? Please advise.

Actually, yes. Keep an eye on the blog.

In your article, is the routing virtual server a member of the isolated network or the public network? I’m testing a configuration using front-end web servers (SharePoint) in a DMZ with a DMZ domain controller, routed through an RRAS server that straddles the DMZ and the isolated network/domain.

A router must be present on all networks that it will route between. So the RRAS system must exist on both the private and public switches. From your last sentence, it sounds like you knew that, so maybe I don’t understand your question. In the described configuration, all guests except the RRAS system should only exist on the private switch.

Two great articles Eric, very informative, thanks…

In the post you say:

“If you create an external switch on the adapter that the system is using for its general network connectivity, that connection will be broken while the switch is created. This will cause the overall creation of the switch to fail.”

The TechNet document at https://technet.microsoft.com/en-us/library/jj647786.aspx says:

“When you remotely create a virtual network, Hyper-V Server creates the new virtual switch and binds it to the TCP/IP stack of the physical network. If the server running Hyper-V Server is configured with only one physical network adapter, you may lose the network connection. Losing the network connection is normal during the creation of the virtual switch on the physical adapter. To avoid this situation, when you create the external virtual switch in Step 5, ensure that you select the Allow management operating system to share this network adapter check box.”

Has this changed since your article was originally published?

I haven’t created a virtual switch remotely in a very long time, but I do believe that it will usually make a successful reconnection after the switch and that virtual adapter are built. However, the connection is still broken during creation and I have heard people say that when the virtual adapter is rebuilt, it is sometimes unable to successfully connect for various reasons. Sometimes it doesn’t properly duplicate the IP settings, for instance. However, to the best of my knowledge, the switch creation is now successful every time, whereas it used to be left in a sort of half-created limbo state.

So, does checking the box “allow management operating system to share this network adapter” stop the Host OS from being able to have ANY external network connectivity, or does it just mean the Host OS will need to create a virtual adapter just like the VM’s?

Neither. Checking the box creates a virtual adapter for the management OS.
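In PowerShell, the same checkbox maps to the -AllowManagementOS parameter of Set-VMSwitch. This minimal sketch assumes a switch named 'External' (hypothetical). Given the connectivity interruption discussed earlier in these comments, it is safest to run this from the host's console rather than over the adapter being changed.

```powershell
# Hypothetical switch name.
$switchName = 'External'

# Equivalent of checking "Allow management operating system to share this
# network adapter": the host gets a vNIC on this switch.
Set-VMSwitch -Name $switchName -AllowManagementOS $true

# Confirm which management OS vNICs now sit on the switch.
Get-VMNetworkAdapter -ManagementOS | Where-Object SwitchName -eq $switchName

# Equivalent of clearing the checkbox:
# Set-VMSwitch -Name $switchName -AllowManagementOS $false
```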

I’ve set up two Hyper-V systems. One is a Server 2012 R2 Hyper-V core system. After installation, I used the sconfig dialog to define a fixed IP for the host system. It works fine.

I have Hyper-V enabled on a Windows 8.1 workstation. Before I enabled the feature on Windows, the machine had a DHCP address. This instance of Hyper-V also works fine.

My problem is that I now want to change the host machine to a fixed IP address. I’ve gone into the Network and Sharing Center from the Control Panel and changed the IPv4 settings for the virtual switch from DHCP to a fixed IP (complete with default gateway, DNS, and subnet mask). When I do this, however, the host 8.1 system loses its ability to connect to the Internet. If I attempt a ‘repair’, it comes up with the error ‘DHCP is not enabled’. If I use that to repair the connection, it sets DHCP. It then works fine, but I’m back where I started.

I know that the host can have a fixed IP because my server works that way. What I don’t understand is why I can’t change my desktop workstation from DHCP to fixed. I’ve tried starting and stopping the Hyper-V service after making the changes. I even tried deleting the Virtual Switch and recreating…all to no avail.

Any ideas?

Thanks!

Is your physical adapter wireless? I hear of all sorts of odd things happening when the virtual switch is bound to a wireless adapter. My suspicion is that it has something to do with the way that MAC addresses are propagated and advertised. I don’t think that wireless driver authors have yet caught on to some of the things that we’re doing with their equipment.

In short, I don’t have an answer to your question. You might try searching/asking on the official Hyper-V forums. Brian Ehlert seems to have had quite a lot of experience with this and is often able to help people out.

I have configured one private switch and connected 5 VMs to it, but I am not seeing any communication among them. What might be the reason?

Appreciate your help

There are lots of possible reasons.

Eric,

Hyper-V newbie here.

I have a host running Win Srv 2012 R2 Std, with 6 physical NICs.

One of the 6 NICs is used exclusively by the Host.

The other 5 NICs are teamed and used to create an external v-switch (which allows the VMs to talk and allows our employees to remote into the VM-XP).

The host is running a RAID5 of 15K RPM drives.

The host OS is running on a RAID1 of 15K RPM drives.

The VMs are using Dynamic V-disks on the RAID5 (taking a speed hit there I assume).

Running on the host I have two VMs:

VM-Dell04 (Win Srv 2012 R2 std)

VM-XP (XP 32bit).

The VM-XP runs apps that need data from VM-Dell04.

Our employees need to be able to remote into VM-XP and run these apps.

It all works but I’m not sure I have the setup optimized as the data transfers don’t seem very fast between the two VMs.

Is there a better “Network” configuration I should consider?

Thx,

J

Transfers between two guests on the same system should only use VMBus, so there’s probably not much else you can do. You can try using a Private virtual switch, but I wouldn’t expect much. There are a lot of variables to troubleshoot so I think you’d do best to start trying to find where the bottleneck really is.

Eric,

Thanks for the reply.

By VMBus, do you mean a subset of the External V-switch, which VMs use by default to communicate?

Does that indicate you believe my current “network” setup is correct?

Or do I have to explicitly tell it to use the VMBus somehow?

Thanks so much,

J

VMBus is, among other things, used for communications between the VMs. This diagram: https://msdn.microsoft.com/en-us/library/cc768520(v=bts.10).aspx is for 2008, but it’s still applicable in this case.

As long as Integration Services are installed in the VMs and they are on the same TCP/IP subnet, Hyper-V should know not to ship their communications across the physical wire. This mechanism is not perfect, though. The Private switch is more reliable in that regard, but also more of a pain to work with. I’d make certain that network bandwidth is the problem before going down that rabbit hole.

Hello Eric.

I would appreciate your help.

I’m experiencing a DHCPNACK issue with virtual machines on the W2k12 R2 host:

– Microsoft-Windows-DHCP Client reports error:

– Event ID 1002 The IP address lease 147.x.y.z for the network card with network address 0x00155D30F11 has been denied by the DHCP server 192.168.0.1 ( The DHCP Server sent a DHCPNACK message )

Here’s the configuration:

IP scope 147.x.y.z/24

DHCP Server 147.x.y.3 255.255.255.0

I’m not aware of any 192.168.0.1 DHCP Server

The w2k12 R2 host :

NICTeam of 4 x Gigabit Ethernet physical NICs

Teaming mode : LACP, LB mode DYNAMIC

The corresponding ports on the physical switch are set to LACP mode and added to the Link Aggregation group.

Hyper-V networking was set up with the following PowerShell cmdlets:

New-VMSwitch "VSwitch" -MinimumBandwidthMode Weight -NetAdapterName "MyTeam" -AllowManagementOS $false

Set-VMSwitch "VSwitch" -DefaultFlowMinimumBandwidthWeight 70

Add-VMNetworkAdapter -ManagementOS -Name "Management" -SwitchName "VSwitch"

Set-VMNetworkAdapter -ManagementOS -Name "Management" -MinimumBandwidthWeight 5

The VMs are connected to the virtual switch “vSwitch”. The virtual NICs inside the VMs have their TCP/IP IPv4 settings set to automatic.

So, do you have any idea where the DHCP server 192.168.0.1 comes from and why it denies the address lease from the legitimate DHCP server 147.x.y.3?

Thank you,

Igor

The DHCP DORA process relies on Ethernet broadcast, so it plays out within the layer 2 broadcast domain. There must be a DHCP serving system somewhere in that Ethernet space that has an IP address of 192.168.0.1. Someone may have brought in a consumer-grade router and plugged it into a wall jack somewhere. If you don’t use VLANs, that can be tough to sort out. Whatever this device or system is, it’s getting involved in the DORA process before your “legitimate” DHCP server. It is not authoritative for this 147 range, which is why it’s NACKing it.

This is not an issue with your Hyper-V installation. It’s a basic Ethernet and DHCP problem.

You’re aware that an IP address starting with 147 is in a public address space and that someone owns it, correct?

Hi,

Thanks for the quick reply. Actually, I administer the 147.x.y.0/24 scope, so no worries about the ownership. I know it’s public.

NACKing events were reported only within the newly deployed VMs on the new W2k12 R2 host. This is my first deployment of VMs going through a virtual switch bound to a NIC team, so I thought there might be some DHCP functionality inside the Hyper-V switch that I wasn’t aware of and that had to be turned off.

The NACKing stopped today and everything works fine now (maybe the maintenance guy restored the cables to their previous, correct configuration on some router somewhere in the building).

Anyway, my physical switch has only the default VLAN 1 enabled, and it regularly gets an IP in the 147.x.y.0/24 scope from the legitimate DHCP server.

The 4 ports GE1/0/15 – GE1/0/18 are grouped in the LAG Group, and they work in ACCESS mode.

However, if it happens again, it’s good to know that it has nothing to do with Hyper-V, and I’ll go after the rogue DHCP server.

Thanks again,

Igor

OK, just so long as you know. I had a client once whose previous consultant set up their internal network with an IP range owned by Sun Microsystems. It ended well, but…

I’ve seen the consumer-grade router thing before. It’s usually some employee that’s upset that IT won’t put them on the wireless or give them more ports so they take things into their own hands. Keep an eye out.

OK, I have a really weird one. I set up a network with only an external virtual switch. I have virtual-1, which is the main server, and v-1, v-2 and v-3 as the virtual servers. Everything pings fine, and all of them show up in DNS and in WINS. Joining the machines to the domain went fine. I can access shares and see other servers using server names. My problem is Network Neighborhood. On the host server (Virtual-1), Network Neighborhood shows itself and the non-virtual servers, but none of the virtual servers. On each virtual server (v-1, v-2, v-3), I can see itself and the host (Virtual-1) in Network Neighborhood, but none of the other servers.

I know this seems trivial but there are some server apps I use that don’t let you type in the network address for say a folder – you MUST select it through network neighborhood! I have checked and all the requisite services are turned on and network discovery is checked on all servers.

Everything else works except this. Did I miss a setting on the virtual switch to allow it to pass this seemingly simple netbios style traffic? Speaking of which should I just disable netbios and have it just use DNS?

So weird….

That is a really confusing naming convention.

There are no settings in Hyper-V that would affect what you see in Network Neighborhood. In a domain, I think that’s all controlled by having the Computer Browser service started in the PDC emulator and ensuring that it is winning in all computer browser elections. Don’t troubleshoot this as a Hyper-V issue.

I always disable NetBIOS but then I don’t use anything that works with the Computer Browser either. Not sure what to tell you.

Hi Eric,

I am using Hyper-V on Windows 10 where I run multiple virtual Linux machines where each runs a VPN client. This is how I partition my VPN access, because I need to manage multiple unrelated networks.

I was quite surprised to learn that Hyper-V “takes over” the host networking. I was under the initial impression that the virtual switch is what allows virtual machines to access the physical network as set up by the host adapter. Well, now my host adapter’s IPv4 and IPv6 bindings are unchecked, and it seems, as you say in the article, that even the host now accesses the Internet (for example) through the virtual switch set up for the Ethernet card. That seems completely counterintuitive. Why would Microsoft design their virtualization that way? They may have isolated the virtual machines from the host, but now the host has to access the physical network through the switch…

The problem with this is that if there are issues with the virtual switch, my host networking suffers. And there are [issues] – in fact this is how I came to read this article, because I started to experience erratic network behavior, with dropouts, refused connections where there were none before etc.

Let me lead off by saying that I am not discounting your experience or the natural frustration that arises from the issues that you’re experiencing.

To date, none of these problems have been isolated to the virtual switch itself. It is very often a limitation of the hardware. For instance, very few desktop adapters were designed around the notion that they’d be dealing with multiple MAC addresses. The same goes for many consumer-grade switches (on a single switch port or WiFi terminus). That’s only one possible cause, and it’s beyond your control and Microsoft’s. These sorts of problems would manifest in any non-NAT situation. I haven’t done anything with Hyper-V in Windows 10 yet, but NAT through Internet Connection Sharing was an option in Win8. The feature is still in 10, so it should work.

Keep in mind that Hyper-V is always a type 1 hypervisor, even in Windows 10. Isolation is critical for a type 1.

Hi Eric – Thanks for the write-up. I’m setting-up Hyper-V under Windows 10 to replace all of the Virtual PC’s I was using under Windows 7 for dev/test. I’m an ex-dev who recently moved to an IS/IT role (by choice) so still have a lot to learn about networks. But I want to believe this move is going to make me a more well-rounded “techie”.

Do I understand correctly that once the physical NIC is tied to a virtual switch, the physical NIC is no longer usable by anything on the host for connectivity to the physical network? Specifically, if my Windows 10 box only has one NIC and I “bind” (terminology?) it to the virtual switch, then should I expect that my host OS will be using the Management OS Virtual Adapter to gain access to the physical network? Further, if I have any apps/services that want to “bind” to a NIC (for whatever reason), will they also need to be “bound” to the Mgmt OS V-Adapter to gain access to the physical network?

Fortunately, my Win10 box has 2 NICs so I’m thinking I could just leave one NIC for the OS and the other to the virtual switch … though I guess that means an extra hop or two to get from the host to the VM’s.

If you have 2 NICs in a Windows 10 system, I would continue using one as the management adapter and use the other to host the virtual switch with no host-level virtual adapter at all. That’s just because, unless I missed something, Windows 10 doesn’t allow teaming.

Thanks for the reply. I took the approach you suggested – leave NIC1 to the management OS and use NIC2 for the “external” virtual switch. I unchecked the box about “sharing” with the management OS so that the management OS virtual adapter wasn’t created. I created a Win7 VM to test connectivity, but couldn’t seem to connect to the internet. It was too late at night to troubleshoot but I did notice that NIC2 is still visible from the host (I didn’t think it would be). I checked NIC2’s properties and noticed that only the “Hyper-V” related entry was checked … nothing else. Does that sound right?

I just re-re-read your previous post to Armen and I think it’s starting to sink-in. You mentioned that, “Hyper-V is a type 1 hypervisor”. I always associated “type 1” hypervisors with appliances. If Hyper-V wants to be more like a type 1 hypervisor, I guess it makes sense that the virtual switch would want exclusive access to the physical NIC. It feels weird though … calling Hyper-V type 1. I may need to rethink what “type 1” means as I always thought it just meant that the hypervisor “lived close to the metal” and didn’t rely on a management OS. But yet doesn’t Hyper-V rely on the Windows OS? Wait, maybe not … maybe I’m seeing a place for the Virtual SAN now … hmmm …

Appearances are deceiving. When you enable the Hyper-V “role”, it does do a full-on switch. The hypervisor boots first, then it starts up your Windows environment as the “root partition”, now known in Windows parlance as the “management operating system”. The only functional difference between this configuration and other hypervisors is that those other hypervisors’ management operating systems have never been known apart from their hypervisors. But, regardless of manufacturer, the hypervisor and management operating system are always co-dependent.

Eric, your article is very useful and much appreciated. My case is a little different. We have 6 physical NICs teamed as PROD (10.*), MGMT (15.*), and BACKUP (99.*), each team with 2 NICs. The PROD and MGMT pNICs are configured with IP, subnet mask, and gateway, but the MGMT pNIC doesn’t have DNS. The BACKUP pNIC is configured with IP and subnet mask only.

We created a vSwitch using the PROD pNIC.

The issue we are facing is that we are able to ping the PROD vNIC IP of the virtual server from Hyper-V host 1, but unable to ping the MGMT/BACKUP vNIC IPs of the virtual server from Hyper-V host 1.

My requirement is to ping MGMT vNIC IP and BACKUP vNIC IP of virtual server from Hyper v host 1.

Note:

1. PROD/MGMT/BACKUP are in different VLANs.

2. If we create the vSwitch using the MGMT pNIC, we are able to ping the MGMT vNIC IP of the virtual server from Hyper-V host 1, but unable to ping the PROD/BACKUP vNIC IPs of the virtual server from Hyper-V host 1.

3. In both scenarios (vSwitch on either the PROD pNIC or the MGMT pNIC), from the virtual server we are able to ping the PROD, MGMT, and BACKUP IPs of Hyper-V host 1, but we cannot ping the virtual server from the Hyper-V host.

This is really not a technical support forum. To get you started, your configuration appears to be dramatically over-architected and does not make sense to me. I would re-evaluate what you’re trying to accomplish and design something simpler. Whatever the problem is, it’s likely a basic networking issue.

Eric,

Thanks for the great article; your first diagram was indeed how I envisioned the virtual switches.

In paragraph 9, you stated:

“The IP settings are unbound from the physical switch,” did you mean they are unbound from the physical adapter (vice switch)? Seems to make more sense that way based on the rest of the article.

Thanks,

Yes, that’s exactly what I meant. Hard to believe it’s sat this long without someone bringing that up. Thanks! Fixed.

Excellent piece indeed, Eric. Thanks for sharing.

We’re seeing something weird in our Hyper-V environment. We have a host that runs a number of VMs. These VMs run Docker containers. The VMs also work as a cluster, communicating with each other and with other external servers.

What we’re noticing, though, is that most of the time is spent waiting for network socket responses from one VM to another. So, we’re wondering if there’s something to look at in the Hyper-V virtual switch. Any clue?

I don’t really have a clue on that, no. You’ve got a deeper level of nesting than I have tried myself.

I would connect Performance Monitor to the host and watch the physical CPUs, especially CPU 0, core 0, and watch the virtual NICs.
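The same data can be sampled from PowerShell with Get-Counter. This is a sketch: the counter paths below are assumptions based on the usual Hyper-V counter set names, so confirm them on your host first. Note also that the standard \Processor counters inside the management operating system do not reliably reflect the physical CPUs on a Hyper-V host, which is why the hypervisor's logical processor counters are used here.

```powershell
# Counter paths are assumptions; list what exists on your host with:
#   Get-Counter -ListSet 'Hyper-V*' | Select-Object -ExpandProperty CounterSetName
$counters = @(
    # Physical (logical) processors as seen by the hypervisor; look at the
    # instance for logical processor 0.
    '\Hyper-V Hypervisor Logical Processor(*)\% Total Run Time',
    # Throughput of each virtual network adapter.
    '\Hyper-V Virtual Network Adapter(*)\Bytes/sec'
)

# One minute of data: 12 samples taken 5 seconds apart.
Get-Counter -Counter $counters -SampleInterval 5 -MaxSamples 12 |
    ForEach-Object { $_.CounterSamples } |
    Select-Object Timestamp, Path,
        @{ Name = 'Value'; Expression = { [math]::Round($_.CookedValue, 1) } }
```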

I’d like to virtualize all my domain controllers.

The question is: Should I check “allow management operating system to share this network adapter”?

If I don’t check it, then the host will not be able to communicate with the DC it hosts. It would have to contact another DC out on the network using another physical adapter. But if the host can’t contact another DC, then it won’t authenticate.

I think what I mean is: does unchecking the box prevent communication between the management OS and the guest VM through the virtual adapter, forcing them to communicate through the physical network?

Sorry if this isn’t clear – it’s difficult to explain.

I think that you’re overthinking it.

Take a step back. Ask yourself, “What path do I want the computer running Hyper-V to use to participate on the network?”

If you have a physical adapter or adapter team that’s not used for a virtual switch, then make sure that it has good physical connectivity and IP information and then you don’t need a virtual adapter for the management operating system.

If all physical adapters are used by a virtual switch, then the management operating system needs a virtual adapter on the virtual switch with good IP information.

Orient yourself from the position of the IP network first. Once you’ve done that, think through how all of the physical adapters and switches, whether physical or virtual, connect to each other. Draw it out if that helps you to make sense of it.

What you want to avoid is having the management OS connect to the same IP network through both a physical pathway and a virtual pathway or any combination thereof. That’s multi-homing and it confuses the system just as much as it confuses people. One point of presence per IP network per system, maximum.
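To make the “one point of presence per IP network” rule concrete, here is a minimal Python sketch that flags multi-homing from a list of adapter addresses. It assumes you have already collected each management OS adapter’s address and prefix length by some other means; the adapter names and addresses below are hypothetical, and the function name `find_multihoming` is illustrative only.

```python
import ipaddress
from collections import defaultdict

# Hypothetical inventory of the management OS's IP presences:
# (adapter name, address/prefix). Names and addresses are illustrative only.
ADAPTERS = [
    ("Ethernet 1 (physical)", "192.168.25.10/24"),
    ("vEthernet (Management)", "192.168.25.11/24"),
    ("vEthernet (LiveMigration)", "192.168.15.10/24"),
]

def find_multihoming(adapters):
    """Return {network: [adapter names]} for each IP network reached by more than one adapter."""
    by_network = defaultdict(list)
    for name, cidr in adapters:
        # ip_interface keeps the prefix, so .network gives the subnet the adapter sits on
        by_network[ipaddress.ip_interface(cidr).network].append(name)
    return {net: names for net, names in by_network.items() if len(names) > 1}

if __name__ == "__main__":
    for net, names in find_multihoming(ADAPTERS).items():
        print(f"{net} is reached by {len(names)} adapters: {', '.join(names)}")
```

In this made-up inventory, the physical adapter and the management vNIC both sit on 192.168.25.0/24, which is exactly the physical-plus-virtual combination described above.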

Hey Eric,

I’ve been reading other posts and forums about using 2 physical NICs, with one configured for MGMT and the other for VM traffic. After reading your post, I see that you prefer to run them all in a NIC teaming setup. May I ask why?

I keep an eye on network performance metrics using MRTG (article).

If I were to pull the one-year statistics on a system configured with a dedicated management adapter, I’d be able to point out that the management adapter almost never did any work. I’d also be able to point out times that the adapter dedicated to the virtual machines could have used more bandwidth, but didn’t have anything else available.

So, my first answer is that my method is a better utilization of resources that the institution has already purchased.

Also, many people have access to multiple switches these days. You can create a team across two switches and get some simple, cheap redundancy for both your management traffic and your virtual machine traffic.

So, my second answer is that my method provides redundancy.

Finally, we have access to helpful new technology that’s been around long enough for the wheel to be very round.

So, my final answer is that it’s not 2010.

The only time that I split away a physical management adapter is if there are more than two adapters and there is a capabilities limitation. For example, my test lab systems have an onboard adapter that doesn’t support RSS. They have two PCI dual-port adapters that do support RSS. So, I use the onboard adapter for management and put everything else on the RSS-capable adapters. Truthfully, I only do that because I hate paying for hardware and letting it sit idle. If I had a second switch in my lab, though, that onboard adapter would only do out-of-band operations and there would be a virtual adapter for management traffic.

Nice site. I have six 1GbE NICs and two 10GbE NICs. Should I really make only ONE team and mix them all together, or two teams? How can I be sure that Live Migration uses the 10GbE NICs? Is this out of the box? Thanks

The gigabit NICs are a waste of your time to worry about. Disable them and stick with a team of the 10GbE.

Nested Virtualization: For the new Server 2016, it is stated that the Hyper-V role can now be set up on a virtualized Server 2016. I have a Windows 10 Enterprise physical host with this server set up. I can enable the role/feature inside the Server 2016 VM and create a Windows 10 Enterprise VM inside it, BUT it cannot see the internet. Note that the virtual switch was ‘automatically’ set up during the feature install and was not created the way it was on the physical host, by adding the virtual switch. Why can’t the virtual NIC in this nested VM be set up to reach the internet, activation, etc.?

I have read articles similar to this, but I cannot get the nested VM to see through. It looks similar to the primary NIC/v-Switch, but it is in essence a virtual switch set up on a virtual NIC.

Comments? (https://www.petri.com/deep-dive-windows-server-2016-new-features-nested-virtualization-hyper-v)

I haven’t spent much time with nested virtualization, but I didn’t have any trouble getting the virtual switch to work. I can’t duplicate your exact layout because my Win10 system runs on an AMD chip, so no nested virtualization.

Many people have it working just fine, though. So, check the layer 3 settings for any problems. If you’re using a wireless adapter, that might be the problem. Wireless adapters and Hyper-V seem to have a hate/hate relationship.

Hi, Eric

I’ve migrated my virtualization to Hyper-V. I read that NIC teaming (LACP) created on Intel i350 network cards is better than the default NIC teaming in Windows (created with Hyper-V Port). Is that true or not?

I have not seen any significant body of empirical evidence to sway me either way. The Microsoft LACP mode works well in some environments, poorly in others. Most people have gone to Switch Independent mode.

If you use Intel’s teaming software and have any troubles with networking, Microsoft PSS might opt to direct you to Intel for assistance. I am inclined to stick with the Microsoft networking stack for support reasons.

My VM, connected to the Hyper-V external switch, is automatically taking a manual IP, 10.1.46.250.

Please help me understand how this IP is getting assigned.

Regards

Prakash

Well, if it’s manual, then someone typed it in. If it’s automatic, then it’s DHCP.

Thanks for a great writeup!

I have been trying to direct-connect two PCs, each with 10GbE RJ45 ports, using a Cat 6 cable. This seems to work for the two “Dom0”/Mgt OSs and for the VMs on one of them (which see their host and the other Dom0). However, I cannot get the VMs on the second PC to see the direct-connect network. In your article you say this cannot be done for VMs, but it does work for the VMs on one PC, and they see the Dom0/Mgt OS on the other. It’s just the second box’s VMs that can’t see past its Dom0. One box has Win10 as Dom0 and a Win10 VM. It has an “Aquantia AQtion” 10Gbit NIC. The second box is a small SuperMicro all-in-one server with an Intel “SoC 10GBase-T” NIC running Windows Server 2016 as Dom0 and the same as its VM.

Do these results make sense to you?

Thanks!

Not sure which part of the article implies that it wouldn’t work. As long as the management OS and guests use the same virtual switch, and that virtual switch sits on the connected physical NIC, everything should work well. At that point, you should only need to look for a fundamental TCP/IP solution. I don’t see anything in your configuration that would outright prevent connectivity.

Wow! I can’t believe I never stumbled across this site/blog before. You’ve done an amazing job of explaining what seems to be a confusing topic for most people (including me at times).

I was Googling around trying to find a recommendation/best practice for setting up a 2-node Hyper-V cluster on a completely flat network. Each node has 5 x usable 1GbE NICs and the network is a single subnet (VLAN 1). For reasons I won’t go into, we cannot create additional VLANs to segregate traffic.

What do you think is the best approach for setting up the networking for a 2-node Hyper-V cluster like this? Would you team all 5 NICs and create a single External vswitch for everything? Or maybe a 3-NIC team attached to an External vswitch for management and VM traffic, and then a 2-NIC team for LM and cluster traffic? One concern is having LM and the management OS with IPs on the same subnet.

Not sure why, but I’ve been racking my brain over this and just wanted to get your thoughts on it. Thank you!

One virtual switch.

You don’t need VLANs to separate subnets. Multiple virtual switches wouldn’t help with that anyway.

Just invent auxiliary subnets that don’t already appear anywhere on your network and use them for all roles besides your management network.

Thanks for the quick reply, Eric! I’m not sure I understand what you mean by inventing auxiliary subnets. If I only have a single NIC team and a single External virtual switch, which other Hyper-V roles need IPs assigned to them anyway? Sorry if that sounds like a stupid question, but I don’t deal with Hyper-V enough to feel confident. I’ve been looking for an example of what this config might look like, but haven’t come up with anything useful for an entirely flat network.

In my test lab, the “real” network is 192.168.25.0/24. It has a router at 192.168.25.1, DNS at other IPs, the whole shebang.

On my Hyper-V hosts, the Cluster Communications adapters have IPs in 192.168.10.0/24. No router. The Live Migration adapters have IPs in 192.168.15.0/24, also no router. No need for DNS or DNS registration on those networks. Those subnets don’t have any particular meaning. I just made them up.
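The only hard requirement on invented subnets like these is that they don’t collide with each other or with the real network. A minimal Python sketch of that check, using the lab subnets from the reply above, might look like the following. The role labels and the `overlapping_pairs` helper are hypothetical, and the sketch can only compare the networks you list; it cannot discover subnets in use elsewhere on your network.

```python
import ipaddress

# Subnets from the lab example: the "real" routed network plus two invented,
# router-less subnets for cluster communications and Live Migration.
REAL_NETWORK = ipaddress.ip_network("192.168.25.0/24")
AUX_SUBNETS = {
    "Cluster": ipaddress.ip_network("192.168.10.0/24"),
    "LiveMigration": ipaddress.ip_network("192.168.15.0/24"),
}

def overlapping_pairs(networks):
    """Return every (label, label) pair whose networks overlap."""
    items = list(networks.items())
    clashes = []
    for i, (name_a, net_a) in enumerate(items):
        for name_b, net_b in items[i + 1:]:
            if net_a.overlaps(net_b):
                clashes.append((name_a, name_b))
    return clashes

if __name__ == "__main__":
    all_networks = {"Management": REAL_NETWORK, **AUX_SUBNETS}
    clashes = overlapping_pairs(all_networks)
    print(clashes or "No overlaps: each role has its own subnet.")
```

Because the auxiliary subnets carry no router and no DNS, non-overlap is essentially all that matters; the specific numbers carry no meaning.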

You don’t need VLANs to use separate subnets.

Ah, I follow what you’re saying! So, I was thinking of creating a 3-NIC team with External Hyper-V virtual switch for Management & VM traffic. The management OS virtual adapter would be assigned an IP on the public/real network, have a default GW, register with DNS, etc. Then use the other 2 NICs for a second team, assign a made-up IP address, and use that for LM & cluster. Is there a need to even create a 2nd virtual switch for the 2-NIC team if I do this?

One team, one virtual switch, 2 or 3 virtual network adapters for management OS. Management vNIC required, Live Migration vNIC required, cluster vNIC optional. On gigabit, I would use that third vNIC.

You are THE MAN Eric! Thank you so much, that makes a lot more sense now. I hadn’t thought about multiple virtual network adapters for management OS. I really appreciate your help, and I’m sharing your site/blog with all my co-workers! 🙂

Hi Eric, great article. I just wanted to discuss a concern with you. I have a Hyper-V server with 2 VMs running on it. The NICs on the Hyper-V host connect to switch ports that are configured as access ports on the switch end.

I have created one external virtual switch on Hyper-V and attached both VMs to that same external virtual switch. Only one VM is able to communicate with the outside; the second is not able to communicate with the host, the first VM, or the external network. Both VMs share the same VLAN.

Please let me know: if a switch port is configured as an access port and connected to the external switch, will it allow only one VM to communicate with the external network, even though the other VM is in the same VLAN as the first?

If I need to support two or more VMs on the same VLAN, do I need to configure the switch port as a trunk?

If I share the Hyper-V management port and the VM network on the same VLAN, the access-port configuration works fine: both the management port and the VM are accessible even though the switch port is in access mode.

Please help clear up my confusion.

Hyper-V does not know or care what mode your physical switch is in, so you need someone who is an expert on your physical switch to answer this.

If I were to guess, your switch likely has a security setting that prevents it from accepting frames from multiple MACs on the same port. If you can’t find that setting, then converting to trunk mode might make it work.

Can we support two VMs on the same VLAN connected to an external switch on Hyper-V, with a single physical NIC as the uplink to a switch port configured in access mode? The physical switch port is configured in access mode, and the VMs share a common VLAN.

Please clear up my doubt. Thanks.

I have two NICs: one for the management/VM network and the other for the DMZ network.

If I assign a DMZ IP to the DMZ NIC, it can ping the DMZ gateway. But from the VM guest, I cannot connect to the DMZ gateway.

Any help on this would be appreciated.

Wow. All makes sense now. Best explanation I have read about this topic. Seriously, far better than the official documentation. Thanks for this article.

Hello, excellent article! Quick question: would you happen to have an idea of why my VMs can access shared files between each other but not with the host PC? It was working when my external network was using the onboard Ethernet adapter. I then installed a NIC with multiple ports and changed the ‘External’ adapter to use one of these ports. Since then, I cannot access a shared folder on any VM from my host PC. I have disabled the firewall but had no luck. Any ideas would be greatly appreciated, thanks!

Troubleshoot it as a basic networking problem. Something modified TCP/IP, DNS, etc. The virtual switch alone does not cause behavior like this.

I have bought a dedicated server with a main IP and 8 subnet IPs. How can I create VMs in Hyper-V so that I can assign each IP to a VM? I would like each VM to be accessible from the public internet using its assigned subnet IP. I have been trying to fix this for the past week. I would be grateful if you can help. Thank you 🙂

You do the same thing that you would do with 8 physical computers connected to an unmanaged switch. I don’t have a clear enough view of what you’re doing and what you’ve tried, but I suspect that you’re over-thinking the problem and/or the solution.

Hi Eric,

Awesome article; I wish I had found this 4 hours ago. I fall into the wireless-only physical adapter category. In any case, I’m trying to set up multiple dev VMs, one for each project, and I’m probably overthinking the solution. Several projects are web-based clients (Symfony, jQuery and Angular) with LAPP backends, and all are in GitHub repos. Given this, I thought I needed to share directories with the host for my commits (thinking that isolating VM pairs for dev, debug and test might serve me well).

Any insights are much appreciated.

Cheers,

Steve

If you want to use the network for host/guest sharing, the best way is to ensure that the guests can go directly to the host’s network endpoint without needing to access the physical network. Flat IP network, basically. If that’s not an option, a secondary virtual switch in Internal mode will fix the problem. But you have other options.
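The “flat IP network” condition can be checked with simple subnet arithmetic: if the host’s address falls inside the guest’s own subnet, the guest reaches the host directly, with no router hop. The Python sketch below shows only that arithmetic; the addresses are hypothetical, and `is_on_link` is an illustrative helper, not part of any Hyper-V tooling. Being on-link does not by itself guarantee that frames stay off the physical network; that also depends on the host’s adapter for that subnet sitting on the same virtual switch.

```python
import ipaddress

def is_on_link(guest_cidr: str, host_ip: str) -> bool:
    """Return True if host_ip lies inside the guest's own subnet (no router hop needed)."""
    guest = ipaddress.ip_interface(guest_cidr)
    return ipaddress.ip_address(host_ip) in guest.network

if __name__ == "__main__":
    # Hypothetical addresses for illustration only.
    print(is_on_link("192.168.25.50/24", "192.168.25.10"))  # True: same flat subnet
    print(is_on_link("192.168.25.50/24", "192.168.30.10"))  # False: traffic must be routed
```

When the check fails and flattening the network isn’t an option, the Internal-mode virtual switch mentioned above gives the host and guests a shared subnet of their own.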

Let’s say I have a Windows 10 host OS with a physical Ethernet adapter, and I want to share a Wi-Fi internet connection that’s inside the guest OS, through the host’s physical Ethernet adapter, with another device like a video game console, a Switch or something.

Nothing about that question makes any sense to me. One of us doesn’t understand what the other one is saying.

I have been struggling for quite some time to wrap my head around Hyper-V configuration, networking and its concepts, but you, sir, have a really great way of explaining things. Thank you.

Tomas

Hi Eric,