Hyper-V is no longer the newcomer in the virtualization space. After springing forth from its Virtual Server parent, it’s now had over half a decade to mature into the reliable, enterprise-grade hypervisor that it is today. As with any complex software, Hyper-V is composed of many parts, like the branches and leaves of a great tree. Like a tree, some of those branches form into major trunks that define the tree’s silhouette. Others become a twisted impediment to the proper growth of the larger organism and must be pruned away for its overall well-being. In Hyper-V, one of those latter branches is the pass-through disk.

Many of you are already aware of this fact and have long since moved on. The reasoning behind this post is that there are still a handful of people out there clinging to this old tech, wishing that branches that were solid in 2008 weren’t withering away in 2015. I’d like to talk a few of those people into joining this decade while it’s still young. The other reason is simply that the Internet never forgets, and a lot of literature available on pass-through disks is from the earlier incarnations of Hyper-V. Judging by the number of questions I still see on pass-through disks, I assume that these older articles are confusing the newcomers. So, let’s just go ahead and stick a fork in them all right here and now.

Why Are Pass-Through Disks Still an Option?

A very good question someone might ask is, “If pass-through disks are no good, why can I still use them?” The answer is that there are still good uses for pass-through disks.

1. When You’ve Got a Physical Portable Disk and Want to Quickly Move Files to/from the Virtual Environment

I occasionally use this method and for those few times, I’m really glad it’s available to me. I’ve got a SATA-to-USB converter that works for laptop drives, so I can attach a laptop drive to a host and quickly move things to and from a guest. I don’t use it for more permanent things, such as saving a drive. I’ll show why in the next section.
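For anyone who wants to script that kind of quick attach, here is a minimal sketch. The VM name and disk number are hypothetical placeholders, and it assumes the disk is not in use by the management operating system.

```powershell
# Hypothetical values: substitute your own VM name and disk number
$vmName     = 'svfile1'
$diskNumber = 3            # find yours with Get-Disk

# The disk must be offline on the host before a guest can use it
Set-Disk -Number $diskNumber -IsOffline $true

# Attach the physical disk to the guest's SCSI controller
Add-VMHardDiskDrive -VMName $vmName -ControllerType SCSI -DiskNumber $diskNumber

# ...copy files inside the guest, then detach the disk again
Get-VMHardDiskDrive -VMName $vmName |
    Where-Object { $_.DiskNumber -eq $diskNumber } |
    Remove-VMHardDiskDrive
```

Detaching the disk as soon as the copy is done keeps the pass-through attachment from quietly becoming permanent.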

2. Vendor Requirements

Some vendors refuse to keep up with the times. Their support policies, however ridiculous, often dictate practice. That’s really unfortunate, but life isn’t always fair.

3. … ?

I’ll assume there’s some really good reason I don’t know about. Please, tell us in the comments… after you’ve read and digested the rest of the article. I’ve envisioned a handful of edge cases, but for typical installations the benefit has shrunk to practical non-existence.

Formerly Good (or OK), Now Bad Reasons to Use Pass-Through

Time to update some knowledge!

Performance

In 2008, pass-through disks were notably faster than even fixed VHDs. With each new release of Hyper-V, that difference shrank. In 2012 R2, you can’t see the difference without running a benchmark. Or, to put it in more boardroom-friendly terms, there is no improvement in user experience when using pass-through disks in lieu of VHDX and the risks outweigh any minor benefit that might exist. That said, all else being equal, if you’ve got some extremely performance-sensitive application, pass-through still barely — just barely — outperforms VHDX. However, in the world of computer architecture, it’s usually pretty simple to make something else not be equal. If performance of my storage when on a VHDX could benefit from that infinitesimally small difference in IOPS, I would just add one more spindle to my storage. That would more than cure the IOPS difference. If it’s a latency issue, then we’ve probably identified an application that isn’t well-suited for virtualization anyway.

However, it’s still worth pointing out that, even when VHD was young, most people didn’t need nearly as much disk performance as they thought and adding spindles was an option even in those dark ages.

Connecting Non-Disk Devices to VMs

This isn’t actually the same thing; doing this is still OK, although trouble-ridden. It’s mentioned here because a lot of times this gets confused with pass-through disks. I’ll talk about it again later in this article.

Getting Around the 2TB Limit

VHD files top out at just under 2TB (2,040GB). Pass-through disks were commonly recommended as the solution. I was never particularly a fan of that, as you could just as easily have created multiple VHD files and used volume extension inside the guest. Then you’d have your greater-than-2TB volume along with all the benefits of VHD. Starting in 2012, this is no longer a good recommendation at all. A VHDX file can be up to 64TB in size. That’s still not as large as the 256TB that an NTFS volume can reach, but nothing precludes guest volume extension here, either.
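As a rough illustration, creating a virtual disk larger than the old VHD ceiling takes one cmdlet. The path and the 10TB size below are made-up values for the example.

```powershell
# Hypothetical path; the .vhdx extension is what selects the VHDX format
$path = 'D:\Hyper-V\svfile1\data.vhdx'

# 10TB is beyond what VHD allows but well inside the 64TB VHDX ceiling
New-VHD -Path $path -SizeBytes 10TB -Dynamic

# Confirm the format, type, and maximum size that were created
Get-VHD -Path $path | Select-Object VhdFormat, VhdType, Size
```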

Modern Solutions

Now it’s time to look at what you should be using instead of pass-through.

Standard VHDX

For 99.9% of the installations out there, standard VHDX is the best solution. Whether or not you choose to use Dynamically Expanding or Fixed is a discussion for another time, although you’re free to read our older article on the subject. Whichever you choose, VHDX gives you access to all the powers that Hyper-V has to offer for its virtual disks. The performance cost over pass-through disks is so minimal that almost no one can justify not using VHDX on this basis alone. Standard VHDX should be the default storage solution for all VMs.
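To make that concrete, here is a small sketch of creating a VHDX and handing it to a virtual machine. The VM name, path, and size are placeholders I chose for illustration, not values from any particular deployment.

```powershell
# Hypothetical VM name and file location
$vmName = 'svapp1'
$path   = 'D:\Hyper-V\svapp1\data.vhdx'

# Create a fixed 200GB disk; swap -Fixed for -Dynamic if you prefer
New-VHD -Path $path -SizeBytes 200GB -Fixed

# Attach it to the VM's virtual SCSI controller
Add-VMHardDiskDrive -VMName $vmName -ControllerType SCSI -Path $path

# List the VM's disks to verify the attachment
Get-VMHardDiskDrive -VMName $vmName
```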

Direct Connections to Remote Storage

If the storage you’re connecting to is on an iSCSI or fibre channel target, you don’t need pass-through, even in the event that VHDX isn’t good enough for some reason. Guests can connect directly to iSCSI just like hosts can. If it’s fibre, Hyper-V exposes that to guests through its virtual SAN feature. If you’re going to use virtual SAN within a Hyper-V cluster, remember that setting the masks as tightly as possible is vital for speedy Live Migrations.

This isn’t a wonderful solution. You lose many of the same VSS benefits that you lose with pass-through disks, such as host-level backup, hardware-assisted operations, and Hyper-V Replica. It’s still a better option than pass-through, though. Where I’d be willing to consider an exception on that statement is when the Hyper-V administrator and the guest administrator are different people and you don’t want the guest’s administrator troubleshooting storage. VHDX is still a superior choice in that situation.
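If you do go the in-guest iSCSI route, the connection can be sketched like this. Run it inside the guest; the portal address is a hypothetical placeholder, and I’m assuming the target already grants access to the guest’s initiator name.

```powershell
# Hypothetical address of the iSCSI target portal
$portal = '192.168.50.10'

# The Microsoft iSCSI Initiator service must be running
Set-Service -Name MSiSCSI -StartupType Automatic
Start-Service -Name MSiSCSI

# Register the portal, then connect to any targets not yet connected
New-IscsiTargetPortal -TargetPortalAddress $portal
Get-IscsiTarget |
    Where-Object { -not $_.IsConnected } |
    Connect-IscsiTarget -IsPersistent $true

# The LUN then shows up as an ordinary disk inside the guest
Get-Disk | Where-Object BusType -eq 'iSCSI'
```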

Direct Connection to USB Storage

When you need something on a USB drive that’s more permanent, then the best thing to do is get it off of the USB drive and place it on internal or remote storage. It’s much more reliable, much faster, and much less prone to “Oops, were you using that?” unplugging accidents.

In order to get the data from USB to VHD, you first set up the USB drive just as you would to make it pass-through, but then you use the new VHD wizard to clone it into a virtual disk. The steps are as follows:

- Physically connect the disk to your Hyper-V Server or Windows Server with Hyper-V host.

- Next, you have to take the volume offline.

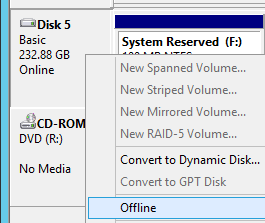

- If it’s a GUI edition, you just open up Disk Management (run “diskmgmt.msc”) and right-click on it and choose Offline.

- If it’s not a GUI edition, you can use PowerShell.

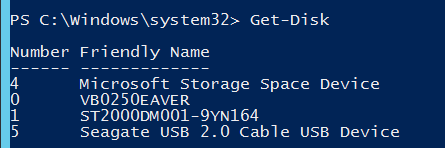

- First, use Get-Disk to determine what number is the USB disk. It’s usually pretty obvious:

- Once you know the number, just pipe it to Set-Disk -IsOffline $true:

- First, use Get-Disk to determine what number is the USB disk. It’s usually pretty obvious:

- Next, you create a new virtual disk using the USB drive as a source.

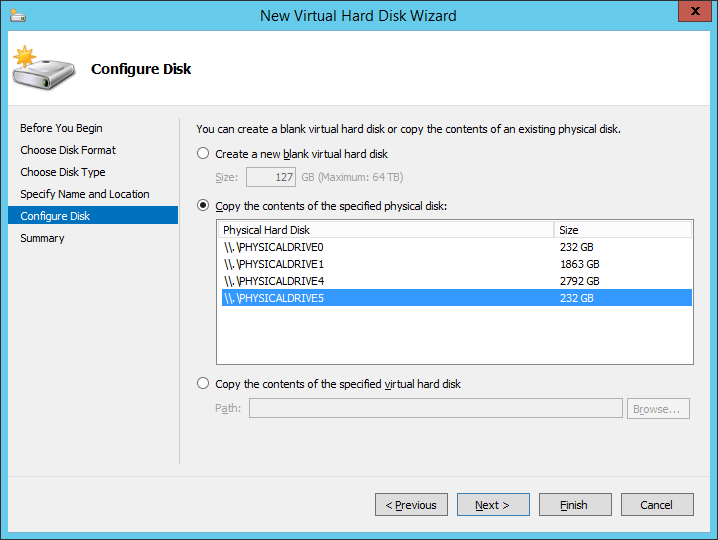

- In the New Virtual Disk wizard, and when you get to the Configure Disk screen, choose the USB drive as a source:

- In PowerShell, just use the Get-Disk cmdlet with -SourceDisk set to the disk number, -Path set to the destination directory with file name, and the -Dynamic switch:

- In the New Virtual Disk wizard, and when you get to the Configure Disk screen, choose the USB drive as a source:

- If it’s a GUI edition, you just open up Disk Management (run “diskmgmt.msc”) and right-click on it and choose Offline.
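Put together, the PowerShell route through those steps looks roughly like the sketch below. The disk number and destination path are hypothetical; check the Get-Disk output on your own host before taking anything offline.

```powershell
# Step 1: find the USB disk; the BusType column usually gives it away
Get-Disk | Where-Object BusType -eq 'USB'

# Hypothetical values for this example
$diskNumber = 3
$path       = 'D:\Hyper-V\Rescue\laptop.vhdx'

# Step 2: take the disk offline in the management operating system
Get-Disk -Number $diskNumber | Set-Disk -IsOffline $true

# Step 3: clone the physical disk into a dynamically expanding VHDX
New-VHD -SourceDisk $diskNumber -Path $path -Dynamic
```

Once the clone finishes, the USB disk can be unplugged and the new file attached to any VM.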

Now you’ve got a VHDX file that you can use just like any other. It’s a perfect copy of the USB disk, and a better one than pretty much any other tool can create. I’ve used this more than a few times to save disks from failed laptops.

Non-Disk Hardware

As previously mentioned, pass-through is mostly about disks, but there are other types of hardware you might want to connect to a guest. The issue with all directly connected hardware is the same: portability is next to impossible, which is anathema for the virtual machine paradigm. For that reason, some things just don’t have solutions. But, a few do.

USB pass-through for devices like scanners and dongles is commonly asked for. Hyper-V cannot truly pass through USB, but there are ways to use USB devices within guests. First, if it’s just a device attached to your client machine and you’re only going to use it in an active remote session, USB pass-through works seamlessly from remote client to guest virtual machine… as long as you’re using recent operating systems. Second, if everything is really recent, you can use Enhanced Session Mode for VMConnect sessions. The latest version of Remote Desktop Connection Manager allows you to connect to VMs using VMConnect technology, so it should allow for enhanced mode as well (I haven’t tested that part myself). The third, and probably more common, method is for when you need a permanent connection. That requires third-party hardware or software solutions. I’ve used network-connected USB hubs in the past to connect dongles with great success.
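Enhanced Session Mode is a host-level setting, and it may be switched off. A quick sketch for checking and enabling it follows; this assumes a 2012 R2 or later host and a guest operating system that supports enhanced sessions.

```powershell
# See whether Enhanced Session Mode is currently allowed on this host
Get-VMHost | Select-Object Name, EnableEnhancedSessionMode

# Allow it; device redirection is then chosen in the VMConnect dialog
Set-VMHost -EnableEnhancedSessionMode $true
```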

Another is fibre channel devices such as tape drives. The first thing I need to say on this is that even though it works, this is not supported. Just use the virtual SAN feature linked above, and you can make many fibre channel devices connect directly to guests in Hyper-V.
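For reference, setting up a virtual SAN and giving a guest a virtual fibre channel adapter can be sketched as follows. The SAN name, VM name, and world wide names are placeholders only, and I’m assuming NPIV-capable HBAs and a guest that is powered off while the adapter is added.

```powershell
# List the host's HBA ports to find their real node and port names
Get-InitiatorPort

# Placeholder WWNN/WWPN values; use the ones reported for your HBA
New-VMSan -Name 'Production' `
    -WorldWideNodeName 'C003FF0000FFFF00' `
    -WorldWidePortName 'C003FF5778E50002'

# Give the (hypothetical) guest a virtual fibre channel adapter on that SAN
Add-VMFibreChannelHba -VMName 'svbackup1' -SanName 'Production'
```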

The Risks of Pass-through

So, you know what you can do instead of pass-through. But the really important things to understand are the problems that pass-through disks present.

- No portability. VHDX files can be copied anywhere that they’re needed. This makes migrations a snap, even in old Hyper-V versions that don’t support Shared Nothing Live Migration. There are a lot of other benefits to this portability too, such as manual backups or sharing virtual disks with other people for development or debugging purposes.

- Host backups are a problem. The host computer only brokers the attachment between the virtual machine and the disk. It can’t see the contents. So, if you use a hypervisor-based backup application like Altaro, your pass-through disks aren’t going to be backed up. You’ll be required to use an agent-based system inside the guest.

- Guest backups are a problem. The nice thing about host-based backups is how coordinated everything is. The VM backups run sequentially without interruption, so you don’t have to do a lot of fancy scheduling to try to balance the detrimental effects of too many VMs backing up at once or spacing them out so far that there is a lot of dead-air time when it would be more efficient to be performing a backup. When you’ve got a system that performs smart backup scheduling, like Altaro does, it’s even better. On top of the scheduling benefits of host-level backup, any hardware acceleration provided by the storage manufacturer can be put into play, which means that you can get some very fast backups. Unless you decide to use an in-guest backup agent just so your pass-through disks are covered. Then you get none of these things. It’s safe to say that if you have a hardware accelerator available to assist with host-level backups and you forfeit it, that will cost you more performance in a night than the pass-through disk will add to daytime operations throughout its lifetime.

- Forget Hyper-V Replica. HVR can’t see pass-through disks. ’nuff said.

- Snapshots/checkpoints don’t work with pass-through disks. Just like backup and Replica, the snapshot/checkpoint mechanism can’t see the contents of pass-through disks. I’ve heard a few people using this as an excuse to use pass-through disks because they’re terrified of what will happen if someone takes a snapshot. Frankly, I’d be more terrified that this is the length someone is willing to go to in order to prevent snapshots from being taken. It’s just plain poor stewardship. Besides, I’m fairly certain that the system can still snapshot the guest, it just doesn’t get the contents of pass-through disks. If someone inadvertently takes a snapshot, that could make a merge a tricky situation.

- Live Migration can be problematic. For starters, you can forget about Shared Nothing Live Migration. Intra-cluster Live Migration usually works, but sometimes it hiccups because the source host has to take the pass-through disk offline and the destination host has to bring it immediately online. There’s a sort of “limbo” period in-between where neither one of them has it. I don’t know that I’ve ever heard of a destination system not getting the hand-off, but I have heard of some taking so long to pick it up that the guest blue screened. In any other Live Migration scenario, if the destination host has any problems with the hand-off, the migration fails and the guest continues running at the source without interruption. With pass-through disks, there’s no such luck.

- Lack of support. Microsoft will support your pass-through configuration, but that’s about it. Community forum posts are likely to go unanswered, except for suggestions that you convert to VHDX. Some software vendors may not support their applications on pass-through disks, especially if they know about the potential disconnect issue. For access to the widest support base, VHDX is the way to go.

- Lack of tools. We have all kinds of nifty things we can do with VHDX files using PowerShell and other tools. You can even directly mount them in Windows Explorer. There’s really nothing out there for pass-through disks. It seems like a small issue, until there’s a problem with a VM and you need to perform a task on the disk and discover that there’s really no good way to do it.
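To give a taste of that tooling, here are a few of the things you can do to a VHDX from the host. The paths and the new size are hypothetical, and the sketch assumes the file is not in use by a running VM.

```powershell
# Hypothetical file
$path = 'D:\Hyper-V\svapp1\data.vhdx'

# Inspect format, type, current file size, and maximum size
Get-VHD -Path $path

# Mount it read-only on the host to pull files out, then release it
Mount-VHD -Path $path -ReadOnly
Dismount-VHD -Path $path

# Grow the virtual disk to a new maximum size
Resize-VHD -Path $path -SizeBytes 500GB

# Write a fixed-size copy of a dynamically expanding disk
Convert-VHD -Path $path -DestinationPath 'D:\Hyper-V\svapp1\data-fixed.vhdx' -VHDType Fixed
```

None of these have an equivalent for a pass-through disk.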

So, if you’re still using pass-through disks, the time has come. Use the wizard and convert them to VHDX. If you’re starting to plan a new Hyper-V deployment and wondering if pass-through is a viable option, it isn’t. Begin your virtualization journey with VHDX. It’s worth it.
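If you’d rather script the conversion than click through the wizard, the sketch below shows one way it could go. The VM name, disk number, and path are hypothetical, and it relies on my assumption that DiskNumber is only populated for pass-through attachments. Test it on something unimportant first.

```powershell
# Inventory: drives with a DiskNumber are physical (pass-through) disks
Get-VM | Get-VMHardDiskDrive |
    Where-Object { $null -ne $_.DiskNumber } |
    Select-Object VMName, ControllerType, ControllerNumber, ControllerLocation, DiskNumber

# Hypothetical VM, disk number, and destination for the conversion
$vmName     = 'svold1'
$diskNumber = 4
$path       = 'D:\Hyper-V\svold1\data.vhdx'

Stop-VM -Name $vmName

# Remember where the disk was attached, then detach it
$drive = Get-VMHardDiskDrive -VMName $vmName |
    Where-Object { $_.DiskNumber -eq $diskNumber }
$drive | Remove-VMHardDiskDrive

# Clone the physical disk into a VHDX
New-VHD -SourceDisk $diskNumber -Path $path -Dynamic

# Attach the VHDX in the same controller position and start the guest
Add-VMHardDiskDrive -VMName $vmName -ControllerType $drive.ControllerType `
    -ControllerNumber $drive.ControllerNumber `
    -ControllerLocation $drive.ControllerLocation -Path $path
Start-VM -Name $vmName
```

Reusing the original controller position keeps the disk where the guest expects to find it.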

59 thoughts on "The Hyper-V Pass-Through Disk: Why its time has Passed"

Eric,

I would like to take advantage of the storage tiers of storage space for a Windows Server 2012 R2 standard server running as a guest on a Hyper-V Core. I don’t see how I can do that without resorting to pass-thru disks. If I can have both tiered storage (hard disks and SSD) and VHDX, please let me know how. Thanks in advance.

Regards,

Patrick

Hyper-V Server does not support Storage Spaces, presumably to prevent people from inappropriately standing up file servers without purchasing a Windows license. If this is technology that you want, my preferred choice would be to use Windows Server in Core mode as the management operating system. Since you have a 2012 R2 Standard guest, you’re already licensed for it, provided that you don’t allow anything except local virtual machines to directly access the Storage Space.

Hi Eric, very nice article. It reminded me of the great iSCSI vs NFS virtualization debate.

With that said, I must ask if you’ve given any thought to running an enterprise NAS storage with Hyper-V? I’m curious how this would compare.

I am actually looking into an enterprise NAS solution now. I’m not sure what I can say about it because of the hospital’s requirements around non-disclosure of configuration so I think I’ll leave it there. Preliminary results are promising. It offers native SMB 3 so we’ll be using it as though it were just a gigantic scale-out file server.

Hi Eric, I am going to deploy a Hyper-V environment as a single cluster of 24 hosts with HA and DR (covering 2 geographical locations). After implementing Hyper-V 2012 R2, I need to migrate VMs from VMware that currently use multiple RDMs. I would like to know how VHDX files can substitute for RDMs, and whether we can suggest VHDX disks instead of pass-through disks. Also, what is the best practical recommendation for assigning SAN LUNs to a Hyper-V 2012 R2 host, both size-wise (like 10TB or 20TB LUNs) and number-wise (like 50 or more storage LUNs on a host)? If I assign 50 LUNs to a Hyper-V host, how will they be managed? What happens after the 26 letters of the alphabet run out? I also heard that CSV disks are not supported for SQL clustering at the VM level, so it is mandatory to assign pass-through disks in that case. Is that right?

Pass-through is the Hyper-V equivalent of RDM in VMware. If you are intent on duplicating your VMware environment in Hyper-V, then pass-through is the answer. I would not duplicate the environment, if it were me, but that’s not my decision to make.

There are no recommendations around LUN assignment. Assign as many or as few as you like. The nice thing about using a few large LUNs is that you need less overall slack space. The nice thing about many smaller LUNs is that the loss of one has reduced impact. Cluster Shared Volumes use volume identifiers that you will mask behind friendly names, so there are no drive letters in use.

If you are creating a true guest cluster in Hyper-V in which you require the use of a shared VHDX, then it’s not currently a wonderful technology although it is still better than pass-through. I’m not certain where you heard that CSV doesn’t support it, though. I don’t know any other way to create a shared VHDX. 2016 introduces a much superior solution for shared VHDX, if you’re willing to wait a month. All of that said, SQL can now operate from SMB 3 storage and that would be better than either pass-through or shared VHDX. That particular line of questioning is better handled by SQL experts.

Hi Eric, thanks for your reply. I am not going to duplicate the environment. I will follow Microsoft’s best practices for Hyper-V, but in certain places there is no recommendation from MS. Why are RDMs/pass-through necessary? Is it because of faster performance than other disk types like VHD/VHDX? Is there any IOPS performance comparison chart between pass-through disks and VHDX disks?

I don’t know enough about the applications of RDM in VMware to speak intelligently to that.

I have seen a handful of attempts to gather performance metrics of pass-through against VHDX in more recent versions, but they are not conclusive. With the variety of hardware quality and acceleration features available, pass-through is sometimes a bit faster, sometimes a lot slower. Pass-through is never a lot faster, though. If what you’re virtualizing is fit for virtualization at all, pass-through won’t speed it up enough to be worth the trade-offs.

The lone exception might be when you need to share storage between virtualized cluster members, but only so far as 2012 R2. Shared VHDX in 2012 R2 has a lot of the same limitations as pass-through.

One use for pass-through disks is for converged virtualization.

On VMWare, it’s fairly common to set up a storage VM that has direct access to disks (either via RDMs or having a controller passed through to the VM directly), then having that VM export iSCSI or NFS to the same host the storage VM is running on. This essentially allowed you to use software defined storage and skip the traditional hardware RAID controller. VMWare’s VSAN came along a while later as an option, but it’s expensive, and requires a minimum of 3 hosts. With a storage VM based on QuadStor (one of my favorite choices), it’s easy to set up a 2 node share-nothing storage cluster with no cost other than a decently fast interconnect between nodes (10Gbps, for example).

It was (and still is as of ESXi 6.0 Update 2) impossible to have the storage VM put its disks into virtual disks (VMDKs), because ESXi will have a small fit as soon as you put a VM on the disks the storage VM provides. It appears to be something to do with the nested virtual disks causing ESXi to go into a death spiral. The host stops responding completely in short order.

Looking towards Hyper-V, I would be inclined to use pass-through disks or a pass-through storage controller in the same manner as I’ve done in the past with VMWare. Maybe Hyper-V doesn’t suffer the same issue as ESXi with the nesting? I haven’t tested it. However, it would make more sense to use pass-through disks or controller for a storage VM anyway, so the storage VM’s OS can get direct access to the disks (this is important for file systems like ZFS and for disk failure prediction).

So there you have it – another use for direct disk access *grin*.

I haven’t operated many VMware environments, certainly not enough to comment on that part of what you said. I never did anything like that at all. That’s not a value or judgment statement.

As I think through all of the ways that I could configure storage for Hyper-V, I don’t see anywhere that this sort of configuration would make sense over some other alternative. If you were trying to use a virtual machine to fool Hyper-V into using a loopback network-based storage mechanism, then the “best” configuration for the VM would be to use some sort of SMB 3 technique which still wouldn’t require or benefit from pass-through. But, I may not be entirely envisioning the use case that you’re talking about. I don’t want my guests to be aware of things like disk failure predictions because that sort of thing should be a host problem. I’m thinking that what you’re presenting here is one of those edge cases. It’s not normative in Hyper-V.

Hello Eric,

thanks for all the many great articles about the usage of Hyper-V in daily life. After watching quite a few trainings and reading a lot of material about it, I mostly miss information on what some of the things really mean when choosing a, b or c.

We are a creative company and run an SBS2011, first on dedicated hardware and later virtualized on Hyper-V. Now the time has come to replace it. We got new hardware and plan to set it up completely new. No migration of AD or Exchange. This will give us a fresh start.

Our plan is to run Windows Server 2016 Standard with the Hyper-V role. Then, 2 VMs: VM1 = ADDC, DNS, DHCP and File Server, VM2 = Exchange 2016

For the files we use frequently, we got a 1TB NVMe SSD; all other data is on a hardware RAID5 (4 x 4 TB HDDs). So if I understand it right, I create VHDXs of the maximum size, format them with NTFS and create the shares.

I’m just a bit unsure whether using a 10 TB VHDX is fine. With Altaro Backup we backed up only the VMs in the past. Now, we would include the VHDX with all the data as well. Is that correct?

I don’t think that I clearly understand your plan, but this does not sound like the approach that I would take.

I would use tiered Storage Spaces. Let the system automatically manage all of your storage in a single pool.

Then just create VHDXs for the virtual machines as normal. I do not see a reason to create one massive all-encompassing VHDX.
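A rough sketch of what a tiered space could look like in the management operating system follows. The pool, tier, and virtual disk names and the tier sizes are all hypothetical. It also assumes that the disks are presented to Windows individually and report their media type correctly, which is often not the case behind a hardware RAID controller.

```powershell
# Disks that are eligible to join a pool
$disks = Get-PhysicalDisk -CanPool $true

# A standalone server normally has a single storage subsystem
$subsystem = Get-StorageSubSystem | Select-Object -First 1

New-StoragePool -FriendlyName 'Pool1' `
    -StorageSubSystemFriendlyName $subsystem.FriendlyName `
    -PhysicalDisks $disks

# One tier per media type
$ssd = New-StorageTier -StoragePoolFriendlyName 'Pool1' -FriendlyName 'SSDTier' -MediaType SSD
$hdd = New-StorageTier -StoragePoolFriendlyName 'Pool1' -FriendlyName 'HDDTier' -MediaType HDD

# A tiered virtual disk; sizes here are placeholders
New-VirtualDisk -StoragePoolFriendlyName 'Pool1' -FriendlyName 'VMStore' `
    -StorageTiers $ssd, $hdd -StorageTierSizes 800GB, 8TB `
    -ResiliencySettingName Simple
```

The resulting virtual disk gets initialized and formatted like any other, and the VHDX files for the virtual machines live on that volume.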