
Table of contents

- What are Spectre and Meltdown?

- What are the Risks of Running without Patches?

- Should I Patch My Hyper-V Hosts, Guests, or Both?

- What Happens if a Hyper-V VM Updates Before Its Host?

- What If No BIOS Update is Available?

- What About Non-OS Software?

- Quality Control Problems with Spectre and Meltdown Patches

- How to Ensure that You Receive Microsoft’s Spectre and Meltdown Patches

- How to Apply, Configure, and Verify the Spectre and Meltdown Fixes for Hyper-V and Windows

- The Performance Impact of the Spectre and Meltdown Patches on a Hyper-V Host

- Performance Benchmarks

- Conclusion

- Tell Me Your Experiences

The Spectre and Meltdown vulnerabilities have brought a fair amount of panic and havoc to the IT industry, and for good reason. Hardware manufacturers and operating system authors have been issuing microcode updates and patches in a hurry. As administrators, we need to concern ourselves with three things: the risks of running unpatched systems, the performance hit from patching, and quality control problems with the patches. If you’re skimming, then please pay attention to the section on ensuring that you get the update — not everyone will automatically receive the patches. Below I’ll run down what you need to know to ensure you’re protected plus a benchmark analysis of the performance impact of the recently released update patches.

What are Spectre and Meltdown?

Both Spectre and Meltdown are hardware attacks. Your operating system or hypervisor choice does not affect your vulnerability. It might affect fix availability.

Spectre is a category of attack that exploits a CPU’s “branch prediction” optimizations. A CPU looks at an instruction series that contains a decision point (if condition x then continue along path a, else jump to b) and guesses in advance whether it will follow the “else” code branch or continue along without deviation. The Wikipedia article that I linked contains further links to more details for those interested. Spectre encompasses multiple attack vectors because the predictor has more than one weakness. All of the ones that I’m aware of involve fooling the CPU into retrieving data from a memory location other than the code’s intended target. Spectre affects almost every computing device in existence. Fixes will affect CPU performance out of necessity.
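To make the "guesses in advance" idea a little more concrete, here is a toy sketch of a two-bit saturating-counter branch predictor in Python. It is purely illustrative: the class and function names are made up for this example, real CPUs use far more elaborate predictors, and nothing here demonstrates an actual Spectre attack. It only shows that a predictor commits to a guess before the branch outcome is known and is sometimes wrong.

```python
class TwoBitPredictor:
    """Toy two-bit saturating counter (hypothetical, for illustration only)."""

    def __init__(self):
        # Counter values 0-1 predict "not taken"; 2-3 predict "taken".
        # Start at 2, i.e. weakly predicting "taken".
        self.counter = 2

    def predict(self):
        return self.counter >= 2

    def update(self, taken):
        # Move one step toward the observed outcome, saturating at 0 and 3.
        if taken:
            self.counter = min(3, self.counter + 1)
        else:
            self.counter = max(0, self.counter - 1)


def count_mispredictions(outcomes):
    """Feed a sequence of real branch outcomes and count the wrong guesses."""
    predictor = TwoBitPredictor()
    misses = 0
    for taken in outcomes:
        if predictor.predict() != taken:
            misses += 1
        predictor.update(taken)
    return misses


# A loop branch that is taken nine times and then falls through once.
loop_pattern = [True] * 9 + [False]
print(count_mispredictions(loop_pattern))
```

The wrong guesses are the interesting part: work that the CPU performs speculatively on a mispredicted path is what Spectre-class attacks try to observe.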

Meltdown belongs to the Spectre vulnerability family. It affects Intel and some ARM processors. AMD processors appear to be immune (AMD claims immunity; I do not know of any verified tests with contrary results). As with Spectre, Meltdown exploits target the CPU’s optimization capabilities. You can read the linked Wikipedia article for more information. For a less technical description, Luke posted a great analogy on our MSP blog. Whether you read more or not, you should assume that any running process can read the memory of any other running process. The fix for this problem also includes a performance hit. That hit is potentially worse than Spectre’s — an estimated 5 percent to as much as 30 percent. I’ll revisit that later.

What are the Risks of Running without Patches?

Properly addressing Spectre and Meltdown will require more administrative effort than most other problems. Admins in large and complex environments will need to plan deployments. With unpredictable performance impacts, some will want to wait as long as possible before doing anything. The more avoidant of us will want to just ignore it as much as possible and hope that it just sorts itself out. Eventually, we’ll all have new chips, new operating systems, and redesigned applications that won’t be susceptible to these problems. That won’t be ubiquitous for some time, though. We need more immediate solutions.

In one sense, an unpatched system will be fairly easy to assault. Attacks can be carried out via simple Javascript. Unprivileged user code can access privileged kernel memory. The processor cache is an open book for many of these vulnerabilities. It looks to me as though some attack vectors could be used to read essentially any location in memory, although some other accounts dispute that. These are hardware problems, so in the hypervisor world, they transcend the normal boundaries between virtual machines as well as the management operating system. These threats are serious.

However, they cannot be exploited remotely. Attacking code must be executed directly on the target system. That separates the Spectre and Meltdown vulnerabilities from other nefarious vectors, such as Heartbleed. Furthermore, Spectre-class attacks are a bit of a gamble from the attacker’s side. Even if they could read any location in memory, it will be mostly without context. So, something may look like a password, but a password to what? The clues might be alongside the password or they might not.

So, the risk to your systems is extremely high, but the effort-to-reward ratio for an attacker is also high. I do know of one concern: LSASS keeps the passwords for all logged-on users in memory, in clear text. You should assume that a successful Spectre or Meltdown attack might be able to access those passwords. Also, attacks can come from Javascript in compromised websites. You shouldn’t be browsing from a server at all, so that could help. But what about remote desktop sessions? Do you keep those users off of the general Internet? If not, then any of them could unwittingly risk everyone else on the same host.

I cannot outline your risk profile for you, but I would counsel you to work from the assumption that a compromise of an unpatched system is inevitable.

Should I Patch My Hyper-V Hosts, Guests, or Both?

One of my primary research goals was to determine the effects of only applying OS patches, only applying firmware updates, and doing both. I did not find full, definitive answers. However, it does appear that some kernel protections only require OS updates. Others indicated that they would require firmware updates, but they did not make it clear whether a firmware update alone would address the problem or whether a combination of firmware and OS updates was necessary. However, there is no question that the best protection comes only from updating both your hardware and your software.

Also, the guests’ kernels must be made aware of the changes to the physical layer in order to fully utilize their mitigation techniques, which can only be done if both the host and the guest are updated.

Therefore, for the fullest protection, you must:

- Patch the host

- Perform host configuration changes

- Update the firmware on the host

- Patch the guests

- Cold boot the guests

Also, be aware that “host” means any Hyper-V host. Windows Server, Hyper-V Server, or desktop Windows — patch them all.

We’ll go over the how-to in a bit. Let’s knock out some other questions first.

What Happens if a Hyper-V VM Updates Before Its Host?

Well, the host isn’t patched, so that’s a problem. Aside from that, nothing bad will happen (generally speaking — outliers always exist). But the guest will not be aware of the changed capabilities of the host if it is not cold booted after the host is updated. Until all of the above has been done, the guest will not have the fullest protection.

What If No BIOS Update is Available?

If your hardware does not have an available update, then you still need to apply all software patches. That will mitigate several kernel attacks.

If you are running Windows/Hyper-V Server 2012 R2 or earlier as your management operating system, I’m sad to say that you cannot do much else. Practice defense-in-depth: if no attacker can access your systems, then they cannot execute an attack. Remember that the patches are still applicable and will help — but don’t lose sight of the hardware-based nature of the problems. If possible, upgrade to 2016.

For 2016, you can restrict which processors and cores the management operating system and guests use. That helps to confine attacks. The relevant features are not well-documented. I had planned on performing some research and writing an article on them, but none of that has happened yet. In the meantime, you can read Microsoft’s article on using these features to mitigate Meltdown and Spectre attacks on systems that do not have an available hardware update. It contains links to instructions on using those features.

What About Non-OS Software?

Software plays an enormous role in all of this. Application developers can take precautions to protect their cached information. Interpreters and compilers can implement their own mitigations. The January updates included patches for IE and Edge to help block Spectre and Meltdown attacks via their Javascript interpreter. Microsoft has released an update to their C++ compiler that will help prevent Spectre attacks.

Expect your software vendors to be releasing updates. Remember that the nature of the attacks means that otherwise benign software can now be an attack vector.

Quality Control Problems with Spectre and Meltdown Patches

I am aware of three problems in the deployment pipeline for the Spectre and Meltdown fixes from Microsoft.

- Older AMD processors (Athlon series) reacted very badly to the initial patch group that contained the Spectre and Meltdown fix. In response, Microsoft stopped offering them while working on a fix. I have a Turion x2 system with Hyper-V that was offered the patch after that, so I believe that Microsoft has now issued a new fix.

- Many antivirus applications caused blue screen errors after the fixes were applied. To prevent that, Microsoft implemented a technique that will ensure that no system will receive the update until it has been deliberately flagged as being ready. Pay special attention, as that affects every system!

- Some systems simply will not take the update. I wish that I could give you some solid guidance on what to do. I do not know of any tips or tricks particular to these patches. I had one installation problem that I was able to rectify by disabling the “Give me updates for other Microsoft products when I update Windows” option. However, that particular system seemed to have problems of its own apart from the patches, so I cannot say for certain if it was anything special with this patch. You may need to open a ticket with Microsoft support.

How to Ensure that You Receive Microsoft’s Spectre and Meltdown Patches

These patches were deployed in the January 2018 security patch bundle. My system showed it as “2018-01 Security Monthly Rollup for OS Name” with a follow-up Quality Rollup. That deployment vehicle might lead you to believe that just running Windows Update will fix everything. However, that’s not necessarily the case. In order to avoid blue screens caused by incompatible antivirus applications, the updater looks for a particular registry key. If it doesn’t exist, then the updater assumes that the local antivirus is not prepared and will decline to offer the update. Since Microsoft now issues security patches in cumulative roll-up packages, it will decline every subsequent security update as well.

If Your System Runs Antivirus

In this case, “system” means host or guest. If you run an antivirus application, it is up to the antivirus program to update the registry key once it has received an update known to be compatible with the Spectre/Meltdown fixes. If you have an antivirus package and the updater will not offer the January 2018 security pack, contact your antivirus vendor. Do not attempt to force the update. Switch antivirus vendors if you must. Your antivirus situation needs to be squared away before you can move forward.

If Your System Does Not Run Antivirus

Personally, I prefer to run antivirus on my Hyper-V host. I recommend that everyone else run antivirus on their Hyper-V hosts. I’m aware that this is a debate topic and I’m aware of the counter-arguments. I’ll save that for another day.

If you do not run antivirus on your Hyper-V host (or any Windows system), then you will never receive the January 2018 security roll-up or any security roll-up thereafter without taking manual action. Microsoft has left the task of updating the related registry key to the antivirus vendors. No antivirus, no registry update. No registry update, no security patches.

In your system’s registry, navigate to HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion. If it doesn’t already exist, create a new key named “QualityCompat”. Inside that key, create a new DWORD key-value pair named “cadca5fe-87d3-4b96-b7fb-a231484277cc”. Leave it at its default value of 0x0. You do not need to reboot. The updates will be offered on the next run of Windows Update (pursuant to any applicable group policy/WSUS approvals).
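If you need to make this change on more than a couple of systems, one option is to generate a .reg file and import it. The Python sketch below only builds the file's text; the function name `build_qualitycompat_reg` is hypothetical, and how you distribute and import the file (regedit, group policy, a management tool) is left as an assumption about your environment. Test on a single machine first.

```python
# Key path and value name as given in Microsoft's guidance for the
# antivirus compatibility flag.
KEY_PATH = r"HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows\CurrentVersion\QualityCompat"
VALUE_NAME = "cadca5fe-87d3-4b96-b7fb-a231484277cc"


def build_qualitycompat_reg():
    """Return the text of a .reg file that creates the key and DWORD value."""
    lines = [
        "Windows Registry Editor Version 5.00",
        "",
        "[" + KEY_PATH + "]",
        '"' + VALUE_NAME + '"=dword:00000000',  # DWORD data of 0x0
        "",
    ]
    # .reg files conventionally use Windows (CRLF) line endings.
    return "\r\n".join(lines)


if __name__ == "__main__":
    print(build_qualitycompat_reg())
```

Saving that output as, for example, `qualitycompat.reg` (an arbitrary file name) and importing it from an elevated session should have the same effect as the manual steps above.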

For more information, you can read Microsoft’s official documentation: https://support.microsoft.com/en-us/help/4072699/january-3-2018-windows-security-updates-and-antivirus-software.

How to Apply, Configure, and Verify the Spectre and Meltdown Fixes for Hyper-V and Windows

Microsoft has already published solid walk-throughs:

- For Hyper-V hosts and non-virtualized systems: https://support.microsoft.com/en-us/help/4072698/windows-server-guidance-to-protect-against-the-speculative-execution

- For Hyper-V guests: https://docs.microsoft.com/en-us/virtualization/hyper-v-on-windows/CVE-2017-5715-and-hyper-v-vms

Things to absolutely not miss in all of that:

- Without the post-install registry changes, your Hyper-V hosts will not have the strongest protection

- Depending on your hypervisor and guest configurations, you may need to configure additional registry settings

- Each virtual machine must be COLD-booted after the host has been updated! A simple restart of the guest OS will not be sufficient.

- Live Migrations between patched/unpatched hosts will likely fail. Keep this in mind when you’re attempting to update clusters.

After you’ve applied all of the patches, you can verify the system’s level of protection. The linked articles include instructions on acquiring and using a special PowerShell module that Microsoft built just for that purpose. However, several readers pointed me to a more thorough tool named InSpectre, published by Gibson Research Corporation.

The Performance Impact of the Spectre and Meltdown Patches on a Hyper-V Host

We’ve all seen the estimations that the Meltdown patch might affect performance in the range of 5 to 30 percent. What we haven’t seen is a reliable data set indicating what happens in a real-world environment. The patches are too new for us to have any meaningful levels of historical data to show. I can tell you that my usage trends are not showing any increase, but that is anecdotal information at best. I also know of another installation (using a radically different hardware, hypervisor, and software set) that has experienced crippling slowdowns.

So, for the time being, we’re going to need to take some benchmarks. For anyone who missed the not-so-subtle point: performance bottlenecks found by benchmarks do not automatically translate to performance bottlenecks in production.

Impact on Storage

From what I’ve seen so far, these patches have the greatest detriment on storage performance. Since storage already tends to be the slowest part of our systems, it makes sense that we’d find the greatest impact there.

First up is Storage Spaces Direct. Ben Thomas has already provided some benchmarks that show a marked slowdown. Pay close attention to the differences, as they are substantial. However, most of us would be more than happy with the “slow” number of 1.3 million IOPS. Those numbers are difficult to read in comparison with builds that would be normative in a small or medium-sized business.

I also found an article detailing the storage performance impact on an Ubuntu system using ZFS in a VMware client. This helps reinforce the point that these problems are not caused by or related to your choice of hypervisor or operating system.

I don’t have an S2D environment of my own to do any comparison. However, I will perform some storage benchmarking on a standard Hyper-V build with local storage and remote Storage Spaces (not S2D) storage.

Performance Benchmarks

I ran a number of performance checks to try to help predict the impact of these updates.

DISCLAIMER: The below benchmarks are a result of these stress tests running on this particular set of hardware. The performance impacts discussed below are not consistent across all hardware platforms and your mileage may vary depending on your own situation. The below exercise is an illustration of what the measured performance impact is on this hardware, and acts as a guide for showing you how to do so in your own environments if desired.

The Build Environment and Methodology

I used the build outlined in our eBook on building a Hyper-V cluster for under $2500. To save you a click, the CPUs are Intel Xeon E3-1225 (Sandy Bridge). We should expect to see some of the more “significant” impacts that Microsoft and Intel warn about.

The tests were performed on one of the compute nodes. Some of the storage tests involved the storage system.

The host runs Windows Server 2016. It has multiple guests with multiple operating systems. I chose three representatives: 1 Windows 2012 R2, 1 Windows 10, and 1 Windows Server 2016. All run GUI Windows because Passmark won’t generate a full CPU report under Core.

None of these systems have the patch yet.

I took the following steps:

- Collected CPU and storage benchmarks from the host, a Windows 10 guest, the WS2012R2 guest, and the WS2016 guest. Tests were run separately.

- Ran a CPU stress utility on some guests while testing others.

- Ran a storage stress utility on some guests while testing others.

- Applied available firmware updates to the host and the OS patch to the management OS and all guests.

- Repeated the first three steps for comparison purposes.

I used these tools:

- Passmark for benchmarking

- Prime95 x64 for CPU load: https://www.mersenne.org/download/#download

- DiskSpd.exe for disk load: https://gallery.technet.microsoft.com/DiskSpd-a-robust-storage-6cd2f223

Testing Phase 1: The Pre-Patch Raw Results

To begin, I ran benchmarks against the host and some of the guests separately. This establishes a baseline.

Note: The below numbers are simply scores provided by the benchmarking application. Higher numbers mean better performance.

Pre-Patch CPU Benchmark

| Test | Host | Windows 10 Guest | 2012 R2 Guest | 2016 Guest |
|---|---|---|---|---|
| CPU Mark | 7404 | 4198 | 4055 | 4197 |
| Integer Math | 8226 | 4209 | 4077 | 4212 |
| Prime Numbers | 33 | 24 | 21 | 24 |
| Compression | 8208 | 4084 | 4028 | 4103 |
| Physics | 492 | 303 | 295 | 315 |
| CPU Single Threaded | 1905 | 1882 | 1847 | 1886 |
| Floating Point Math | 7371 | 3738 | 3646 | 3522 |
| Extended Instructions (SSE) | 283 | 144 | 138 | 131 |
| Encryption | 1260 | 632 | 592 | 631 |
| Sorting | 5165 | 2590 | 2541 | 2608 |

Pre-Patch Disk Benchmark

| Test | Host (internal) | Windows 10 Guest (Remote SS) | 2012 R2 Guest (internal) | 2016 Guest (Remote SS) |
|---|---|---|---|---|
| Disk Mark | 416 | 505 | 355 | 629 |
| Disk Sequential Read | 55 | 124 | 44 | 153 |
| Disk Random Seek +RW | 6 | 4 | 6 | 4 |
| Disk Sequential Write | 53 | 11 | 47 | 15 |

Phase 1 Analysis

The primary purpose of phase 1 was to establish a performance baseline. The numbers themselves mean: “the systems can do this when not under load”.

The host has four physical cores. Each virtual machine has two vCPUs. As expected, each VM’s CPU performance comes out to a little over half of the host’s metrics.
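As a quick sanity check on the "about half" observation, the guest-to-host ratio can be computed directly from the CPU Mark scores in the phase 1 table. This is only a small arithmetic sketch; the variable and function names are made up for illustration.

```python
# CPU Mark scores copied from the pre-patch CPU benchmark table.
host_cpu_mark = 7404
guest_cpu_marks = {
    "Windows 10": 4198,
    "2012 R2": 4055,
    "2016": 4197,
}


def ratio_to_host(guest_score, host_score):
    """Return the guest's score as a fraction of the host's score."""
    return guest_score / host_score


ratios = {
    name: round(ratio_to_host(score, host_cpu_mark), 2)
    for name, score in guest_cpu_marks.items()
}

for name, ratio in ratios.items():
    print(f"{name}: {ratio:.2f} of the host CPU Mark")
```

Each two-vCPU guest lands between 0.55 and 0.57 of the four-core host's score, which fits the expectation.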

Testing Phase 2: Pre-Patch Under CPU Load

Next, I needed to have some idea of how a load affects performance. This system serves no real-world purpose, so I needed to fake a load.

Hyper-V is architected so that loads in the management operating system take priority over the guests (I didn’t always know that, in case you find something that I wrote to the contrary). Therefore, I didn’t feel that simulating a load in the management operating system would provide any value. It will drown out the guests and that’s that.

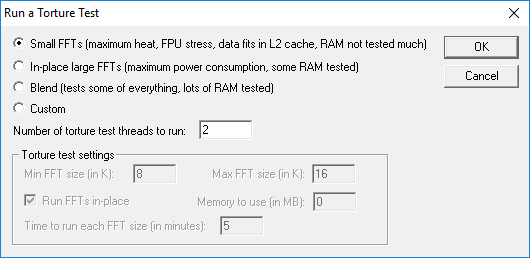

To test, I ran Prime95 on four virtual machines. I set the torture test to use small FFTs because we’re interested in CPU burn, not memory:

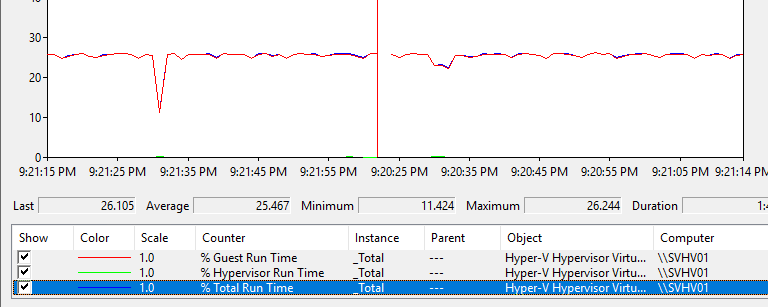

For whatever reason, that only appeared to run the CPUs up to about 25% actual usage.

The guests all believe that they’re burning full-bore, so this might be due to some optimizations. I thought the load would be higher, but we should not be overly surprised; hypervisors should do a decent job at managing their guests’ CPU usage. No matter what, this generates a load that the host distributed across all physical cores, so it works for what we’re trying to do.

While Prime95 ran in those four VMs, I tested each of the originals’ CPU and disk performance separately:

Pre-Patch CPU Benchmark during CPU Load

| Test | Host | Windows 10 Guest | 2012 R2 Guest | 2016 Guest |
|---|---|---|---|---|
| CPU Mark | not tested | 1553 | 1339 | 1261 |
| Integer Math | not tested | 1549 | 1356 | 1335 |
| Prime Numbers | not tested | 7 | 5 | 5 |
| Compression | not tested | 1570 | 1345 | 1308 |
| Physics | not tested | 108 | 96 | 77 |
| CPU Single Threaded | not tested | 753 | 646 | 564 |
| Floating Point Math | not tested | 1385 | 1211 | 1109 |
| Extended Instructions (SSE) | not tested | 52 | 45 | 44 |
| Encryption | not tested | 232 | 205 | 201 |
| Sorting | not tested | 985 | 841 | 817 |

Pre-Patch Disk Benchmark during CPU Load

| Test | Host (internal) | Windows 10 Guest (Remote SS) | 2012 R2 Guest (internal) | 2016 Guest (Remote SS) |
|---|---|---|---|---|
| Disk Mark | 416 | 349 | 385 | 481 |
| Disk Sequential Read | 55 | 90 | 51 | 111 |
| Disk Random Seek +RW | 6 | 3 | 6 | 4 |
| Disk Sequential Write | 53 | 2 | 48 | 16 |

Testing Phase 3: Pre-Patch Under Disk Load

Phase 3 is like phase 2 except that we’re simulating heavy disk I/O load. It’s a little different though because the guests are spread out between local and remote storage.

I used DiskSpd.exe with the following command line:

Diskspd.exe -b256K -d600 -o8 -t4 -a0,1 #0

That’s the default from the documentation with the exception of -d600 and #0. The -d600 sets the duration in seconds. I used a ten-minute (600-second) duration so that I would have enough time to start the stress tool on all of the guests and run the CPU and disk benchmarks. The #0 targets physical disk 0, which all of the VMs have.
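For readers unfamiliar with DiskSpd's switches, the sketch below spells out what each part of that command line means and reassembles the command from its parts. The flag descriptions reflect my reading of the DiskSpd documentation; the Python names are hypothetical and the script does not run DiskSpd itself.

```python
# Each entry pairs a DiskSpd argument with a short description.
diskspd_args = [
    ("-b256K", "block size of 256 KiB per I/O"),
    ("-d600", "test duration in seconds (600 s = 10 minutes)"),
    ("-o8", "8 outstanding I/O requests per thread"),
    ("-t4", "4 threads per target"),
    ("-a0,1", "affinitize the threads to logical CPUs 0 and 1"),
    ("#0", "target: physical drive number 0"),
]


def build_command(executable, args):
    """Join the executable name and its arguments into one command line."""
    return " ".join([executable] + [flag for flag, _description in args])


command = build_command("Diskspd.exe", diskspd_args)
print(command)
for flag, description in diskspd_args:
    print(f"  {flag:8} {description}")
```

Keeping the arguments in a list like this makes it easy to vary one parameter at a time, such as the duration, if you repeat the test in your own environment.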

Pre-Patch CPU Benchmark during I/O Load

| Test | Host | Windows 10 Guest | 2012 R2 Guest | 2016 Guest |
|---|---|---|---|---|
| CPU Mark | not tested | 3696 | 3814 | 3747 |
| Integer Math | not tested | 3934 | 3927 | 3856 |
| Prime Numbers | not tested | 13 | 18 | 16 |
| Compression | not tested | 3715 | 3759 | 3838 |
| Physics | not tested | 259 | 249 | 267 |
| CPU Single Threaded | not tested | 1760 | 1852 | 1775 |
| Floating Point Math | not tested | 3415 | 3476 | 3375 |
| Extended Instructions (SSE) | not tested | 129 | 136 | 131 |
| Encryption | not tested | 565 | 577 | 544 |
| Sorting | not tested | 2399 | 2430 | 2375 |

Pre-Patch Disk Benchmark during I/O Load

| Test | Host (internal) | Windows 10 Guest (Remote SS) | 2012 R2 Guest (internal) | 2016 Guest (Remote SS) |
|---|---|---|---|---|
| Disk Mark | not tested | 76 | 385 | 94 |
| Disk Sequential Read | not tested | 12 | 57 | 17 |
| Disk Random Seek +RW | not tested | 2 | 6 | 3 |
| Disk Sequential Write | not tested | 5 | 43 | 5 |

Testing Phase 4: Post-Patch Raw Results

Now we’re simply going to repeat the earlier processes on our newly-updated systems. Phase 4 aligns with Phase 1: a straight benchmark of the host and representative guests.

Post-Patch CPU Benchmark

| Test | Host | Windows 10 Guest | 2012 R2 Guest | 2016 Guest |
|---|---|---|---|---|
| CPU Mark | 7263 | 3989 | 3841 | 3705 |
| Integer Math | 8191 | 4052 | 3966 | 3827 |
| Prime Numbers | 34 | 21 | 17 | 18 |
| Compression | 8015 | 3890 | 3919 | 3336 |
| Physics | 489 | 303 | 301 | 273 |
| CPU Single Threaded | 1860 | 1712 | 1756 | 1747 |
| Floating Point Math | 7205 | 3464 | 3528 | 3281 |
| Extended Instructions (SSE) | 249 | 135 | 105 | 121 |
| Encryption | 1188 | 612 | 598 | 560 |
| Sorting | 5021 | 2469 | 2210 | 2448 |

Post-Patch Disk Benchmark

| Test | Host (internal) | Windows 10 Guest (Remote SS) | 2012 R2 Guest (internal) | 2016 Guest (Remote SS) |
|---|---|---|---|---|
| Disk Mark | 348 | 505 | 269 | 373 |
| Disk Sequential Read | 46 | 124 | 33 | 88 |
| Disk Random Seek +RW | 5 | 4 | 4 | 3 |
| Disk Sequential Write | 44 | 11 | 36 | 10 |

Phase 4 Analysis

We don’t see that much CPU performance slowdown, but we do see some reduction in storage speeds.

Testing Phase 5: Post-Patch Under CPU Load

This test series compares against phase 2. As before, several VMs ran Prime95 while I benchmarked the representative guests.

Post-Patch CPU Benchmark during CPU Load

| Test | Host | Windows 10 Guest | 2012 R2 Guest | 2016 Guest |
|---|---|---|---|---|
| CPU Mark | not tested | 1241 | 1267 | 1518 |
| Integer Math | not tested | 1203 | 1288 | 1525 |
| Prime Numbers | not tested | 5 | 5 | 7 |
| Compression | not tested | 1280 | 1253 | 1480 |
| Physics | not tested | 91 | 94 | 116 |
| CPU Single Threaded | not tested | 603 | 620 | 700 |
| Floating Point Math | not tested | 1087 | 1118 | 1325 |
| Extended Instructions (SSE) | not tested | 43 | 44 | 45 |
| Encryption | not tested | 192 | 192 | 239 |
| Sorting | not tested | 811 | 802 | 941 |

Post-Patch Disk Benchmark during CPU Load

| Test | Host (internal) | Windows 10 Guest (Remote SS) | 2012 R2 Guest (internal) | 2016 Guest (Remote SS) |
|---|---|---|---|---|
| Disk Mark | 416 | 349 | 325 | 366 |
| Disk Sequential Read | 55 | 90 | 41 | 85 |
| Disk Random Seek +RW | 6 | 3 | 6 | 4 |
| Disk Sequential Write | 53 | 2 | 42 | 12 |

Testing Phase 6: Post-Patch Under Disk Load

This is the post-patch equivalent of phase 3. We’ll test performance while simulating high disk I/O.

Again, I used DiskSpd.exe on several VMs with the following command line:

Diskspd.exe -b256K -d600 -o8 -t4 -a0,1 #0

Post-Patch CPU Benchmark during I/O Load

| Test | Host | Windows 10 Guest | 2012 R2 Guest | 2016 Guest |
|---|---|---|---|---|
| CPU Mark | not tested | 3152 | 3104 | 2920 |
| Integer Math | not tested | 3192 | 3268 | 3106 |
| Prime Numbers | not tested | 14 | 14 | 13 |
| Compression | not tested | 3163 | 2654 | 2361 |
| Physics | not tested | 215 | 219 | 211 |
| CPU Single Threaded | not tested | 1667 | 1774 | 1614 |
| Floating Point Math | not tested | 2678 | 2885 | 2672 |
| Extended Instructions (SSE) | not tested | 105 | 95 | 100 |
| Encryption | not tested | 476 | 452 | 449 |
| Sorting | not tested | 2015 | 2086 | 1999 |

Post-Patch Disk Benchmark during I/O Load

| Test | Host (internal) | Windows 10 Guest (Remote SS) | 2012 R2 Guest (internal) | 2016 Guest (Remote SS) |
|---|---|---|---|---|
| Disk Mark | not tested | 40 | 360 | 54 |
| Disk Sequential Read | not tested | 5 | 50 | 7 |
| Disk Random Seek +RW | not tested | 1 | 5 | 3 |
| Disk Sequential Write | not tested | 3 | 44 | 4 |

Consolidated Charts

These charts reorganize the above data to align each result with its system rather than its condition.

Host CPU

| Test | Pre | Post |
|---|---|---|
| CPU Mark | 7404 | 7263 |
| Integer Math | 8226 | 8191 |
| Prime Numbers | 33 | 34 |
| Compression | 8208 | 8015 |
| Physics | 492 | 489 |
| CPU Single Threaded | 1905 | 1860 |
| Floating Point Math | 7371 | 7205 |
| Extended Instructions (SSE) | 283 | 249 |
| Encryption | 1260 | 1188 |
| Sorting | 5165 | 5021 |

Average Host CPU Performance Reduction is roughly 2%
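The article does not spell out how the average reductions were derived, so the following is only one plausible way to reproduce this kind of figure from the host CPU numbers: compute the percent drop for each test and then look at either the headline CPU Mark or the mean across all tests. The function names are hypothetical.

```python
# (pre-patch, post-patch) host CPU scores copied from the table above.
host_cpu = {
    "CPU Mark": (7404, 7263),
    "Integer Math": (8226, 8191),
    "Prime Numbers": (33, 34),
    "Compression": (8208, 8015),
    "Physics": (492, 489),
    "CPU Single Threaded": (1905, 1860),
    "Floating Point Math": (7371, 7205),
    "Extended Instructions (SSE)": (283, 249),
    "Encryption": (1260, 1188),
    "Sorting": (5165, 5021),
}


def percent_reduction(pre, post):
    """Percent drop from pre to post; negative means the score improved."""
    return (pre - post) / pre * 100


def average_reduction(results):
    """Unweighted mean of the per-test percent reductions."""
    reductions = [percent_reduction(pre, post) for pre, post in results.values()]
    return sum(reductions) / len(reductions)


for test, (pre, post) in host_cpu.items():
    print(f"{test}: {percent_reduction(pre, post):.1f}%")
print(f"Mean across tests: {average_reduction(host_cpu):.1f}%")
```

With these inputs, the CPU Mark alone drops by about 1.9 percent, while the unweighted mean across all ten tests comes out somewhat higher because the SSE and encryption tests fell more than the rest. The same functions can be pointed at any of the other pre/post columns.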

Host Disk (Internal)

| Test | Pre | Post |
|---|---|---|
| Disk Mark | 416 | 348 |
| Disk Sequential Read | 55 | 46 |
| Disk Random Seek +RW | 6 | 5 |
| Disk Sequential Write | 53 | 44 |

Average Host Disk Performance Reduction is roughly 17.4%

Windows 10 Guest CPU

| Test | Pre | Pre+CPU | Pre+I/O | Post | Post+CPU | Post+I/O |
|---|---|---|---|---|---|---|
| CPU Mark | 4198 | 1553 | 3696 | 3989 | 1241 | 3152 |
| Integer Math | 4209 | 1549 | 3934 | 4052 | 1203 | 3192 |
| Prime Numbers | 24 | 7 | 13 | 21 | 5 | 14 |
| Compression | 4084 | 1570 | 3715 | 3890 | 1280 | 3163 |
| Physics | 303 | 108 | 259 | 303 | 91 | 215 |
| CPU Single Threaded | 1882 | 753 | 1760 | 1712 | 603 | 1667 |
| Floating Point Math | 3738 | 1385 | 3415 | 3464 | 1087 | 2678 |
| Extended Instructions (SSE) | 144 | 52 | 129 | 135 | 43 | 105 |
| Encryption | 632 | 232 | 565 | 612 | 192 | 476 |
| Sorting | 2590 | 985 | 2399 | 2469 | 811 | 2015 |

Average Guest CPU performance reduction is roughly 5%

Windows 10 Guest Disk (Remote Storage Spaces)

| Test | Pre | Pre+CPU | Pre+I/O | Post | Post+CPU | Post+I/O |
|---|---|---|---|---|---|---|
| Disk Mark | 505 | 349 | 76 | 505 | 349 | 40 |
| Disk Sequential Read | 124 | 90 | 12 | 124 | 90 | 5 |
| Disk Random Seek +RW | 4 | 3 | 2 | 4 | 3 | 1 |
| Disk Sequential Write | 11 | 2 | 5 | 11 | 2 | 3 |

Average I/O performance reduction is roughly 47.4%

Windows 2012 R2 Guest CPU

| Test | Pre | Pre+CPU | Pre+I/O | Post | Post+CPU | Post+I/O |
|---|---|---|---|---|---|---|
| CPU Mark | 4055 | 1339 | 3814 | 3841 | 1267 | 3104 |
| Integer Math | 4077 | 1356 | 3927 | 3966 | 1288 | 3268 |
| Prime Numbers | 21 | 5 | 18 | 17 | 5 | 14 |
| Compression | 4028 | 1345 | 3759 | 3919 | 1253 | 2654 |
| Physics | 295 | 96 | 249 | 301 | 94 | 219 |
| CPU Single Threaded | 1847 | 646 | 1852 | 1756 | 620 | 1774 |
| Floating Point Math | 3646 | 1211 | 3476 | 3528 | 1118 | 2885 |
| Extended Instructions (SSE) | 138 | 45 | 136 | 105 | 44 | 95 |
| Encryption | 592 | 205 | 577 | 598 | 192 | 452 |
| Sorting | 2541 | 841 | 2430 | 2210 | 802 | 2086 |

Average CPU performance reduction is roughly 5.3%

Windows 2012 R2 Guest Disk (Internal)

| Test | Pre | Pre+CPU | Pre+I/O | Post | Post+CPU | Post+I/O |
|---|---|---|---|---|---|---|
| Disk Mark | 355 | 385 | 385 | 269 | 325 | 360 |
| Disk Sequential Read | 44 | 51 | 57 | 33 | 41 | 50 |
| Disk Random Seek +RW | 6 | 6 | 6 | 4 | 6 | 5 |
| Disk Sequential Write | 47 | 48 | 43 | 36 | 42 | 44 |

Average I/O performance reduction is roughly 6.5%

Windows 2016 Guest CPU

| Test | Pre | Pre+CPU | Pre+I/O | Post | Post+CPU | Post+I/O |
|---|---|---|---|---|---|---|
| CPU Mark | 4197 | 1261 | 3747 | 3705 | 1518 | 2920 |
| Integer Math | 4212 | 1335 | 3856 | 3827 | 1525 | 3106 |
| Prime Numbers | 24 | 5 | 16 | 18 | 7 | 13 |
| Compression | 4103 | 1308 | 3838 | 3336 | 1480 | 2361 |
| Physics | 315 | 77 | 267 | 273 | 116 | 211 |
| CPU Single Threaded | 1886 | 564 | 1775 | 1747 | 700 | 1614 |
| Floating Point Math | 3522 | 1109 | 3375 | 3281 | 1325 | 2672 |
| Extended Instructions (SSE) | 131 | 44 | 131 | 121 | 45 | 100 |
| Encryption | 631 | 201 | 544 | 560 | 239 | 449 |
| Sorting | 2608 | 817 | 2375 | 2448 | 941 | 1999 |

Average CPU performance reduction is roughly 11.8%

Windows 2016 Guest Disk (Remote Storage Spaces)

| Test | Pre | Pre+CPU | Pre+I/O | Post | Post+CPU | Post+I/O |
|---|---|---|---|---|---|---|
| Disk Mark | 629 | 481 | 94 | 373 | 366 | 54 |
| Disk Sequential Read | 153 | 111 | 17 | 88 | 85 | 7 |
| Disk Random Seek +RW | 4 | 4 | 3 | 3 | 4 | 3 |
| Disk Sequential Write | 15 | 16 | 5 | 10 | 12 | 4 |

Average I/O performance reduction is roughly 42.6%

Data Analysis

The analysis has a fairly simple conclusion: some CPU functions degraded noticeably while others did not, and the impact on I/O varied from very little to significant. The Windows 2016 guest does have a wide disparity in its disk statistics. Based on the other outcomes, I find it more likely that an environmental difference or an error on my part when running the tests caused that disparity than that the raw speed really degraded by 50%.

Conclusion

What we learn from these charts is that the full range of updates causes CPU performance degradation for some functions but leaves others unaffected. Normal storage I/O does not suffer as much. I imagine that the higher impacts that you see in the Storage Spaces Direct benchmarks shared by Ben Thomas are because of their extremely high speed. Since most of us don’t have storage subsystems that can challenge a normal CPU, we aren’t affected as strongly.

Overall, I think that the results show what could be reasonably inferred without benchmarks: high utilization systems will see a greater impact. As far as the exact impact on your systems, that appears to largely depend on what CPU functions they require most. If your pre-patch conditions were not demanding high performance, I suspect that you’ll suffer very little. If you have higher demand workloads, I’d recommend that you try a test environment if possible. If not, see if your software vendors have any knowledge or strategies for keeping their applications running acceptably.

Tell Me Your Experiences

I’ve given a breakdown of the benchmarking from my set-up but I’m curious to know if others have experienced a similar performance impact from the patches. Furthermore, if you still have any lingering doubts about the whole issue I’m ready to answer any questions you have about Meltdown, Spectre or anything else related to these security vulnerabilities and their respective patches. Contact me through the comments section below!


39 thoughts on "The Actual Performance Impact of Spectre/Meltdown Hyper-V Updates"

Good write up but no mention of x86 (32bit meltdown) update not being available.

We have 25 windows 7 PC’s on 32bit windows 7, and even though meldown KB has been installed there is no meltdown protection.

Another useful thing to mention is a free test tool (much easier than the microsoft way of testing for protection) is available from grc.com called InSpectre and works really well.

What I have not understood well so far is the relationship we have with virtual CPUs. As explained in this article

https://www.altaro.com/hyper-v/hyper-v-virtual-cpus-explained/,

and how I have understood it, the vCPU send a thread to the Thread Scheduler and the thread gets then passed over to be executed on one of the physical CPUs. Should it therefore not enough that only the host, on which the hypervisor is running, is getting patched? Since from my understanding it is only the ‘physical’ thread that could make use of the branch prediction. Are there some further information available that describes in more detail how instructions are passed from a vCPU to a pCPUs to better understand this.

Cheers

Portions of the fixes in question only exist in the operating system patches. When you pass up a thread from a guest to the hypervisor, it bypasses a lot of the controls in the management OS. Microcode (firmware) fixes always apply, of course. This isn’t a blanket statement because the hypervisor portion also gets some updates, but you should always expect the hypervisor to minimize its involvement in performance-sensitive operations.