
Q: How many networks should I employ for my clustered Hyper-V Hosts?

A: At least two, architected for redundancy, not services.

This answer serves as a quick counter to oft-repeated cluster advice from the 2008/2008 R2 era. Many things have changed since then and architecture needs to keep up. It assumes that you already know how to configure networks for failover clustering.

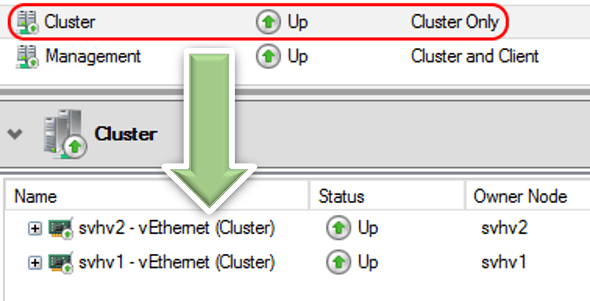

In this context, “networks” means “IP networks”. Microsoft Failover Clustering defines and segregates networks by their subnets. Adapters whose IP addresses fall in the same subnet belong to the same cluster network.
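To make the subnet grouping concrete, here is a minimal Python sketch using only the standard `ipaddress` module. It is not how the cluster service itself is implemented; the node names, adapter names, and addresses are made up for illustration. It only shows the idea that adapters sharing a subnet end up in one cluster network.

```python
import ipaddress
from collections import defaultdict


def group_by_subnet(adapters):
    """Group (node, adapter_name, 'ip/prefix') tuples by their IP subnet."""
    networks = defaultdict(list)
    for node, name, cidr in adapters:
        # ip_interface keeps the host address; .network yields the subnet
        subnet = ipaddress.ip_interface(cidr).network
        networks[subnet].append((node, name))
    return dict(networks)


# Hypothetical two-node cluster with two adapters per node
adapters = [
    ("node1", "Management", "192.168.10.11/24"),
    ("node1", "Cluster", "192.168.20.11/24"),
    ("node2", "Management", "192.168.10.12/24"),
    ("node2", "Cluster", "192.168.20.12/24"),
]

cluster_networks = group_by_subnet(adapters)
for subnet in sorted(cluster_networks):
    print(subnet, cluster_networks[subnet])
```

Two subnets in, two cluster networks out. If both adapters on a node sat in the same subnet, this sketch would report only one network, which is the situation the two-network minimum is meant to avoid.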

Why the Minimum of Two?

Using two or more networks grants multiple benefits:

- The cluster automatically bypasses some problems in IP networks, preventing any one problem from bringing the entire cluster down:

  - External: a logical network failure that breaks IP communication

  - Internal: a Live Migration that chokes out heartbeat information, causing nodes to exit the cluster

- An administrator can manually exclude a network to bypass problems

- If hosted by a team, the networking stack can optimize traffic more easily when given multiple IP subnets

- If necessary, traffic types can be prioritized

Your two networks must include one “management” network (one that allows both cluster and client connections). Every network beyond that first one should either allow cluster communications only or prevent all cluster communications (e.g., iSCSI). A Hyper-V cluster does not need more than one management network.
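The role rules above can be sketched as a small plan checker in Python. Treat it as a rough aid under assumptions, not an official validation: `Role`, `check_plan`, and the network names are hypothetical, and the numeric values are chosen to mirror what I understand the cluster network `Role` property to report (0 for no cluster traffic, 1 for cluster only, 3 for cluster and client). The check for two cluster-capable networks is my reading of the redundancy goal.

```python
from enum import IntEnum


class Role(IntEnum):
    NONE = 0                # e.g. iSCSI: no cluster communication
    CLUSTER_ONLY = 1        # cluster communication only
    CLUSTER_AND_CLIENT = 3  # the "management" network


def check_plan(plan):
    """Return a list of problems for a {network_name: Role} plan (empty = OK)."""
    problems = []
    if len(plan) < 2:
        problems.append("fewer than two networks")
    management = [n for n, r in plan.items() if r == Role.CLUSTER_AND_CLIENT]
    if not management:
        problems.append("no management network")
    elif len(management) > 1:
        problems.append("more than one management network (not needed)")
    # Assumption: redundancy requires two networks that carry cluster traffic
    cluster_capable = [n for n, r in plan.items() if r != Role.NONE]
    if len(cluster_capable) < 2:
        problems.append("fewer than two networks carry cluster traffic")
    return problems


good = {
    "Management": Role.CLUSTER_AND_CLIENT,
    "Cluster": Role.CLUSTER_ONLY,
    "iSCSI": Role.NONE,
}
print(check_plan(good))
```

A plan with only a management network and an iSCSI network would be flagged, because the iSCSI network carries no cluster traffic and so provides no redundancy for it.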

How Many Total?

You will need to make architectural decisions to arrive at the exact number of networks appropriate for your system. Tips:

- Do not use services as a deciding point. For instance, do not build a dedicated Live Migration or CSV network. Let the system balance traffic.

- In rare instances, network congestion may necessitate segregation, for example heavy Live Migration traffic over only a few gigabit adapters. In that case, create a dedicated Live Migration network and employ Hyper-V QoS to limit its bandwidth usage.

- Do take physical pathways into account. If you have four physical network adapters in a team that hosts your cluster networks, then create four cluster networks.

- Avoid complicated network builds. I see people trying to make sense of things like 6 teams and two Hyper-V switches on 8x gigabit adapters plus 4x 10-gigabit adapters. You will create a micro-management nightmare with no benefit. If you have any 10-gigabit, just stop using the gigabit. Preferably, converge onto one team and one Hyper-V switch. Let the system balance traffic.
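As a rough illustration, the tips above can be folded into one small Python function. This is a sketch of a heuristic, not a rule from Microsoft: the function name and the "speed in Gbps" input are assumptions made for the example. It ignores gigabit adapters whenever any 10-gigabit adapter exists, counts one cluster network per remaining physical adapter, and never goes below the minimum of two.

```python
def suggest_cluster_network_count(adapter_speeds_gbps):
    """Suggest a cluster network count from physical adapter speeds in Gbps."""
    if not adapter_speeds_gbps:
        raise ValueError("at least one physical adapter is required")
    # If any 10-gigabit (or faster) adapters exist, stop using the gigabit ones
    fast = [s for s in adapter_speeds_gbps if s >= 10]
    team = fast if fast else list(adapter_speeds_gbps)
    # One network per physical pathway in the team, but never fewer than two
    return max(2, len(team))


print(suggest_cluster_network_count([1, 1, 1, 1]))
print(suggest_cluster_network_count([1] * 8 + [10] * 4))
print(suggest_cluster_network_count([10, 10]))
```

The second call models the 8x gigabit plus 4x 10-gigabit build mentioned above: the gigabit adapters drop out and the suggestion follows the four 10-gigabit adapters.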


12 thoughts on "Hyper-V Quick Tip: How Many Cluster Networks Should I Use?"

Great article, thanks for the tip!

Eric

I really struggle to understand what is probably a very basic part of cluster networking: iSCSI using the same NICs. Say I have, for example, 2 x 10GbE NICs per server node. With just that, how can I use the Windows teaming feature for generic network path redundancy (CSVs, witness, cluster communication, etc.) but NOT use it for the iSCSI traffic, since that should use MPIO?

I could stop creating team adapters outside Hyper-V and start using Switch Embedded Teaming (SET) instead. I should then theoretically be able to use non-teamed NICs outside Hyper-V to achieve MPIO for iSCSI traffic.

Am I on track with my assumption or totally off? 🙂

Cheers

Juri

With 2x10GbE, you would make a team, put a Hyper-V virtual switch on it, and use vNICs for iSCSI. Or you could use SET; that would be fine. You would then configure MPIO across those vNICs. Yes, it will be less efficient than using dedicated pNICs for iSCSI, but with 10GbE that probably won’t ever matter.

Wouldn’t I somehow need to prioritize the iSCSI traffic, since the team’s other traffic could add latency to the storage traffic?

Possibly. Don’t invest in fixing a problem until you can prove that you have it.

We have a four-node 2008 R2 Hyper-V cluster and we are migrating over to a new core switch. When we move the cluster network over, the quorum breaks and the cluster fails, so we have to move the four cluster connections back to the old core switch. We checked the VLANs and we are pretty sure we had configured the cluster VLAN and routing the same for both the old core switch and the new core switch. I think you mentioned that the 2008 R2 cluster chooses which network will be the cluster network based on the IP addressing assigned to the adapters of the nodes. I am still new at this, so I don’t understand why quorum is never reached when we move the connections over to the new core switch.

2008 R2 did not have dynamic quorum, so in a four-node cluster, if a node cannot reach a minimum of two other voting members (two nodes, or one node plus the witness), it will exit the cluster. When the cluster network drops, reaching the witness alone does not satisfy the minimum for quorum. You can add an alternative network and enable it for cluster traffic, or temporarily set your cluster mode to witness-only.
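The vote arithmetic behind that can be sketched in a few lines of Python. This is a simplified model of static majority quorum, assuming four node votes plus one witness vote; the function name is made up, and real cluster behavior involves more than counting votes.

```python
def has_quorum(total_votes, reachable_votes):
    """Static majority: more than half of all votes must be reachable."""
    return reachable_votes > total_votes // 2


nodes = 4
witness = 1
total_votes = nodes + witness  # 5 votes, so a majority is 3

# Cluster network down: a node sees only itself plus the witness
isolated = has_quorum(total_votes, reachable_votes=1 + 1)

# Cluster network up: a node sees itself, one other node, and the witness
connected = has_quorum(total_votes, reachable_votes=1 + 1 + 1)

print(isolated, connected)
```

With the cluster network down, each node counts two votes out of five and falls short of the majority, which matches the "two other voting members" minimum described above.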