
I encountered a question regarding some of the environment deployment options available in Hyper-V. At the time, I just gave a quick, off-the-cuff response. On further reflection, I feel like this discussion merits more in-depth treatment. I am going to expand it out to include all of the possibilities: physical machines, virtual machines with Windows Server, Hyper-V containers, and Windows containers. I will also take some time to talk through the Nano Server product. We will talk about strategy in this article, not how-to.

Do I Need to Commit to a Particular Strategy?

Yes… and no. For any given service or system, you will need to pick one and stick with it. Physical and virtual machines don’t differ much from each other, but each of the other choices involves radical differences. Switching to a completely different deployment paradigm would likely involve a painful migration. However, the same Hyper-V host(s) can run all non-physical options simultaneously. Choose the best option for the intended service, not for the host. I can see a case for segregating deployment types across separate Hyper-V and Windows Server hosts. For instance, the Docker engine must run on any host that uses containers. It’s small and can be stopped when not in use, but maybe you don’t want it everywhere. If you want some systems to run only Windows containers, then you don’t need Hyper-V on them at all. However, if you don’t have enough hosts to make separation viable, don’t worry about it.

Check Your Support

We all get a bit starry-eyed when exposed to new, flashy features. However, we all also have at least one software vendor that remains steadfastly locked in a previous decade. I have read a number of articles that talk about moving clunky old apps into shiny new containers (like this one). Just because a thing can be done — even if it works — does not mean that the software vendor will stand behind it. In my experience, most of those vendors hanging on to the ’90s also look for any minor excuse to avoid helping their customers. Before you do anything, make that phone call. If possible, get a support statement in writing.

You have a less intense, but still important, support consideration for internal applications. Containers are a really hot technology right now, but don’t assume that even a majority of developers have knowledge of, experience with, or even an interest in containers. So, if you hire one hotshot dev who kicks out amazing container-based apps and then moves on, you might find yourself in a precarious position. I expect this condition to rectify itself over time, but no one can guess at how much time. Those vendors that I implicated in the previous paragraph depend on a large, steady supply of developers willing to specialize in technologies that haven’t matured in a decade, and supply does not seem to be running low.

Understand the Meaning of Your Deployment Options

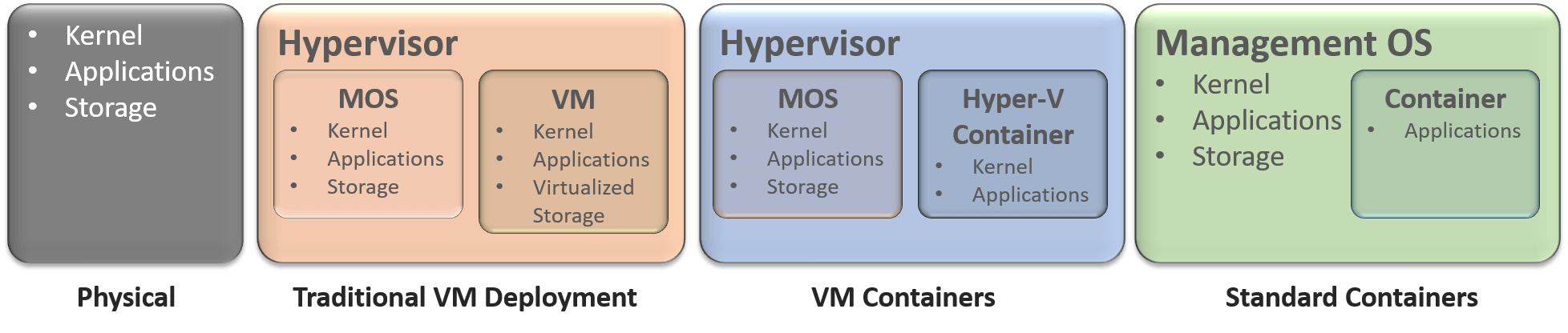

You don’t have to look far for diagrams comparing containers to more traditional deployments. I’ve noticed that very nearly all of them lack coverage of a single critical component. I’ll show you my diagram; can you spot the difference?

See it? Traditional virtual machines are the only ones that wall off storage for their segregated environments. Most storage activity from a container is transient in nature — it just goes away when the container halts. Anything permanent must go to a location outside the container. While trying to decide which of these options to use, remember to factor all of that in.
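To make the storage point concrete, here is a minimal Python sketch that only assembles a `docker run` command line with a host folder mapped into the container. The function name, the image name, and both folder paths are made up for illustration; `--volume` is the standard Docker option for mapping host storage into a container. Anything the app writes under the mapped folder lands on the host and outlives the container, while anything written elsewhere is discarded along with it.

```python
def docker_run_with_volume(image, host_dir, container_dir, name=None):
    """Build a `docker run` argument list that maps a host folder into a container."""
    args = ["docker", "run"]
    if name:
        args += ["--name", name]
    # host_dir:container_dir keeps the data on the host, outside the container
    args += ["--volume", f"{host_dir}:{container_dir}"]
    args.append(image)
    return args


# Hypothetical image and paths, used only to show the shape of the command
cmd = docker_run_with_volume(
    "example/orders-api:1.0",
    r"D:\ContainerData\orders",
    r"C:\app\data",
    name="orders",
)
print(" ".join(cmd))
```

The sketch builds the command without running it, so you can inspect what would be executed before pointing it at a real host.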

Physical Deployments

I personally will not consider any new physical deployments unless conditions absolutely demand it. I do understand that vendors often dictate that we do ridiculous things. I also understand that it’s a lot easier for a technical author to say, “Vote with your wallet,” than it is to convert critical line-of-business applications away from an industry leader that depends on old technology toward a fresh, unknown startup that doesn’t have the necessary pull to integrate with your industry’s core service providers. Trust me, I get it. All I can say on that: push as hard as you can. If your industry leader is big enough, there are probably user groups. Join them and try to make your collective voices loud enough to make a difference. Physical deployments are expensive, difficult to migrate, difficult to architect against failure, and dependent on components that cannot be trusted.

Beware myths in this category. You do not need physical domain controllers. Most SQL servers do not need physical operating system environments — the exceptions need hardware because guest clustering has not yet become a match for physical clustering. Even then, if you can embrace new technologies such as scale-out file servers to avoid shared virtual hard disks, you can overcome those restrictions.

Traditional Virtual Machine Deployments

Virtual machines abstract physical machines, giving you almost all of the same benefits of physical with all the advantages of digital abstraction. Some of the primary benefits of using virtual machines instead of one of the other non-physical methods:

- Familiarity: you’ve done this before. You, and probably your software vendors, have no fear.

- Full segregation: as long as you’re truly virtualizing (meaning, no pass-through silliness), your virtual machines can have fully segregated and protected environments. If you need to make them completely separate, then employ the Shielded VM feature. No other method can match that level of separation.

- Simple migration: Shared Nothing Live Migration, Live Migration, Quick Migration, and Storage Migration can only be used with real virtual machines.

- Checkpoints: Wow, checkpoints can save you from a lot of headaches when used properly. No such thing for containers. Note: a solid argument can be made that a properly used container has no use for checkpoints.

The wall of separation provided by virtual machines comes with baggage, though. They need the most memory, the most space, and usually the most licensing costs.

Standard Containers

Containers allow you to wall off compute components. Processes and memory live in their own little space away from everyone else. Disk I/O gets some special treatment, but it does not enjoy the true segregation of a virtual machine. However, kernel interactions (system calls, basically) get processed by the operating system that owns the container.

You have three tests when considering a container deployment:

- Support: I think we covered this well enough above. Revisit that section if you skipped it.

- Ability to work on today’s Windows Server version: since containers don’t have kernel segregation, they will use whatever kernel the hosting operating system uses. If you’ve got a vendor that just now certified their app on Windows Server 2008 R2 (or, argh, isn’t even there yet), then containers are clearly out. Your app provider needs to be willing to move along with kernel versions as quickly as you upgrade.

- A storage dependency compatible with container storage characteristics: the complete nature of the relationship between containers and storage cannot be summed up simply. If an app wasn’t designed with containers in mind, then you need to be clear on how it will behave in a container.

Containers are the thinnest level of abstraction for a process besides running it directly on host hardware. You basically only need the Containers feature enabled and the Docker software running. You don’t need a lot of spare space or memory to run containers. You can use containers to get around a lot of problems introduced by trying to run incompatible processes in the same operating system environment. As long as you can satisfy all of the requirements, they might be your best solution.

Really, I think that management struggles pose the second greatest challenge to container adoption after support. Unless you install even more third-party components, you control Docker from the command line. When you start following the container tutorials that have you start your containers interactively, you’ll learn that the process to get out of a container involves stopping it. So, you’ll also have to pick up some automation skills. For people accustomed to running virtual machines in a GUI installation of Windows Server, the transition will be jarring.
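If you want a feel for what that automation might look like, the following is a minimal sketch in Python, assuming the Docker CLI is installed and on the path. The helper names, the image name, and the container name are hypothetical; `docker run --detach`, `docker exec`, `docker stop`, and `docker rm` are standard Docker commands. Starting a container detached lets you work with it without sitting in an interactive session that ends the container when you leave.

```python
import subprocess


def start_detached(image, name):
    """Start a container in the background instead of an interactive session."""
    return ["docker", "run", "--detach", "--name", name, image]


def run_inside(name, *command):
    """Run a command in an already running container without attaching to it."""
    return ["docker", "exec", name, *command]


def stop_and_remove(name):
    """Stop the container, then delete it."""
    return [["docker", "stop", name], ["docker", "rm", name]]


def execute(args, dry_run=True):
    """Print the command; run it only when dry_run is False."""
    print(" ".join(args))
    if dry_run:
        return None
    return subprocess.run(args, check=True, capture_output=True, text=True)


# Hypothetical image and container names; dry_run=True only prints the commands
execute(start_detached("example/web-frontend:1.0", "web01"))
execute(run_inside("web01", "cmd", "/c", "ver"))
for step in stop_and_remove("web01"):
    execute(step)
```

Keeping the command builders separate from `execute` is a design choice for the sketch: it lets you review or log every command before anything touches a host.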

Hyper-V Containers

Hyper-V containers represent a middle ground between traditional virtual machines and standard containers. They still do not get their own storage. However, they do have their own kernel apart from the physical host’s kernel. Apply the same tests as for regular containers, minus the “today’s version” bit.

Hyper-V containers give two major benefits over standard containers:

- No kernel interdependence: Run just about any “guest” operating system that you like. You don’t need to worry (as much) about host upgrades.

- Isolation of system calls: I can’t really qualify or quantify the value of this particular point. Security concerns have caused all operating systems to address process isolation for many years now. But, an additional layer of abstraction won’t hurt when security matters.

The biggest “problem” with Hyper-V Containers is the need for Hyper-V. That increases the complexity of the host deployment and (as a much smaller concern) increases the host’s load. Hyper-V containers still beat out traditional virtual machines in the low resource usage department but require more than standard containers. Each Hyper-V container runs a separate operating system, similar to a traditional virtual machine. They retain the not-really-separate storage profile of standard containers, though.
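On the command line, the choice between the two container types comes down to a single Docker option on a Windows host: `--isolation=process` for a standard container or `--isolation=hyperv` for a Hyper-V container. Here is a small Python sketch, assuming a hypothetical image name and function name, that builds the command rather than running it.

```python
# The two isolation modes discussed in this article
ISOLATION_MODES = ("process", "hyperv")


def docker_run_isolated(image, isolation="process"):
    """Return a `docker run` argument list for the requested isolation mode."""
    if isolation not in ISOLATION_MODES:
        raise ValueError(f"isolation must be one of {ISOLATION_MODES}")
    return ["docker", "run", f"--isolation={isolation}", image]


# Hypothetical image name, used only for illustration
print(" ".join(docker_run_isolated("example/legacy-app:1.0", "hyperv")))
```

The same image can be started either way, which is why the decision belongs to the service and not to the host.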

What About Nano Server?

If you’re not familiar with Nano, it’s essentially a Windows build with the supporting bits stripped down to the absolute minimum. As many have noticed (with varying levels of enthusiasm), Nano has been losing capabilities since its inception. As it stands today, you cannot run any of Microsoft’s infrastructure roles within Nano. With all of the above options and the limited applicability of Nano, it might seem that Nano has lost all use.

I would suggest that Nano still has a very viable place. Not every environment will have such a place, of course. If you can’t find a use for Nano, don’t force it. To understand where it might be suited, let’s start with a simplified bit of backstory on Nano. Why was infrastructure support stripped from it? Two reasons:

- Common usage 1: Administrators were not implementing Nano for these roles in meaningful quantities. Microsoft has a history of moving away from features that no one uses. Seems fair.

- Common usage 2: Developers were implementing Nano in droves. Microsoft turned their attention to them. Also seems fair.

- Practicality: Of course, some administrators did use Nano for infrastructure features. And they wanted more. And more. And in trying to satisfy those requests, Nano started growing to the point that once it checked everyone’s boxes, it was essentially Windows Server Core with a different name.

So, where can you use Nano effectively? Look at Microsoft’s current intended usage: applications running on .NET Core in a container. One immediate benefit: churning out Nano containers requires much less effort than deploying Nano to a physical or virtual machine. Nano containers are ultra-tiny, giving you unparalleled density for compute-only Windows-based applications (e.g., web front-ends and business logic processing systems in n-tier applications).

How Do I Decide?

I would like to give you a simple flowchart, but I don’t think that it would properly address all considerations. I think that it would also create a false sense of prioritization. Other than “not physical”, I don’t believe that any reasonable default answer exists. A lot of people don’t like to hear it, but I also think that “familiarity” and “comfort” carry a great deal of weight. I can’t possibly have even a rudimentary grasp of all possible decision points when it comes to the sort of applications that might be concerning you.

I personally place “supportability” at the highest place on any decision tree. I never do anything that might get me excluded from support. Even if I have the skills to fix any problems that arise, I will eventually move on from this position. Becoming irreplaceable sounds good in job security theory, but never makes for good practice.

You have other things to think about as well. What about backup? Hopefully, a container only needs its definition files to be quickly rebuilt, but people don’t always use things the way that the engineers intended. On that basis alone, virtual machines will continue to exist for a very long time.

Need More Help with the Decision?

It can be daunting to make this determination with something as new as containers in the mix. Fear not! Altaro is hosting a VERY exciting webinar later this month with this topic specifically in mind. My good friend and Altaro Technical Evangelist Andy Syrewicze will be officiating an AMA-style webinar with Microsoft’s very own Ben Armstrong on the subject of containers, and the topic written about here will be expanded upon greatly.

Thanks for Reading!


7 thoughts on "How to Choose the Right Deployment Strategy"

Hi Eric,

I don’t know if this is verboten here or not, but if you’re interested in containers I recommend checking out GCS and Kubernetes – I’ve been reading a lot about this over the past few weeks, and am starting to wonder why anyone planning any new computation/dev/big data/machine learning projects wouldn’t be seriously considering GCS. Dramatic improvements in agility, adaptability, performance, efficiency, reliability and scalability while significantly reducing support, complexity and cost (not just over on-prem infrastructure, but compared to other cloud options). I’d be interested to read your thoughts.

The only ideas that I reject here are spam, scams, and posts that serve no purpose except to be mean.

I have not yet delved into Kubernetes much. I think there is a disconnect out there between these hot new technologies and the things that really happen in a typical business. I recently had to listen to one of my vendors go off on a five-minute rant against Microsoft because he doesn’t feel like they take session 0 “server” software seriously anymore. After that call, I had to go deal with a COM port problem. Archaic technologies are the norm. Things like you’re talking about are the exception.

Yeah, these new technologies are amazing. I would love to really get comfortable with them. The problem is that almost no one starts net-new code or net-new projects. Most people take whatever has worked for years and paint a flashy new interface over it to get through the sales/bidding process. My normal audience just won’t have the opportunity to see really hot tech in action for a long time… maybe not even until they’ve been replaced by the next new thing.

If you want to share some of your research, I’d like to look into it.