
The constant threat of ransomware and data breaches adds urgency to the vital practice of backup strategy. Permanent data loss or exposure can destroy a business. To properly plan your backup schedule, you need to know how much data you can afford to lose (your recovery point objective, RPO) and how much downtime you can withstand (your recovery time objective, RTO). For background, see the Altaro article “Defining the Recovery Time (RTO / RTA) and Recovery Point (RPO / RPA) for your Business” (also covered in the Backup Bible). Because every dataset is unique, even within the same company, you won’t find a “one size fits all” solution to data protection. This article covers several considerations and activities to optimize backup strategy, planning, and scheduling.

Best Practices for Backup Infrastructure Design

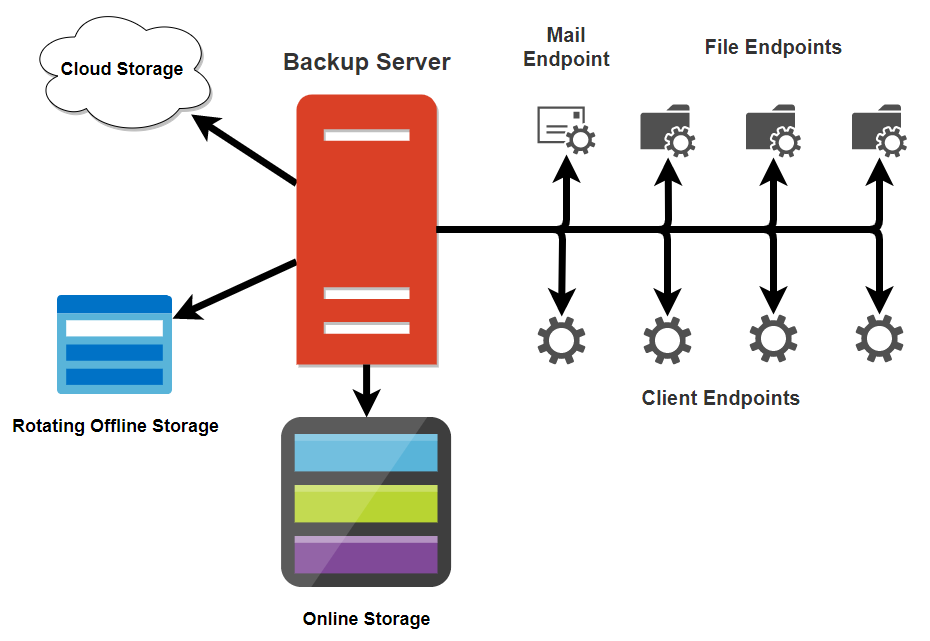

Your backup infrastructure exists inextricably among the rest of your system. Sometimes, that obscures its unique identity. Not understanding backup as a component can lead to architectural mistakes that cost time, money, and sometimes data. Visualize your backup infrastructure as a standalone design:

Think of the systems that you back up as “endpoints”, categorized the way your backup system treats them. A “File Endpoint” label could refer to a standard file server, or it could mean any application server that works with VSS and requires no other special handling. A “Client Endpoint” almost falls into the “File Endpoint” category, except that you can never guarantee its availability. Some systems, such as e-mail, place more particular requirements on your backup application. Set those apart. In all other cases, use the most generic category possible. Whether you create a diagram does not matter as much as your ability to understand your infrastructure from the perspective of backup.

Consider these best practices while designing your backup infrastructure:

- Automate every possible point. Over time, repetitive manual tasks tend to be skipped, forgotten, or performed improperly.

- Add high availability aspects. When possible, avoid single points of failure in your backup infrastructure. Your data becomes excessively vulnerable whenever the backup system fails, so keep its uptime as close to 100% as your situation allows.

- Allow for growth. If you already perform trend analysis on your data and systems, you can leverage that to predict backup needs into the future. If you don’t have enough data for calculated estimates, then make your best guess. You do not want to face a long capital request process, or worse, denial, if you run out of capacity.

- Remember the network. Most networks operate at a low level of utilization. If that applies to you, you won’t need to architect anything special to carry your backup data. Do not make assumptions; have an understanding of your total bandwidth requirements during backup windows. Consider your Internet and WAN link speeds when preparing for cloud or offsite backup.

- Mind your RPOs and RTOs. RPOs dictate how much storage capacity your backup infrastructure requires. RTOs determine how fast and how resilient it needs to be.

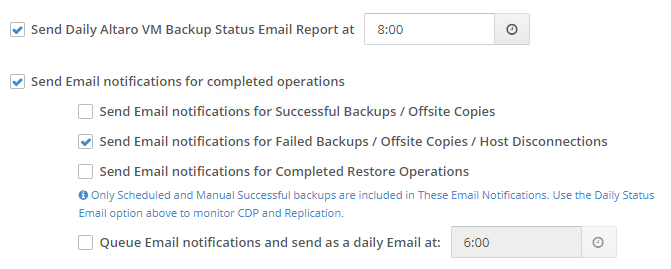

- Configure alerts. You need to know immediately if a backup process fails. Ideally, you can automate the backup system to continue trying when it encounters a problem. However, even when it successfully retries, it still must make you aware of a failure so that you can identify and correct any small problems before they turn into show-stoppers. Optimally, you want a daily report. That way, if you don’t get the expected message, you’ll know that your backup system has a failure. Minimally, configure to receive error notifications. The notification configuration in Altaro VM Backup looks like this:

- Employ backup replication. If one copy of your data is good, two are better. If the primary copy and its replica reside in geographically distant regions, the pair helps protect against natural disasters and other major threats to physical systems.

- Document everything. Your design will make so much sense when you build it that you’ll wonder how anyone would struggle to understand it. But, six months later, some of it will puzzle even you. Even if it can’t fool you, it can fool someone. Write it all down. Keep records of hardware and software. Note install directions. Keep copies of RTO and RPO decisions.

- Schedule refreshes. Your environment changes. Things get old. Prepare yourself by scheduling regular reviews. Timing depends on your organization’s flux, but review at least once per year.

- Design and schedule tests. If you have not restored your backup data, then you do not know if you can restore it. Even if you know the routine, that does not guarantee the validity of the data. Test restores help to uncover problems in media and misjudgments in RTOs and RPOs. Remember that a restore test goes beyond your backup application’s green checkmark. Start up the restored system(s) and verify that they can operate as desired. Automate these processes wherever possible.
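The alerting advice above (a daily report whose absence is itself a signal, plus a notice even when a retry succeeds) could be sketched roughly as follows. This is a minimal illustration, not a feature of any particular backup product; the function name `evaluate_backup`, the status strings, and the 26-hour grace period are all assumptions made for the example.

```python
from datetime import datetime, timedelta

# Hypothetical grace period: one day plus two hours of slack for a daily report.
MAX_REPORT_AGE = timedelta(hours=26)


def evaluate_backup(last_report, succeeded, retries, now, max_age=MAX_REPORT_AGE):
    """Classify the state of a backup job from its most recent report.

    last_report: datetime of the most recent report received
    succeeded:   True if the job eventually completed
    retries:     number of retries the job needed
    now:         current datetime (passed in so the check is easy to test)
    """
    # No recent report at all: treat silence as a failure of the backup system.
    if now - last_report > max_age:
        return "missing-report"
    if not succeeded:
        return "failed"
    # The job finished, but only after retrying: still worth a look.
    if retries > 0:
        return "recovered-after-retry"
    return "ok"


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 8, 0)
    print(evaluate_backup(datetime(2024, 1, 1, 7, 0), True, 0, now))
    print(evaluate_backup(datetime(2023, 12, 30, 7, 0), True, 0, now))
```

Passing `now` as a parameter rather than reading the clock inside the function keeps the check deterministic, which makes it easier to include in an automated test of the alerting path itself.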

Best Practices for Datacenter Design to Reduce Dependence on Backup

Remember that backup serves as your last line of defence. Backups don’t always complete and restores don’t always work. Even if nothing goes wrong during recovery, it still needs time to complete. While designing your datacenter, reduce the odds that you will ever need to restore from backup.

- Implement fault tolerance. “Fault tolerance” means the ability to continue operating without interruption in the event of a failure. The most common and affordable fault tolerant technologies protect power and storage systems. You can purchase or install local and network storage with multiple disks in redundant configurations. Some other systems have fault tolerant capabilities, such as network devices with redundant pathways.

- Implement high availability. Where you find fault tolerance too difficult or expensive to implement, high availability may serve as an acceptable alternative. Clusters perform this task most commonly. A few technologies will operate in an active/active configuration, which provides some measure of fault tolerance. Most clusters utilize an active/passive design. In the event of a failure, they can transfer operations to another member of the cluster with little, or sometimes effectively no, service interruption.

- Remember the large volumes of data that will traverse your network. As mentioned in the previous section, networks tend to operate well below capacity. Backup might strain that. On a congested network, backups might not complete within an acceptable timeframe or they might choke out other vital operations. The best way to address such problems is through network capacity expansion. If you can’t do that, leverage QoS. If you employ internal firewalls, ensure that the flood of backup data does not overwhelm them. That might require more powerful hardware or specially tuned exclusions.
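To check whether a backup can finish inside its window, a back-of-the-envelope transfer-time estimate is often enough. The sketch below assumes decimal gigabytes, a link speed in megabits per second, and a hypothetical `efficiency` factor (0.7 here) standing in for protocol overhead and competing traffic; measure your own network rather than trusting that default.

```python
def transfer_hours(data_gb, link_mbps, efficiency=0.7):
    """Estimate hours needed to move data_gb (decimal GB) over a link.

    link_mbps is the nominal link speed in megabits per second.
    efficiency is the assumed usable fraction of that speed (0 < efficiency <= 1).
    """
    if data_gb < 0 or link_mbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("invalid input")
    megabits = data_gb * 8 * 1000          # 1 GB = 8,000 megabits (decimal units)
    seconds = megabits / (link_mbps * efficiency)
    return seconds / 3600


def fits_window(data_gb, link_mbps, window_hours, efficiency=0.7):
    """Return True if the estimated transfer completes within the window."""
    return transfer_hours(data_gb, link_mbps, efficiency) <= window_hours


if __name__ == "__main__":
    # 500 GB nightly backup with an 8-hour window, on two candidate links.
    for mbps in (100, 1000):
        hours = transfer_hours(500, mbps)
        print(f"{mbps} Mbps: {hours:.1f} h, fits: {fits_window(500, mbps, 8)}")
```

The same arithmetic applies to Internet and WAN links for offsite copies, where the nominal speed is usually much lower and the window correspondingly tighter.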

Best Practices for Backing Up Different Services

While determining your RTOs and RPOs, you will naturally learn the priority of the data and systems that support your organization. Use that knowledge to balance your backup technology and scheduling.

- Utilize specialized technology where appropriate. Most modern backup applications, such as Altaro VM Backup, recognize Microsoft Exchange and provide highly granular backup and recovery. In the event of small problems (e.g., an accidentally deleted e-mail), you can make small, targeted recoveries instead of rebuilding the entire system. Do not overuse these tools, however. They add complexity to backup and recovery. If you have a simplistic implementation of a specialized tool that won’t benefit from advanced protections, apply a regular backup strategy. As an example, if you have a tiny SQL database that changes infrequently, don’t trouble yourself with transaction log backups or continuous data protection.

- Space backups to fit RPOs. This best practice is partly axiomatic; a backup that doesn’t meet RPO is insufficient. However, if a system has a long RPO and you configure its backup for a short interval, it will consume more backup space than strictly necessary. If that would cause your organization a hardship in storage funding, then reconfigure to align with the RPO.

- Pay attention to dependencies. Services often require support from other services. Your web front-ends depend on your database back-ends, for example. If the order of backup or recovery matters, time them appropriately.

- Minimize resource contention. With multiple backup endpoints come scheduling conflicts. If your backup system tries to do too much at once, it might fail or cause problems for other systems. Try to target quieter times for larger backups. Stagger disparate systems so that they do not run concurrently.
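Dependency ordering and staggering can both be expressed in a few lines. The following sketch uses Python’s standard `graphlib` module to order systems so that each one comes after the services it depends on (a sensible default for recovery), then assigns non-overlapping start times. The system names, durations, and the helper names `recovery_order` and `stagger` are hypothetical.

```python
from graphlib import TopologicalSorter


def recovery_order(depends_on):
    """Return system names ordered so that dependencies come first.

    depends_on maps each system to the set of systems it requires,
    e.g. {"web": {"db"}} means "web" needs "db" to be available first.
    """
    return list(TopologicalSorter(depends_on).static_order())


def stagger(order, duration_min, start_min=0):
    """Assign start offsets (in minutes) so that no two jobs overlap."""
    schedule = {}
    t = start_min
    for name in order:
        schedule[name] = t
        t += duration_min[name]   # next job starts when this one should end
    return schedule


if __name__ == "__main__":
    deps = {"web": {"db"}, "db": {"storage"}, "mail": {"storage"}}
    order = recovery_order(deps)
    durations = {"storage": 60, "db": 45, "web": 15, "mail": 30}
    print(order)
    print(stagger(order, durations))
```

Strictly sequential scheduling is the most conservative choice; if your backup system and network can handle some concurrency, independent systems (such as `web` and `mail` here) could run in parallel instead.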

Best Practices for Optimizing Backup Storage

If you work within a data-focused organization, your quantity of data might grow faster than your IT budget. Backups naturally incur cost by consuming space. Follow these best practices to balance storage utilization:

- Constrain usage of SSD and other high-speed technology as backup storage to control costs. Consider systems that require a short RTO first. After that, look at any system that churns a high quantity of data and has a short RPO; it might need SSD to meet the requirement. Remember that RPO alone does not justify fast storage; if a more cost-effective solution meets your needs, use it.

- Move backups from fast to slow storage where appropriate. It makes sense to place recent backups of your short RTO/RPO systems on fast disk. It doesn’t make sense to leave them there. If you ever need to restore one of those systems from an older point, then either you have suffered a major storage failure, or you have no failure at all (e.g., someone needs to see a specific piece of data that no longer exists in live storage). In those cases, you can keep costs down by sacrificing quick recovery. Move older data to slower, less expensive storage.

- Use archival disconnected storage. Recovering from disconnected storage implies that a catastrophe-level event has occurred. Such events happen rarely enough that you can justify media that maximizes capacity per dollar. Manufacturers often market such storage as “archival”. They expect customers to use those products to hold offline data, so they typically offer a higher data survival rate for shelved units. In comparison, some technologies, such as SSD, can lose data if left unpowered for long periods of time. Your offline data must reside on media built for offline data.

- Carefully craft retention policies. In a few cases, regulations set your retention policies. In all other areas, think through the possibilities before deciding on a retention policy. You could keep your Active Directory for ten years, but what would you do with a ten-year-old directory? You could keep backups of your customer database for five years, but does your database already contain all data stretching back further than that? If you keep retention policies to their legal and logical minimums, you reduce your storage expense.

- Leverage space-saving backup features. Ideally, we would make full backups of everything. More distinct copies make our data safer. Realistically, we cannot hold or use very many full backups. Put features such as incremental backup, differential backup, deduplication, and compression to use. Remember that all of these except compression depend on a full backup. Spacing out full backups introduces some risk and extends recovery time.

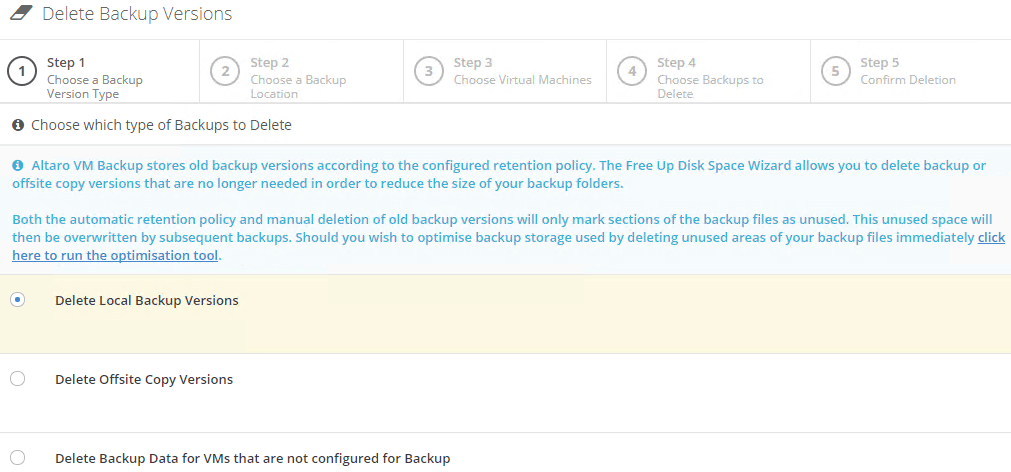

- Learn your backup software’s cleanup mechanism. Even in the days of all-tape backup, we had to remember to periodically clean up backup metadata. With disk-based backup, we need to reclaim space from old data. Most modern software (such as Altaro VM Backup) will automatically time cleanups to align with retention policies. However, it also should include a way for you to clear space on demand. You may use this option when retiring systems.
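The trade-off between full-backup spacing, retention, and storage can be made concrete with a rough estimate. The model below is a deliberate simplification and an assumption of this example, not a description of how any backup product stores data: one backup per day, each incremental equal to a fixed fraction of the full size, and no compression or deduplication.

```python
import math


def estimate_storage_gb(full_gb, daily_change_rate, full_interval_days, retention_days):
    """Rough storage needed for a full + incremental schedule.

    full_gb:            size of one full backup
    daily_change_rate:  fraction of the data that changes per day (e.g. 0.05)
    full_interval_days: days between full backups (1 = full every day)
    retention_days:     number of daily restore points kept
    """
    if full_interval_days < 1 or retention_days < 1:
        raise ValueError("intervals must be at least one day")
    fulls = math.ceil(retention_days / full_interval_days)
    incrementals = retention_days - fulls
    return fulls * full_gb + incrementals * full_gb * daily_change_rate


def longest_restore_chain(full_interval_days):
    """Worst-case number of backups read in one restore: a full plus its incrementals."""
    return full_interval_days


if __name__ == "__main__":
    # 1 TB system, 5% daily change, 28 days of retention.
    for interval in (1, 7, 28):
        size = estimate_storage_gb(1000, 0.05, interval, 28)
        print(f"full every {interval:>2} days: ~{size:,.0f} GB, "
              f"restore chain up to {longest_restore_chain(interval)} backups")
```

Widening the interval between fulls shrinks the storage figure but lengthens the restore chain, which is the risk and recovery-time cost noted in the list above.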

Best Practices for Backup Security

Every business is responsible for protecting its client and employee data. Those in regulated industries may have additional compliance requirements. A full security policy exceeds the scope of a blog article, so ensure that you perform due diligence by researching and implementing proper security best practices. Some best practices that apply in backup:

- Follow standard security best practices to protect data at rest and during transmission.

- Encrypt your backup data, either by using automatically encrypted storage or the encryption feature of your backup application.

- Implement digital access control. Apply file and folder permissions so that only the account that operates your backup application can write to backup storage. Restrict reads to the backup application and authorized users. Use firewalls and system access rules to keep out the curious and malicious. For extreme security, you can isolate backup systems almost completely.

- Implement physical access control. A security axiom: anyone who can physically access your systems or media can access their data. Encryption might slow someone down, but do not count on it to protect media that has left your control indefinitely. Keep unnecessary people out of your datacenter and away from your backup systems. Create a chain-of-custody procedure for your backup media. Degauss magnetic media, or physically destroy any media, that you intend to retire.

Protect Your Data with Standard Best Practices

If you follow the backup strategy best practices listed above, then you will have a strong backup plan with an optimized schedule for each service and system. Continue your protection activities by following the best practices that apply to all types of systems: patch, repair, upgrade, and refresh operating systems, software, and hardware as sensible.

Most importantly, periodically review your backup strategy. Everything changes. Don’t let even the smallest alteration leave your data unprotected!
