Hyper-V is inherently a host-bound service. It controls all of the resources that belong to the physical machine, but that is the extent of its reach. This is where Microsoft Failover Clustering comes in.

Failover Clustering works alongside Hyper-V to protect virtual machines, but it is a separate technology. As a result, it uses its own management interface, aptly named “Failover Cluster Manager”.


This portion of our series will introduce you to clustering technology and take you on a tour through the entire Failover Cluster Manager interface, much like our sections on Hyper-V Manager. As with those sections, this is intended to be an interface guide only. The constituent concepts of failover clustering will be explained in other articles in this series.

What is Microsoft Failover Clustering?

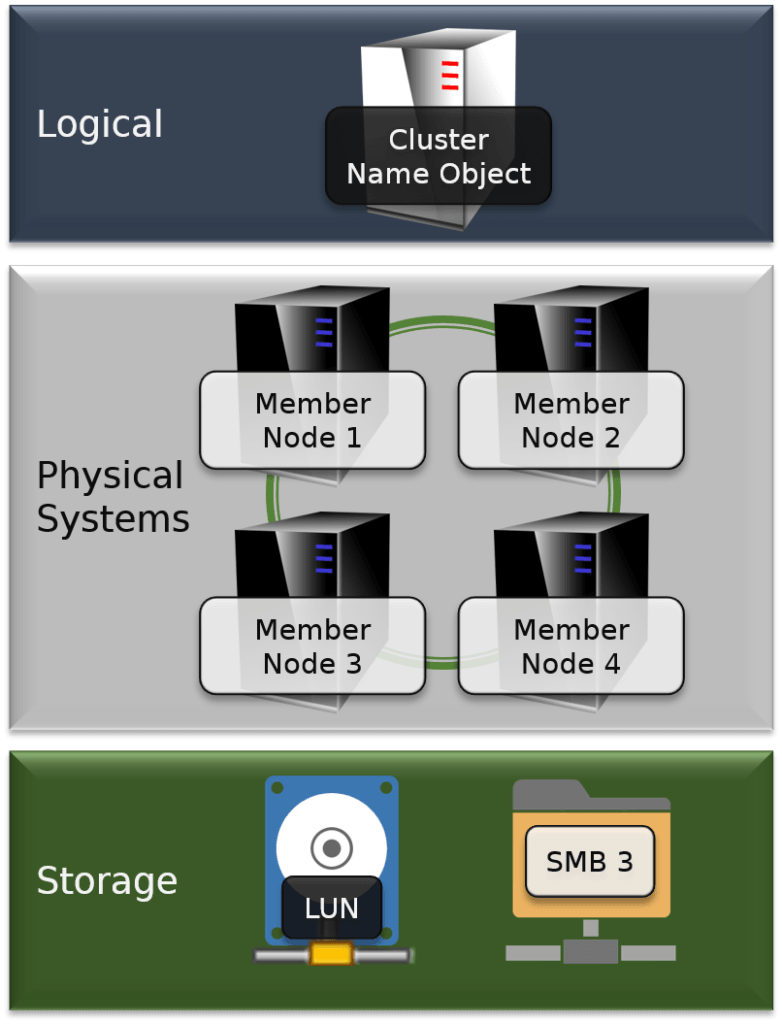

Microsoft Failover Clustering is a cooperative system component that enables applications, services, and even scripts to increase their availability without human intervention. Between one and sixty-four physical hosts are combined into a single unit, called a Failover Cluster.

These hosts share one or more common networks and at least one shared storage location. Applications, services, and scripts running on these hosts are presented to Microsoft Failover Clustering as roles. The cluster can move these roles from one host to another very quickly in response to commands or environmental events. The cluster itself is represented on the network by at least one logical entity known as a Cluster Name Object (CNO).

Many roles, including Hyper-V virtual machines, can be moved intentionally without any service interruption. All roles can be quickly and automatically moved to a surviving node in the event of a node failure. Clusters are therefore called high availability technology: they can decrease, and potentially eliminate, the amount of time that a service is unreachable outside of its own scheduled maintenance windows.

Microsoft Failover Clustering always uses an active/passive configuration. This means that any single role can operate on only one cluster node at any given time. A cluster can run multiple roles simultaneously, however, and depending on the role type, separate roles may operate independently on separate hosts. For Hyper-V specifically, each individual virtual machine is a role; the Hyper-V service as a whole is not. Therefore, a single virtual machine can run on only one Hyper-V host at any given time, but each host can run multiple virtual machines simultaneously.

If a host crashes, all of its virtual machines will also crash, but they will be automatically restarted on another cluster host. Because a virtual machine cannot run on two hosts simultaneously, Hyper-V virtual machines are not considered fault tolerant.
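The active/passive rule and the crash-and-restart behavior can be illustrated with a toy model. The following Python sketch is not Failover Clustering’s actual placement logic: the `ToyCluster` class and its methods are hypothetical, and spreading orphaned roles round-robin across the survivors is an assumption made purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ToyCluster:
    """Hypothetical model of role ownership; not a real clustering API."""
    nodes: list
    owner: dict = field(default_factory=dict)  # role name -> the single node running it
    down: set = field(default_factory=set)

    def place(self, role: str, node: str) -> None:
        # Active/passive: a role has exactly one owner, so placing it replaces any previous owner.
        if node not in self.nodes or node in self.down:
            raise ValueError(f"{node} is not available")
        self.owner[role] = node

    def fail_node(self, node: str) -> dict:
        """Mark a node as crashed and restart its roles on surviving nodes."""
        self.down.add(node)
        survivors = [n for n in self.nodes if n not in self.down]
        if not survivors:
            raise RuntimeError("no surviving nodes to restart roles on")
        orphaned = [role for role, n in self.owner.items() if n == node]
        restarted = {}
        for i, role in enumerate(orphaned):
            target = survivors[i % len(survivors)]  # assumption: simple round-robin spread
            self.owner[role] = target  # the VM is cold-restarted here, not live-migrated
            restarted[role] = target
        return restarted


cluster = ToyCluster(nodes=["node1", "node2", "node3"])
cluster.place("vm-a", "node1")
cluster.place("vm-b", "node1")
cluster.place("vm-c", "node2")
print(cluster.fail_node("node1"))  # {'vm-a': 'node2', 'vm-b': 'node3'}
```

Because ownership is a single dictionary entry per role, the model cannot express a virtual machine running on two hosts at once, which is exactly why clustered virtual machines are highly available rather than fault tolerant.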

What is Failover Cluster Manager?

Just as Hyper-V has Hyper-V Manager, Failover Clustering has Failover Cluster Manager. This tool is used to create and maintain failover clusters. It deals with the cluster’s roles, nodes, storage, and networking. The tool itself is not specific to Hyper-V, but it does share much of Hyper-V Manager’s functionality for controlling virtual machines.

As with Hyper-V Manager, this is a Microsoft Management Console (mmc.exe) snap-in. It is very small and has no particular hardware or software requirements except for a dependency upon components of Windows Explorer. Because of that, it cannot run directly on Hyper-V Server or Windows Server Core installations. It can be used to remotely control such hosts, however.

How to Access Failover Cluster Manager

If you haven’t yet installed Failover Cluster Manager, note that it is a component of the Remote Server Administration Tools (RSAT). Installation and activation of that toolset was covered in section 2.3. Once the tools are activated, the icon for Failover Cluster Manager appears in the Administrative Tools group in Control Panel and on the Start menu/screen.

Introducing the Failover Cluster Manager Interface

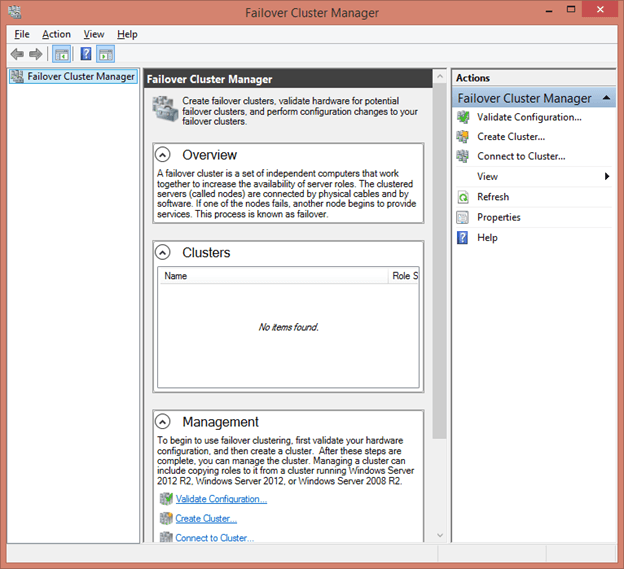

The following screenshot shows Failover Cluster Manager as you see it when it is opened for the first time:

As with Hyper-V Manager, it is divided into three panes. The left pane is currently empty, but attached clusters will appear underneath the Failover Cluster Manager root node, in much the same fashion as Hyper-V Manager’s host display. The tools differ in that each cluster has its own sub-nodes for the various elements of failover clustering.

The center pane’s default view contains a great deal of textual information and hyperlinks. Its contents change based on what is selected in the left pane. You can return to this initial set of informational blocks at any time by selecting the root Failover Cluster Manager node. In some views, the center pane is divided in half, with the lower section displaying information about the currently selected item.

The right pane is a context menu, just as it is in Hyper-V Manager. The contents of its upper area change depending upon what is selected in the left pane. If an item is selected in the center pane, an additional section containing the context menu for that object is added to the right pane.

How to Use Failover Cluster Manager to Validate a Cluster

One of the most useful capabilities provided by failover clustering is its ability to scan existing and potential cluster nodes to determine their suitability for clustering. This process should be undertaken prior to building any cluster and before adding any new nodes to an existing cluster. The primary reason is that it can help you to detect problems before they even occur. The secondary reason is that Microsoft support could potentially refuse to provide assistance for a cluster that has not been validated. A cluster that has passed all validation tests is considered fully supportable even if its components have not been specifically certified.

To help ensure that your validation is successful, perform the necessary steps to prepare your nodes for clustering. A quick synopsis is given in the next section on using Failover Cluster Manager to create a cluster. This preparation isn’t strictly necessary, as the validation wizard will alert you to most problems, but it will save time.

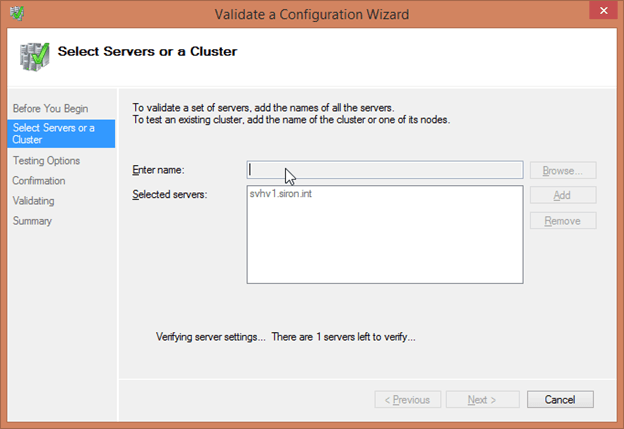

To begin, select either of the Validate Configuration items. One is found on the context menu of the Failover Cluster Manager root node in the left pane, and the other is in the center pane when that root node is selected. Once you’ve done so, the Validate a Configuration Wizard appears. The wizard contains the following series of screens:

- The first page of the wizard is merely informational. You can optionally choose to never show that page again. When ready, click Next.

- The second page asks you to select the hosts or the cluster to be validated. If a host is already part of a cluster, selecting it will automatically include all nodes of the cluster. You can use the Browse button to scan Active Directory for computer names. You can also just type the short name, fully-qualified domain name, or IP address of a computer and click Add to have Failover Cluster Manager search for it. Once all hosts to be validated for a single cluster have been selected, press Next.

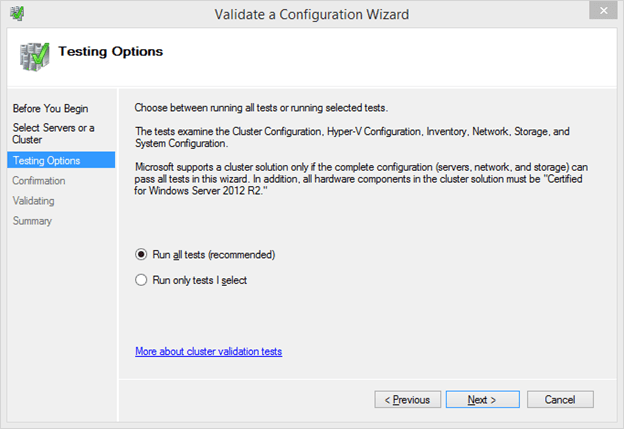

- The following screen provides two choices. The first specifies that you wish to run all available tests. This is the most thorough, and this is the validation that Microsoft expects for complete support. However, some tests do require shared storage to be taken offline temporarily, so this may not be appropriate for an existing cluster. The second option allows you to specify a subset of available tests.

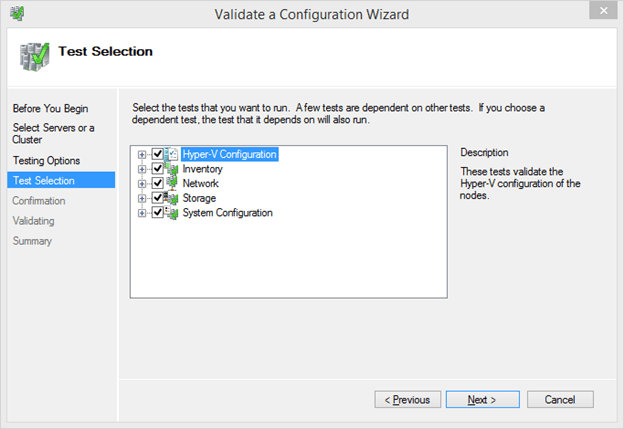

- If you opted to run only selected tests, the next screen will ask you to specify which tests to run. You can click the plus icon next to any top-level item to see sub-tests for that category. Deselect the Storage category if you are working on a live cluster and interruption is not acceptable.

- After test selection, you’ll be taken to a confirmation screen that lists the selected nodes and tests that will be run on them. Verify that all is as expected and click Next when ready.

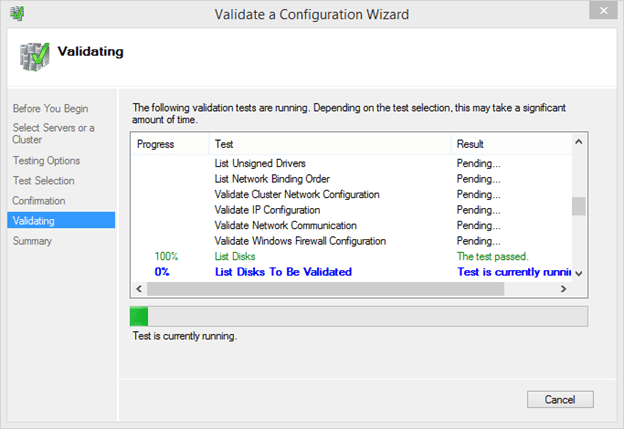

- The tests will begin and their progress will be displayed. The overall test battery can take quite some time to complete.

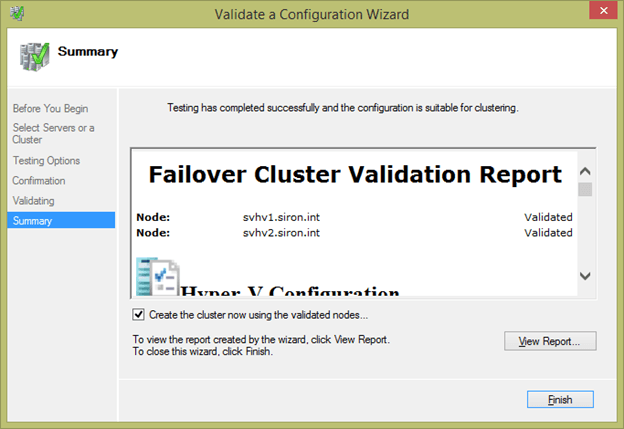

- When the tests complete, you will automatically be brought to the Summary page. Here, you can click View Report to see a detailed list of all tests and their outcomes. You can check the box Create the cluster now using the validated nodes in order to start the cluster creation wizard immediately upon clicking Finish.

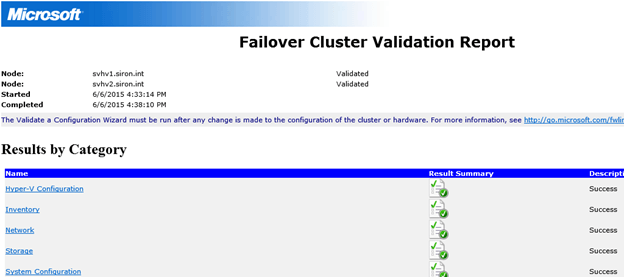

There are three possible outcomes for the validation process. If the wizard doesn’t find any problems, it will mark your cluster as validated. If it finds any concerns that don’t prevent clustering from working but will result in a suboptimal configuration (such as multiple IPs on the same host in the same subnet), it will mark the cluster as validated but with warnings. The final possibility is that the wizard will find one or more problems that are known to prevent clustering from operating properly and will indicate that the configuration is not suitable for clustering.
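The three outcomes amount to a simple precedence rule: any failure makes the configuration unsuitable, otherwise any warning downgrades it to "validated with warnings". The Python sketch below expresses that rule; the `CheckResult` enum, the outcome strings, and the sample test names are hypothetical paraphrases, not the exact labels the wizard writes.

```python
from enum import Enum


class CheckResult(Enum):
    SUCCESS = "success"
    WARNING = "warning"
    FAILED = "failed"


def overall_outcome(results: dict) -> str:
    """Collapse per-test results into one of the wizard's three outcomes.

    results maps a test name to a CheckResult. Any failure outweighs any warning.
    """
    found = set(results.values())
    if CheckResult.FAILED in found:
        return "not suitable for clustering"
    if CheckResult.WARNING in found:
        return "validated with warnings"
    return "validated"


# Hypothetical test names, used only to exercise the rule.
sample = {
    "network test": CheckResult.WARNING,
    "inventory test": CheckResult.SUCCESS,
}
print(overall_outcome(sample))  # validated with warnings
```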

The generated report is an HTML page that can be viewed in any modern web browser. A set of hyperlinks near the top jumps to the detailed results for each item. Warning text is highlighted in yellow and error text is highlighted in red.

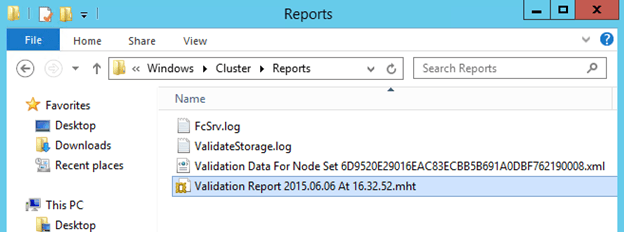

This report will be automatically saved to “C:\Windows\Cluster\Reports” on each one of the nodes. The file name will begin with “Validation Report”.
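If you want to pick up the newest report from a node without browsing the folder by hand, a small script can do it. This is a minimal sketch, assuming you run it on a cluster node (or pass a path to a copy of the folder); it relies only on the folder and the "Validation Report" file name prefix given above and does not assume any particular file extension. The function name is hypothetical.

```python
from pathlib import Path
from typing import Optional

REPORTS_DIR = r"C:\Windows\Cluster\Reports"  # location given above; run this on a node


def latest_validation_report(reports_dir: str = REPORTS_DIR) -> Optional[Path]:
    """Return the newest file whose name begins with 'Validation Report', or None."""
    folder = Path(reports_dir)
    if not folder.is_dir():
        return None
    reports = [p for p in folder.iterdir()
               if p.is_file() and p.name.startswith("Validation Report")]
    if not reports:
        return None
    return max(reports, key=lambda p: p.stat().st_mtime)  # most recently modified


if __name__ == "__main__":
    report = latest_validation_report()
    print(report if report else "No validation report found")
```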

How to Use Failover Cluster Manager to Create a Cluster

The surest way to be prepared to create a cluster is to have completed a fully successful validation as described above. Here is a quick summary of the steps that must be completed before creating the cluster:

- All nodes must have at least one IP address in the same subnet

- The Failover Clustering feature must be installed on all hosts

- The management operating system on all nodes must be the same version

- All nodes must be in the same domain and organizational unit

- Your user account must have the correct privileges to create a computer account in the organizational unit where the nodes reside or the computer account must be pre-staged in that location

The recommended steps are:

- Cluster validation should have been completed successfully

- Shared storage should be prepared and connected (SMB 3 storage has no particular “connection” step, but the node computer accounts should have Full Control permissions at both the NTFS and share levels)

- One shared storage LUN or location should be set aside for quorum (regardless of the number of nodes)
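Several of the required items above can be checked mechanically before you even open the wizard. The Python sketch below assumes you have already gathered a per-node inventory by some other means; the `NodeInfo` record, its fields, and the function name are hypothetical. It checks the node count (one to sixty-four), a subnet shared by all nodes, the installed feature, matching OS versions, and a common domain and OU. It does not check account privileges, and it is no substitute for the validation wizard.

```python
import ipaddress
from dataclasses import dataclass


@dataclass
class NodeInfo:
    """Hypothetical inventory record; gather the values however you prefer."""
    name: str
    addresses: list           # CIDR strings such as "192.168.10.11/24"
    os_version: str
    domain: str
    ou: str
    clustering_installed: bool


def prerequisite_problems(nodes: list) -> list:
    """Return human-readable problems; an empty list means these checks passed."""
    problems = []
    if not 1 <= len(nodes) <= 64:
        problems.append("a cluster must contain between 1 and 64 nodes")
        return problems
    # Each node's set of subnets; at least one subnet must be common to all nodes.
    subnets = [{ipaddress.ip_interface(a).network for a in n.addresses} for n in nodes]
    if not set.intersection(*subnets):
        problems.append("no subnet is shared by all nodes")
    for n in nodes:
        if not n.clustering_installed:
            problems.append(f"{n.name}: Failover Clustering feature is not installed")
    if len({n.os_version for n in nodes}) > 1:
        problems.append("nodes run different operating system versions")
    if len({(n.domain, n.ou) for n in nodes}) > 1:
        problems.append("nodes are not all in the same domain and OU")
    return problems


a = NodeInfo("hv1", ["192.168.10.11/24"], "2012 R2", "corp.example", "OU=Hyper-V", True)
b = NodeInfo("hv2", ["192.168.10.12/24", "10.0.0.12/24"], "2012 R2", "corp.example", "OU=Hyper-V", True)
print(prerequisite_problems([a, b]))  # []
```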

If you used the validation wizard’s option to create the cluster, you’ll be taken straight to the Create Cluster Wizard. Otherwise, open it with any of the Create Cluster links. They are in the same locations as the Validate Configuration links. The screens of this wizard are detailed below:

- The first screen is informational. If you’d like, you can check the box to never be shown this page when running the wizard in the future. Click Next when ready.

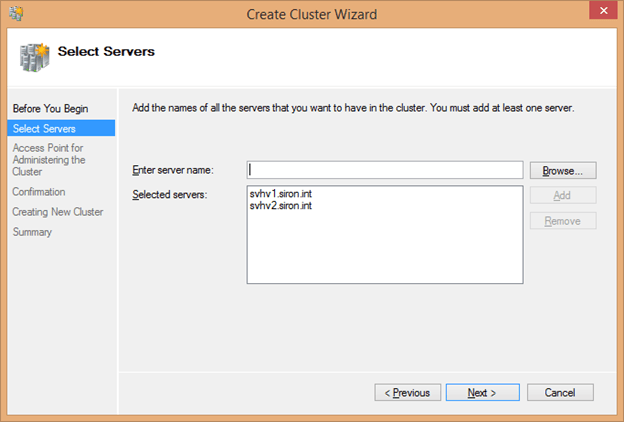

- If you started the wizard directly without going through the validation first, you’ll next be presented with a dialog requesting the names of the host(s) to join together. This works the same way as the identical dialog page in the validation wizard.

- The next page asks you to create the administrative computer name and IP address. This object and its IP address are not as important for a Hyper-V cluster as they are for other cluster types because, unlike most other clustered roles, virtual machines are not accessed through the cluster. However, they are still required. In the Cluster Name text box, enter the name to use for the computer account. The matching object that is created in Active Directory is known as the cluster’s Cluster Name Object (CNO). If you are using a pre-staged account, ensure that you use identical spelling. In the lower section, choose the network or networks on which to create administrative access points and enter the IP address that the cluster name should use on each. The networks that appear are selected automatically by detecting adapters on each node that have a gateway and are in subnets common to all nodes. Preferably, there will be only one adapter per node that fits this description.

- Next, you’ll be brought to the Confirmation page. Its only option is Add all eligible storage to the cluster. If it is checked, any LUNs that have been attached to all nodes will be automatically added as cluster disks, with one selected for quorum. If you’d like to control storage manually, uncheck this box before clicking Next.

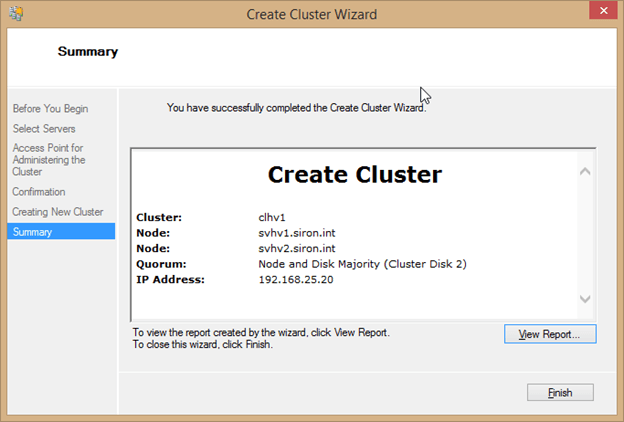

- The next dialog shows a progress bar while your cluster is built. It automatically advances to the Summary page once it’s completed. There, a View Report button opens a simple web page listing all the steps that were taken during cluster creation, which can help you troubleshoot any errors that occur. As with the validation wizard, the report is saved in C:\Windows\Cluster\Reports on all nodes. To close the wizard, click Finish.

If the wizard successfully creates the cluster, Failover Cluster Manager automatically connects to it afterward.
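The wizard’s automatic choice of networks for the administrative access point, described in the Create Cluster Wizard steps above, boils down to an intersection: subnets where every node has an adapter with a gateway. The Python sketch below mirrors that description under stated assumptions; the input format and both function names are hypothetical, and the wizard’s real detection may consider more than this. The second function checks that the IP you plan to enter actually belongs to one of those networks.

```python
import ipaddress


def candidate_access_networks(adapters_by_node: dict) -> set:
    """Subnets in which every node has an adapter configured with a default gateway.

    adapters_by_node maps a node name to a list of (cidr, gateway) tuples;
    gateway is None for adapters without one (for example, a dedicated storage NIC).
    """
    per_node = [
        {ipaddress.ip_interface(cidr).network for cidr, gateway in adapters if gateway}
        for adapters in adapters_by_node.values()
    ]
    return set.intersection(*per_node) if per_node else set()


def cluster_ip_fits(ip: str, networks: set) -> bool:
    """True if the proposed administrative IP lies inside one of the candidate networks."""
    address = ipaddress.ip_address(ip)
    return any(address in net for net in networks)


adapters = {
    "hv1": [("192.168.10.11/24", "192.168.10.1"), ("172.16.0.11/24", None)],
    "hv2": [("192.168.10.12/24", "192.168.10.1"), ("172.16.0.12/24", None)],
}
nets = candidate_access_networks(adapters)
print(nets)                                      # {IPv4Network('192.168.10.0/24')}
print(cluster_ip_fits("192.168.10.20", nets))    # True
```

Having exactly one gateway-bearing adapter per node, as recommended above, keeps this intersection to a single, unambiguous network.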

How to Connect to an Existing Cluster

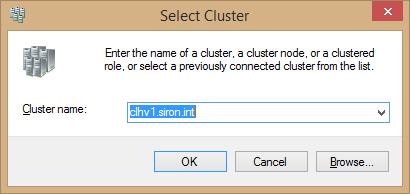

If you’ve already got a cluster and just need to connect Failover Cluster Manager to it, that’s a simple process. Find the Connect to Cluster link on the context menu for the root Failover Cluster Manager item in the left pane or the link in the center pane. That will open the Select Cluster dialog:

You can use the Browse button to select from a list of known clusters. Click OK to connect. The cluster will appear in the left pane under the Failover Cluster Manager root node. Each time you open this applet with your user account on this computer, it will automatically reconnect to this cluster. To remove it, use the Close Connection context menu item.

How to Manage the Hyper-V Cluster

Now that you’ve successfully created and connected to your cluster, you can use the tree objects in the left pane to navigate through its sections and to view and manipulate the cluster’s components. The next few articles will dive into these sections in greater detail. Before jumping into that, note that a number of operations become available for the cluster itself. All of them appear on the cluster’s context menu, and many also appear in the Configure section of the center pane when the cluster object is selected in the left pane:

- Configure Role opens the wizard with the same name. This is how you make an existing virtual machine highly available. The process will be discussed in the next chapter.

- Validate Cluster opens the cluster validation wizard for this cluster. Remember to rerun it whenever you make changes to the cluster so that you always have an up-to-date report.

- View Validation Report opens the last validation report in the default web browser. This doesn’t work remotely.

- Add Node opens a wizard very similar to the cluster creation wizard that allows you to add a node to the currently selected cluster. This wizard won’t be shown here as it is not substantially different from the creation wizard.

- Close Connection permanently removes this cluster from the local Failover Cluster Manager applet running under your account. You can use the Connect option to reattach to it at any time.

- Reset Recent Events clears the notifications of recent cluster-related events. These are viewable on the Cluster Events node which will be discussed in a later chapter.

- More Actions opens a sub-menu that contains several additional wizards. Some require a longer explanation and are discussed individually below. The items are:

  - Configure Cluster Quorum Settings guides you through setting up how the cluster determines the minimum number of nodes required to operate. Find our detailed explanation and walkthrough at this link: https://www.altaro.com/hyper-v/quorum-microsoft-failover-clusters/

  - Copy Cluster Roles opens the cluster migration wizard, which migrates roles from another cluster to the current cluster. Unfortunately, resources were not available to demonstrate this process for this write-up.

  - Shut Down Cluster performs the designated cluster-controlled offline action on each role and then stops the cluster service on each node.

  - Destroy Cluster removes all nodes from the cluster and disables the cluster computer object in Active Directory. All configuration information is lost.

  - Move Cluster Core Resources relocates the quorum disk and the cluster name object to another node. You can allow Failover Clustering to select the node or you can select it yourself.

  - Cluster-Aware Updating opens the Cluster-Aware Updating (CAU) interface. This tool lets you set up an automation routine that patches all the nodes in your cluster in an orderly fashion without imposing downtime upon the guests. This is a large, complicated topic. Read more in our article series on the subject, starting at: https://www.altaro.com/hyper-v/cluster-aware-updating-hyper-v-basics/

- View brings you to a dialog where you can customize which columns are displayed in the center pane.

- Refresh retrieves updated information from the cluster and displays it in the center pane.

- Properties opens the cluster’s properties dialog with basic configuration options.

- Help initiates the MMC help viewer with basic information about connecting and troubleshooting Failover Cluster Manager.
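The Cluster-Aware Updating entry above describes patching every node without guest downtime. The underlying idea is a rolling update: take one node out of service at a time, move its roles elsewhere, patch it, and return it before touching the next. The Python sketch below shows only that ordering; `drain`, `patch`, and `resume` are hypothetical callbacks standing in for actions that CAU performs for you, and the real tool handles retries, failures, and restarts that this sketch ignores.

```python
from typing import Callable, List


def rolling_update(nodes: List[str],
                   drain: Callable[[str], None],
                   patch: Callable[[str], None],
                   resume: Callable[[str], None]) -> List[str]:
    """Update nodes one at a time so the remaining nodes keep hosting the guests."""
    if len(nodes) < 2:
        raise ValueError("need at least two nodes to keep guests running while one is patched")
    completed = []
    for node in nodes:
        drain(node)   # move the node's roles elsewhere (virtual machines can live migrate)
        patch(node)   # install updates, restarting the node if needed
        resume(node)  # allow the node to host roles again before touching the next one
        completed.append(node)
    return completed


if __name__ == "__main__":
    steps = []
    rolling_update(["hv1", "hv2"],
                   lambda n: steps.append(f"drain {n}"),
                   lambda n: steps.append(f"patch {n}"),
                   lambda n: steps.append(f"resume {n}"))
    print(steps)
```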

Shut Down Cluster

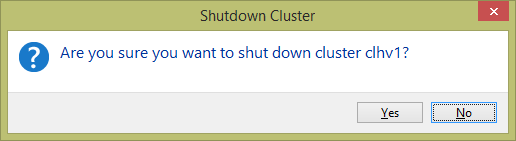

This option on the cluster’s More Actions menu is used to completely stop the cluster. The interface is simple; you’re given a single Yes/No dialog:

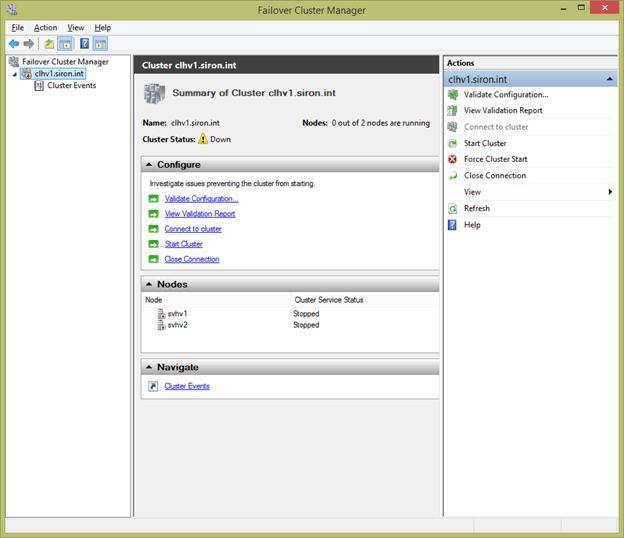

If you answer Yes, each of the cluster’s protected roles is subjected to the Cluster-Controlled Offline Action configured for it (details in the next chapter), and then the cluster service is stopped on all nodes. Once this happens, the context menu and center pane for the cluster change:

All of the management nodes for the cluster are removed with the exception of Cluster Events and several of the context menu items are gone as well. Two new items are added for this state.

- Start Cluster attempts an orderly startup of the cluster.

- Force Cluster Start force-starts the cluster service on as many nodes as possible, even if they are insufficient to maintain quorum.
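The difference between the two start options comes down to quorum. Under a simple majority model, strictly more than half of all configured votes (nodes plus an optional witness) must be present before the cluster can form; Force Cluster Start skips that check. The Python sketch below is a simplified model under that assumption. It ignores dynamic quorum and other vote adjustments, and the function names are hypothetical; the quorum model your cluster actually uses is set through Configure Cluster Quorum Settings.

```python
def has_quorum(nodes_total: int, nodes_up: int,
               witness_configured: bool = False, witness_online: bool = False) -> bool:
    """Simple majority: strictly more than half of all configured votes must be present."""
    total_votes = nodes_total + (1 if witness_configured else 0)
    present_votes = nodes_up + (1 if witness_configured and witness_online else 0)
    return present_votes > total_votes // 2


def can_start(nodes_total: int, nodes_up: int, force: bool = False, **witness) -> bool:
    """Start Cluster needs quorum; Force Cluster Start proceeds with whatever nodes respond."""
    if force:
        return nodes_up > 0
    return has_quorum(nodes_total, nodes_up, **witness)


print(has_quorum(4, 2))                                                # False: 2 of 4 votes is not a majority
print(has_quorum(4, 2, witness_configured=True, witness_online=True))  # True: 3 of 5 votes
print(can_start(4, 1, force=True))                                     # True: quorum is ignored
```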

Because the cluster is down, you are not able to view any of the roles. If you open Hyper-V Manager or use PowerShell, there are two things to expect. First, all highly available virtual machines have completely disappeared. This is because Failover Clustering unregistered them from the hosts they were assigned to and did not register them anywhere else. In the event that the cluster service cannot be restarted, you can use Hyper-V Manager’s or PowerShell’s virtual machine import process to register them without any data or configuration loss. The second thing to notice is that any virtual machine that was not made highly available is still in the same state it was when the cluster was shut down.

Using Failover Cluster Manager to Revive a Down Cluster

In the event that a cluster is shut down, your first attempt to bring it back online should always begin with the Start Cluster option. After selecting this option, you will need to wait a few moments. The interface should refresh automatically once it detects that the nodes are online. If it doesn’t, you can use the Refresh option. All roles will automatically be taken through the designated virtual machine Automatic Start Action.

Force Cluster Start is a tool of last resort. All responding nodes will be forced online and quorum will be ignored. Roles will be placed to the best of the cluster’s ability, and those that can be started will be, in order of their priority. Once quorum is re-established, the cluster will automatically return to normal operations.
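Starting roles "in order of their priority" when capacity is limited is essentially a stable sort followed by a cut-off. The Python sketch below illustrates that idea. The priority names High, Medium, Low, and No Auto Start are assumed here, and the `capacity` parameter is a hypothetical stand-in for however many roles the surviving nodes can actually host; the cluster’s real placement decisions weigh more than a simple count.

```python
from typing import List, Optional

# Assumed priority names; "No Auto Start" is deliberately absent so such roles are skipped.
PRIORITY_RANK = {"High": 0, "Medium": 1, "Low": 2}


def start_order(roles: dict, capacity: Optional[int] = None) -> List[str]:
    """Return role names to start, highest priority first.

    roles maps a role name to its priority name. Roles with an unrecognized priority
    (such as "No Auto Start") are skipped. capacity optionally caps how many roles fit.
    """
    startable = [name for name, prio in roles.items() if prio in PRIORITY_RANK]
    ordered = sorted(startable, key=lambda name: PRIORITY_RANK[roles[name]])  # stable sort
    return ordered if capacity is None else ordered[:capacity]


roles = {"sql01": "Medium", "dc01": "High", "lab01": "No Auto Start",
         "web01": "Low", "file01": "High"}
print(start_order(roles))              # ['dc01', 'file01', 'sql01', 'web01']
print(start_order(roles, capacity=2))  # ['dc01', 'file01']
```

Because Python’s `sorted` is stable, roles with equal priority keep their original relative order.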

Destroy Cluster

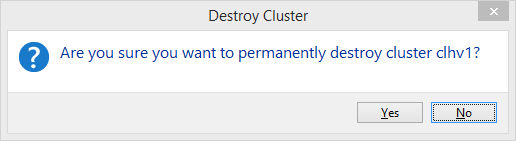

This option, also found on the cluster’s More Actions menu, is a final operation that completely removes the cluster object from Active Directory and deletes the cluster’s configuration from the nodes. Before you can take this option, you must remove all roles (details in the next chapter). As with the Shut Down Cluster command, there is only a single, simple dialog:

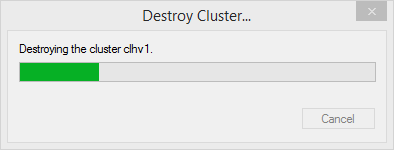

Upon responding Yes, a progress bar is displayed, after which the cluster is destroyed and all remnants are removed from the interface.

Cluster Properties

The cluster’s Properties dialog can be reached through its context menu item. It contains three tabs.

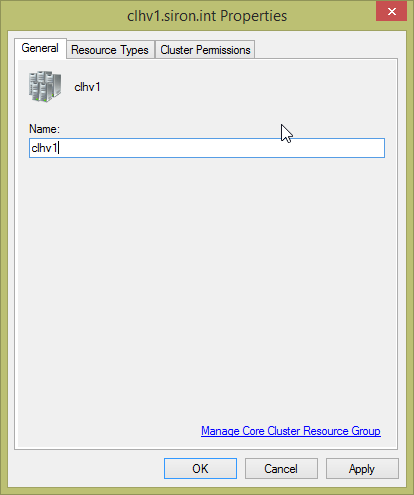

Cluster Properties – General

This is a very simple page with only two controls.

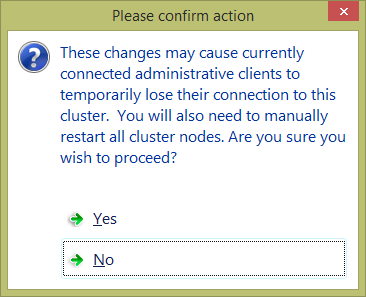

The stand-out item here is a text box that contains the name of the cluster name object (CNO). Changing this name can be quite disruptive, as indicated by the confirmation dialog:

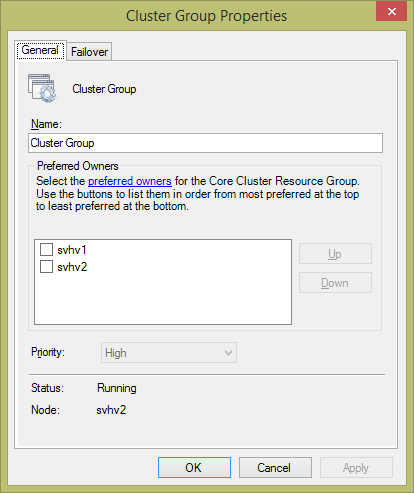

The other item on the dialog page is Manage Core Cluster Resource Group. This group includes the CNO, its IP address, and the Quorum witness. Clicking the link opens the following dialog:

While it is possible to use this dialog to rename the core cluster resource group, it’s not recommended, because third-party tools might identify the group by name. In the center of the dialog, you can check one or more nodes to indicate that you prefer the cluster move the resources to those nodes when it is automatically adjusting resources. The lower section of the dialog shows which node currently owns these resources and what their status is.
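Checking nodes in that dialog expresses a preference, not a hard requirement. The Python sketch below shows one way to model that: pick an online preferred node other than the current owner, and otherwise fall back to any other online node. The function name is hypothetical, treating list order as preference rank is an assumption, and the cluster’s real selection logic may weigh other factors.

```python
from typing import List, Optional


def pick_target(online_nodes: List[str], preferred: List[str],
                current_owner: Optional[str] = None) -> Optional[str]:
    """Choose where to move a resource group, favoring checked preferred owners."""
    candidates = [n for n in online_nodes if n != current_owner]
    for node in preferred:           # assumption: list order expresses preference
        if node in candidates:
            return node
    return candidates[0] if candidates else None  # fall back to any other online node


print(pick_target(["hv1", "hv2", "hv3"], preferred=["hv3"], current_owner="hv1"))  # hv3
print(pick_target(["hv1", "hv2", "hv3"], preferred=["hv1"], current_owner="hv1"))  # hv2
```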

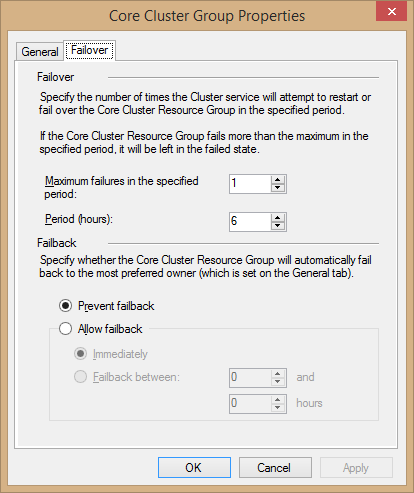

The Failover tab of this dialog contains settings that are common to all cluster-protected resources. They guide how the cluster treats a resource when the node it is on suffers a failure:

In the Failover box, you set limits that prevent a resource from flapping. Flapping occurs when a resource’s host fails and the resource repeatedly fails to start on another node, so it is taken through one failover attempt after another. Use the first textbox to indicate how many times the resource may attempt to fail over within a defined period, and use the second textbox to define the length of that period.
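The two Failover textboxes describe a rate limit: at most N failover attempts within a period of P hours. The Python sketch below models that as a sliding window. The class name is hypothetical, and whether the cluster counts attempts in exactly this sliding-window fashion is an assumption; the sketch only shows how the two values interact.

```python
from collections import deque


class FailoverLimiter:
    """Allow at most max_failures failover attempts within period_hours (sliding window)."""

    def __init__(self, max_failures: int, period_hours: float):
        self.max_failures = max_failures
        self.period = period_hours
        self.events = deque()  # timestamps (in hours) of recent failover attempts

    def allow_failover(self, now_hours: float) -> bool:
        # Forget attempts that have aged out of the window.
        while self.events and now_hours - self.events[0] >= self.period:
            self.events.popleft()
        if len(self.events) >= self.max_failures:
            return False  # limit reached: leave the resource failed instead of flapping
        self.events.append(now_hours)
        return True


limiter = FailoverLimiter(max_failures=2, period_hours=6)
print([limiter.allow_failover(t) for t in (0, 1, 2, 6.5)])  # [True, True, False, True]
```

At hour 6.5 the attempt from hour 0 has aged out of the six-hour window, so one more attempt is allowed.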

In the Failback section, you tell the cluster how to handle a case in which a failover has occurred and the original owning node comes back online. The default, Prevent failback, leaves the resource in its new location. Allow failback opens up options to control how the resource will fail back to its source location. The first option, Immediately, sends the resource back as soon as the source becomes available. The second option allows you to establish a failback window. The hours fields here set the beginning and ending of that window. 0 represents midnight and 23 represents 11 PM. So, if you want to allow the core resource to fail back between 6 PM and 7 AM, set the top textbox to 18 and the bottom textbox to 7.
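Because a window such as 18 to 7 crosses midnight, checking whether failback is currently allowed needs a wrap-around comparison. The Python sketch below shows that logic. The function name is hypothetical, and two details are assumptions rather than documented cluster behavior: the end hour is treated as exclusive (failback stops at 7:00), and equal start and end hours are treated as an empty window.

```python
def failback_allowed(hour: int, start: int, end: int) -> bool:
    """True if `hour` (0-23) falls inside the failback window from start to end."""
    if not all(0 <= h <= 23 for h in (hour, start, end)):
        raise ValueError("hours must be between 0 and 23")
    if start == end:
        return False  # assumption: an empty window
    if start < end:
        return start <= hour < end          # same-day window, e.g. 9 -> 17
    return hour >= start or hour < end      # window wraps past midnight, e.g. 18 -> 7


print(failback_allowed(20, 18, 7))  # True: 8 PM is inside the 6 PM to 7 AM window
print(failback_allowed(12, 18, 7))  # False: noon is outside it
```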

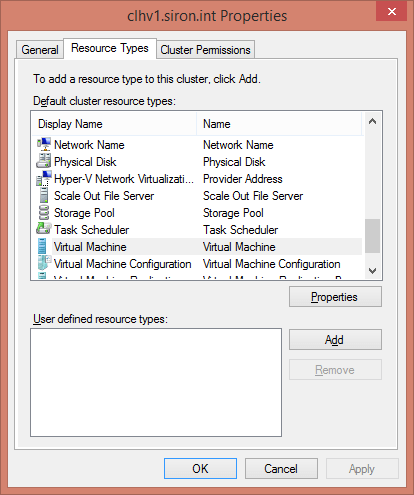

Cluster Properties – Resource Types

The primary purpose of the Resource Types tab is to establish health check intervals for clustered resources. The tab lists all the potential resource types for your cluster.

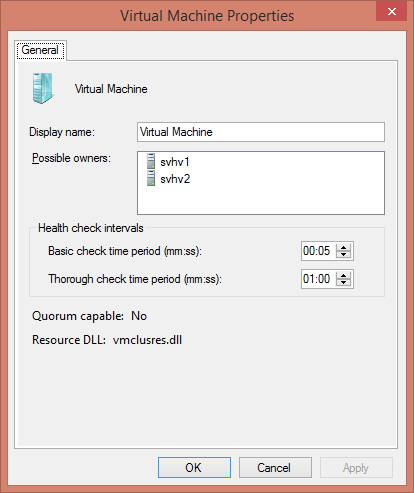

The item of primary interest is Virtual Machine. Highlight that role and click the Properties button. Doing so will open the following dialog:

The purpose of this dialog is to establish the interval between health checks. The controls are the same for all cluster resource types. In most cases, the defaults should be adequate. You can reduce the interval if you want failures to be detected more rapidly. If you have high-latency inter-node communications or known intermittent issues with your cluster, you can raise these thresholds to reduce failover instances. You may also need to duplicate these settings for the Virtual Machine Configuration resource.

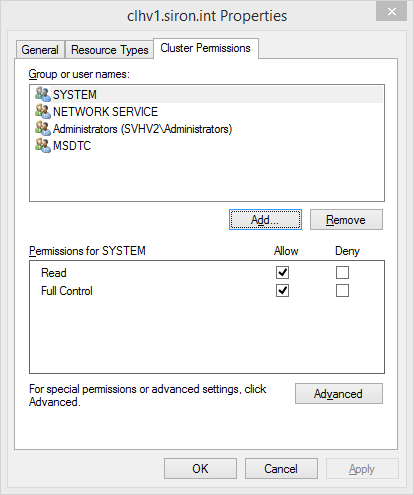

Cluster Properties – Cluster Permissions

It is usually unnecessary to make changes to the cluster permissions. For administrative privileges, it’s better to control access through the local Administrators group on the nodes. If you do choose to use this dialog, it is the standard Windows permissions dialog. The only permissions available are Read and Full Control.

Continuing into Advanced Cluster Configuration

This chapter has worked through all the basics of using Failover Cluster Manager to set up, connect to, and configure your cluster. The following articles will guide you through the additional cluster management components of this graphical tool.