In a previous post, we covered Storage Spaces Direct (S2D) in general and gave a quick introduction to the technology and the product within Windows Server 2016. Now we are going to take a deeper look at the technologies that are directly connected to S2D and generally recommended for getting the best performance out of your servers.

Remote Direct Memory Access – RDMA

In general, RDMA can be explained by its Wikipedia entry.

In computing, remote direct memory access (RDMA) is a direct memory access from the memory of one computer into that of another without involving either one’s operating system. This permits high-throughput, low-latency networking, which is especially useful in massively parallel computer clusters.

Source: https://en.wikipedia.org/wiki/Remote_direct_memory_access

But how does it work?

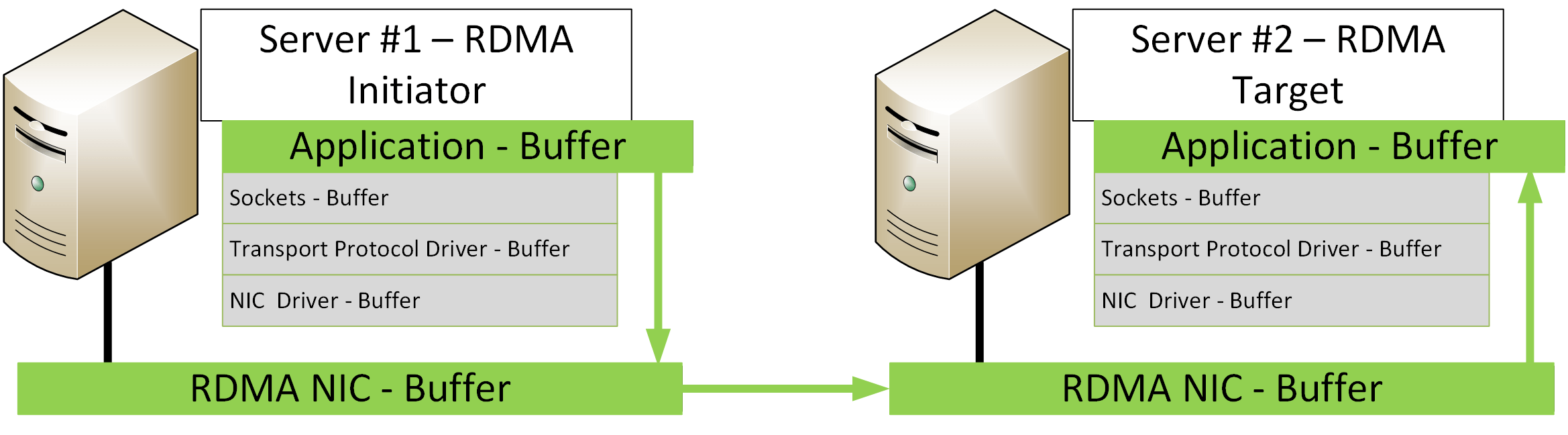

Let me try to explain it with the following drawing:

An RDMA-capable application running on Server #1, the initiator, can transfer its data directly into the buffer of the RDMA NIC. The RDMA NIC then transfers the data to the buffer of the RDMA NIC in Server #2, the target. From there, the data is delivered directly to the buffer of the application running on Server #2. No CPU buffer, transport protocol buffer, or driver buffer is used in between, which avoids the overhead and limitations that those layers can introduce.
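To make the difference in the data path more tangible, here is a small toy model in Python. It does no networking at all; it only counts how often the data is handed from one buffer to the next. The stage names of the conventional path are a simplified assumption based on the buffers mentioned above, not a description of any specific network stack.

```python
# Toy model only: counts buffer hand-offs, performs no real networking.

# Assumed, simplified stages of a conventional send/receive path.
CONVENTIONAL_PATH = [
    "application buffer (Server #1)",
    "transport protocol buffer (Server #1)",
    "driver buffer (Server #1)",
    "NIC buffer (Server #1)",
    "NIC buffer (Server #2)",
    "driver buffer (Server #2)",
    "transport protocol buffer (Server #2)",
    "application buffer (Server #2)",
]

# Stages of the RDMA path as described in the text.
RDMA_PATH = [
    "application buffer (Server #1)",
    "RDMA NIC buffer (Server #1)",
    "RDMA NIC buffer (Server #2)",
    "application buffer (Server #2)",
]


def hand_offs(path):
    """Number of times the data moves from one buffer to the next."""
    return len(path) - 1


def describe(name, path):
    """One-line summary of a path and its hand-off count."""
    return f"{name}: {hand_offs(path)} hand-offs via " + " -> ".join(path)


if __name__ == "__main__":
    print(describe("Conventional", CONVENTIONAL_PATH))
    print(describe("RDMA", RDMA_PATH))
```

The point of the sketch is only the shorter list: fewer intermediate buffers mean fewer copies and less work for the CPU on both servers.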

Non-Volatile Memory Express – NVMe

The second technology that is often used in conjunction with S2D is NVMe storage. The easiest way to describe an NVMe drive is as an SSD connected directly to the PCIe bus of your server.

A more detailed explanation is given by Wikipedia.

NVM Express (NVMe) or Non-Volatile Memory Host Controller Interface Specification (NVMHCI) is a logical device interface specification for accessing non-volatile storage media attached via a PCI Express (PCIe) bus. The acronym, NVM, stands for non-volatile memory, which is commonly flash memory that comes in the form of solid-state drives (SSDs). NVM Express, as a logical device interface, has been designed from the ground up to capitalize on the low latency and internal parallelism of flash-based storage devices, mirroring the parallelism of contemporary CPUs, platforms and applications.

Source: https://en.wikipedia.org/wiki/NVM_Express

Such NVMe drives are used in S2D as cache devices to boost IOPS and storage performance. There is a very good video by Elden Christensen on Channel 9 in which he explains storage in S2D in depth.

Storage Tiering

With S2D you don’t follow the common concept of storage tiering that you may know from storage vendors like Dell EMC, NetApp or IBM. That concept is explained very well here: https://en.wikipedia.org/wiki/Automated_tiered_storage

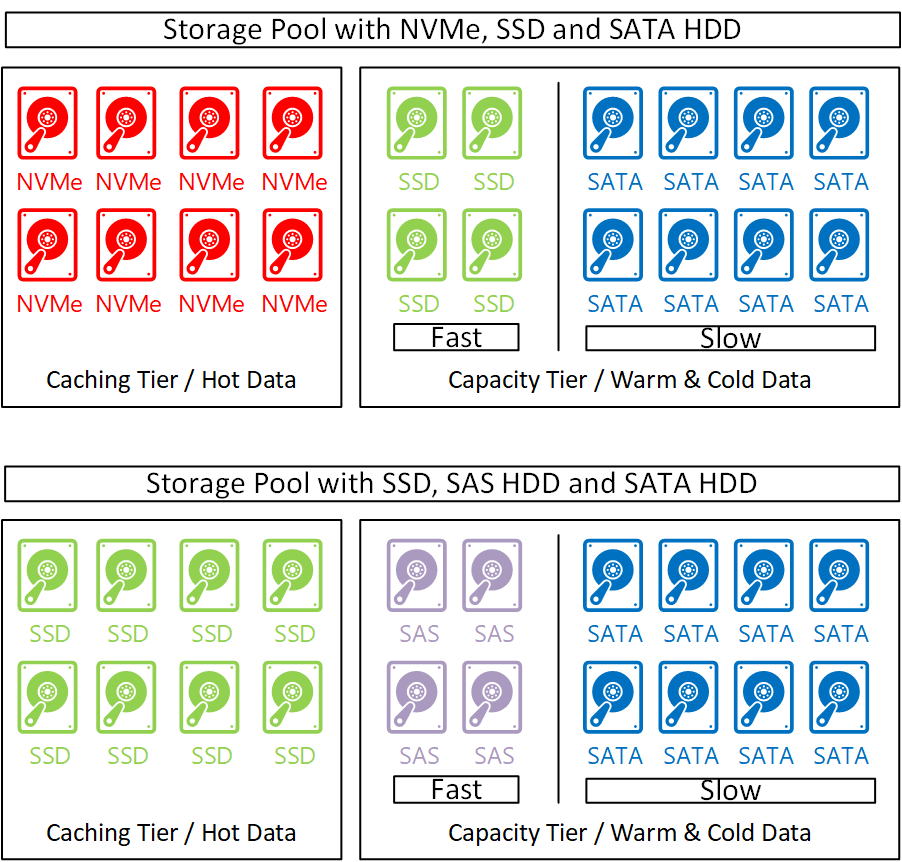

With S2D you only have two storage tiers: the Caching Tier, which is built from fast NVMe drives or SSDs (this is where your hot data is located), and the Capacity Tier, which is built from SSDs and/or HDDs and stores cold data. Depending on the number and type of disks, the Capacity Tier can also have a kind of sub-tier, a fast Capacity Tier for your “warm” data. This is only possible when you use NVMe, SSD, and HDD together, or SSDs for caching and HDDs with different RPM speeds, within the same storage pool.
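The split into the two tiers can be sketched in a few lines of Python. This is only an illustration under the assumption that the fastest media type present in a pool becomes the Caching Tier and everything else forms the Capacity Tier. The function name `split_tiers` and the drive names are hypothetical and not part of any S2D API.

```python
# Media types ordered from fastest to slowest, as used in the text.
SPEED_ORDER = ["NVMe", "SSD", "HDD"]


def split_tiers(drives):
    """Split (name, media_type) tuples into (cache, capacity) lists.

    Assumption: with two or more media types, the fastest type present
    becomes the caching tier; all other drives form the capacity tier.
    With a single media type there is no separate caching tier.
    """
    present = [m for m in SPEED_ORDER if any(t == m for _, t in drives)]
    if len(present) < 2:
        return [], list(drives)
    fastest = present[0]
    cache = [d for d in drives if d[1] == fastest]
    capacity = [d for d in drives if d[1] != fastest]
    return cache, capacity


if __name__ == "__main__":
    # Hypothetical drive inventory of a single server.
    pool = [
        ("disk0", "NVMe"), ("disk1", "NVMe"),
        ("disk2", "SSD"), ("disk3", "SSD"),
        ("disk4", "HDD"), ("disk5", "HDD"),
    ]
    cache, capacity = split_tiers(pool)
    print("Caching Tier: ", [name for name, _ in cache])
    print("Capacity Tier:", [name for name, _ in capacity])
```

With NVMe, SSD, and HDD in the same pool, the sketch puts the NVMe drives into the Caching Tier, while SSDs and HDDs together form the Capacity Tier, with the SSDs acting as the faster part for “warm” data.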

The picture above shows an example of such a storage pool layout.

Data Deduplication

If you’ve used storage systems for any length of time, you will also know about data deduplication. Deduplication is a very common technology whose goal is to reduce the capacity that stored data needs on a physical device by removing duplicate data blocks.
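The basic idea of removing duplicate blocks can be shown with a short Python sketch. It is a deliberately simplified model that assumes fixed-size blocks and SHA-256 hashes to detect duplicates; real products typically chunk and index data differently. All function names here are hypothetical.

```python
import hashlib


def deduplicate(data: bytes, block_size: int = 4096):
    """Fixed-size block deduplication (simplified model).

    Returns (store, refs): store maps a block hash to the block content,
    refs is the ordered list of hashes needed to rebuild the data.
    """
    store = {}
    refs = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        store.setdefault(digest, block)  # keep each unique block only once
        refs.append(digest)
    return store, refs


def rebuild(store, refs) -> bytes:
    """Reassemble the original data from the block store and references."""
    return b"".join(store[d] for d in refs)


def savings(data: bytes, store) -> float:
    """Fraction of capacity saved by storing only the unique blocks."""
    stored = sum(len(block) for block in store.values())
    return 1 - stored / len(data)


if __name__ == "__main__":
    # Four blocks of sample data, but only two distinct block contents.
    data = b"A" * 4096 * 2 + b"B" * 4096 * 2
    store, refs = deduplicate(data)
    assert rebuild(store, refs) == data
    print(f"{len(refs)} blocks, {len(store)} unique, "
          f"savings: {savings(data, store):.0%}")
```

In this sample, half of the blocks are duplicates, so only half of the capacity is needed. The reference list is what allows the original data to be rebuilt without loss.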

I don’t want to drill deeper into dedupe because we are focusing on S2D in this series, and there are much better articles from storage experts out there that explain these topics for all kinds of vendors. 🙂

Also of note: deduplication for S2D is not supported with Windows Server 2016, but it is on the feature list for S2D in Windows Server 2019.

Judging by the Windows Server 2019 Technical Preview so far, deduplication for S2D works as expected, and for most Hyper-V workloads you may see a deduplication rate of around 50%.

Wrap-Up

How about you? Have you used any of the technologies listed here with S2D or otherwise? Let us know in the comments section below!

2 thoughts on "Storage Spaces Direct – S2D Technologies"

Just wondering what happened to the rest of this series??

I’m still writing, but it was a very hard year. Lots of work, two books about Azure and a newborn son. 🙂 Cheers, Flo