Table of contents

- Introduction to Virtual CPUs

- Taking These Concepts to the Hypervisor

- What About Processor Affinity?

- How Does Thread Scheduling Work?

- What Does the Number of vCPUs Assigned to a VM Really Mean?

- But Can’t I Assign More Total vCPUs to all VMs than Physical Cores?

- What’s The Proper Ratio of vCPU to pCPU/Cores?

- What About Reserve and Weighting (Priority)?

- But What About Hyper-Threading?

- Making Sense of Everything

Did your software vendor indicate that you can virtualize their application, but only if you dedicate one or more CPU cores to it? Not clear on what happens when you assign CPUs to a virtual machine? You are far from alone.

Note: This article was originally published in February 2014. It has been fully updated to be relevant as of November 2019.

Introduction to Virtual CPUs

Like all other virtual machine “hardware”, virtual CPUs do not exist. The hypervisor uses the physical host’s real CPUs to create virtual constructs to present to virtual machines. The hypervisor controls access to the real CPUs just as it controls access to all other hardware.

Hyper-V Never Assigns Physical Processors to Specific Virtual Machines

Make sure that you understand this section before moving on. Assigning 2 vCPUs to a system does not mean that Hyper-V plucks two cores out of the physical pool and permanently marries them to your virtual machine. You cannot assign a physical core to a VM at all. So, does this mean that you just can’t meet that vendor request to dedicate a core or two? Well, not exactly. More on that toward the end.

Understanding Operating System Processor Scheduling

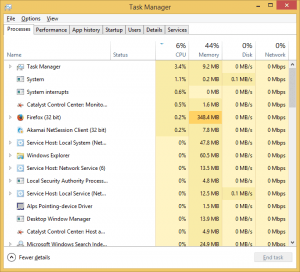

Let’s kick this off by looking at how CPUs are used in regular Windows. Here’s a shot of my Task Manager screen:

Task Manager

Nothing fancy, right? Looks familiar, doesn’t it?

Now, back when computers never, or almost never, shipped as multi-CPU multi-core boxes, we all knew that computers couldn’t really multitask. They had one CPU and one core, so there was only one possible active thread of execution. But aside from the fancy graphical updates, Task Manager then looked pretty much like Task Manager now. You had a long list of running processes, each with a metric indicating what percentage of the CPU’s time it was using.

Then, as now, each line item you see represents a process (or, new in the recent Task Manager versions, a process group). A process consists of one or more threads. A thread is nothing more than a sequence of CPU instructions (keyword: sequence).

What happens is that (in Windows, this started in 95 and NT) the operating system stops a running thread, preserves its state, and then starts another thread. After a bit of time, it repeats those operations for the next thread. We call this pre-emptive, meaning that the operating system decides when to suspend the current thread and switch to another. You can set priorities that affect how a process rates, but the OS is in charge of thread scheduling.
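To make the stop, save, and switch cycle concrete, here is a minimal sketch of pre-emptive round-robin scheduling on a single core. It is an illustration only: the function name, the thread names, and the fixed time slice are assumptions, and the real Windows scheduler also weighs priorities and many other factors.

```python
from collections import deque


def round_robin(work, time_slice):
    """Simulate pre-emptive round-robin scheduling on one core.

    work maps a (hypothetical) thread name to the time units it still needs.
    Returns the sequence of (thread, units_run) turns on the core.
    """
    queue = deque(work.items())
    timeline = []
    while queue:
        name, remaining = queue.popleft()
        ran = min(time_slice, remaining)
        timeline.append((name, ran))
        if remaining > ran:
            # Pre-empted: the scheduler saves the thread's state and
            # sends it to the back of the queue to wait for another turn.
            queue.append((name, remaining - ran))
    return timeline


timeline = round_robin({"A": 3, "B": 1, "C": 2}, time_slice=1)
print(timeline)
```

No thread decides when it gives up the core; the loop (standing in for the operating system) takes it away after each slice.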

Today, almost all computers have multiple cores, so Windows can truly multi-task.

Taking These Concepts to the Hypervisor

Because of its role as a thread manager, Windows can be called a “supervisor” (very old terminology that you really never see anymore): a system that manages processes that are made up of threads. Hyper-V is a hypervisor: a system that manages supervisors that manage processes that are made up of threads.

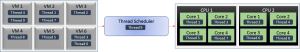

Task Manager doesn’t work the same way for Hyper-V, but the same thing is going on. There is a list of partitions, and inside those partitions are processes and threads. The thread scheduler works pretty much the same way, something like this:

Hypervisor Thread Scheduling

Of course, a real system will always have more than nine threads running. The thread scheduler will place them all into a queue.

What About Processor Affinity?

You probably know that you can affinitize threads in Windows so that they always run on a particular core or set of cores. You cannot do that in Hyper-V. Doing so would have questionable value anyway; dedicating a thread to a core is not the same thing as dedicating a core to a thread, which is what many people really want to try to do. You can’t prevent a core from running other threads in the Windows world or the Hyper-V world.

How Does Thread Scheduling Work?

The simplest answer is that Hyper-V makes the decision at the hypervisor level. It doesn’t really let the guests have any input. Guest operating systems schedule the threads from the processes that they own. When they choose a thread to run, they send it to a virtual CPU. Hyper-V takes it from there.

The image that I presented above is necessarily an oversimplification, as it’s not simple first-in-first-out. NUMA plays a role, for instance. Really understanding this topic requires a fairly deep dive into some complex ideas. Few administrators require that level of depth, and exploring it here would take this article far afield.

The first thing that matters: affinity aside, you never know where any given thread will execute. A thread that was paused to yield CPU time to another thread may very well be assigned to another core when it is resumed. Did you ever wonder why an application consumes right at 50% of a dual-core system and each core looks like it’s running at 50% usage? That behavior indicates a single-threaded application. Each time the scheduler executes it, it consumes 100% of the core that it lands on. The next time it runs, it stays on the same core or goes to the other core. Whichever core the scheduler assigns it to, it consumes 100%. When Task Manager aggregates its performance for display, that’s an even 50% utilization — the app uses 100% of 50% of the system’s capacity. Since the core not running the app remains mostly idle while the other core tops out, they cumulatively amount to 50% utilization for the measured time period. With the capabilities of newer versions of Task Manager, you can now instruct it to show the separate cores individually, which makes this behavior far more apparent.
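The 50% figure is easy to reproduce with a toy simulation. The sketch below assumes a scheduler that drops one always-busy thread onto a randomly chosen core for each time slice; the random placement and the function name are assumptions for illustration, not a description of how Windows or Hyper-V actually picks a core.

```python
import random


def simulate_single_thread(cores=2, slices=1000, seed=0):
    """Place one always-busy thread on a randomly chosen core each slice."""
    rng = random.Random(seed)
    busy = [0] * cores
    for _ in range(slices):
        # The thread fully occupies whichever core it lands on this slice.
        busy[rng.randrange(cores)] += 1
    per_core = [100 * b / slices for b in busy]
    overall = 100 * sum(busy) / (cores * slices)
    return per_core, overall


per_core, overall = simulate_single_thread()
print(per_core, overall)
```

Each core ends up busy for roughly half of the slices, and the aggregate works out to exactly 50% of the system, because only one core can be running the thread in any given slice.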

Now we can move on to a look at the number of vCPUs assigned to a system and priority.

What Does the Number of vCPUs Assigned to a VM Really Mean?

You should first notice that you can’t assign more vCPUs to a virtual machine than you have logical processors in your host.

Invalid CPU Count

So, a virtual machine’s vCPU count means this: the maximum number of threads that the VM can run at any given moment. I can’t set the virtual machine from the screenshot to have more than two vCPUs because the host only has two logical processors. Therefore, there is nowhere for a third thread to be scheduled. But, if I had a 24-core system and left this VM at 2 vCPUs, then it would only ever send a maximum of two threads to Hyper-V for scheduling. The virtual machine’s thread scheduler (the supervisor) will keep its other threads in a queue, waiting for their turn.

But Can’t I Assign More Total vCPUs to all VMs than Physical Cores?

Yes, the total number of vCPUs across all virtual machines can exceed the number of physical cores in the host. It’s no different than the fact that I’ve got 40+ processes “running” on my dual-core laptop right now. I can only run two threads at a time, but I will always have far more than two threads scheduled. Windows has been doing this for a very long time now, and Windows is so good at it (usually) that most people never see a need to think through what’s going on. Your VMs (supervisors) will bubble up threads to run and Hyper-V (hypervisor) will schedule them the way (mostly) that Windows has been scheduling them ever since it outgrew cooperative scheduling in Windows 3.x.

What’s The Proper Ratio of vCPU to pCPU/Cores?

This is the question that’s on everyone’s mind. I’ll tell you straight: in the generic sense, this question has no answer.

Sure, way back when, people said 1:1. Some people still say that today. And you know, you can do it. It’s wasteful, but you can do it. I could run my current desktop configuration on a quad 16 core server and I’d never get any contention. But, I probably wouldn’t see much performance difference. Why? Because almost all my threads sit idle almost all the time. If something needs 0% CPU time, what does giving it its own core do? Nothing, that’s what.

Later, the answer was upgraded to 8 vCPUs per 1 physical core. OK, sure, good.

Then it became 12.

And then the recommendations went away.

They went away because no one really has any idea. The scheduler will evenly distribute threads across the available cores. So then, the number of physical CPUs needed doesn’t depend on how many virtual CPUs there are. It depends entirely on what the operating threads need. And, even if you’ve got a bunch of heavy threads going, that doesn’t mean their systems will die as they get pre-empted by other heavy threads. The necessary vCPU/pCPU ratio depends entirely on the CPU load profile and your tolerance for latency. Multiple heavy loads require a low ratio. A few heavy loads work well with a medium ratio. Light loads can run on a high ratio system.

I’m going to let you in on a dirty little secret about CPUs: Every single time a thread runs, no matter what it is, it drives the CPU at 100% (power-throttling changes the clock speed, not workload saturation). The CPU is a binary device; it’s either processing or it isn’t. When your performance metric tools show you that 100% or 20% or 50% or whatever number, they calculate it from a time measurement. If you see 100%, that means that the CPU was processing during the entire measured span of time. 20% means it was running a process 1/5th of the time and 4/5ths of the time it was idle. This means that a single thread doesn’t consume 100% of the CPU, because Windows/Hyper-V will pre-empt it when it wants to run another thread. You can have multiple “100%” CPU threads running on the same system. Even so, a system can only act responsively when it has some idle time, meaning that most threads will simply let their time slice go by. That allows other threads to access cores more quickly. When you have multiple threads always queuing for active CPU time, the overall system becomes less responsive because threads must wait. Using additional cores will address this concern as it spreads the workload out.
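A rough way to see why more cores help: under an idealized round-robin model (my assumption, not how Hyper-V really schedules), a round in which every always-ready thread gets one slice takes `ready_threads * slice / cores`, and each thread spends all of that except its own slice waiting. The function name and the 10 ms slice are hypothetical.

```python
def wait_between_turns(ready_threads, cores, slice_ms):
    """Estimate how long an always-ready thread waits between its turns.

    Idealized model: equal time slices, strict round-robin, no priorities.
    """
    if ready_threads <= cores:
        # Every thread can have its own core, so nobody has to queue.
        return 0.0
    return (ready_threads / cores - 1) * slice_ms


print(wait_between_turns(8, 2, 10))  # 8 busy threads on 2 cores
print(wait_between_turns(8, 4, 10))  # same load on 4 cores
print(wait_between_turns(2, 4, 10))  # fewer busy threads than cores
```

In this model, doubling the cores from two to four cuts the wait for eight busy threads from 30 ms to 10 ms, which is the "spreads the workload out" effect in numbers.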

The upshot: if you want to know how many physical cores you need, then you need to know the performance profile of your actual workload. If you don’t know, then start from the earlier 8:1 or 12:1 recommendations.
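If you do fall back on a ratio, the arithmetic is just the sum of all assigned vCPUs divided by the physical cores in the host. A small sketch, with a hypothetical function name and a made-up host of ten 2-vCPU VMs on four cores:

```python
def vcpu_to_core_ratio(vcpus_per_vm, physical_cores):
    """Return total assigned vCPUs per physical core (8.0 means 8:1)."""
    if physical_cores <= 0:
        raise ValueError("physical_cores must be positive")
    return sum(vcpus_per_vm) / physical_cores


# Hypothetical host: ten VMs with 2 vCPUs each on a 4-core machine.
ratio = vcpu_to_core_ratio([2] * 10, physical_cores=4)
print(f"{ratio}:1")
```

That host sits at 5:1, under either starting point. Treat the number as a sanity check only; the load profile still decides whether it is actually comfortable.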

What About Reserve and Weighting (Priority)?

I don’t recommend that you tinker with CPU settings unless you have a CPU contention problem to solve. Let the thread scheduler do its job. Just like setting CPU priorities on threads in Windows can cause more problems than they solve, fiddling with hypervisor vCPU settings can make everything worse.

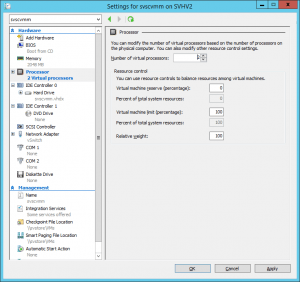

Let’s look at the config screen:

vCPU Settings

The first group of boxes is the reserve. The first box represents the percentage of its allowed number of vCPUs to set aside. Its actual meaning depends on the number of vCPUs assigned to the VM. The second box, the grayed-out one, shows the total percentage of host resources that Hyper-V will set aside for this VM. In this case, I have a 2 vCPU system on a dual-core host, so the two boxes will be the same. If I set 10 percent reserve, that’s 10 percent of the total physical resources. If I drop the allocation down to 1 vCPU, then 10 percent reserve becomes 5 percent physical. The second box will be auto-calculated as you adjust the first box.
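The relationship between the two boxes, as described above, reduces to one line of arithmetic. This sketch uses a hypothetical function name and simply reproduces the dual-core examples from the paragraph; it is not a call into any Hyper-V API.

```python
def host_reserve_percent(vm_reserve_percent, vcpus, logical_processors):
    """Convert the per-VM reserve (first box) to host share (second box)."""
    return vm_reserve_percent * vcpus / logical_processors


# 2 vCPUs on a dual-core host: both boxes show the same value.
print(host_reserve_percent(10, vcpus=2, logical_processors=2))
# Drop to 1 vCPU and the same 10% reserve is only 5% of the host.
print(host_reserve_percent(10, vcpus=1, logical_processors=2))
```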

The reserve is a hard minimum… sort of. If the total of all reserve settings of all virtual machines on a given host exceeds 100%, then at least one virtual machine won’t start. But, if a VM’s reserve is 0%, then it doesn’t count toward the 100% at all (seems pretty obvious, but you never know). But, if a VM with a 20% reserve is sitting idle, then other processes are allowed to use up to 100% of the available processor power… until such time as the VM with the reserve starts up. Then, once the CPU capacity is available, the reserved VM will be able to dominate up to 20% of the total computing power. Because time slices are so short, it’s effectively like it always has 20% available, but it does have to wait like everyone else.

So, that vendor that wants a dedicated CPU? If you really want to honor their wishes, this is how you do it. You enter whatever number in the top box that makes the second box show the equivalent processor power of however many pCPUs/cores the vendor thinks they need. If they want one whole CPU and you have a quad-core host, then make the second box show 25%. Do you really have to? Well, I don’t know. Their software probably doesn’t need that kind of power, but if they can kick you off support for not listening to them, well… don’t get me in the middle of that. The real reason virtualization densities never hit what the hypervisor manufacturers say they can do is because of software vendors’ arbitrary rules, but that’s a rant for another day.
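Working backward from the vendor's request is the same arithmetic in reverse. The sketch below assumes a host without Hyper-Threading (so logical processors equal cores) and uses a hypothetical function name; it returns the value to type in the top box and the value the second box should then display.

```python
def reserve_for_cores(cores_wanted, vcpus, logical_processors):
    """Return (top box %, second box %) to reserve a number of cores' worth of CPU."""
    if cores_wanted > vcpus:
        # A VM cannot reserve more than 100% of its own vCPUs.
        raise ValueError("assign at least as many vCPUs as cores to reserve")
    vm_reserve = 100 * cores_wanted / vcpus
    host_share = 100 * cores_wanted / logical_processors
    return vm_reserve, host_share


# Vendor wants one whole core; the VM has 2 vCPUs on a quad-core host.
print(reserve_for_cores(1, vcpus=2, logical_processors=4))
```

For that example, a 50% reserve in the top box makes the second box show 25%, matching the quad-core case above.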

The next two boxes are the limit. Now that you understand the reserve, you can understand the limit. It’s a resource cap. It keeps a greedy VM’s hands out of the cookie jar. The two boxes work together in the same way as the reserve boxes.

The final box is the priority weight. As indicated, this is relative. Every VM set to 100 (the default) has the same pull with the scheduler, but they’re all beneath all the VMs that have 200 and above all the VMs that have 50, so on and so forth. If you’re going to tinker, weight is safer than fiddling with reserves because you can’t ever prevent a VM from starting by changing relative weights. What the weight means is that when a bunch of VMs present threads to the hypervisor thread scheduler at once, the higher-weighted VMs go first.

But What About Hyper-Threading?

Hyper-Threading allows a single core to operate two threads at once — sort of. The core can only actively run one of the threads at a time, but if that thread stalls while waiting for an external resource, then the core operates the other thread. You can read a more detailed explanation below in the comments section, from contributor Jordan. AMD has recently added a similar technology.

To kill one major misconception: Hyper-Threading does not double the core’s performance ability. Synthetic benchmarks show a high-water mark of a 25% improvement. More realistic measurements show closer to a 10% boost. An 8-core Hyper-Threaded system does not perform as well as a 16-core non-Hyper-Threaded system. It might perform almost as well as a 9-core system.

With the so-called “classic” scheduler, Hyper-V places threads on the next available core as described above. With the core scheduler, introduced in Hyper-V 2016, Hyper-V now prevents threads owned by different virtual machines from running side-by-side on the same core. It will, however, continue to pre-empt one virtual machine’s threads in favor of another’s. We have an article that deals with the core scheduler.

Making Sense of Everything

I know this is a lot of information. Most people come here wanting to know how many vCPUs to assign to a VM or how many total vCPUs to run on a single system.

Personally, I assign 2 vCPUs to every VM to start. That gives it at least two places to run threads, which gives it responsiveness. On a dual-processor system, it also ensures that the VM automatically has a presence on both NUMA nodes. I do not assign more vCPU to a VM until I know that it needs it (or an application vendor demands it).

As for the ratio of vCPU to pCPU, that works mostly the same way. There is no formula or magic knowledge that you can simply apply. If you plan to virtualize existing workloads, then measure their current CPU utilization and tally it up; that will tell you what you need to know. Microsoft’s Assessment and Planning Toolkit might help you. Otherwise, you simply add resources and monitor usage. If your hardware cannot handle your workload, then you need to scale out.

361 thoughts on "Hyper-V Virtual CPUs Explained"

Supervisor is actually a good term. With more control comes more responsibility.

I also tend to leave my processor settings alone, aside from assigning vCPUs.

I tend to believe that if it is necessary to tweak these settings manually, there are some other problems piling up, like a way overprovisioned server… or:

Numbers of vCPUs can matter a lot when dealing with per-core licenses in the guest system. Can be mitigated a tad by assigning a reserve, but I don’t know how much it actually helps (if any).

Thanks for the well written explanations Eric. It all makes perfect sense. However there has been one thing that has always puzzled me about the relationship between pCPUs and vCPUs.

I currently have 3 Hyper-V Hosts in production and one in my home lab. I don’t usually mess with the production Hosts unless I need to. “If it ain’t broke, don’t break it.”

So anyway, I’ll set up my question now. I’ve noticed this on all 4 Host servers, but I’ll use my lab server as my example since this is the one I “play” with the most. The hardware is a single Dual Core Xeon without Hyper-Threading. So I’m working with 2 physical cores here that also show up in Task Manager on the Host as 2 CPU cores (obviously). I generally have 2 VMs running simultaneously on the Host, each configured with 2 vCPUs that show up in their respective Task Manager as 2 CPU cores.

What puzzles me is the CPU usage reported by the respective Task Manager instances in each of the VMs as well as the Host. What I mean by this is that I can load down both of the VMs so that Task Manager shows both their vCPU cores buried at 90-100% usage. Yet when I look at Task Manager on the Host the pCPU cores are barely yawning, at say 10-15% usage.

This just doesn’t seem very efficient if these numbers are at all representative of actual pCPU usage.

Remember that the management operating system is actually a virtual machine. It’s using vCPUs the same as the guests, so it has no idea what they’re doing.

I’m not sure how much I trust Hyper-V Manager’s CPU meter, but it will at least be able to see into the guests. You can also find guest CPU counters in Performance Manager.

Okay wait a second here. You’re saying that if I install (in the case of my lab environment) Windows Server 2008 R2 Enterprise as the physical host on my server, then I install the Hyper-V Role, that my physical installation suddenly becomes a virtual machine?

Yes, that’s exactly what happens. That checkbox does quite a bit of work.

Hyper-V is a type-1 hypervisor because it provides direct hardware access to the underlying VMs. It doesn’t virtualize the OS on the physical machine.

The method that a type 1 hypervisor utilizes to provide direct hardware access is by existing between that hardware and all of the guest operating systems, including the management operating system. Therefore, the management operating system is virtualized. If it were not, then it would be a type 2 hypervisor.

One of my production servers is Hyper-V Server 2008 R2 as the host OS. That is actually running as a VM as well then?

It is. Hyper-V is a type 1 hypervisor, always.

Thank you Eric.

I’ve been running Hyper-V since a month or 2 after its initial release. I don’t have a huge organization and as I mentioned before, other than tinkering with my “lab” setup, if it ain’t broke, I try not to break it. So I realize there is probably a lot about it that I don’t know, because I haven’t needed to know. Yet.

This definitely is one of the things that I was never aware of before now.

Randy

What adds to the confusion is that on my production servers, the host OS displays all 16 cores as active in Task Manager, yet I can only assign 4 cores to any VM I create. Interesting behavior if the host OS is also in fact just a VM.

The parent partition doesn’t follow the same rules as the guests at a lot of levels, especially in terms of hardware. In fact, if you were on 2012 and went the other way, with more than 64 physical cores, you wouldn’t be able to see them all in the host OS.

Very nice article Eric!

Unfortunately I’ve never had hardware with anything close to > 64 cores. Perhaps one day 😉

Very nice article, thx a lot.

Can you please explain the 8:1 or 12:1 rule?

I still have no clue what to assign my VMs – i mostly give 2 vCPUs if the system does more than AD, DNS – something like SQL, IIS…

Thx!

8:1 means that when you add up all the vCPUs for all the virtual machines on your host, that the sum does not outnumber the physical cores by more than an 8 to 1 ratio. Extrapolate for 12:1, which was a recommendation for more recent guests (2k8r2 and later, I think). But again, that’s just what it means. The numbers themselves were more or less invented, to put it crudely.

If you’ve been assigning 2 per VM and that’s working for you, then there is no reason to change. You’re doing exactly what most people I know of do. When I assign more than 2 vCPU to start, it’s because a software vendor says I have to for their software or because a sizing tool indicated more as a necessity.

Really nice, clear, well explained article of what is clearly a complex and misunderstood topic (it certainly was by me until i read this)

thanks

Great article. Thank you. Assuming we are using Hyper-V so for a single thread app running on a VM, adding more vCpu isn’t really going to improve performance, correct? What or how do you recommend improving performance for single thread app?

That’s right, additional CPUs won’t have much impact on a single-threaded app. I always use a minimum of 2 vCPUs, however, to give breathing room to OS and supporting processes.

Improving performance will depend on the app. A lot of single-threaded apps have a “sweet spot” between too little and too much memory. If the app was built using a modern framework such as .Net, you probably won’t have to worry about the “too much”. After that, it’s just like working on a physical system. Use Performance Monitor or other tools to track what resources are peaking and address them.

The relationship between physical cores and virtual cores and Hyper-V (or even in the VMWare world) always confused the daylights out of me.

So I have a server with an Intel Xeon E5-2400 CPU, with hyperthreading enabled (something I thought was required for Hyper-V). In the Host OS (Windows Server 2012 R2), the device manager shows 12 CPUs.

I want to run a VM which is the Terminal Server with Remote Desktop Services (RDS) where users can connect and do work (Office 2013 will be installed on this as well).

How many vCPUs should I assign (I can assign 12 to it with 1 NUMA node)? Also, what is the relationship between physical/virtual CPUs and NUMA Nodes?

Personally, I have a harder time understanding VMware’s model than Hyper-V’s.

If this is the only VM you’re going to run, give it all 12. Terminal Server can put a big strain on virtualization, so you need to expect it to want as much as you can give it. If you’re going to be sharing the hardware with other VMs, I would still aim high, probably 8 vCPUs or more.

Hyper-V is NUMA-aware, and will pass that down to guests that are also NUMA-aware unless you disable it. The relationship there is actually fairly straightforward. Hyper-V respects NUMA boundaries. Threads on a NUMA node will be kept with memory in that NUMA node to the best of the system’s ability, just as it would in a non-virtualized environment. You can use the option to prevent NUMA spanning which will force the issue, albeit with the possible side effect of guests with large memory requirements not running properly. I did a fair bit of testing on that, though, and had a hard time really getting things to truly break.

On a dual e5-2650 8 pcores each total 16 pcores with hyperthreading turned on so device manager shows 32 cpu. This machine runs 2012 r2 hyper v. there are two guests OS both 2012 r2 one DC and the other is the main terminal server (RDP) which runs about 65 sessions.

The main apps are SAP business one, MS Office and web browsers.

Would you recommend leaving HyperThreading on or turning it off.

Don’t turn off Hyperthreading unless you are running an application whose manufacturer has specifically said that Hyperthreading should be off when the application is virtualized or unless you have direct evidence that Hyperthreading is causing problems. Lync is an example.

I don’t know about SAP, but none of the other items you mentioned would require you to turn off Hyperthreading.

I have a server that is running so slow it takes 3 hours to load 18 windows updates, then 24 hours to reboot. You can see the registry entries creep along instead of fly on normal machines. I have moved the 2008r2 guests from a 2008r2 host to a 2012 host machine. If the registry updates are slow is that a iops problem or cpu – 3 guest partitions, includes one DB and two application partitions, total 15 users for a library management system (not much activity) 8gb for db, 6gb for other guests out of 32gb total. one Raid 10 – 4 of sas 10k, drives reserve 4 cpu for db, 2 for others

Thoughts?

Usually I/O or memory if it’s a resource contention problem. That sounds like something else entirely may be going on. You’d have to run performance metrics on the host and guests during the update to see if anything is out of order.

Hi Eric Siron,

Your article explains it by far the best, I think. But I’m still a little confused. Most people talk about 2 or 4 VMs on 1 physical host. But what about running 30 different VMs on 2 host servers with 20 cores each?

We have 2 host servers, each with 2 Intel v2 series processors, which in our case gives 20 cores and 40 logical processors per host according to Task Manager. That makes a total of 40 cores and 80 logical processors.

The VMs range from AD, DNS, Exchange, and SCOM to app and web servers. I’ve tried 1, 2, 4, 8 and 16 vCPUs and it seems the more the better. The physical host still seems to sleep most of the time, and CPU time on the host doesn’t go over 10%.

I’m considering going to 20 and maybe also the maximum of 40 vCPUs on some VMs. But I’m still running about 30 VMs in total, so what if it’s 30 VMs x 20 vCPUs = 600 vCPUs in total? What will that do? Is it overkill and asking for trouble? The hardware supplier says no more vCPUs in total than the actual logical processors, so no more than 80 in total on 2 hosts.

Hope you can take away last bit of confusion for me.

Thanks! Remco

Hi Remco,

There’s an upper limit on the usefulness of adding virtual CPUs to any given guest and a limit to how thinly the physical CPUs can be spread, but finding those points is usually a matter of trial and error. I suspect that you’re well beyond the point of diminishing returns. But, what you’re seeing is the results of efficient thread distribution.

What you’ve done is overkill, but whether or not it’s troublesome is entirely dependent upon usage. vCPUs are resource objects that must be managed and tracked by the hypervisor, so having too many could hurt performance if there’s contention. If all VMs have access to all physical cores and a few of them decide to run very heavy multi-threaded operations, they could cause responsiveness issues in other guests. But, in fair weather conditions, there will be no problems at all.

I suspect that you have far more physical CPU than you really need and that’s why you’re seeing the results that you’re seeing. If your hardware supplier is saying that you shouldn’t have more vCPU than logical processors, then your hardware supplier does not understand virtualization, or, really, computing in general.

It doesn’t look like you have anything to really worry about. If you have the time, I’d find out each guest’s “sweet spot” where adding more vCPU has no truly meaningful impact and set them to that. Where that line is varies from person to person. In generic terms, I believe that a 20% performance boost is significant but a 5% increase is not. I would be hesitant to give all virtual machines access to all logical processors in any case.

The utilization is said to be calculated when vm:s are running, but I have a problem where also vm:s that are turned off is counted for. We have a host that we have 100 vm:s placed on, but only around 20 are running at any given time. Still I cant place more machines on it since Scvmm2012 reports this:

I don’t work with VMM any more often than I absolutely have to, not least because of nanny-nag things like this. I thought, though, that you could just proceed even when VMM doesn’t like something? Are you trying a migration or a new VM creation?

Hello Eric,

Your article is very helpful, thanks!

I am setting up a single VM on a low-end server running Windows Server 2012 R2 with the Hyper-V role. For the time being, it will be my only VM. The machine is a quad core and I believe I should give it 4 vCPUs if it is the only VM. Is this correct?

Also, I am seeing a big performance difference when I transfer files to the host OS vs. the guest OS. Essentially, transferring files from the guest OS is 10-20MB/s whereas transferring files from the host OS is 45-70MB/s.

Is it normal for a VM’s performance to be much less than the Host OS?

Is it because of the Virtual NIC or the virtualized environment in general?

For only one VM, I would be inclined to give it the maximum number of vCPUs. If you add more VMs later, you might want to revisit this practice.

There are a lot of reasons why VM network rates might be slower than the host. Usually it’s drivers, but you’ll want to spend some time working with the offloading features of the network cards in the host. It’s mostly trial and error, unfortunately.

I will definitely keep it at all 4 for now. I appreciate you addressing every point in my last inquiry. You must be a valuable asset to Altaro.

If we assume that drivers and the settings are all optimal for the system, should there be a big difference in transfer speed when comparing the host os and the virtual machine?

I see 40-70MB/s for the host OS and 10-20MB/s for the VM! (Read performance)

Perhaps I should troubleshoot by adding a USB storage drive or a second drive and see if there is a difference with that.

I am starting to think it might be because I am running on RAID 1 with 2 SATA drives @7200RPM and no cache module.

However, it doesn’t really explain the performance difference between Host OS and Guest OS…

You can probably tell, I am new to Hyper-V.

When everything is in order, the guests can transfer almost as fast as the host. Use a network performance tool rather than file copy to be certain.

Hello Eric!

The article is pretty interesting.

Our company encountered Hyper-V for the first time. The data centre provided some resources and a GUI to create VMs. I created a VM with 8 CPUs (detected as Xeons 2.xx GHz) and 24 GB RAM. I deployed Windows 2008 into it and, as a test job, ran geospatial software that computes some sophisticated parameters of the Earth’s surface. The process that makes iterations eats about 15% of CPU and 300MB of RAM. I know that if I run the same software on a physical machine it eats at least twice as much CPU: 30%-55%. How can I make this software use the maximum available resources? Or are there some limitations set up by the data centre? I don’t see any settings that I could play with regarding CPU/performance limitations. The HDD subsystem is pretty fast.

Thanks.

Any time the CPU is not at 100%, the bottleneck is elsewhere. Disk is probably it. Even if it’s fast, it will be throttled to prevent any one VM from monopolizing the disk subsystem. My guess is that it’s there.

We have 3 Hyper-V hosts in a cluster. We have around 8-10 VMs running on each server. Some are assigned 2 vCPUs and some 4 vCPUs. The max vCPU allowed is 32. The total vCPUs assigned to the VMs exceed 32. Is this normal, or am I assigning more vCPUs than the total number allowed?

Oversubscription of CPU is normal.

Dear Eric,

Good day to you…

I have a deployment to make in Hyper-V 2012 R2 and, as a forecast, I am supposed to show my management the ratio of VMs to a single host, considering the vRAM and the vCPUs. Could you please help me with some standard capacity planners for this, or tell me what the recommended ratio would be? This setup will run Domino mail servers on top of it.

Thank you 🙂

http://aka.ms/map

Sir, can I use Hyper-V on a dual-core Intel E5700 processor? I am using Windows Ultimate edition, but it’s not showing the Hyper-V option in “Turn Windows features on or off”. What should I do to use Hyper-V on my PC?

The last Windows that came in an Ultimate edition was Windows 7. Client Hyper-V did not appear until Windows 8. You must have Professional or Enterprise edition.

Informative article!

Although it’s not the most important to the topic of the article, I just wanted to clear up some things about Hyper Threading. Hyper Threading is sort of a misnomer because it actually has nothing to do with parallel instruction execution. What you’re describing, about instructions executing in lockstep, is simply called pipelining (unrelated to Hyper Threading). Basically instructions are broken up into multiple stages, and when instruction 1 is done with stage A, instruction 1 moves into stage B and instruction 2 enters stage A. These stages are executed in parallel, but for different instructions. Of course, if instruction 1 is taking a really long time in stage B (because it’s waiting on data from main memory, for example), instruction 2 can’t continue onto stage B until instruction 1 moves out of it. This is a pipeline stall, and it’s what you alluded to.

To resolve this issue, CPU architects duplicated execution resources, creating what are called superscalar processors. The resources for each stage are duplicated so that multiple instructions can be in the same stage at the same time. The CPU then dispatches the instructions to the execution resources and figures out how to combine the results so that, even if instructions completed out of order, it appears that the instructions completed in the order of the instructions in the code.

But what if all currently executing instructions are waiting for a response back from memory? In the meantime, the execution resources are doing nothing while the instructions are waiting for memory. To resolve this, Intel created Hyper Threading. Hyper Threading makes a core look like two cores, so that two physical threads can be run. If all the currently executing instructions in one thread are waiting for memory (or for some other results/resources), Intel decided that the execution resources could be better spent executing more instructions from another thread.

Basically what Hyper Threading means is that if thread 1 has downtime, the core executes thread 2 in the meantime. Then when thread 1 executes again, the hope is that the results for each executing instruction in thread 1 are ready, and the core can carry on dispatching more instructions from thread 1 to the execution resources. This makes better utilization of the execution resources and can squeeze a bit more performance out of the CPU cores.

Every single commodity desktop/server CPU uses pipelining (whether or not it is Hyper Threaded) and has done so for at least the past decade or two. It’s an easy way to increase instruction throughput of the CPU without adding too much complexity to the design. Hyper Threading is a way to increase the utilization of superscalar pipelines to further increase the instruction throughput.
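The idea in the comment above can be pictured with a deliberately tiny Python model. Everything in it is an assumption made for illustration: a core with a single execution unit, threads written as strings where `X` is a cycle of computation and `M` is a cycle spent waiting on memory, and a fixed preference for the first ready thread. Real cores are far more complicated, so treat this as a sketch of the principle rather than a description of any actual CPU.

```python
def cycles_one_at_a_time(threads):
    """Run each thread to completion before starting the next one."""
    return sum(len(steps) for steps in threads)


def cycles_interleaved(threads):
    """Keep every thread loaded on the core at once (toy model).

    Each cycle, any thread waiting on memory ("M") makes progress on its
    wait, and at most one thread that wants to compute ("X") gets the
    single, hypothetical execution unit.
    """
    position = [0] * len(threads)
    cycles = 0
    while any(p < len(steps) for p, steps in zip(position, threads)):
        cycles += 1
        unit_free = True
        for i, steps in enumerate(threads):
            if position[i] >= len(steps):
                continue  # this thread has finished
            if steps[position[i]] == "M":
                position[i] += 1  # memory wait elapses in the background
            elif unit_free:
                position[i] += 1  # this thread computes during this cycle
                unit_free = False
    return cycles


stalling = ["XMMX", "XXMM"]  # threads that spend time waiting on memory
busy = ["XXXX", "XXXX"]      # threads that never wait

print(cycles_one_at_a_time(stalling), cycles_interleaved(stalling))  # 8 5
print(cycles_one_at_a_time(busy), cycles_interleaved(busy))          # 8 8
```

In this model the second thread only helps when the first one leaves the execution unit idle. Two threads that never stall gain nothing, which is consistent with the modest boost that the article attributes to Hyper-Threading.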

Intel didn’t create “Hyperthreading”; they merely implemented simultaneous multi-threading and marketed it as hyperthreading. The requirements for SMT are a superscalar architecture (i.e. more than one instruction can be dispatched and executed concurrently “per CPU core per clock” by today’s nomenclature), out-of-order execution, and register renaming. The important thing to realize here is that more than one process context can be loaded in a CPU core concurrently, and that’s why there are “logical” cores. The CPU pipeline has limited hardware resources (e.g., only one FP ALU), so the concurrently executing threads in a single core only work well when they’re not competing for these resources (called a hardware hazard). This is where out-of-order execution comes into play: the CPU can execute a future instruction early if it knows the hardware resource will be available. Enabling loading of more than one process context concurrently increases the likelihood that a suitable instruction is found for execution.

The Alpha EV8 was going to be an amazing 4-thread SMT CPU, until it was cancelled in favor of the (wait for it) Itanium. https://en.wikipedia.org/wiki/Alpha_21464

Hi Eric! Loved the article.

My first experience with Virtualization was Xenserver. I’m thinking of moving over to HyperV – Limiting factor is costs.

I have Physical Servers. One is an older 2x16core opteron. 64gb ram. The other is a new V5 2x8core Xeon.

I would be migrating these VMs, a conglomeration of Linux and Windows. I’m going to be implementing a setup where one server manages a home-grown VDI (using openthinclient) and the other server is for the servers.

Which box would you recommend for a VDI solution, and which for the servers?

And thoughts on Xenserver vs HyperV?

Thanks for your time! You make learning this stuff actually fun!

VDI is a resource hog. It will take everything you give it and not be happy. Server virtualization is much tamer. Usually.

VDI the Microsoft way is also very expensive. If cost is already an issue, VDI is probably not a good solution.

I have never used XenServer so I could not make a meaningful comparison. Technologically, XenServer and Hyper-V are more similar than ESX and Hyper-V, but there are still a great many differences.

Well, I ultimately came up with:

Datacenter on the Xeon machine. I’ll be creating a 2012r2 vm for every user (about 25) which they will RDP into from a DevonIT Thinclient running WES9

So far I have about 5 servers and 5 RDP servers set up. It’s running great. Though, each set (servers and users) has its own RAID on Samsung 850 Pro SSDs (which I discovered was KEY!). Even 10k or even 15k disks just don’t cut it. The SSDs are magic.

Again, thanks for the article. Loved it.

Also, I have read different considerations on SSD RAID 5/6 vs 10k RAID 5/6 in that SSD RAID is pointless and wears down the SSDs.

Thoughts on this?

SSDs have a much more predictable failure rate but to say that SSD RAID is pointless makes the assumption that SSDs never fail before their time, so it is a naive position to take. Any RAID type is faster than a single disk, so there’s that as well. The notion that putting SSD in a RAID would increase their wear rate is a myth that was empirically debunked years ago. It often, but not always, extends the life of the individual disks.

I would be less inclined to build a RAID 6 on SSD than on spinning disk just because the SSDs are so much less likely to fail. Other than that, all the same rules would apply.

I used RAID5 for the SSD Disks on Samsung 850 Pro 512GB

Working great! Thanks!

I’m interested to know your take on VoIP with Hyper-V. The reason I ask is that I was always under the impression that VoIP (Asterisk) doesn’t work well on a virtual server because of the virtual timer, and that this causes issues with call quality etc. because the virtual server uses a virtual CPU and doesn’t actually get down to the actual core of the server. Is this still the case? Would it be worthwhile to try VoIP in a virtual server in a production environment (after testing, of course)?

I am running a complete Lync deployment on Hyper-V. I have some Asterisk systems on VMware. Test it out, find and follow best practices, but you should be fine.

So I have an i7-6700 CPU which has 4 cores 8 threads running in my windows 10 pro desktop. I only plan to run one VM instance in my environment; which is now running Windows 10 Home. So I was wondering how many CPU’s do you think I should give the VM? Keep in mind that I will sometimes want to run decent loads on the host and VM at the same time.

Thanks.

There is no way for me to answer that for you.

What additional information do you need? I am just looking for a starting point that can be tweaked as needed. This is my first VM, so I am just throwing darts.

If you already knew what you would need to tell me so that I could answer that question, you would not have ever asked me in the first place. You cannot precisely plan CPU if you do not have a CPU requirements profile.

Start with 2. Increase as necessary.

I’m rather new to Hyper-V coming from Vmware…my question is; CPU resource control only works with Microsoft Windows guest OS’s correct? Thanks.

Ryan

No. Everything in this particular post is guest-agnostic.

Interesting article, but I am still puzzled about Hyper-V and vCPUs per host. We have several servers running Hyper-V on 2012 Datacenter edition. We host an EHR application for end users. My question is about CPU utilization. I have read the article, all the comments, and your responses. By the logic of 12:1, we should subscribe a maximum of 192 virtual processors to physical cores. Is this correct? Because we oversubscribe like crazy. Each machine has around 30 virtual machines, mostly running 2012 Datacenter guests. We have many Remote Desktop servers running at the maximum processor count.

My specific question, based on the above and your answers: does oversubscription in the RDS guests above the 192 count have diminishing returns? Theoretically we have support for up to 960 virtual processors for 30 VMs. If you oversubscribe, how high is the performance cost for the hypervisor to support all those idle threads? Obviously a balance is needed, but what is the cost of oversubscription on the hypervisor and the parent OS (which, as you point out, is itself sort of virtual)? We have a habit of oversubscribing: for example, 4-8 vCPUs per DC, 24 vCPUs for SQL servers, and the maximum vCPUs per RDS server (like you said, it is a hog, and end users like speed).

Also, regarding SSD, we are now venturing there. From your input, you actually trust SSD media over magnetic spinning disk for reliability. Is this correct?

As I said, 12:1 is something that someone made up. If higher densities are working for you, don’t worry about it. Idle thread counts are not of any particular concern. If you were to try to figure out any CPU costing involved, it would all be around context-switching. I don’t know that anyone has published numbers, but 1-3% is reasonable.

Enterprise-class SSD is more reliable than spinning disk for the equivalent number of write operations.

Thanks,

Got it, our end users are happy, but we are tweaking for Peak Time. SSD is clearly faster. We are starting to use them, and moving big clients to them. We use Veeam to backup, but we hope to never need it.

What is the default “measured span of time” for the CPU activity that you mention in the section below?

The CPU is a binary device; it’s either processing or it isn’t. The 100% or 20% or 50% or whatever number you see is completely dependent on a time measurement. If you see it at 100%, it means that the CPU was completely active across the measured span of time. 20% means it was running a process 1/5th of the time and 4/5th of the time it was idle.

As far as I know, Task Manager samples once per second.
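To make the quoted passage concrete, here is a minimal Python sketch of how a percentage falls out of a binary busy/idle signal. The function name `utilization_percent` and the idea of chopping the span into equal slices are assumptions for illustration; this is not how Task Manager is implemented.

```python
def utilization_percent(busy_flags):
    """Return CPU utilization over a measured span.

    busy_flags holds one boolean per equal-length time slice:
    True if the CPU was running a thread, False if it was idle.
    """
    if not busy_flags:
        raise ValueError("need at least one time slice")
    return 100.0 * sum(busy_flags) / len(busy_flags)


# Ten slices in which the CPU was active during two of them
span = [True, False, False, False, False, True, False, False, False, False]

print(utilization_percent(span))      # 20.0
print(utilization_percent(span[:2]))  # 50.0, same activity, shorter span
```

The second line of output is the point of the quoted section: the same activity produces a different percentage when the measured span changes.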

Thank you for this excellent article Eric! I’m really impressed you have continued to respond to comments years after publishing – many authors aren’t as dedicated, so thank you!

After reading all the comments, I’d still appreciate some clarification on this.

My HA failover cluster: 2x Xeon E5-2680v3 (48 logical processors) with 128GB RAM on each server, sharing a directly attached (16x600GB 15k SAS) PowerVault. They are running Server 2012 R2 Datacenter. Now, everything works great with the 9 VMs currently running, but I’m approaching my vCPU limit (so I thought). I looked up this article because I wondered how many cores I should leave for my host OS, but you taught me that it’s really just another VM once Hyper-V is enabled. So I guess I don’t need to worry about it, because the host will use whatever cores it needs when it needs them, even if I have 48 vCPUs assigned across the 9 VMs? But did I understand “over subscription” correctly? Meaning I could assign up to 48 vCPUs to all 9 VMs? Totaling 432 vCPUs assigned across the 9 VMs?

Thank you for your time!

“I could assign up to 48 vCPUS to all 9 VMs?” — Yes, you could, if that’s what you wanted to do.
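For anyone who wants to check the arithmetic in this exchange, a rough Python sketch follows. The helper name `oversubscription_ratio` is invented for illustration; the numbers are the ones from the question.

```python
def oversubscription_ratio(vcpus_per_vm, logical_processors):
    """Return (total vCPUs, vCPUs per logical processor) for one host."""
    total_vcpus = sum(vcpus_per_vm)
    return total_vcpus, total_vcpus / logical_processors


# 9 VMs with 48 vCPUs each on a host with 48 logical processors
total, ratio = oversubscription_ratio([48] * 9, 48)
print(f"{total} vCPUs assigned, {ratio:.0f}:1 vCPU to logical processor")
# 432 vCPUs assigned, 9:1 vCPU to logical processor
```

The ratio only describes what has been assigned, not what the guests actually use, so it is a planning figure rather than a measure of load.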

I was concerned that I may need to decrease the vCPUs on some VMs when it came time to create more. Thank you for the clarification and fast reply.

We have a software vendor that is requiring that we dedicate 4 processors to our VM instance. I have told them that we can allocate 4 processors but not dedicate 4 processors, because you cannot cherry-pick which processors will be matched up with a VM. Is my thinking correct?

That is exactly correct. Although it should hopefully give them what they want realistically.

Perfect. I now have the backing of an expert for my thought process. The program is written in .NET, so the VM should be able to handle hyper-threading? Is hyper-threading set up by default, or do you have to check a box to turn it on? Also, can you use hyper-threading on a VM that has been running for a while without it?

If it’s a .Net app, then I’m certain that setting the Reserve will be sufficient.

Hyper-threading is always used by Hyper-V if Hyper-threading is enabled in the hardware BIOS. Hyper-V will handle guest thread scheduling with Hyper-threading in mind. .Net abstracts the CPU away from the programmer almost entirely, so unless your vendor is doing something dumb like distributing binaries that have already been NGENed, the presence or lack of Hyper-threading is functionally irrelevant. You’ll get the minor speed boost if Hyper-threading is on and the app is multi-threaded and if the app truly pushes the CPU hard enough for you to notice. You’ll get baseline app performance otherwise.

Hello,

We have a virtualized Exchange 2013 DAG on a Hyper-V cluster. Although we do not have any obvious performance issues, I came across Microsoft’s best practice recommendation that suggests a vp:lp ratio of 2:1 is supported although 1:1 is recommended. Is that an exception for virtualizing applications like Exchange and SQL which are memory intensive?

Our exchange 2013 VMs are hosted on BL460 G7 blades with 20 physical cores in total.

i meant resource intensive….that was a typo in my above comment

Since you have an environment to monitor, I would monitor it and see how it’s doing. If you can’t find a problem, then leave it be.

In abstract, Exchange and SQL are two applications that could realistically push the CPU. That doesn’t mean that they will. If I were architecting an Exchange deployment for a 10 user law firm, I wouldn’t worry at all about CPU usage. If I were architecting a SQL deployment as the back-end of the tracking system for a 5,000 user logistics company, I would worry very much about CPU. As for Microsoft’s recommendations, I would not use them as anything more than an informational message.

Thanks for the update Eric.

Hello Eric! Thx for your article. It’s really great!

I have some questions. Could you help me?

1. How do I correctly calculate the ratio? Is 1:8 or 1:12 (Physical_Cores:vCPU) or (Logical_Cores:vCPU)? By Logical_Cores I mean with Hyper-Threading technology enabled.

2. We are a cloud (VPS) provider and have high oversubscription in our clusters (about 1:14 and more, pCPU:vCPU). We are facing a problem: when the ratio on a host becomes too high, the host OS slows down and freezes, latency increases on the physical NICs (we use VMQ), and so on. You wrote that after the Hyper-V role is enabled, the host OS becomes virtual, like a virtual machine. What about the priority of host OS threads in the scheduler? Do they have higher priority than other threads? I can control the weight of VM threads, but what about the host OS? Or is our problem not related to this?

3.

Windows Server 2016 Datacenter does multi-tenant really well. It’s definitely worth a look, despite the high cost of licensing and the new per-core model. I believe there are still some really good videos on MVA about multi-tenanting, taming resource hogs, and parceling out bandwidth. Of course, none of that stuff works in Standard edition; only Datacenter.

Hi Eric,

Great article, it has helped my understanding a lot.

I was wondering if you would be able either to reply to this comment or to extend your post to describe what the CPU usage in Task Manager actually means inside a guest. You have described the host and hypervisor very well, and I know the perfmon counters can tell me what physical CPU is being used, but I can’t find much information about what the CPU usage that the guest sees relates to. An awful lot of admins RDP onto the guest VM to determine CPU usage, but is it really meaningful at all?

Many thanks for your time

I wouldn’t say that the guest view is meaningless. Its primary function would still be to track individual application performance, something that the hypervisor cannot do. I do not know if it is possible to map a guest thread to the physical core that operates it, but I’m not certain if that information would be useful, either.

OK, thanks. Are you able to explain what the relation would be if we saw high utilization on an 8 vCPU guest (from inside it) on a host which has, say, 16 physical cores? Is this because of contention from other VMs running on the hypervisor or, in the case of a single VM, is it perhaps a case where we need to add more vCPUs? It is the relation between what the guest sees as CPU usage and the physical CPU that I don’t quite understand. I have read an analogy somewhere which said that it is a little like giving a VM a slice of pie: it doesn’t see or know there is more pie available and eats what it has been given. But I am not 100% sure how this relates to what the guest actually needs. Is it happy being at 90-100%, or should it be given more vCPUs? Would it even make a difference?

Also, I am very unsure how I would configure vNUMA on the virtual machine. In fact, our whole team is a little confused and unsure of what these settings do. I have read on TechNet to let the hypervisor sort it out (using hardware topology), but my colleague was instructed by MS Support to adjust it to give more NUMA nodes.

On a physical box, from what I understand, you would rather have 2 large NUMA nodes than 4 smaller ones because there is less chance that memory pages will be accessed remotely, but my colleague is under the impression we should increase the NUMA nodes, and neither of us knows who is right. The hardware topology says we should have 2 sockets and 2 NUMA nodes, but on this particular VM we have 180GB assigned and 16 vCPUs, and he made 8 NUMA nodes with 22.5GB each. The workload inside the VM is SQL.

It doesn’t help that there seems to be very little information on why we would or should change it or what any calculation should be, with TechNet telling us not to change it and MS Support telling us to make a change. Are you able to explain this function, or point me to some detailed resources?

Many thanks, appreciate your time

Activity in Guest A cannot have any bearing on what you see in Task Manager in Guest B. From one guest’s perspective, any time slices that belong to another virtual machine never happened. On an overloaded host, overly busy Guest A will cause latency in Guest B, not high utilization.

High utilization metrics within a guest mean that an application within that guest has high utilization. Hypervisor or not, nothing changes there. What you are describing to me is an application troubleshooting issue, not a hypervisor issue.

In the abstract, if the application is multi-threaded, then giving its VM more vCPUs will allow it to spread out its workload. Whether that means it drives more cores to 100% or lowers its overall utilization depends entirely on what the app is doing. If an app that does all of its work in a single thread has a bug that makes it spin (a runaway loop, for example), then it will just burn up a single core no matter how many cores are assigned. If an app that spreads its load across multiple threads has the same kind of bug, then it will just burn up all the cores assigned to it. If you’re talking SQL Server, it will spread out nicely. That doesn’t mean the queries that it operates are designed efficiently, though.
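As a rough illustration of that point, the sketch below models the overall CPU figure a guest would report when an application keeps a fixed number of threads fully busy. The function name and the simplification (each busy thread saturates exactly one vCPU and nothing else runs) are assumptions made for illustration; this is not how Windows actually computes utilization.

```python
def overall_utilization(busy_threads: int, vcpus: int) -> float:
    """Guest-wide CPU percentage when `busy_threads` threads run flat out.

    Simplifying assumption: each busy thread saturates at most one vCPU
    and nothing else in the guest consumes CPU time.
    """
    if vcpus < 1 or busy_threads < 0:
        raise ValueError("vcpus must be >= 1 and busy_threads must be >= 0")
    saturated = min(busy_threads, vcpus)  # one thread runs on one vCPU at a time
    return 100.0 * saturated / vcpus


if __name__ == "__main__":
    # One runaway thread: more vCPUs only lowers the headline percentage.
    for vcpus in (4, 8, 16):
        print(f"1 busy thread, {vcpus} vCPUs: {overall_utilization(1, vcpus)}%")
    # Sixteen runaway threads: every vCPU handed over gets pinned.
    for vcpus in (4, 8, 16):
        print(f"16 busy threads, {vcpus} vCPUs: {overall_utilization(16, vcpus)}%")
```

Under this model, the single-threaded case never gets faster with more vCPUs; only the percentage shown in Task Manager changes.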

What NUMA does is allow a process to prefer memory that is owned by the same CPU that operates its threads while still having access to memory owned by a different CPU. Its purpose is to do as much as is possible to reduce or even eliminate the latency involved with requesting memory from another CPU. In early multi-socket systems, we had “interleaved” or “symmetric multi-processing” memory layouts in which memory allocation was just round-robin between the sockets. Now we have NUMA. NUMA-aware systems will attempt to group the threads and the memory owned by a process together on the same CPU. If a process’s thread winds up on another CPU, it can still access the memory on the original CPU, but it needs to make a cross-CPU request, and that induces latency. For reference, that latency is measured in nanoseconds. Most processes aren’t going to care one way or another. Most SQL deployments aren’t even going to care.

The “best” configuration would be to have all memory owned by a single memory controller and no NUMA at all. The “next best” is to size things so that they stay within a single NUMA node. The “third best” would be to use the absolute minimum number of NUMA nodes possible. The real best is to use the hardware topology and not worry so much about it.

In the abstract, configuring a VM to have more NUMA nodes than there are physical nodes would marginally increase Live Migration portability at the expense of performance. That’s because very few applications are NUMA-aware. SQL is different. Whereas a typical application on a VM with artificially-increased NUMA nodes would wind up with increased latency because it would be forced to make cross-CPU memory requests even to local memory, SQL will read the NUMA topology and build its threads and memory allocation to match. If you increase the number of NUMA nodes to a SQL machine, it will spawn additional I/O threads. Effectively, if you are CPU-bound but have I/O to spare, reducing the number of NUMA nodes is probably going to help you. If you are I/O-bound but have CPU to spare, increasing the number of NUMA nodes is probably going to help you. If you are both CPU and I/O-bound, you need more hardware. Nine times out of ten, flipping switches in the software does not go very far toward alleviating performance problems.
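To put numbers on that trade-off, here is a small sketch that divides the VM from the question (180 GB, 16 vCPUs) evenly across a given number of virtual NUMA nodes. The names are hypothetical, and the even split is an assumption; the layout Hyper-V actually presents may differ.

```python
from typing import NamedTuple


class NodeShare(NamedTuple):
    memory_gb: float  # memory each virtual NUMA node would own
    vcpus: float      # vCPUs each virtual NUMA node would own


def vnuma_layout(memory_gb: float, vcpus: int, nodes: int) -> NodeShare:
    """Evenly split a VM's memory and vCPUs across `nodes` virtual NUMA nodes."""
    if nodes < 1:
        raise ValueError("nodes must be >= 1")
    return NodeShare(memory_gb / nodes, vcpus / nodes)


if __name__ == "__main__":
    # 2 nodes matches the hardware topology; 8 is the adjusted setting.
    for nodes in (2, 8):
        share = vnuma_layout(180, 16, nodes)
        print(f"{nodes} nodes: {share.memory_gb} GB and {share.vcpus} vCPUs per node")
```

With 8 nodes, each one gets 22.5 GB and 2 vCPUs, so a NUMA-aware application such as SQL would see eight small pools of memory and threads instead of two large ones.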

In this case, I would focus efforts on the application and SQL and not worry so much about Hyper-V. If Microsoft has done a performance analysis and thinks that more NUMA nodes would help, then I would be inclined to at least try what they’re saying.

Hi Eric,

Thanks ever so much for your reply. It appears that MS had advised one of my colleagues to do an incorrect configuration with vNUMA. Also, over time since that advice was given, the memory and vCPU for the VM have changed, but the vNUMA was ignored and got adjusted automatically (not based on the real topology).

We changed the vNUMA topology to reflect the physical topology and did some testing on this new configuration. The severe performance issues we had seem to have gone now (the actual root cause of the original extra load still needs to be determined, but that is for our internal checks).

So, in this particular case the host hardware topology is 2 NUMA nodes with 2 sockets; each socket has 8 cores plus Hyper-Threading enabled (16 logical cores per socket), and approx. 320GB RAM.

The VM HAD 16 vCPUs and approx. 280GB RAM, and the vNUMA setting was really weird: 3 sockets and 12 NUMA nodes. What it ended up being inside the VM was 4 NUMA nodes containing 2 vCPUs each (8 vCPUs so far) and the remaining 8 NUMA nodes containing 1 vCPU each (a total of 12 NUMA nodes and 16 vCPUs).

From the host perspective however the performance counters didn’t indicate any remote pages were accessed, so the 12 vNUMA were somehow aligned with the 2 NUMA on the physical system (I suspect 4 fit into NUMA 0 and 8 fit into NUMA 1).

I have since read several of your other blogs and you certainly seem like a smart guy with this sort of stuff, so I was wondering if you’d know the answer to my question below.

The only remaining puzzle here for us is the “why”. Why does artificially increasing the number of vNUMA nodes have such a negative impact on performance if the VM isn’t accessing remote memory pages on the host? The application is SQL 2012, which is supposed to be NUMA-aware, but I don’t understand what the application is trying to do when it has this configuration (at a technical/thread/CPU level).

Are you able to explain why this could be?

Many thanks

Part of the performance issue is because SQL is NUMA-aware. It will craft its threads so that they stay within the confines of a NUMA node, thereby eliminating the performance penalty of forcing those threads to access remote memory. By artificially dividing your available memory into fake NUMA nodes, you are causing SQL to increase the number of threads that it uses and also confining each of those threads to a smaller memory address space. If you have many small transactions, that division makes sense because you increase parallelism at the primary expense of available memory per transaction. There’s also a secondary expense caused by increased parallelism. Threads are a finite resource and context-switching has always been an expensive operation. To go much past this point would require some advanced knowledge of SQL Server that I do not possess, but it is a truism that if those threads are short-lived, the expense of setting them up and tearing them down can cause an overall performance drag.

So, the solution that MS proposed CAN work in some cases. Just not in yours.

Hi Eric,

Great article, it has helped my understanding a lot.

I was wondering if you would either be able to reply to this comment or extend your post to describe what the CPU usage in Task Manager actually means inside a guest. You have described the host and hypervisor very well, and I know the perfmon counters can tell me what physical CPU is being used, but I can’t find much information about what the CPU usage that the guest sees relates to. An awful lot of admins RDP onto the guest VM to determine CPU usage, but is it really meaningful at all?

Many thanks for your time

Thanks for the response, that kind of makes sense to me 🙂 I’ll have to find out more about how our SQL server is working and whether the threads are short-lived or not. Maybe it was the excessive context switching which was pushing the server just over the limit. I feel the system is already on the cusp of what it can do with the allocated vCPUs; possibly assigning some more vCPUs could help with this, from what I have read and understood so far. We have two SQL instances on the one VM, each of which is Standard edition and will only use 16 CPUs maximum. I have a feeling that if we added 4 more vCPUs, SQL would disperse across them and reduce the CPU load a bit overall but increase usage of the real CPU. Each instance should balance itself across 16 CPUs. The host at the moment averages around 50% of its physical CPU resource, so we have plenty of headroom.

I want a clarification.

I hired a VM with 16 processors, and I saw that only two processors were heavily utilised, one at 90% and another at 80%, while the remaining 14 processors were utilised at only 10-20%.

We have now increased this to 32 processors, and I still see only two processors heavily utilised, one at 70% and another at 60%, while all the other processors are utilised at only 20-30%.

How can I set it up so that tasks are distributed to every processor, so that every processor gets utilised and my application runs fast?

Please suggest.

You cannot force applications to evenly distribute their processing work.

Hi Eric,

If I have only one VM and assign only one CPU (the guest is showing 1 CPU, 1 core), is it the same as having all of the physical server’s 6 cores (12 logical processors)?

The guest is Windows Server 2012.

No.

Hello,

Thanks for the informative article. I wonder if I could ask a quick question, please.

I am testing an application that uses SQL to store data, and I am comparing the speed of importing a large number of records between a VM on ESXi 6.5 and a VM on a bare-metal Hyper-V 2016 install.

The hypervisors are installed on two separate servers of the same type, configured with exactly the same hardware: Dell PowerEdge R220s.

I have found the Hyper-V virtual machine to be a lot slower, taking more than twice as long to import records as the ESXi VM.

Both VMs have 4GB RAM and a single-core vCPU. During the import I have been monitoring CPU utilisation in the VMs and noticed the ESXi VM using up to 90%, and the Hyper-V VM using around 45%.

I am at a loss as to why Hyper-V is slower, and wondered if you have any ideas why I am seeing such a difference in performance, please?

Your workload is not CPU-bound. Look at other metrics.

My assumption would be storage configuration, possibly Hyper-V’s I/O load balancer trying to ensure there is sufficient access to storage for other VMs.

Eric, thanks for this informative guide. A colleague and I somehow have different takes on the same article.

So we wanted to go to the source. We have a host with (2) 8 core pCPUs (16 cores in total). On that host we have 2 VMs only, running 4 vCPUs each. The VMs replaced identical physical servers with (2) 6 core pCPUs (12 cores). The VMs were originally built with 8 vCPUs each. We recently changed the vCPUs down to 4 because it was felt that more vCPUs created an artificial overhead (bottleneck) which was slowing the servers’ performance. But my read was that for the best performance you should stack more vCPUs to allow more threads to get in line for CPU processing.

This host will only ever run these 2 VMs. How many vCPUs would you recommend?

Context switches kill CPU core performance more than most anything else. Stacking vCPUs is “bad” because it will ensure more context switches. Yes, it allows any given VM to queue up more processes, but it also means that all VMs will queue up on the same core, which leads to more context switching.

To reduce context switching to its absolute minimum, you’d need to push toward a 1:1 vCPU:pCore ratio.
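As a quick sanity check on that ratio, the sketch below computes it for the host in the question (16 physical cores, 2 VMs). The function name is hypothetical, and it counts only vCPUs assigned to guests; it ignores the management operating system.

```python
from typing import Sequence


def vcpu_to_pcore_ratio(vcpus_per_vm: Sequence[int], physical_cores: int) -> float:
    """Total vCPUs assigned across all VMs divided by the host's physical cores."""
    if physical_cores < 1:
        raise ValueError("physical_cores must be >= 1")
    return sum(vcpus_per_vm) / physical_cores


if __name__ == "__main__":
    # Current configuration: 2 VMs with 4 vCPUs each on 16 cores.
    print(vcpu_to_pcore_ratio([4, 4], 16))
    # Original configuration: 2 VMs with 8 vCPUs each on 16 cores.
    print(vcpu_to_pcore_ratio([8, 8], 16))
```

On this host, 8 vCPUs per VM lands exactly on 1:1, while 4 vCPUs per VM leaves half of the cores with no vCPU to run.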

You will also need to take a look at NUMA. I assume that you’ve also allocated most of the memory to the VMs? What you want to try to do is keep each of your VMs’ memory within a NUMA node. Hyper-V may still choose to span, but it will also span the vCPUs. If one VM has significantly more memory than the other, that poses a problem for NUMA. If you can balance the memory and vCPU, that’s “best”. If you can’t balance the memory, then balance vCPU count in line with memory allocation.
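One way to read “balance vCPU count in line with memory allocation” is a simple proportional split, sketched below. The function name, the rounding rule (largest remainder), and the memory figures in the example are all assumptions for illustration; they are not taken from the host being discussed.

```python
from typing import List, Sequence


def vcpus_in_line_with_memory(memory_gb: Sequence[float], total_vcpus: int) -> List[int]:
    """Split `total_vcpus` across VMs in proportion to each VM's memory.

    Fractional shares are rounded with the largest-remainder method so the
    result always sums to `total_vcpus`.
    """
    total_memory = sum(memory_gb)
    if total_memory <= 0 or total_vcpus < 0:
        raise ValueError("need positive total memory and a non-negative vCPU count")
    exact = [total_vcpus * m / total_memory for m in memory_gb]
    shares = [int(e) for e in exact]  # floor of each exact share
    leftover = total_vcpus - sum(shares)
    # Hand the remaining vCPUs to the VMs with the largest fractional parts.
    order = sorted(range(len(exact)), key=lambda i: exact[i] - shares[i], reverse=True)
    for i in order[:leftover]:
        shares[i] += 1
    return shares


if __name__ == "__main__":
    # Hypothetical example: 16 vCPUs to share between a 96 GB VM and a 32 GB VM.
    print(vcpus_in_line_with_memory([96, 32], 16))
    # Equal memory gives an equal split.
    print(vcpus_in_line_with_memory([64, 64], 16))
```

Treat the result as a starting point only; the guests still need to show real CPU demand before the extra vCPUs matter.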

All of that assumes that your guests really are CPU-bound. In my experience, that’s a rare situation. Make sure that you capture performance traces from within the guests to confirm. If they’re not CPU-bound, then this sort of discussion is fun academically, but practically pointless.

My standing recommendation is to assign 2 vCPU per virtual machine and only increase for the virtual machines that display CPU bottlenecks. I make exceptions in cases like yours where that would lead to unused cores. However, I still say to increase only where you see the demand. So, if one of your 2 VMs is burning CPU, then give it more vCPUs and leave the other alone. If they’re both burning, then you’ve got a balancing act to perform.

So I should have given you the full specs of the host and VMs before asking the question. The host has 128GB with the 16 pCores. The VMs are identical, with dynamic RAM: 8GB to start, 32GB max. They are not CPU-bound (a SQL database and an app server that uses said DB). We agree to allocate the available resources instead of letting them sit idle.

We are testing a new host/VM platform for our clients and were not sure what was best practice for this.

Thanks for your advice! And keep these articles coming ;-)!

Any thoughts on how vCPUs assigned to VMs relate to core counts when dealing with MS licensing? For example, for a Hyper-V VM with 8 vCPUs assigned and running SQL, which is licensed by number of cores, would 8 vCPUs translate to 8 cores for licensing purposes?

For Windows licensing, only the host cores are counted.

For SQL, I’m not entirely certain. I know that in one method, you license all of the physical cores and then you can run SQL in as many instances as you want. I don’t know if there is a way to only license vCPU.

Check with your license reseller.

Hi Eric,

I would just like to clarify this: “you can’t assign more vCPUs to a virtual machine than you have physical cores in your host”.

I thought it was the number of logical processors that is taken into account for the maximum number of vCPUs that can be assigned to a virtual machine, not the number of pCPU cores.

Am I right?