Very little is said about file systems and formatting for Hyper-V Server deployments, but they often raise a number of questions. This offering in our storage series will examine these aspects of storage preparation in detail.

Part 1 – Hyper-V storage fundamentals

Part 2 – Drive Combinations

Part 3 – Connectivity

Part 4 – Formatting and file systems

Part 5 – Practical Storage Designs

Part 6 – How To Connect Storage

Part 7 – Actual Storage Performance

Sector Alignment

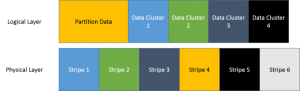

This section is mostly to lay to rest some advice that was very good for the Windows Server 2003 days and earlier. It’s still floating around as current, though, which is wasting a lot of people’s time. When a disk is formatted, a portion of the space is for partitioning and formatting information. After that, the actual data storage begins. The issue can best be seen in an image:

For single-disk systems, it doesn’t really matter much how this is all laid out on the disk. Reads and writes occur the same way no matter what. That changes for striped RAID systems. In all Microsoft disk operating systems, any given file always uses a whole number of clusters. So, if you have a 4 KB cluster size, a 512-byte file will still use an entire 4 KB cluster. A 373 KB file will use exactly 94 clusters. Two files will never share a cluster. So, imagine you are writing a file to the pictured system. The contents of a file are placed into data cluster 2. As you’ll recall from part 2 of this series, this will trigger a read-modify-write on stripes 3 and 4. If the clusters and stripes were aligned, the write would cover an entire stripe and the RMW wouldn’t be necessary. That means only one write operation instead of two reads and two writes. In aggregate, that’s a substantial difference.
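To make the read-modify-write penalty concrete, here is a minimal sketch that counts how many stripes a single cluster-sized write touches. It assumes the matched 4 KB cluster and stripe size from the illustration, and it uses a deliberately simplified cost model: a fully covered stripe costs one write, and each partially covered stripe costs one read plus one write, mirroring the "two reads and two writes" accounting above rather than how any particular controller schedules I/O. The function names are hypothetical.

```python
def stripes_touched(offset: int, length: int, stripe: int) -> list[tuple[int, bool]]:
    """Return (stripe_index, fully_covered) for every stripe a write overlaps."""
    end = offset + length  # exclusive end of the write
    first = offset // stripe
    last = (end - 1) // stripe
    touched = []
    for index in range(first, last + 1):
        stripe_start = index * stripe
        stripe_end = stripe_start + stripe
        fully_covered = offset <= stripe_start and end >= stripe_end
        touched.append((index, fully_covered))
    return touched


def io_cost(offset: int, length: int, stripe: int) -> tuple[int, int]:
    """Simplified (reads, writes): a fully covered stripe costs one write;
    a partially covered stripe costs one read plus one write (read-modify-write)."""
    reads = writes = 0
    for _, fully_covered in stripes_touched(offset, length, stripe):
        writes += 1
        if not fully_covered:
            reads += 1
    return reads, writes


if __name__ == "__main__":
    CLUSTER = STRIPE = 4 * 1024   # matched cluster and stripe size, as in the image
    misaligned_start = 63 * 512   # partition offset that is not a multiple of the stripe
    aligned_start = 1024 * 1024   # partition offset that is a multiple of the stripe
    print("misaligned:", io_cost(misaligned_start, CLUSTER, STRIPE))  # (2, 2)
    print("aligned:   ", io_cost(aligned_start, CLUSTER, STRIPE))     # (0, 1)
```

With the misaligned start, one cluster straddles two stripes and both need a read-modify-write; with the aligned start, the same cluster fills exactly one stripe.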

So, the advice was to modify the offset location. In the above image, that just changes where the first data cluster begins so that it lines up with the start of a stripe. If you’re interested, there is a Microsoft knowledge base article that describes how to set that offset. The commands still work. However, starting with Windows Server 2008, the default offset (1 MB) should align with practically all RAID systems. If you want to verify this for your system, you’ll first need to know the stripe size of your array. Then, use the calculation examples at the bottom of the linked article to determine your alignment.
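The core of that verification is a divisibility check: the partition’s starting offset divided by the stripe size should be a whole number. The sketch below assumes you already have both values. The offset can be read, for example, from the `Offset` property returned by PowerShell’s `Get-Partition` on Windows Server 2012 and later, and the stripe size comes from your controller. Vendors do not all use "stripe size" to mean the same thing, so check your controller’s documentation. The two constants are Windows’ historical defaults (63 sectors before 2008, 1 MB from 2008 on), used here only as illustrations.

```python
SECTOR_BYTES = 512

# Default partition starting offsets, in bytes.
LEGACY_OFFSET = 63 * SECTOR_BYTES   # 32,256: Windows Server 2003 and earlier
MODERN_OFFSET = 1024 * 1024         # 1,048,576: Windows Server 2008 and later


def is_aligned(partition_offset: int, stripe_bytes: int) -> bool:
    """True when the partition starts exactly on a stripe boundary."""
    return partition_offset % stripe_bytes == 0


if __name__ == "__main__":
    for stripe_kb in (64, 128, 256, 512):
        stripe = stripe_kb * 1024
        print(f"{stripe_kb:>4} KB stripe: "
              f"legacy aligned={is_aligned(LEGACY_OFFSET, stripe)}, "
              f"modern aligned={is_aligned(MODERN_OFFSET, stripe)}")
```

For every stripe size in the loop, the legacy offset reports `False` and the 1 MB offset reports `True`. That result is why the old offset advice is rarely needed today.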

The illustrations and wording so far indicate a matched cluster and stripe size. In most production systems, perfectly matching them is not feasible. Your goal should only be to ensure that the alignment is correct. Stripes are generally larger than clusters, and, because a cluster can contain data for only a single file, large clusters result in a lot of slack space. Slack space is the portion of a cluster that contains no data because the file has already ended. For instance, in our earlier example of a 373 KB file on a 4 KB cluster size, there will be 3 KB of slack space (94 clusters × 4 KB = 376 KB). If you managed to format your system with 256 KB clusters (the actual maximum is 64 KB) to match a 256 KB stripe size, you’d have 139 KB of slack space for the same file (2 clusters × 256 KB = 512 KB). As you can imagine, this can quickly result in a lot of wasted space. That said, the files you’re most concerned with for your Hyper-V storage are large VHD files, so using a larger cluster size might be beneficial. If you choose to investigate this route, keep in mind that performance gains are likely to be minimal to the point of being undetectable.
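The slack-space arithmetic is just ceiling division. This is a minimal sketch under the simplified model described above: every file occupies whole clusters, and no two files share one. It ignores NTFS details such as very small files being stored directly in the MFT. The helper name `cluster_usage` is hypothetical.

```python
KB = 1024


def cluster_usage(file_bytes: int, cluster_bytes: int) -> tuple[int, int]:
    """Return (clusters used, slack bytes) for one file.

    Simplified model: every file occupies whole clusters and no two files
    share a cluster.
    """
    clusters = (file_bytes + cluster_bytes - 1) // cluster_bytes  # ceiling division
    slack = clusters * cluster_bytes - file_bytes
    return clusters, slack


if __name__ == "__main__":
    size = 373 * KB
    for cluster_kb in (4, 64, 256):
        clusters, slack = cluster_usage(size, cluster_kb * KB)
        print(f"{cluster_kb:>3} KB clusters: {clusters} clusters, {slack // KB} KB slack")
```

For the 373 KB file, this reports 94 clusters with 3 KB of slack at 4 KB, 6 clusters with 11 KB at 64 KB, and 2 clusters with 139 KB at 256 KB. A multi-gigabyte VHD can never waste more than one cluster, so its slack is a negligible fraction of its size.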

Partitioning and Disk Layout

You have a finite number of disks and a number of concerns they need to address. In a standalone system, you may be using all internal disks. A common and natural question is how best to use these disks. You need to think about where you’re going to put Hyper-V and where you’re going to put your virtual machines.

Internal and Local Storage

First, Hyper-V needs nearly no disk performance for itself. The speed of the disk(s) you install Hyper-V on will affect nothing except startup times. Once started, Hyper-V barely glances at its own storage. It also does not perform meaningful amounts of paging, so do not spend time optimizing the page file. This is an important consideration, as more than a few people insist that Hyper-V should be installed on SSDs. That is an absurd waste of resources. Nirmal wrote an article detailing how to run Hyper-V from a USB flash disk, and a flash disk provides more than sufficient horsepower for the hypervisor.

The benefit to a USB stick deployment is that all of your drive bays are free for virtual machines. The drawback is that USB sticks are somewhat fragile. Of course, they’re also easily duplicated, so this is something of a manageable risk. Despite this, it may not be an acceptable risk. In that case, you’ll need to plan where you’re going to put Hyper-V.

One option is to create a single large partition and use it for Hyper-V and virtual machines. If you’ve already installed Hyper-V, then you know that it defaults to this configuration. It’s generally not recommended, but there’s no direct harm in doing so. A preferable deployment is to separate Hyper-V and its management operating system from the virtual machines. With internal disks, there are a couple of ways to accomplish this.

One is to create a single large RAID array out of the physical disks using dedicated hardware. Most software solutions can’t use all of the disks this way, because they need an operating system to be present before they can place disks into any kind of array except a mirror. On the hardware RAID, create two logical volumes (this is Dell’s terminology; your hardware vendor may use something else): one of 32 GB or greater (I prefer 40 GB) for Hyper-V, and a large one on the rest of the space for virtual machines. You can also divide the virtual machine space into smaller volumes and allocate virtual machines as you see fit. You may also use fewer logical volumes and carve up space with Windows partitioning, if you prefer.

Another option is to dedicate one or two disks to Hyper-V and the rest to the virtual machines. This establishes a clear distinction between the hypervisor/management operating system and the virtual machines. If you choose this route, there’s no practical benefit in using more than two disks, in a RAID-1 configuration, for the hypervisor. One benefit of this layout is that, in the absence of a dedicated array controller, the remaining disks can be used in a Storage Space. Remember that this places a computational burden on the CPUs that will also be tasked with running your virtual machines.

Remote Storage

The basics of remote storage aren’t substantially different from the above. Drives are placed into arrays and then either presented as a whole or carved up into separate units. Your exact approach will depend on your equipment and your needs.

Don’t forget that you’ll need to consider where to place the hypervisor. You can use any of the internal storage options presented above, or you can use a boot-from-SAN approach.

Virtual Machines on Block-Level Storage

I’m not going to distinguish between FC and iSCSI here. Either connectivity method results in the same overall outcome: your Hyper-V host(s) treat block-level storage as though it were local. What matters here is how that storage is configured.

If your storage system supports it, you can choose to create an array out of a few disks and present that entire array as a storage location to one or more hosts. You can then place other disks into other arrays, and so on. The benefit of this approach is that it’s generally simpler to manage and much easier to conceptualize. You’ll be able to put a sticker on certain physical disks and indicate which host(s) they exclusively belong to. It also helps to reduce the amount of risk that any given host is exposed to. Let’s say that you have fifteen disks in your storage system and you create five three-disk RAID-5 arrays. Each of these five arrays can lose a disk without losing any data. A rebuild of one array to replace a failed drive does not place the disks in other arrays at any risk at all. The drawback is that this is a very space-inefficient approach. In the five-by-three example, the space of five disks is completely unusable. Also, any given array can only operate at the speed of three drives.

Another approach is to create a single large array across all available disks in a storage location. Then, you can create a number of smaller storage locations that use all available drives. You wind up with a level of data segregation, but the data is at greater risk since the likelihood of multiple drive failures increases as you add drives. However, performance is improved as all drives are aggregated for all connected systems.
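The capacity and risk trade-off between these two layouts can be put into numbers. This sketch assumes RAID-5 throughout, which gives up one disk of capacity per array and loses data when two disks in the same array fail before a rebuild completes. It also assumes independent disk failures, each with a per-disk probability `P` within one rebuild window. `P = 0.02` is a purely hypothetical input, not a measured failure rate, and the function names are illustrative.

```python
def raid5_usable_disks(disks_per_array: int, arrays: int = 1) -> int:
    """Disks' worth of usable capacity: RAID-5 spends one disk per array on parity."""
    return arrays * (disks_per_array - 1)


def p_raid5_loss(disks: int, p_fail: float) -> float:
    """Probability that two or more disks in one RAID-5 array fail in the same window.

    Assumes independent failures, each with probability p_fail (a hypothetical input).
    """
    p_none = (1 - p_fail) ** disks
    p_exactly_one = disks * p_fail * (1 - p_fail) ** (disks - 1)
    return 1 - p_none - p_exactly_one


if __name__ == "__main__":
    P = 0.02  # hypothetical per-disk failure probability within one rebuild window
    small = p_raid5_loss(3, P)
    print("five 3-disk arrays: usable disks =", raid5_usable_disks(3, arrays=5))
    print(f"  chance a given array loses data: {small:.4%}")
    print(f"  chance any of the five loses data: {1 - (1 - small) ** 5:.4%}")
    print("one 15-disk array: usable disks =", raid5_usable_disks(15))
    print(f"  chance the array loses data: {p_raid5_loss(15, P):.4%}")
```

The five small arrays yield 10 usable disks against 14 for the single array, which is the five-disk penalty described above. Under these assumptions, however, the large array is far more likely to suffer a double failure, and when it does, every connected host is affected rather than one.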

Of course, you also have the option to create a single large storage location and connect all hosts to it. For block-level storage, all Hyper-V hosts must be in the same cluster for this to work. Otherwise, there is no arbitration of ownership or access and, assuming you can get it to work at all, you’re going to run into trouble. This is not a recommended approach. I’ve used Storage Live Migration (and the regular storage move offered in System Center Virtual Machine Manager 2008 R2) to solve a number of minor problems and was very glad to have distinct storage locations. On one occasion, I disconnected storage from a 2008 R2 cluster, connected it to a new 2012 cluster, and imported the virtual machines in place. Having the storage divided into smaller units allowed me to do this in stages rather than all at once.

In block-level storage, these storage locations are typically referred to as LUNs. The acronym stands for “Logical Unit Number”, although it’s not quite as meaningful today as it was when it was first coined since we rarely refer to them with an actual number anymore. The “logical” portion still stands. The storage system is responsible for determining how a LUN is physically contained on its storage, but the remote system that connects to it just sees it as a single drive.

Virtual Machines on File-Level Storage

As discussed in part three, SMB 3.0 and later versions allow you to place virtual machines directly on file shares. Connecting hosts have no concern with the allocation, layout, format, or much of anything else about the actual storage. All they need is the contents of the files they’re interested in and the permissions to access them. This matters because SMB is an open protocol that anyone can implement. Reports are that SMB 3.0 will be incorporated into the Samba project, which implements SMB on Linux operating systems. Storage vendors such as EMC are already producing devices with SMB 3.0 support, and more are on the way. The storage location is prepared on the storage side with a file system, and then a share is established on it. Access permissions control who can connect and what they can do.

If you’re configuring the SMB 3.0 share on a Windows system, remember your NTFS/share training. NTFS and share permissions each have their own access control list, and within each list a user receives the least restrictive setting granted to any of their groups, with Deny overriding. However, when NTFS and share permissions are combined, the more restrictive of the two results applies.

As an example, consider an account named Sue. Sue is a member of a “Storage Admins” group and a “File Readers” group. The “Storage Admins” group is a member of the “Administrators” group on SV-FILE1, which contains a folder called “CoFiles” that is shared as “CompanyFiles”. The NTFS permissions on “CoFiles” give the built-in group “Authenticated Users” Read permissions and “Administrators” Full Control. The “CompanyFiles” share gives “File Readers” Read permissions. When Sue accesses the files while sitting at the console of SV-FILE1 and navigating through local storage, she has full rights. This is because she is in both “Authenticated Users” and “Administrators”: the Full Control permission granted by her membership in “Administrators” is less restrictive than the Read permission she holds as a member of “Authenticated Users”, and no Deny is set, so she ends up with Full Control at the NTFS level. However, when she accesses the same location through the “CompanyFiles” share, she only has Read permissions. She is not a member of any group that has more than Read permissions on that share’s access control list, so Read is her least restrictive share permission. That she has Full Control at the NTFS level does not matter, because the share permissions won’t even let her try.

Remember that when you’re creating shares for Hyper-V to use, you grant access permissions to computer accounts, not user accounts. Microsoft details how to set up such a share on TechNet.
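The two-stage rule lends itself to a short model. The sketch below applies "least restrictive wins, Deny overrides" within a single ACL, then "most restrictive wins" across the NTFS and share results, and reproduces Sue’s outcome. It is a deliberate simplification. The four ordered levels, the all-or-nothing Deny, and the names `Level`, `Entry`, `acl_level`, and `over_share` are hypothetical. Real Windows ACLs evaluate individual rights, and a Deny applies only to the rights it names.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Simplified, ordered permission levels (higher = less restrictive)."""
    NONE = 0
    READ = 1
    CHANGE = 2
    FULL_CONTROL = 3


@dataclass(frozen=True)
class Entry:
    level: Level
    deny: bool = False  # simplification: a Deny here blocks everything


def acl_level(acl: dict[str, Entry], groups: set[str]) -> Level:
    """Within one ACL: any matching Deny wins, otherwise the least restrictive grant."""
    matched = [entry for principal, entry in acl.items() if principal in groups]
    if any(entry.deny for entry in matched):
        return Level.NONE
    return max((entry.level for entry in matched), default=Level.NONE)


def over_share(ntfs: dict[str, Entry], share: dict[str, Entry], groups: set[str]) -> Level:
    """Across NTFS and share ACLs: the most restrictive result applies."""
    return min(acl_level(ntfs, groups), acl_level(share, groups))


if __name__ == "__main__":
    # Sue's effective memberships, with group nesting already expanded.
    sue = {"Sue", "Storage Admins", "File Readers", "Administrators", "Authenticated Users"}
    cofiles_ntfs = {"Authenticated Users": Entry(Level.READ),
                    "Administrators": Entry(Level.FULL_CONTROL)}
    companyfiles_share = {"File Readers": Entry(Level.READ)}

    print("Local (NTFS only):", acl_level(cofiles_ntfs, sue).name)               # FULL_CONTROL
    print("Via share:", over_share(cofiles_ntfs, companyfiles_share, sue).name)  # READ
```

For Hyper-V shares, the principals in both ACLs would be the hosts’ computer accounts rather than users such as Sue, but the evaluation order is the same.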

A benefit of the SMB share approach is that a lot of storage processing is offloaded to the storage device. Your Hyper-V hosts really only need to process basic reads and writes. Processing for low-level I/O and allocation table maintenance falls squarely on the storage device. With block-level access, much of that processing occurs on the Hyper-V host, even with the various offload technologies that are available. As SMB continues to mature, it should move closer to feature parity with the block-level techniques. Expect it to gain many new offloading features as time goes on.

Another advantage of SMB 3.0 is administrator familiarity. I happen to work in an institution where we have dedicated storage administrators to configure host access, but a great many businesses do not. Besides, it’s not fun waiting in another department’s queue when you need more storage right now. Configuring FC and iSCSI access isn’t hard on its own, but it’s unfamiliar. Windows administrators, on the other hand, generally have a good idea of how share and NTFS permissions work. Not as many actually understand things like RADIUS and CHAP administration, or how to troubleshoot them and watch for intrusions.

Disk Formats

We’ve already talked a bit about NTFS. For now, this is the default and preferred format for your Hyper-V storage. It’s the only option for boot volumes. This format has withstood the test of time and is well-understood. It has robust security features and you can enhance it with BitLocker drive encryption.

With 2012 R2, you’ll be able to use the new ReFS file system for Hyper-V virtual machine storage. ReFS adds protection against sudden loss of the storage, usually as a result of power outages. It also allows for larger volumes than NTFS, which will eventually become a necessity. It is also better at finding and repairing data corruption. The integrity stream feature doesn’t work with VHDs yet, though.

In my opinion, ReFS is good — still a work in progress, but viable. I wouldn’t get in a hurry to replace NTFS to implement it, but I wouldn’t be afraid to use it either. Without the integrity streams, one of the data protection powers of ReFS is unavailable. That may be rectified in a later version. There are a number of NTFS features that ReFS doesn’t support, but none that should present any real problem for Hyper-V storage. In time, those may be brought into ReFS as well. 2012 R2 only represents the second version of this technology, so it’s likely to have quite a future.

What’s Next

This article concludes the theoretical portion of the series. The next piece will slide into practice. You’ll see some possible ways to design and deploy a solid storage solution in a high-level overview.


10 thoughts on "Storage and Hyper-V Part 4: Formatting and File Systems"

Thanks for this amazingly helpful article!

I have questions on the Virtual Machines on Block-Level Storage section.

Could you please elaborate on your recommendation to avoid creating a single large storage location and connecting all hosts to it? I’m starting to plan out a lab and that’s exactly what I was going to do. Isn’t that one of the benefits of shared storage? I thought all of the hosts would need to be connected to the same LUN in order to be able to “share” those VMs for failover purposes.

You’ve linked to some other great articles before, can you recommend one on this specific issue?

Thanks again!

Part of it is just the basic notion of not putting all your eggs in one basket.

I think you’ll also find Storage Live Migration to be a lot more useful than it might seem at first blush. If you divide up your storage LUNs, you can move virtual machines around while you do such things as hypervisor upgrades.

I’ve also heard rumors that VMs running on the CSV owner node access their data faster than VMs on non-owner nodes. If that’s true, then using multiple CSVs and distributing ownership would help that. I intend to run some diagnostic tests to validate that, but as of this moment in time I don’t know if it’s truth or FUD.

No, I don’t know of any articles that specifically address this. It’s on my research list for part 7.

Hi

You wrote

and each assigns the least restrictive settings applicable to a user

I think that should be most restrictive

Cheers

Jeff

It is least restrictive. If you are a member of two groups, one which is granted Read and one which is granted Full Control, then Full Control — the least restrictive option — is your effective permission. As written in the article, Deny overrides all, but otherwise, least restrictive applies.
