
The first part of this series was dedicated to looking at some of the most basic concepts in storage. In this article, we’ll build on that knowledge to look at the ways you can use multiple drives to address shortcomings and overcome limitations in storage.

Part 1 – Hyper-V storage fundamentals

Part 2 – Drive Combinations

Part 3 – Connectivity

Part 4 – Formatting and file systems

Part 5 – Practical Storage Designs

Part 6 – How To Connect Storage

Part 7 – Actual Storage Performance

Why We Use Multiple Drives

Despite a lot of common misperceptions, the real reason we use multiple drives for storage is to increase the speed at which storage can perform. Data protection is a secondary concern, mostly because it is more difficult to achieve. When these schemes were initially created, they were notoriously poor at providing any protection. Drives were manufactured and sold in batches, so one drive failure in an array was usually followed very quickly by at least one other. Improvements in manufacturing made multiple drive systems somewhat more viable for data protection, for a time. As drive sizes increase, the ability of multi-drive builds to protect data is again on the decline. The performance improvements are the real driver behind these builds, and they are still worth the effort.

As illustrated in the first article, drives are slow. Solid state drives are much faster than spinning disks, but they’re still not as fast as other components. By combining drives in arrays where their contents can be accessed in parallel, we can aggregate their performance for much improved data throughput.

True Data Protection

Since it’s been brought up, remember that the only real way to protect your data is by taking regular backups. There is no stand-in, no workaround, no kludge, and no excuse that is satisfactory. Get good backups.

Traditional RAID

In the distant past, RAID stood for “Redundant Array of Inexpensive Disks”. But, as most people were quick to point out, disks weren’t inexpensive. So, the “I” can also mean “Independent”. As the name implies, the idea was that a disk or two could fail and, through redundancy, could be replaced without losing data. As indicated earlier, this worked better on paper than in practice. However, the multiple paths to store and retrieve data increased drive performance, so usage continued.

There are multiple levels of RAID. This post will only examine the most common.

RAID-0

The zero in the name indicates “no redundancy”. This RAID type is most commonly used in performance PCs and workstations. If any drive in the array fails, all data is lost and must be restored from backup.

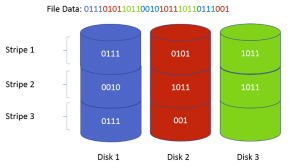

RAID-0 is useful to understand because its striping mechanism is used in all but one of the other common types. When data is written, it is spread as evenly as possible across all drives in the array in a pattern called a stripe. This is most easily understood by diagram:

The sample file data has been color-coded to show how it is distributed across a three disk RAID-0 array (sorry, those of you who are colorblind). You may notice that the final stripe isn’t full. The next file to be written will be able to use that empty space.

RAID-0 is used in performance systems because it is a pure aggregation of the drives’ abilities. When the file above is written, the controller writes to all three drives simultaneously. When the file is read, it is retrieved from all three drives simultaneously. Adding more drives to a RAID-0 directly improves its performance, but it also increases its risks as each new drive adds another potential point of failure that can bring the entire array down.
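The striping pattern described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the function names `stripe_blocks` and `read_striped` are made up for this example, each "block" is just a label, and a real controller works with fixed-size chunks on physical disks rather than lists.

```python
def stripe_blocks(blocks, drive_count):
    """Distribute blocks round-robin across drives, RAID-0 style."""
    if drive_count < 2:
        raise ValueError("RAID-0 needs at least two drives")
    drives = [[] for _ in range(drive_count)]
    for index, block in enumerate(blocks):
        # Block 0 goes to drive 0, block 1 to drive 1, and so on, wrapping around.
        drives[index % drive_count].append(block)
    return drives


def read_striped(drives):
    """Reassemble the original block order from the per-drive lists."""
    count = len(drives)
    total = sum(len(drive) for drive in drives)
    return [drives[i % count][i // count] for i in range(total)]


# Hypothetical file made of eight blocks, written to a three-disk array.
file_blocks = ["A1", "A2", "A3", "A4", "A5", "A6", "A7", "A8"]
layout = stripe_blocks(file_blocks, 3)
for number, contents in enumerate(layout):
    print(f"disk {number}: {contents}")
```

With eight blocks on three disks, the last stripe is only partly filled, which matches the unfilled final stripe in the diagram. Because every block lives on exactly one disk, losing any one list in `layout` makes the file unrecoverable.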

A RAID-0 array requires a minimum of two drives, but its upper limit is dictated by the controller (usually, the number of available drive bays is exhausted first). It is very uncommon to find such an array in a virtualization system.

RAID-1

RAID-1 is the only common RAID type that does not use a stripe pattern. It is known as a mirror array. It requires exactly two drives. Incoming data is written to both disks simultaneously, but because it is the same data, the write speed is the same as if there were only a single drive. If the array controller is smart enough, data can be read from both drives simultaneously but from different locations, allowing the read speed of both drives to be combined.

One drive in a RAID-1 can fail and the other will continue to function. The loss of a drive is called a broken mirror. When the drive is replaced, a rebuild operation must take place, which simply copies the data from the surviving drive to the replacement. As this is an extremely heavy I/O operation, it is not uncommon for the other drive to fail during a rebuild. Some controllers have the ability to throttle rebuild speed (although this is generally to reduce the impact of the rebuild on standard data operations more than anything else).

The RAID-1 type is most commonly used to hold operating systems and SQL log files. It is generally not used to hold virtual machine data. However, in small installations with minimal use virtual machines, it would be acceptable.

RAID-5

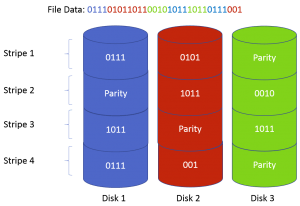

With RAID-5, we return to the striping mechanism. This system uses parity, in which part of the data saved onto disk participates in an error detection and control system. As with RAID-0, this is most easily understood by diagram:

For those interested, the parity calculation is a simple bitwise XOR of the live data. In the event that one of the drives is lost, this parity data can be used to mathematically reconstruct the missing data on the fly. That operation takes time, but the array can function in this degraded mode. The purpose of distributing the parity as seen in the diagram is to minimize the effects of the loss of a drive. Instead of calculating for an entire drive’s worth of data, the array can skip the calculation for blocks that only contained parity.
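To make the XOR idea concrete, here is a minimal sketch of one stripe on a hypothetical four-drive RAID-5 (three data blocks plus one parity block). The helper name `xor_blocks` and the sample byte strings are invented for illustration; a real controller does the same arithmetic on much larger blocks.

```python
from functools import reduce


def xor_blocks(blocks):
    """Return the bitwise XOR of several equal-length byte blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe: three data blocks and the parity block computed from them.
data = [b"HYPER", b"-V ST", b"ORAGE"]
parity = xor_blocks(data)

# Simulate losing the drive that held the second data block.
survivors = [data[0], data[2], parity]

# XOR of everything that survived yields the missing block.
rebuilt = xor_blocks(survivors)
print(rebuilt)
```

The reconstruction works because XOR-ing a value with itself cancels out, so combining the parity with the surviving data leaves only the lost block. It also shows why a second simultaneous loss is fatal for RAID-5: with two blocks missing, there is no longer enough information to solve for either.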

After a failed drive is replaced, a rebuild cycle begins. As with RAID-1, this can be a vulnerable time due to the uncommonly high I/O load. Many RAID controllers allow rebuilds to be throttled.

There are some other considerations for RAID-5 to speak of, but RAID-6 also shares these concerns so discussion will be held until after that section.

RAID-5 requires a minimum of three drives. Its maximum is limited by the controller and available bays.

RAID-6

RAID-6 is very similar to RAID-5 except that it uses two parity blocks instead of one. This allows the array to lose two drives and continue to function. The math involved in its parity calculations is more complex than the simple XOR technique of RAID-5. The impact is not usually noticeable with hardware RAID controllers but it’s something to watch out for with software arrays.

Concerns with Parity RAID

Parity RAID systems are beginning to fall out of favor in higher-end and large capacity systems. The reasoning for doing so is valid, but there are also a lot of FUD-spreading extremists who are advocating for the death of parity RAID regardless of the situation. This condemnation will eventually be the rule, but for now it is still very premature.

Write Performance

When a parity RAID system makes a write that only changes part of the data in a stripe, it first has to read the existing data back so that it can recalculate the parity for the final combination of the pre-existing and the new/replaced data. Only then can it write the data. This entire process is known as a read-modify-write (RMW). It requires one extra read cycle before the write cycle. Naturally, that drags down performance. However, there are four mitigating factors. First, most loads are heavier on reads than writes (usually 60% reads or higher), and reads occur at the cumulative speed of all drives in the array minus one or two (because the parity block(s) is/are not read). Second, once the drives seek to where the stripe is, the entire RMW process occurs in an extremely localized area, which means that performance will be at the drives’ fastest rate. Third, a write-back cache on the array controller may cause the process to return to the operating system immediately such that the I/O effect is never felt. Finally, this write penalty is a known issue and should have been accounted for when the storage system was designed such that the minimum available IOPS remain above what is necessary for the connected system. I would also suggest a fifth mitigating factor: some people just have an unhealthy obsession with performance and it’s safe to ignore them. Another thing often overlooked is that a write that encompasses all blocks in a stripe is written immediately, with no read cycle required. The best way to increase performance of a RAID system is to increase spindles, so drop another disk or two in that new system you’re about to order and don’t worry.
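The read-modify-write cycle can be sketched for single-parity (RAID-5 style) XOR as follows. The function names and byte values are hypothetical, and the sketch assumes the common shortcut of reading only the old data block and the old parity block; whether a given controller does this or re-reads the whole stripe is an implementation detail this article does not cover. The final comparison shows that both approaches arrive at the same parity.

```python
def xor_bytes(a, b):
    """Bitwise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def full_parity(blocks):
    """Recompute parity from every data block in the stripe."""
    result = bytes(len(blocks[0]))
    for block in blocks:
        result = xor_bytes(result, block)
    return result


def read_modify_write(old_data, old_parity, new_data):
    """Derive new parity from the old block, the old parity, and the new block."""
    # Read phase: old_data and old_parity come off the disks.
    # Modify phase: cancel the old data out of the parity, then fold in the new.
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)


# Hypothetical stripe with three data blocks.
stripe = [b"\x0f\x10", b"\xa0\x01", b"\x33\x44"]
parity = full_parity(stripe)

# Overwrite only the middle block: a partial-stripe write.
new_block = b"\xff\x00"
new_parity = read_modify_write(stripe[1], parity, new_block)

# Write phase: the new data block and the new parity block go to disk.
stripe[1] = new_block
print(new_parity == full_parity(stripe))
```

Either way, the write cannot start until at least one read has finished, which is the penalty the paragraph above describes. A write that replaces every block in the stripe skips the read entirely because `full_parity` can be computed from the incoming data alone.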

Data Vulnerability

A more pressing concern than the write penalty is the vulnerability of data on the disks. Data on a disk is just a particular magnetization of coating on a glass platter. That magnetization status can and will slip into a non-discrete state. As bit densities increase and, as a direct result, the amount of coating that constitutes a single bit shrinks, the risk and effects of this demagnetization also increase. If the magnetization status of data in a stripe becomes corrupted on more than one drive, then the entire array is effectively corrupted. This is a very real concern and you should be aware of it.

The problem with the extremist FUD-spreaders is that they act as though this is all new information and we’re just now becoming aware of it. The truth is, the potential for data corruption through demagnetization has always been a risk and we have always known about it. Each drive contains its own onboard techniques to protect against the problem. This is pretty much the only reason the single drive in your laptop or desktop remains functional after a week or two in the field. Beyond that, RAID systems have their own protection scheme in the form of an operation that periodically scans the entire drive system for these errors. This process is known as a scrub, and it’s been in every RAID system I’ve ever seen, going back to the late ’90s.

That said, the risk is increasing and eventually we will have to stop using parity RAID. Disk capacities are growing to the point that a scrub operation just can’t complete in a reasonable amount of time. Furthermore, these big drives take a long time to go through a rebuild operation, stretching out into days in some cases. The sustained higher I/O load over that amount of time puts some serious wear on the remaining drives and greatly increases the odds that they’ll fail while there is insufficient protection. RAID-6 provides that extra bit of protection and as such will be with us longer than RAID-5.
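A rough back-of-the-envelope calculation shows how rebuild time scales with drive size. The sustained rebuild rate used here (20 MB/s) is purely an assumed figure for illustration, not a measurement from any particular controller; real rates depend on throttling settings, drive speed, and how busy the array is.

```python
def rebuild_hours(capacity_gb, rate_mb_per_s):
    """Estimate hours needed to rewrite one whole drive at a steady rate."""
    seconds = (capacity_gb * 1000) / rate_mb_per_s  # decimal GB to MB
    return seconds / 3600


ASSUMED_RATE_MB_PER_S = 20  # hypothetical throttled rebuild rate

for capacity_gb in (450, 600, 2000):
    hours = rebuild_hours(capacity_gb, ASSUMED_RATE_MB_PER_S)
    print(f"{capacity_gb} GB drive: about {hours:.1f} hours")
```

The relationship is linear, so at any fixed rate a drive with four times the capacity spends four times as long in the vulnerable rebuild window.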

So, the risk is real. But again, it’s on the big drives. Not everyone is jumping to those big drives, though, especially since they’re still not the fastest. The performance hounds are still using 450GB and smaller drives. These have no problems completing scrubs. Where is the sweet spot? I’m not certain, exactly, but I wouldn’t guess at it, either. I’d have the engineers for any RAID system I was considering tell me. They’ll have access to the failure rates of their systems. As a rule of thumb, I feel comfortable using 15k 600GB drives in a RAID-5. Putting a stack of 5400 RPM 2TB drives in a RAID-5 is just begging for data loss.

RAID-10

RAID-10 is a type of hybrid RAID in which multiple RAID types are combined in a single array. This particular type is called a “stripe of mirrors”. First, you take two or more pairs of drives and make them into mirrors. You then create a stripe across those mirrors. Just look at the RAID-0 diagram and imagine that each disk is actually two disks.

The primary reason to use RAID-10 is performance. With a well-designed controller, reads can occur from separate portions of every disk in the array, making this by far the fastest-reading RAID available. Writes occur at the combined speed of half the disks.

RAID-10 has historically been the preferred medium for SQL data, simply for the performance. However, it is also becoming the de facto replacement for parity RAID. Since data protection occurs across pairs and not the entire array, data integrity scans are quicker. If a drive is lost, only that pair needs to be rebuilt, so only one disk is placed at high I/O risk and not the entire array.

The drawbacks with RAID-10 are logistical in nature. Fully half of the physical drive space is reserved for redundancy, making it the most expensive per-gigabyte of the common RAID types. Reaching an equivalent storage capacity in comparison to a parity RAID also means filling lots of drive bays, which could quickly run out.

RAID-10 requires a minimum of four drives and is limited by the controller and available drive bays.
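The capacity trade-offs of the RAID levels covered so far can be summarized with a short calculation. This is a sketch that assumes identical drives; the function name `usable_capacity_gb` is made up for this example, and the four-drive minimum for RAID-6 is the commonly cited figure rather than something stated earlier in this article.

```python
def usable_capacity_gb(level, drive_count, drive_size_gb):
    """Return usable space for an array of identical drives."""
    minimums = {"RAID-0": 2, "RAID-1": 2, "RAID-5": 3, "RAID-6": 4, "RAID-10": 4}
    if level not in minimums:
        raise ValueError(f"unknown RAID level: {level}")
    if drive_count < minimums[level]:
        raise ValueError(f"{level} needs at least {minimums[level]} drives")
    if level == "RAID-1" and drive_count != 2:
        raise ValueError("RAID-1 uses exactly two drives")
    if level == "RAID-10" and drive_count % 2:
        raise ValueError("RAID-10 needs an even number of drives")

    if level == "RAID-0":
        data_drives = drive_count        # no redundancy at all
    elif level == "RAID-1":
        data_drives = 1                  # second drive is a full copy
    elif level == "RAID-5":
        data_drives = drive_count - 1    # one drive's worth of parity
    elif level == "RAID-6":
        data_drives = drive_count - 2    # two drives' worth of parity
    else:
        data_drives = drive_count // 2   # RAID-10: half the drives are mirrors
    return data_drives * drive_size_gb


# Hypothetical shelf of eight 600 GB drives.
for level in ("RAID-0", "RAID-5", "RAID-6", "RAID-10"):
    print(level, usable_capacity_gb(level, 8, 600), "GB")
```

With the same eight drives, RAID-10 yields the least usable space of the redundant options, which is the per-gigabyte cost described above.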

RAID Controller

RAID control can either be performed by dedicated hardware or in software. The preferred method is generally hardware. With modern hardware, parity calculations, scrub jobs, and mirror maintenance aren’t particularly heavy loads, but they’re still better handled in dedicated hardware. Also, many hardware RAID controllers include a battery that allows for write-back cache; in the event of power failure, the battery preserves the cache contents so that the controller can flush them to disk once power returns.

Software RAID is often controlled by operating systems. Of most relevance in this series is the Storage Spaces feature (not really a RAID system, but close enough for this discussion) that was released in Windows Server 2012, but even earlier Windows operating systems could run a software RAID. Many NAS systems employ a software RAID, although that’s generally obscured by a specially designed operating system. In a Hyper-V Server deployment, what you want to avoid is running a software RAID (or Storage Spaces) on the same system that’s running virtual machines. The division of labor can be a drain on performance.

JBOD

JBOD will be something you’re going to start seeing more of. It stands for “just a bunch of disks”, and it means that there are multiple disks present but no RAID. In earlier systems, this wouldn’t be a sufficient platform for virtualization. Now, with the introduction of Storage Spaces, JBOD can be made into a viable configuration.

Storage Spaces

Storage Spaces is Microsoft’s new entry in the storage arena. It’s a large software stack that’s devoted to presenting storage. It was first introduced in Windows Server 2012 (and Windows 8, but I’m not going to discuss the user-level implementation). You can create a storage pool out of a single disk or across multiple disks in a JBOD. It is not supported to use Storage Spaces on an existing hardware RAID, although the system will allow it in some instances. In case you’re wondering, yes, the Storage Spaces system can detect a hardware RAID and, depending on what you’re trying to accomplish, will warn you about the hardware RAID or will simply refuse to use it. Storage Spaces also prefers SAS disks, although it can work with SATA (and with USB drives, although these aren’t supported for a server deployment).

Storage Spaces is not a traditional RAID system, although it does have some similarities. The simple Storage Space is effectively a RAID-0, as it stripes data across the drives in its pool. You can create a mirror on your physical storage, which uses two or three drives in a similar fashion to RAID-1. What’s interesting about the mirror is that you can continue adding disks in columns, which essentially converts your mirror into a RAID-10. You can also create a parity space, which is similar to a RAID-5. Storage Spaces also grants you the ability to designate hot spares. If a drive in a mirror or parity space fails or is predicted to fail, Windows will automatically transfer its data to one of these hot spares so that the system doesn’t need to wait for human intervention.

The draw for Storage Spaces is that it can provide many of the most-desired capabilities of a dedicated hardware storage system at a lower price point than the average SAN. You use a general-purpose computer system running Windows Server 2012 or later and regular SAS drives. You also have the option to attach inexpensive JBOD enclosures to the system to extend the number of drives available for use. The storage can then be exposed to local applications (although hardware RAID is still preferred for that) or to remote systems through iSCSI or SMB 3.0. You can even create Cluster Shared Volumes on a Storage Space.

The nice thing about Storage Spaces is that it’s also available for hardware vendors. They can build hardware systems with an embedded copy of Windows Storage Server using Storage Spaces, and the result is an inexpensive networked storage device.

If you’re considering using Storage Spaces, I’d encourage you to consider waiting until 2012 R2 is released. It adds a number of capabilities to Storage Spaces and addresses some of the shortcomings in the initial release. For instance, the 2012 version doesn’t support CSVs on a parity space, while R2 does. Since there’s less than a month to go (as of this writing), the wait should be tolerable.

What’s Next

This article took one step up from the fundamentals and looked at the ways that hard drives can be arranged in order to increase performance and reliability. The next part in this series will take a deep look into the ways that you can connect your Hyper-V systems to storage.


7 thoughts on "Storage and Hyper-V Part 2: Drive Combinations"

How does Storage Spaces relate to any existing SAN setup that you have? Sorry but I am new to the storage side of things so would you use something like Storage Spaces with your SAN or would you just use your SAN to provide the storage?

I’ll say up front that I am by no means an expert on Storage Spaces. Storage Spaces is intended more for internal and direct-attached storage. I think there are ways to get it to work with a SAN but that’s not really its intended purpose.

When a SAN is present, my preference is to just have the nodes directly connect to it using CSV. There is the option of having one or more Windows Server systems in front of it presenting that storage as an SMB 3 share. That adds extra hardware and network hops, though, which translates to higher storage latency. The benefit would be that storage access would be controlled at the more familiar Windows layer and not on the hardware.

Amazing series of articles. You do a great job breaking down the basics that too many IT techs don’t fully understand.

Minor correction under RAID-5. I think the following sentence should say RAID-1 not RAID-0.

“After a failed drive is replaced, a rebuild cycle begins. As with the RAID-0, this can be a vulnerable time due to the uncommonly high I/O load. Many RAID-5 controllers also allow this to be throttled.”

Thank you! and thank you!

I will make the correction.
