
You may be aware that Hyper-V Server has two different virtual disk controller types (IDE and SCSI) and two different virtual network adapter types (emulated and synthetic). This post will look at the difference between emulated and synthetic hardware, as well as the way that a virtual machine interacts with SR-IOV-capable devices. As we look ahead to Hyper-V Server 2012 R2 and its introduction of generation 2 VMs, understanding the differences will become more important.

Disclaimer

This posting will cover some fairly detailed concepts at a quick, high level. I have chosen to strive for brevity and comprehensibility over strict accuracy. If you have a deeper interest in a thorough analysis of hardware, both physical and virtual, there are a great many resources available.

Basic Hardware Functionality

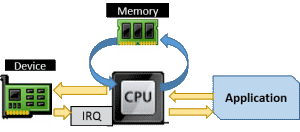

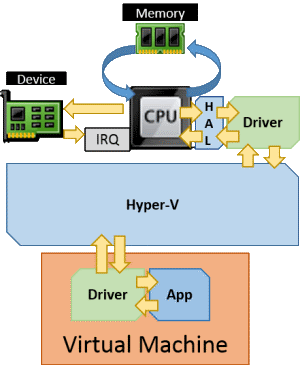

The CPU, as its name (Central Processing Unit) implies, is the essential core of what makes up a computer. It is connected over a bus to all other devices, including internal components such as memory, hard drives, and video adapters. There are many types of buses and all modern computers have more than one. But, regardless of the bus, all the hardware is controlled by the CPU.

Devices function by sending and receiving messages to and from the CPU. When software sends an instruction to disk to save a file, the instruction is processed by the CPU. Data going to and from the device is stored in a special location in memory set aside just for that device. Some ubiquitous devices have fixed, well-known locations in memory. For anyone who has been in the industry for a couple of decades or more, the address of “3F8” is known to belong to COM1. When testing modems connected to COM1, we could actually use DOS commands to copy “files” to COM1:

    COPY CON COM1
    ATDT 18001234567
    ATH
    ^Z

The above would COPY from the CONsole to COM1. Where the copy command typically works with files, the CON source replaces a disk file with input from the console. The next three lines were the data for that console “file”; in this case, the first line sends a standard modem command to dial the phone and the second sends the standard modem command to hang up. The final line represents the end-of-file character, which, from the console, means that you’re done providing the file.

What would happen behind the scenes is that the CPU would place this data in the I/O address space for COM1 (I/O address 3F8) and then signal the device that it had data to process. When the device wants to talk back, it will do so by raising an interrupt request (IRQ), which is a signal to the CPU that the owner of that IRQ has something to say. As with data heading to the device, its response will also be routed through that address space. Hopefully, a software component has registered an interrupt handler that tells the CPU who to notify of this request. Of course, in our little DOS example above, there’s no handler, so the modem just makes a connection and sits there. If you look at the Resources tab of any entry in Device Manager, you can see its memory address and IRQ.
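As a rough illustration of that flow, here is a toy Python model. It is only a sketch: the `ToyCpu` and `ToyModem` classes are hypothetical names invented for this example, and real hardware does not work through Python objects. The only real-world values are the COM1 address (3F8) and its conventional IRQ (4).

```python
from collections import defaultdict

COM1_PORT = 0x3F8   # well-known I/O address of COM1
COM1_IRQ = 4        # conventional IRQ line for COM1


class ToyCpu:
    """Hypothetical model: routes port writes to devices and IRQs to handlers."""

    def __init__(self):
        self.devices = {}                  # port address -> device
        self.handlers = defaultdict(list)  # IRQ number -> registered handlers

    def attach(self, port, device):
        self.devices[port] = device
        device.cpu = self

    def register_handler(self, irq, handler):
        self.handlers[irq].append(handler)

    def out(self, port, data):
        # Place data at the device's address and let the device process it.
        self.devices[port].receive(data)

    def raise_irq(self, irq, port):
        # The device signals that it has something to say. The response is
        # read from the same address and passed to every registered handler.
        response = self.devices[port].read()
        for handler in self.handlers[irq]:
            handler(response)
        return len(self.handlers[irq])     # number of handlers notified


class ToyModem:
    """Hypothetical modem that answers every command with 'OK'."""

    def __init__(self, port, irq):
        self.port, self.irq = port, irq
        self.cpu = None
        self.outbox = ""

    def receive(self, data):
        self.outbox = "OK"
        self.cpu.raise_irq(self.irq, self.port)

    def read(self):
        return self.outbox


cpu = ToyCpu()
cpu.attach(COM1_PORT, ToyModem(COM1_PORT, COM1_IRQ))

# No handler registered yet (as in the DOS example): the reply goes nowhere.
cpu.out(COM1_PORT, "ATDT 18001234567")

# Once a handler is registered, software is notified of the modem's reply.
replies = []
cpu.register_handler(COM1_IRQ, replies.append)
cpu.out(COM1_PORT, "ATH")
print(replies)  # ['OK']
```

The first command produces a response that nobody receives, which mirrors the handler-less DOS example; only the second command, sent after a handler is registered, reaches software.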

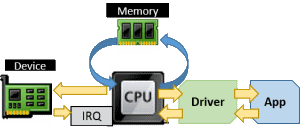

In later paradigms, drivers handle a lot of the nasty parts of talking to hardware. They register the interrupt handlers and know how to talk to the hardware. They expose simpler, and usually standardized, instruction sets that software can reference. So, instead of learning the exact way to get each and every printer to generate an “A”, applications can send a common command to any print driver that understands that common set and let the driver worry about the particulars. This was an early form of hardware abstraction in personal computing. At that stage, drivers were often an interface between a particular application and a particular device.
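The idea can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the `PrinterDriver` interface and the two driver classes are hypothetical, and the byte sequences they emit do not describe any specific printer.

```python
from abc import ABC, abstractmethod


class PrinterDriver(ABC):
    """The common command set that applications program against."""

    @abstractmethod
    def print_text(self, text: str) -> bytes:
        """Return the device-specific bytes that would be sent to the printer."""


class EscapeCodePrinterDriver(PrinterDriver):
    # Hypothetical device that expects a reset sequence before the text.
    def print_text(self, text: str) -> bytes:
        return b"\x1b@" + text.encode("ascii")


class LinePrinterDriver(PrinterDriver):
    # Hypothetical device that takes plain text terminated by CR/LF.
    def print_text(self, text: str) -> bytes:
        return text.encode("ascii") + b"\r\n"


def application_print(driver: PrinterDriver, text: str) -> bytes:
    # The application issues one common command; the driver handles the rest.
    return driver.print_text(text)


for driver in (EscapeCodePrinterDriver(), LinePrinterDriver()):
    print(type(driver).__name__, application_print(driver, "A"))
```

The application code never changes between printers; only the driver differs, which is the point of the abstraction.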

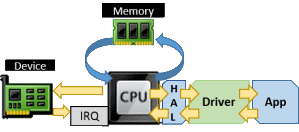

In the Windows world (and other OSs), the next step was abstraction at the operating system level. Windows introduced the Hardware Abstraction Layer, which sat directly atop the physical hardware as an intermediary between it and the drivers. The HAL offered a greater degree of stability and compatibility. Drivers became less application-oriented and more operating-system-oriented. The specifics of the HAL are not overly important to this discussion except to know that it’s there.

Emulated Hardware

With a basic understanding of how hardware functions, we can now transition to the virtualized world.

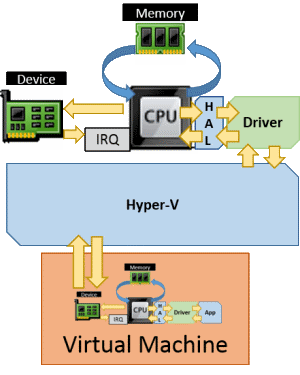

Emulated hardware is a software construct that the hypervisor presents to the virtual machine as though it were actual hardware. This software component implements a unified least-common-denominator set of instructions that are universal to all devices of that type. This all but guarantees that it will be usable by almost any operating system, even those that Hyper-V Server does not directly support. These devices can be seen even in minimalist pre-boot execution environments, which is why you can use a legacy network adapter for PXE functions and can boot from a disk on an IDE controller.

The drawback is that it can be computationally expensive and therefore slow to operate. The software component is a complete representation of a hardware device, which includes the need for IRQ and memory I/O operations. Within the virtual machine, all the translation you see in the above images occurs. Once the virtual CPU has converted the VM’s communication into the form meant for the device, it is passed to the software construct that Hyper-V presents as that device. Hyper-V must then perform the exact same functions to interact with the real hardware. All of this happens in reverse as the device sends data back to the drivers and applications within the virtual machine.

The common emulated devices you’ll work with in Hyper-V are:

- IDE hard drive controllers

- Emulated (legacy) network adapters

- Video adapters

These aren’t the only emulated devices. If you poke around in Device Manager with a discerning eye, you’ll find more. The performance impact of using an emulated device varies between device types. For the network adapter, it can be fairly substantial. The difference between the emulated IDE controller and the synthetic SCSI controller is difficult to detect.

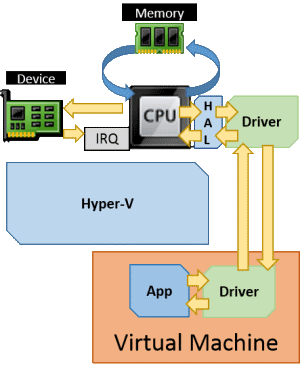

Synthetic Hardware

Synthetic hardware is different from emulated hardware in that Hyper-V does not create a software construct to masquerade as a physical device. Instead, it uses a similar technique to the Windows HAL and presents an interface that functions more closely to the driver model. The guest still needs to send instructions through its virtual CPU, but it’s able to use the driver model to pass these communications directly up into Hyper-V through the VMBus. The VMBus driver, and the drivers that are dependent on it, must be loaded in order for the guest to be able to use the synthetic hardware at all. This is why synthetic and SCSI devices cannot be used prior to Windows startup.
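To make the contrast with emulated hardware concrete, here is a deliberately exaggerated toy model in Python. Both classes are hypothetical, the queue merely stands in for the channel between guest and host, and the counts it prints are an illustration of the idea, not a measurement of Hyper-V.

```python
from collections import deque


class EmulatedNic:
    """Toy model: every register write is intercepted by the hypervisor."""

    def __init__(self):
        self.intercepts = 0
        self.sent = []

    def write_register(self, byte):
        self.intercepts += 1       # hypervisor steps in to emulate the device
        self.sent.append(byte)

    def send(self, payload: bytes):
        for byte in payload:
            self.write_register(byte)


class SyntheticNic:
    """Toy model: the guest driver queues a whole packet and signals once."""

    def __init__(self):
        self.signals = 0
        self.ring = deque()        # stands in for the guest-to-host channel
        self.sent = []

    def send(self, payload: bytes):
        self.ring.append(payload)  # guest driver hands over the whole packet
        self.signals += 1          # one notification to the host side
        self._host_drain()

    def _host_drain(self):
        while self.ring:
            self.sent.extend(self.ring.popleft())


packet = bytes(range(64))
emulated, synthetic = EmulatedNic(), SyntheticNic()
emulated.send(packet)
synthetic.send(packet)

print(emulated.intercepts, synthetic.signals)  # 64 1
```

Both models deliver the same bytes; the difference is how many times the hypervisor has to get involved along the way.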

Here, the visual models we’ve been using so far don’t fit as well due to some shortcuts in the diagramming I had to use to keep it from becoming too confusing. This next diagram is less technically correct, but it should help get the concept across:

SR-IOV

SR-IOV (Single Root I/O Virtualization) eliminates the need for the hypervisor to act as an intermediary at all. SR-IOV-capable devices expose virtual functions, which are unique pathways to communicate with a hardware device. So, a virtual function is assigned to a specific virtual machine; the virtual machine manifests that as a device. No other device or computer system, whether in the host computer or inside that virtual machine, can use that virtual function at all. Whereas all traditional virtualization requires the hypervisor to manage resource sharing, SR-IOV is an agreement between the hardware and software to use any given virtual function for one and exactly one purpose. This one is easy to conceptualize and diagram:

The benefit of SR-IOV should be obvious: it’s faster. There’s a lot less work involved moving data between the device and the virtual machine. A drawback is that Hyper-V has practically no idea what’s going on. The virtual switch has been designed to work with adapters using SR-IOV, but teaming hasn’t. Another problem is that SR-IOV-capable adapters currently available only have a few virtual functions available, usually around eight or so. If a virtual machine has been assigned an SR-IOV adapter, then it must have access to a virtual function or that adapter will not work. Hyper-V cannot take over because it is not part of the SR-IOV conversation. One of the potentially unexpected outcomes of this is that if you attempt to move a virtual machine to a host that doesn’t have sufficient virtual functions available, that guest will fail to start. For Live Migration, the migration will fail and the guest will remain on the source system.
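The virtual function constraint can be modeled as a small exclusive pool. This is a sketch under assumptions: `SriovHost` and `live_migrate` are hypothetical names, and the logic only mirrors the behavior described above (one owner per virtual function, migration fails and the guest stays put when the target has none free). It is not how Hyper-V implements it.

```python
class NoVirtualFunctionAvailable(Exception):
    pass


class SriovHost:
    """Toy host whose adapter exposes a fixed number of virtual functions."""

    def __init__(self, name, vf_count=8):
        self.name = name
        self.vf_count = vf_count
        self.assignments = {}               # VF index -> VM name

    def assign_vf(self, vm):
        for vf in range(self.vf_count):
            if vf not in self.assignments:
                self.assignments[vf] = vm   # one VF, exactly one owner
                return vf
        raise NoVirtualFunctionAvailable(f"{self.name} has no free VF for {vm}")

    def release_vf(self, vm):
        for vf, owner in list(self.assignments.items()):
            if owner == vm:
                del self.assignments[vf]


def live_migrate(vm, source, target):
    """Move vm only if the target can give it a VF; otherwise it stays put."""
    try:
        target.assign_vf(vm)
    except NoVirtualFunctionAvailable:
        return source                       # migration fails, guest stays on source
    source.release_vf(vm)
    return target


host_a = SriovHost("host-a", vf_count=8)
host_b = SriovHost("host-b", vf_count=1)
host_a.assign_vf("vm1")
host_b.assign_vf("vm2")                     # host-b's only VF is now taken

print(live_migrate("vm1", host_a, host_b).name)  # host-a
```

Because host-b has no free virtual function, the migration is refused and vm1 keeps running where it was.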

As of today, the only devices making use of SR-IOV are network adapters. The big question though, is how much of a difference in performance does SR-IOV actually make? The answer is that it’s sort of a mixed bag. The differences can certainly be seen in benchmarks. In practical applications, the differences are not remarkable. Most environments are a long way from pushing the limits of synthetic adapters as it is. The impact is actually more noticeable on CPU, since most of the functionality is offloaded to the hardware. However, most virtualization environments are not stressing their CPUs either, so again, the impact may not be noticeable.

Where This Is Headed

With 2012 R2, this will become more important. The new generation 2 virtual machines will not be able to use legacy hardware at all. However, they will use UEFI (Unified Extensible Firmware Interface) mode instead of the traditional BIOS mode, so all hardware will be usable right from startup. That, and all the consequences of the new platform, will be a subject for a later post.


15 thoughts on "Hyper-V Virtual Hardware: Emulated, Synthetic and SR-IOV"

Hi Eric,

This is a great article on emulated and synthetic hardware. As you know, Hyper-V supports two types of disk, VHD and pass-through. Both are presented by the parent partition; however, I still have a question: a pass-through disk is directly attached to the VM, which means it is isolated from the parent partition and controlled by the child partition.

So do you agree that a pass-through disk is not controlled by the parent partition?

The parent partition is responsible for connection to and presentation of storage for pass-through. It does not control it in any other way and has no visibility to the contents of the storage.

nice article..so if you want do a live migration between the two hosts hyperv.which adapters will be good.that does not affect any downtime while transferring in either nics to smb storage?

I don’t understand the question. There is no downtime during Live Migration no matter what the adapters are. That’s what they mean by “Live”.

Eric,

I am using my setup for learning Server 2012 R2 and get my MCSE 2012(already have MCSE for NT4 and Win2000)

I am sure you have heard this question many times in the past but I cannot find it here.

I have the following equipment

2 Dell T110 II

32gb each

1 onboard nic

4 port card

16 port Buffalo non managed switch

both servers running 2012 R2 and running Hypervisor role and 4 or 5 test VM’s

I keep hearing Nic teaming load/balancing.

So I created a nic team with the 4 ports from my network card(I know if the card fails all nics will fail).

I have the onboard nic set connected to a virtual nic for the hyperv and the 4 port team has another IP address set to that.

When I create new VM’s should I have them connect to the virtual switch created by the nic teaming or should they go to my internal nic(I think I need to point new vm’s to the nic teaming switch) but I thought I would check with you.

Does this make sense?

If you had this setup how would you set up the nics on one of the servers to get good connectivity/redundancy and learn things (the Microsoft way for taking the tests (70-410/411/412))?

In my personal lab, I use the singular onboard port as the management adapter with no virtual switch. 2 of my 4 add-in ports are teamed and host the virtual switch. The other 2 add-in ports are unteamed and carry traffic to/from my storage system (SMB and iSCSI).

I only do this because I’m lazy: my lab systems don’t have out-of-band management capability and they’re in my garage and I don’t want to walk out there when I make breaking changes to my virtual switch. I know there is a very common recommendation out there to use a single unteamed NIC or physically segregated team for host management, but that’s archaic advice based on tribal knowledge that has been invalid for a number of years now due to the state of the technology. In reality, and definitely in production, a better solution would be to stop using the onboard port altogether and create a virtual adapter on the virtual switch attached to the team for the purposes of host management. I would definitely do that if my systems were production and not lab boxes because my laziness wouldn’t be a good excuse anymore. I would probably even do it on my lab boxes if I could use all 4 add-in ports for the VM team. If all the adapters had the same capabilities, I’d join the onboard to the team with the others. But, for a lab, it really doesn’t matter.

I don’t know why you have a virtual switch on your single onboard adapter. That’s not doing anything for you. I would take that off no matter what. If you’re going to continue having the management adapter separated from the team (which is fine for your usage), then it (the team adapter) should not have an IP address — in reality it doesn’t, but I’m assuming you’ve got the virtual switch “shared” with the management OS. Turn that off. Only leave it on if you plan to stop using the onboard.