
With the release of Windows Server 2016 right around the corner, there’s been a great deal of buzz around the new features that will appear in Hyper-V. We are at the point where not many of the new features add a great deal of value for small businesses, which could be a good thing or a bad thing, depending on your perspective. One hot feature that will add almost nothing to a small business environment is Switch Embedded Teaming. It’s not going to hurt you any, to be sure, but it’s not going to solve any problems for you, either.

What is Switch Embedded Teaming?

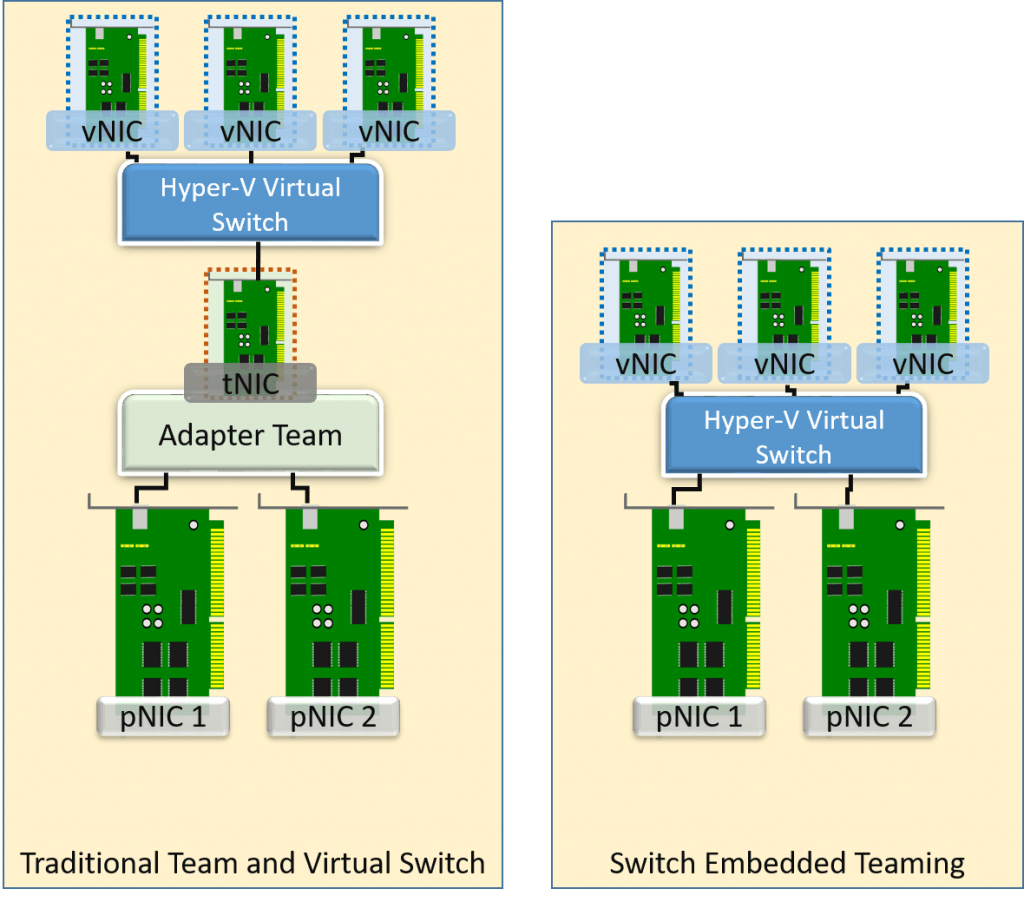

Switch Embedded Teaming enables the Hyper-V virtual switch to directly control multiple physical network adapters simultaneously. Compare and contrast this with the method used in 2012 and 2012 R2, in which a single Hyper-V virtual switch can only control a single physical or logical adapter. To use teaming with these earlier versions of Hyper-V, you must first team the adapters by some other means, and the only supported means is Microsoft’s load-balancing/failover (LBFO) teaming.

Pros of Switch Embedded Teaming

There are several reasons to embrace the addition of Switch Embedded Teaming to the Hyper-V stack.

- Reduced administrative overhead

- Reduced networking stack complexity

- Enables RDMA with physical adapter teaming

- Enables SR-IOV with physical adapter teaming

- Full support for Datacenter Bridging (perhaps a bit over-simplified, but mostly correct to just say 802.1p)

Cons of Switch Embedded Teaming

Switch Embedded Teaming looks fantastic on the brochure, but does not come without cost.

- Switch independent is the only available teaming mode — no static or LACP (802.3ad/802.1ax)

- Most hardware acceleration features available on inexpensive gigabit cards are disabled

- VMQ is recommended — fine for 10GbE, not so fine for 1GbE

- The QoS modes for the virtual switch do not function; if you need networking QoS, DCB is all you get

- Works best with hardware that costs more than the average small business can afford/justify

- Only identical NICs can belong to a single SET

- Limit of 8 pNICs per SET as opposed to 32 per LBFO (I’m not entirely certain that’s a negative)

- No active/passive configurations (not sure if that’s a negative, either)
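The hardware-related constraints in the list above (identical NICs, at most 8 members per SET) are easy to check before you try to build a team. Below is a minimal Python sketch built around a hypothetical `PhysicalNic` record that you would fill in yourself; it is not a Hyper-V or WMI type. It treats "identical" as same model and same link speed only; a stricter check might also compare firmware and driver versions. The teaming-mode and offload limitations are not properties of the NICs, so the sketch does not model them.

```python
from dataclasses import dataclass

MAX_SET_MEMBERS = 8  # SET limit cited above (LBFO allows up to 32)


# Hypothetical record describing one physical adapter; not a Hyper-V API type.
@dataclass(frozen=True)
class PhysicalNic:
    name: str
    model: str
    link_speed_gbps: float


def set_team_problems(nics):
    """Return reasons why the proposed NICs could not form a single SET team."""
    if not nics:
        return ["a SET team needs at least one physical NIC"]
    problems = []
    if len(nics) > MAX_SET_MEMBERS:
        problems.append(f"too many NICs: {len(nics)} (SET allows at most {MAX_SET_MEMBERS})")
    models = {nic.model for nic in nics}
    if len(models) > 1:
        problems.append("mixed NIC models: " + ", ".join(sorted(models)))
    speeds = {nic.link_speed_gbps for nic in nics}
    if len(speeds) > 1:
        problems.append("mixed link speeds: " + ", ".join(str(s) for s in sorted(speeds)))
    return problems


# Hypothetical example: an onboard port teamed with a slot adapter.
proposed = [
    PhysicalNic("Onboard1", "Vendor A onboard", 1),
    PhysicalNic("Slot1", "Intel PRO/1000 PT", 1),
]
print(set_team_problems(proposed))
# ['mixed NIC models: Intel PRO/1000 PT, Vendor A onboard']
```

An empty list means the proposed adapters pass these particular checks; it says nothing about whether SET is a good fit for the workload.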

Comparison of the Hyper-V Virtual Switch with Teaming against Switch Embedded Teaming

My aim in this article is to show that most small businesses already have a great solution with the existing switch-over-team design. Let’s take a look at the two options side-by-side:

Visual Comparison of SET and Traditional Teaming

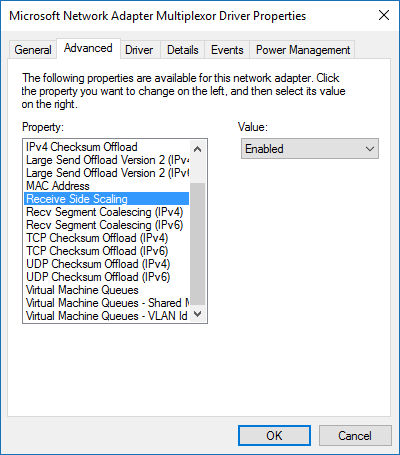

The big takeaway here is that the Hyper-V virtual switch can only operate with the feature set that is exposed to it. In the traditional team, the virtual switch sits atop the logical NIC created by the teaming software. You can easily see which features are available by looking at the property sheet of the adapter:

Team NIC Features

There are several features available here, each available to the Hyper-V virtual switch (although RSS doesn’t really do much in this context).

When we turn to SET, the switch is created directly from the physical adapters. There is no intervening team NIC. Does that mean that we get to use all of the features available on the network adapter? Sadly, it does not. No RSS, no RSC, no IPsec offloading, to name the big ones. SET’s focus is on VMQ, RDMA, and IOV. That’s where we encounter problems for the small business.

Simply put, SET shines on 10GbE hardware, especially high-end adapters that support RDMA. For the small business, it offers almost nothing except a bit of configuration-time convenience. Do you really need 10GbE for your environment? Here is where I’m supposed to say, “of course”, because as a tech writer, I shouldn’t have any problems spending other people’s money to solve problems that don’t even exist so that I can be like all the other tech writers and generate ad revenue from hardware vendors. But, since I’m not interested in winning any popularity contests among tech writers or having hardware outfits shove advertising dollars at me, I’ll opt for honesty and integrity instead. If you are operating a typical small business, you almost definitely do not need 10GbE. Most medium-sized businesses don’t even need 10GbE outside of their backbones and interconnects.

Answering 10GbE, RDMA, and IOV FUD

I’d like to leave that discussion there and jump right into comparing SET and switch+team, but we’ll have to take a detour to address all the FUD articles that are already being written in “response” to this one. There are more than a few otherwise respectable authors out there doing everything in their power to convince everyone using Hyper-V that they absolutely must forklift out their Hyper-V environment’s gigabit infrastructure and replace it all with 10GbE hardware OR ELSE. If you can demonstrably justify 10GbE, then go ahead and buy it. If you can’t, or haven’t run the numbers to find out, then take their advice at your budget’s peril.

Identify the Hallmarks of Baseless FUD

We’ll start with the placement of the period in that “OR ELSE” statement. They have nothing to offer after the OR ELSE. I do occasionally see attempts to terrify readers with the promise of long times for Live Migrations. Well, if you’re spending so much time sitting around waiting on migrations to the point that it’s meaningfully impacting your work effort, then you need to focus your attention on fixing whatever problem is causing that before trying to bandage it with expensive hardware. If preventive maintenance is the source of your woes, then you desperately need to learn to automate. Preventive maintenance should be occurring while you’re snug at home in bed, not while you’re standing around waiting for it to finish. If you can’t avoid that for some reason and you also can’t find anything else to do to fill in the time, then Live Migration time isn’t really a problem, now is it? You can safely ignore any commentary about your environment by any author that cannot properly architect his/her own.

The next thing to look at is who employs these FUDders. Almost all of them work for training outfits or resellers. Pure trainers always get starry-eyed around new tech: they do little in the real world, they don’t have to pay for it, and their students are almost always either zero-experience greenhorns trying to break into the market or employees of firms with budgets big enough to pay for trainers and a genuine need for 10GbE. Resellers love 10GbE because it has the same sort of margins as SANs, especially when bundled into any of the shiny new “hyper-convergence” packages. None of these people have any concerns over your budget or any motivation to investigate whether or not 10GbE is suitable to your needs.

10GbE FUD-Busting by the Numbers

The easiest and quickest way to bust FUD is to disprove it in numbers. There are two sets of numbers that you need, and they are not at all complicated to acquire. The first is by far the most important: do you have sufficient demand to even justify looking into the expense of 10GbE? If not, you don’t even need to bother with the second. The second compares the fiscal distance between 1GbE and 10GbE.

Do I Need 10GbE?

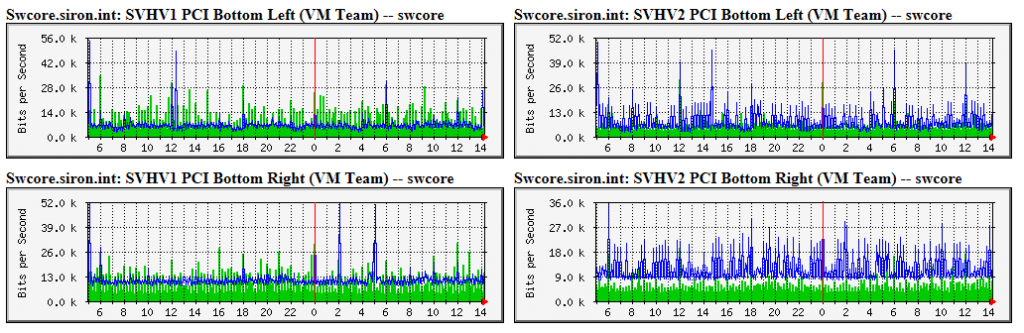

The easiest way to know if you need to purchase 10GbE is if you are routinely starved for network bandwidth. Take extra points if you’ve needed to set up QoS to address it. “I did a file copy and it was slow” does not count for anything. If you’re not certain, then that alone is a good indicator that you don’t need 10GbE. If you’d like to be certain, then you can easily become certain. Look at this environment:

Sample Networking Meter

That’s only two network adapters per host carrying VM traffic, Live Migration traffic, and cluster traffic, and it peaks at 52 KILObits per second. The 90% bar is well under 35kbps. Anyone who would counsel that the above should be upgraded to 10GbE is just trying to take your money or drum up demand to keep the pricing high. Not sure how to get the above for your own environment? You can have it in an hour or so. On the other hand, if the charts were routinely at or near the 1 gigabit mark and there aren’t any additional 1GbE adapters/ports to easily scale out with, or if you have one or two adapters that are routinely hotspotting right up to their maximum, then 10GbE might be justified.
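Once you have the samples, the peak and the figure the chart calls the 90% bar (read here as a 90th percentile) take only a few lines to compute. This is a minimal Python sketch, assuming you have already exported throughput samples in kilobits per second from whatever monitoring you use; `summarize_utilization` is a hypothetical helper, not part of any tool, and the sample values are made up purely for illustration. It uses the nearest-rank definition of a percentile; monitoring products may interpolate differently, so expect small differences.

```python
import math


def nearest_rank_percentile(samples, pct):
    """Smallest sample such that at least pct% of all samples are <= it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize_utilization(samples_kbps, link_gbps=1.0):
    """Summarize kbps samples against the link speed of a single adapter."""
    link_kbps = link_gbps * 1_000_000  # 1 Gbps = 1,000,000 kbps
    peak = max(samples_kbps)
    return {
        "peak_kbps": peak,
        "p90_kbps": nearest_rank_percentile(samples_kbps, 90),
        "peak_pct_of_link": 100 * peak / link_kbps,
    }


# Hypothetical samples (kbps), for illustration only.
samples = [12, 20, 35, 52, 18, 9, 30, 25, 33, 15]
print(summarize_utilization(samples))
```

Comparing against a single adapter's link speed is deliberately conservative: if even one link is barely touched at peak, a team of them certainly is not the bottleneck.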

Another potential sign that 10GbE might make more sense is if you’re using stacks and stacks of 1GbE adapters. The above example uses two per host, and as you can see, that’s plenty. Four per host should be more than adequate for the average small business host, even in a cluster (add a pair for storage). If you have more adapters than that, then the first thing that I would do is look into reducing the number of adapters that you have in play. If you can’t do that due to measured bandwidth limitations, then start looking into 10GbE. If I walked into a customer’s site and found Hyper-V hosts with 12x 1GbE adapters, I would immediately start pulling performance traces with an end goal of implementing change, either down to a reasonable number of adapters or up to 10GbE. I don’t really have a hard number in mind, but given the network convergence options made available by the Hyper-V virtual switch, 8 or more physical gigabit adapters in a single host is where I would start to question the sensibility of the network architecture.

10GbE Pricing

The other part of the FUD claims that 10GbE doesn’t really cost as much as it does, which is an absurd claim on its face. Sure, if you’re dedicating most of a 20-port switch to a single host because it’s got a lot of 1GbE adapters, that’s a problem. However, the problem is most likely that you are just using more 1GbE adapters than you need (addressed in the previous section). If you truly are pushing 12x 1GbE adapters to their limits, then I would be likely to counsel you to consider 10GbE myself. But, if you’re not at capacity, then that advice would mostly be about simplifying your environment. Up to a point, it’s still cheaper to buy a 20-port 1GbE switch and fill it up with 1GbE connections than it is to simplify down to a pair of 10GbE adapters on a 10GbE switch. If you’re talking about filling up racks with multiple hosts using lots of 1GbE adapters and trying to match that with one switch per host, the math is different. But, if you’ve got that many hosts, then you’re probably not in the expected target audience for this article. Either way, there’s still a good chance that you could safely cut down a lot on your gigabit infrastructure expense just by not using so many adapters per host.

But, let’s not emulate the FUDders with a lot of “maybes” and “what-ifs”. Let’s get some solid numbers. I have chosen to gather pricing from PC Connection. This is not an endorsement of that company nor am I receiving any compensation from them (they don’t even know that I’m writing this article). I chose them primarily because they are one of the few large tech stores that does not constantly spam my work e-mail address and secondarily because I’ve had some positive business dealings with them in the past. This article is written to compare pricing, which means using a single point of reference. If you are interested in making a purchase, I recommend that you shop around to find the best pricing.

Network adapter pricing is tough to get a solid read on. You can get 10GbE adapters as inexpensively as $100 per port (not counting open box, refurbished, off-brands, etc). The ones that I would be most inclined to purchase drifted up toward $200 per port. When compared to, say, a quad-port 1GbE adapter card, I would actually consider that to be fairly reasonable pricing.

It’s the switches that will cost you, and wow, will they cost you. Take a look:

Don’t be fooled by some of the low starting prices; those models usually have only a couple of 10GbE ports for uplink (which is what most people should be using 10GbE for anyway). The cheapest entry on that list that is all-10GbE (at least on the day that I wrote this) costs a bit over $1,000 for twelve ports. In case you’re new to 10GbE, note that none of those are RJ-45 ports, so you’ll need to go buy some SFP modules:

So, if you use a model with all SFP ports, then that’s an extra $30 per port. Minimum. Let’s do the math on that. You have a host with a single dual-port 10GbE adapter for $200, then two SFPs for $60 into your $1,000 switch, then another SFP for $30 to connect it into the core switch where all of your gigabit desktops are connected. Your out-of-pocket expense is roughly $1,300 to connect a single host, and the only redundancy is protection against one port failing on your lone adapter! In contrast, if I were to rework the gigabit setup that I just built for switch redundancy, it would be 2x $200 for managed switches, 2x $80 for dual-port gigabit adapters, and less than $5 to cable it all. That’s under $565 for full redundancy vs. $1,300 for almost no redundancy. If I architect for full redundancy:

“Cheap” 10 Gigabit Full Redundancy

- 2x $1,000 for 2x 12-port 10GbE switches

- 4x $100 for 1-port 10GbE adapters (separating the ports for NIC/slot redundancy)

- 8x $30 for SFPs (two per host plus two per switch for uplink)

- Total: $2,640

Gigabit Full Redundancy

- 2x $200 for two 24-port gigabit switches

- 4x $50 for 2-port 1GbE adapters

- $10 for cabling, including uplinks

- Total: $610
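Re-adding the two lists is trivial, but keeping them in a small script makes it easy to substitute your own quotes. A minimal Python sketch using only the rounded line items listed above (these are the article's figures, not current market prices):

```python
# Line items from the two lists above: (quantity, unit price in USD, description).
# Prices are the article's rounded figures, not live quotes.
TEN_GBE_FULL_REDUNDANCY = [
    (2, 1000, "12-port 10GbE switch"),
    (4, 100, "1-port 10GbE adapter"),
    (8, 30, "SFP module"),
]

GIGABIT_FULL_REDUNDANCY = [
    (2, 200, "24-port gigabit switch"),
    (4, 50, "2-port 1GbE adapter"),
    (1, 10, "cabling, including uplinks"),
]


def total_cost(line_items):
    """Sum quantity * unit price over every (quantity, price, description) item."""
    return sum(qty * unit_price for qty, unit_price, _desc in line_items)


ten_gbe = total_cost(TEN_GBE_FULL_REDUNDANCY)
gigabit = total_cost(GIGABIT_FULL_REDUNDANCY)
print(f"10GbE: ${ten_gbe:,}  gigabit: ${gigabit:,}  ratio: {ten_gbe / gigabit:.1f}x")
# 10GbE: $2,640  gigabit: $610  ratio: 4.3x
```

Swapping in local pricing only means editing the tuples; the comparison logic stays the same.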

I didn’t get exact pricing on the gigabit lines, and I rounded down on the 10GbE lines. I know that I estimated high for gigabit cabling, and what I listed is certainly well above the price of the cheapest dual-port gigabit adapters. Of course, you can also pay $3,000 for a 10GbE switch that uses Cat6A/7 RJ-45 ports instead of SFPs if you prefer, but that’s certainly not going to save you anything. I challenge any 10GbE apologist to explain how the first set, which uses the absolute cheapest 10GbE pricing, costs less than the second set, which aims for more moderately priced gigabit hardware, for a customer that generates network utilization charts like the one that I posted above.

10GbE is expensive. If you need it, it’s worth it. If you’re not going to be utilizing it, you should not be paying for it.

I should also point out that the cheapest adapter I found that conclusively supports RDMA was a Broadcom adapter that costs $350 for a dual port model. I wanted to find the cheapest adapter from a more reliable manufacturer, but gave up when I hit the $250/port range. When you’re talking about SET vs switch+team in 10GbE terms, RDMA is very important.

SET on Small Business Real-World Hardware

My concrete exhibit will apply to those shops that are using affordable gigabit hardware. My current test systems are using Intel PRO/1000 PT adapters; capable, but with no frills. I ran a sequence of tests on them using different teaming modes to see what, if any, would be the “best” way to team.

The Test Bed

This is the configuration that I used:

- Two identical hosts, each with a pair of Intel PRO/1000 PT adapters, teamed as indicated for each test

- All teams use the Dynamic load-balancing algorithm

- Identical virtual machines:
  - 1 GB fixed RAM
  - 2 vCPU
  - Windows Server 2016 TP5

- NTttcp was used for all speed tests:
  - Sender: ntttcp.exe -s -m 8,*,192.168.25.186 -l 128k -t 15 -wu 5 -cd 5
  - Receiver: ntttcp.exe -r -m 8,*,192.168.25.186 -rb 2M -t 15 -wu 5 -cd 5

The Results

I ran each test 5 times and got similar results each time, so I only selected the output of the final run to publish here. I also ran one of the tests for 60 seconds instead of 15, but it didn’t deviate from the patterns set in the 15 second tests so I left that out as well.

The text versions of each final run:

    C:\ntttcp>C:\ntttcp\ntttcp.exe -r -m 8,*,192.168.25.186 -rb 2M -t 15 -wu 5 -cd 5
    Copyright Version 5.28
    Network activity progressing...

    Thread  Time(s)  Throughput(KB/s)  Avg B / Compl
    ======  =======  ================  =============
    0       15.008   13863.831         35760.661
    1       15.008   13970.471         35076.111
    2       15.008   14077.026         34977.920
    3       15.008   13240.795         34939.402
    4       15.008   13612.419         34918.755
    5       15.008   13842.136         35155.942
    6       15.008   14735.002         35560.669
    7       15.008   14191.684         34980.036

    ##### Totals: #####

    Bytes(MEG)        realtime(s)  Avg Frame Size  Throughput(MB/s)
    ================  ===========  ==============  ================
    1634.660855       15.008       1457.259        108.919

    Throughput(Buffers/s)  Cycles/Byte  Buffers
    =====================  ===========  =============
    1742.709               3.347        26154.574

    DPCs(count/s)  Pkts(num/DPC)  Intr(count/s)    Pkts(num/intr)
    =============  =============  ===============  ==============
    15021.588      5.217          15955.690        4.912

    Packets Sent  Packets Received  Retransmits  Errors  Avg. CPU %
    ============  ================  ===========  ======  ==========
    388208        1176226           0            0       5.986

    C:\ntttcp>C:\ntttcp\ntttcp.exe -r -m 8,*,192.168.25.186 -rb 2M -t 15 -wu 5 -cd 5
    Copyright Version 5.28
    Network activity progressing...

    Thread  Time(s)  Throughput(KB/s)  Avg B / Compl
    ======  =======  ================  =============
    0       15.010   13964.411         35319.459
    1       15.010   14174.338         35122.194
    2       15.010   14403.259         35251.838
    3       15.010   14510.257         35238.763
    4       15.010   14087.658         35479.386
    5       15.010   13909.704         35163.733
    6       15.010   14727.481         35424.870
    7       15.010   14714.799         34999.999

    ##### Totals: #####

    Bytes(MEG)        realtime(s)  Avg Frame Size  Throughput(MB/s)
    ================  ===========  ==============  ================
    1678.245636       15.010       1457.540        111.809

    Throughput(Buffers/s)  Cycles/Byte  Buffers
    =====================  ===========  =============
    1788.936               2.863        26851.930

    DPCs(count/s)  Pkts(num/DPC)  Intr(count/s)    Pkts(num/intr)
    =============  =============  ===============  ==============
    13637.109      5.898          14115.190        5.699

    Packets Sent  Packets Received  Retransmits  Errors  Avg. CPU %
    ============  ================  ===========  ======  ==========
    419363        1207355           0            0       5.257

    C:\ntttcp>C:\ntttcp\ntttcp.exe -r -m 8,*,192.168.25.186 -rb 2M -t 15 -wu 5 -cd 5
    Copyright Version 5.28
    Network activity progressing...

    Thread  Time(s)  Throughput(KB/s)  Avg B / Compl
    ======  =======  ================  =============
    0       15.015   15370.047         34927.580
    1       15.015   13750.277         34454.931
    2       15.015   14764.579         34364.324
    3       15.015   13051.114         34825.682
    4       15.015   14867.409         34478.395
    5       15.015   14304.486         34993.893
    6       15.015   12403.626         34343.637
    7       15.015   13204.651         33996.360

    ##### Totals: #####

    Bytes(MEG)        realtime(s)  Avg Frame Size  Throughput(MB/s)
    ================  ===========  ==============  ================
    1638.104072       15.015       1456.122        109.098

    Throughput(Buffers/s)  Cycles/Byte  Buffers
    =====================  ===========  =============
    1745.565               3.427        26209.665

    DPCs(count/s)  Pkts(num/DPC)  Intr(count/s)    Pkts(num/intr)
    =============  =============  ===============  ==============
    14069.331      5.584          15171.695        5.178

    Packets Sent  Packets Received  Retransmits  Errors  Avg. CPU %
    ============  ================  ===========  ======  ==========
    374673        1179624           0            0       6.140

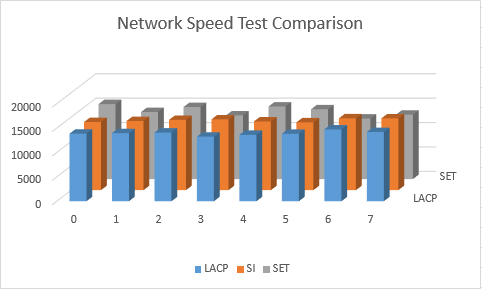

To make things a little easier to process, I created a couple of charts comparing the metric that I found most relevant: the throughput of each individual thread.

SET vs Virtual Switch Speed Comparison 1

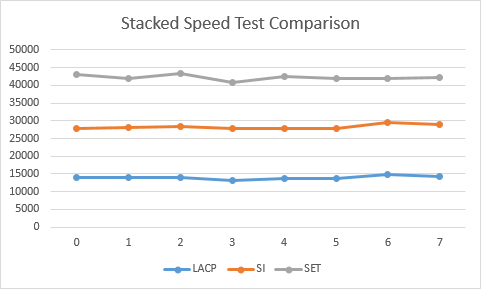

I then created a stacked line chart, which more clearly illustrates the only part of this testing that I found noteworthy (the numbers at the left of the chart are meaningless for all except LACP):

SET vs Virtual Switch Speed Comparison 2

Speed Test Results Discussion

In my testing, the standard virtual switch on top of a switch independent team always came out with slightly better overall throughput, with LACP+switch and SET dancing around each other for second place. However, SET was noticeably worse at load balancing than the other two (see how flat the LACP line is in the second chart compared to the other two, especially SET). Compared to LACP, that just makes sense: with SET, the connected physical switch doesn’t get to make any decisions about load balancing the traffic that it sends to Hyper-V, whereas with LACP it is intimately involved. I was a little surprised at how well basic switch independent mode was able to keep its traffic balanced.
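The per-thread numbers in the three runs above can also be compared numerically rather than only by eye. The Python sketch below copies the per-thread Throughput(KB/s) values in the order the runs appear; because the text output does not say which teaming mode produced which run, they are labeled only as run 1 through run 3. For each run it reports the total (dividing the KB/s sum by 1024 reproduces NTttcp's Throughput(MB/s) totals), the max-minus-min spread, and the coefficient of variation (population standard deviation divided by the mean). Using the coefficient of variation as a load-balancing score is an assumption of this sketch; NTttcp itself does not report such a metric.

```python
from statistics import mean, pstdev

# Per-thread receive throughput (KB/s) from the three NTttcp runs above,
# in order of appearance. Runs are labeled by position only, because the
# text output does not state which teaming mode produced each one.
RUNS = {
    "run 1": [13863.831, 13970.471, 14077.026, 13240.795,
              13612.419, 13842.136, 14735.002, 14191.684],
    "run 2": [13964.411, 14174.338, 14403.259, 14510.257,
              14087.658, 13909.704, 14727.481, 14714.799],
    "run 3": [15370.047, 13750.277, 14764.579, 13051.114,
              14867.409, 14304.486, 12403.626, 13204.651],
}


def balance_summary(thread_kbps):
    """Return (total MB/s, max-min spread in KB/s, coefficient of variation)."""
    total_mb_s = sum(thread_kbps) / 1024  # matches NTttcp's Throughput(MB/s)
    spread = max(thread_kbps) - min(thread_kbps)
    cv = pstdev(thread_kbps) / mean(thread_kbps)
    return total_mb_s, spread, cv


for name, threads in RUNS.items():
    total, spread, cv = balance_summary(threads)
    print(f"{name}: {total:.3f} MB/s total, spread {spread:.0f} KB/s, CV {cv:.3f}")
```

A lower coefficient of variation means the threads received more even shares of the bandwidth. With eight threads and one published run per configuration, treat it as a rough indicator rather than a benchmark.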

Conclusion

The big takeaway here is that if you’re a typical small business just using cheap commodity gigabit networking hardware, it doesn’t really matter whether you use Switch Embedded Teaming or the “old” way of team plus switch. Unless one or more of those bullet points in the “cons” list at the beginning of this article hit you really hard, SET isn’t bad for you, but it’s also not anything for you to be excited about.

If you have a larger gigabit environment, I would expect the load-balancing variance to become more pronounced. I’m only using two gigabit adapters per team and I only had the pair of VMs to test with. With more physical adapters and more virtual network adapters, inbound traffic on a Switch Embedded Teaming configuration would likely experience more hotspotting. For typical small business environments, I still don’t think that it would be enough to matter.

If inbound network balancing really matters but standard low-end gigabit is what you have available, then a standard virtual switch on an LACP team is your best choice. I don’t really think that combination of requirement and hardware makes a lot of sense, though. Buy better hardware.

What also raises a yellow flag for SET and the small business is the inability to use disparate NICs. I don’t worry much about not being able to combine different NIC speeds; I can’t think of any solid reason to combine NICs of different speeds in a team, except perhaps in active/passive, which isn’t even possible in SET anyway. That part doesn’t seem to matter very much. But it’s not uncommon to purchase a rackmount system that uses one type of onboard adapter with different adapters in the slots. Teaming daughter card adapters with slot adapters makes sense because it gives you hardware redundancy. SET will limit that ability, again in a way that will impact gigabit hosts more than 10GbE hosts.

Where Switch Embedded Teaming is of most value is on 10GbE (or faster!) hardware that supports both IOV and RDMA. It also benefits from VMQ, which doesn’t do very much for 1GbE (and that’s if you’re lucky enough for it not to cause problems). If you’re using the upper tier of hardware and you need that power, then SET will substantially add to Hyper-V’s appeal because RDMA-boosted equipment is FAST. For the rest of us, SET is just another three-letter acronym.

Further reading over at TechNet

https://technet.microsoft.com/en-us/library/mt403349.aspx


27 thoughts on "Hyper-V 2016 and the Small Business: Switch Embedded Teaming"

Hi,

You said that RSS is not supported in SET, but that is not a problem since we create vNICs bound to the vSwitch. Thanks to SET, vRSS is supported in the parent partition, so the vNICs leverage vRSS.

vRSS is functional only if VMQ is active, which it often isn’t on 1GbE. If someone were accustomed to using tNICs in the management operating system to leverage RSS on a non-VMQ team, they would no longer be able to do so with SET.
