In the first part of this series, we looked at the myths that scare people away from virtualizing their domain controllers. In this installment, you’ll see some of the ways that domain controllers can be successfully virtualized.

Disclaimer: I’m going to be talking about several Active Directory topics in this post. I will not be stopping to explain them in any real detail. If your AD knowledge is somewhat lacking, you should still be able to get through this article without a great deal of difficulty. Personally, I learned Active Directory by attending MCT-led courses, studying for the exams, and working in the real world since the first release of Active Directory. So, I don’t know of any good tutorials on the subject. If you know of any, please leave them in the comments.

Assess Your Situation

Before you begin, determine what you want your final domain controller situation to look like, how small failures will be handled, and how you’ll recover from any catastrophic disasters. How many domain controllers do you need? Where should they be placed? Should domain controllers be highly available? What will the backup schedule look like? The answers to these questions will draw the most definite picture of what your final deployment should look like.

How Many Domain Controllers are Necessary?

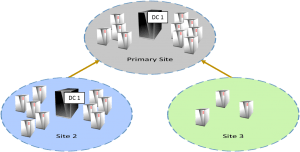

This is the first question you should answer. If you’re a small shop with a single location, you can shortcut this question. A single domain controller can easily handle thousands of objects. Any extra DCs are for resiliency, not capacity. If you have multiple sites, try to place at least one domain controller in each site and make sure you define those sites and their subnets in Active Directory so authentication and replication traffic is properly handled. If you need more in-depth help, refer to TechNet’s thorough article on the subject.

Do I Really Need a Second Domain Controller?

I know that the purists are going to say, “Absolutely.” But usually, those purists have never been at the mercy of a small business operating budget where you just don’t have an extra stack of cash lying around to do nothing but domain services. For most of my career, the vast majority of companies I worked for/with used only a single DC and everything was fine. There are actually some benefits to this:

- Saves on Windows licensing and hardware costs; this is the primary reason many choose to do it

- USN rollback is impossible; this is important in a small environment, as technical resources with the capacity to detect it and the expertise to correct it are in short supply

- If Bad Things should occur, restore of domain services is extremely simple in a single DC environment

Now, with virtualization, the single DC environment is pretty low-risk. A small business could run Hyper-V Server on a host with two guests, one running domain services and the other running the company’s other services. This only requires a single Windows Server license and satisfies the best practice of separating domain services from other server applications. This could all then be backed up using any Hyper-V-aware solution (as a shameless plug, Altaro Backup Hyper-V would handle this scenario with its free edition). This is a solution that I wish I had access to many years ago, as it would have fundamentally changed the way I worked with many small business customers.

Where Should I Place Domain Controllers?

Try to keep multiple domain controllers off the same hardware, if possible. If you’ve only got a single physical server-class system, then having two domain controllers may not be worth the effort. I’ll return to this in the highly available section.

As previously mentioned, you’ll want at least one domain controller per site, if possible. This is certainly not the hard and fast rule some would like it to be. If a remote site is only going to have a handful of users, no other need for a local server, and a fairly reliable connection to the primary site, it’s not really worth having its own DC. The exception I sometimes make to this is if DNS resolution needs to be snappy and the intersite link isn’t. Then I might recommend a DC with DNS in that site. This would be a good place to tangent into read-only DCs, if I were so inclined.

This question isn’t limited to geography. You’ll also decide which to have virtual and which to have physical. If your capacity assessment determines that your DCs are going to be under a heavy load, you might consider not virtualizing. Remember that a primary goal of virtualization is the consolidation of server software to more fully utilize hardware; any software that is fully utilizing its hardware is generally not the best candidate for virtualization. A secondary reason is portability, but domain services have very little to gain from such portability. However, each additional domain controller reduces the overall load, so dispersing domain controllers as VMs across numerous Hyper-V hosts may reduce your hardware reliance.

If you want to try to balance across physical and virtual, with the physical machines being more dependent on hardware, remember that global catalog DCs work a bit harder than non-GC machines.

Edit: We continue to receive a number of comments about the so-called “chicken and egg” problem of virtualized domain controllers despite having thoroughly debunked that as a myth. Please refer to article one, linked at the top of this post.

One thing you really don’t want to do is place domain controllers on SMB 3 storage, at least not all of them. SMB 3 storage requires the ability to validate incoming connections against a DC, so you could wind up with a true “chicken and egg” scenario if your DCs are all on SMB 3 storage. Of course, you could also log into the file servers with local accounts, copy the files off by whatever means, and then import them or create new VMs around the VHDs and start them that way. So, even if you make this mistake, there’s a fix.

Should Domain Controllers Be Highly Available?

As a general rule, I recommend not making domain controllers highly available. Domain controllers post-NT 4.0 are designed as a multi-master system, meaning that for the most part, no single domain controller is in charge. Even though you’ll still hear people refer to the BDC (backup domain controller), there really is no such beast from Windows 2000 Server onward. So, if you have multiple domain controllers, Active Directory is already highly available and won’t benefit much from being on virtual machines that are highly available.

This isn’t a universal truth. There are five flexible single master operation (FSMO) roles. I suggest that you consider allowing the machine(s) that hold these roles to be highly available, since there are a handful of domain operations that cannot be carried out when one or more of these roles are not available. However, it’s fairly trivial to transfer or seize these roles in the event of any outage of a FSMO system, so there’s no driving need to configure them as HA VMs.

Backup

Having good backups of your domain controller(s) means that you’ll be prepared for just about anything. In order to get a solid backup, all you really need is to capture the System State of domain controller systems. You don’t even necessarily need to capture every DC. You do want to ensure you back up at least one global catalog and every system that holds a FSMO role. Also, ensure that you’re capturing the DNS database, as Active Directory and DNS are highly intertwined. For small businesses, all these roles can be contained in a single system. If you have two DCs, have them both run DNS as well, and take full backups.
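The coverage rule above (at least one global catalog plus every FSMO role holder) can be checked mechanically. What follows is a minimal Python sketch, not a tool that talks to Active Directory: the DC names and the `backup_gaps` helper are hypothetical, and you would have to feed it your own inventory.

```python
# The five FSMO roles. The DC names used below are illustrative only.
FSMO_ROLES = {
    "Schema Master",
    "Domain Naming Master",
    "RID Master",
    "PDC Emulator",
    "Infrastructure Master",
}


def backup_gaps(role_holders, global_catalogs, backed_up):
    """Return a list of human-readable gaps in backup coverage.

    role_holders:    dict mapping FSMO role name -> DC name
    global_catalogs: set of DC names that are global catalogs
    backed_up:       set of DC names that have a System State backup
    """
    gaps = []
    for role in sorted(FSMO_ROLES):
        holder = role_holders.get(role)
        if holder is None:
            gaps.append(f"no holder recorded for {role}")
        elif holder not in backed_up:
            gaps.append(f"{role} holder {holder} is not backed up")
    # At least one global catalog must be among the backed-up DCs.
    if not (global_catalogs & backed_up):
        gaps.append("no global catalog is backed up")
    return gaps


# Hypothetical two-DC domain: DC1 holds every role, but only DC2 is backed up.
role_holders = {role: "DC1" for role in FSMO_ROLES}
for gap in backup_gaps(role_holders, {"DC1", "DC2"}, {"DC2"}):
    print(gap)
```

In a single-DC shop, the same call with one name in every argument should return an empty list.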

How often you back up the domain is up to you. I strive for nightly, but environments that don’t change often don’t necessarily need this level of protection. Just remember that you can only restore the environment to the state of its last backup. If once a week is acceptable, then back up once a week.

A much more pressing question is the retention policy. I would ensure that you at least have enough backups to cover the past couple of weeks. I’ve seen some locations that only keep a single backup of the domain, and this is just not sufficient. Damage that occurs to the domain may not be noticed right away, especially in a small environment. Anything more than a couple of months is probably not worth it. Be aware that if you have multiple DCs, performing a restore of one older than two months can cause deleted objects to reappear in the directory.
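As a rough illustration of that retention window, here is a small Python sketch. The 60-day figure simply mirrors the "two months" above and is an assumption; check the tombstone lifetime configured in your own forest before relying on any number. The function names are made up for this example.

```python
from datetime import date, timedelta

# Assumption: 60 days stands in for the "two months" mentioned in the text.
# Your forest's actual tombstone lifetime may be different.
ASSUMED_TOMBSTONE_DAYS = 60


def safe_to_restore(backup_taken, today, tombstone_days=ASSUMED_TOMBSTONE_DAYS):
    """True if the backup is younger than the assumed tombstone lifetime."""
    return (today - backup_taken) < timedelta(days=tombstone_days)


def prune(backups, today, keep_days=ASSUMED_TOMBSTONE_DAYS):
    """Keep only the backup dates that are still inside the window."""
    return [b for b in backups if safe_to_restore(b, today, keep_days)]


today = date(2024, 3, 15)
backups = [date(2024, 1, 1), date(2024, 2, 20), date(2024, 3, 14)]
print(prune(backups, today))  # the January backup falls outside the window
```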

If you’re new to the idea of Active Directory backup and restore, make sure you perform some research. A single DC environment is straightforward; for multiple DCs, make sure you understand authoritative vs. non-authoritative restores and that you know how to perform each type using your selected backup software.

The Problem of Time Drift

This is the largest hurdle to having a virtualized domain controller. The issue is that the clock in Windows systems is tracked as a low-priority thread. In a physical system, this can lead to clock drift when the CPU is busy. In a virtual system, there’s an extra layer of distance to the CPU, so clock drift is even more likely. Actually, let me clarify that: clock drift in a Windows environment is completely unavoidable. It’s happening all the time.
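To make the stakes concrete, here is a minimal Python sketch of a drift check. It assumes the default Kerberos clock-skew tolerance of five minutes, which your domain policy may override, and the function names are invented for illustration. It only compares two timestamps that you supply; it does not query any time service.

```python
from datetime import datetime, timedelta

# Assumption: five minutes is the default Kerberos clock-skew tolerance.
# A domain policy can set a different value.
MAX_SKEW = timedelta(minutes=5)


def skew(reference, observed):
    """Absolute difference between two clock readings."""
    return abs(observed - reference)


def within_tolerance(reference, observed, max_skew=MAX_SKEW):
    """True if the observed clock is close enough to the reference clock."""
    return skew(reference, observed) <= max_skew


reference = datetime(2024, 1, 1, 16, 50)
print(within_tolerance(reference, reference + timedelta(minutes=3)))   # True
print(within_tolerance(reference, reference + timedelta(minutes=10)))  # False
```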

In a Windows domain, time is (by default) synchronized to the domain controller that holds the PDC emulator role. If that’s virtualized, what do you do? First, make sure the host’s CPUs aren’t under a lot of strain. That will cause time drift.

Next, configure your domain to pull from an external time source. After you’ve set all that up, go read Ben Armstrong’s post on the subject, paying special attention to number 6. If you don’t want to do all that reading, then the most important thing to do is to run the following on your PDC emulator, if it’s virtualized in Hyper-V:

reg add HKLM\SYSTEM\CurrentControlSet\Services\W32Time\TimeProviders\VMICTimeProvider /v Enabled /t reg_dword /d 0

While that’s the most important activity, the most important message is that you should never disable time synchronization. When someone tells you to disable time sync for your VMs, show them that link and have them read #8. Never means never for all possible values of never.

Update: This is now an outdated practice. Completely disable time synchronization for virtualized domain controllers. Read this article that covers the how and the why.

If you continue having issues with time drift, the first thing you need to check is the CPU load of the VM’s host. If you can’t lighten the load or move the PDC emulator VM, you can reduce the update intervals indicated in that blog post/KB article.

If you’re going to keep a physical DC, make that the domain’s authoritative time system.

Best Practices with Virtualized Domain Controllers

The most important thing is to not snapshot domain controllers. If you are using domain controllers that are Windows Server 2012 or later and your hypervisor is Hyper-V Server 2012 or later, then there is a new VM-Generation ID attribute that can keep reverted snapshots from causing a USN rollback. However, you should just avoid snapshotting your domain controllers as a rule. Of course, I also know that a lot of smaller shops will be combining roles. But, if you’ve only got one DC, USN rollback is impossible. In any case, be aware of the issue.
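The idea behind the VM-Generation ID safeguard can be shown with a toy model. The Python sketch below is not how Active Directory is implemented; the class and its fields are hypothetical, and it captures only the core comparison: if the ID presented by the hypervisor differs from the one the DC last stored, the DC assumes it was reverted or copied and takes a fresh invocation ID so replication partners stop trusting its old USNs.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class ToyDomainController:
    """Toy model only; names and behaviour are simplified assumptions."""

    stored_generation_id: str
    invocation_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def check_generation(self, hypervisor_generation_id):
        """Compare the hypervisor's ID with the stored one.

        Returns True if a revert or copy was detected and the safeguard ran.
        """
        if hypervisor_generation_id == self.stored_generation_id:
            return False
        # Mismatch: treat the VM as reverted or copied. Take a new
        # invocation ID and remember the new generation ID.
        self.invocation_id = str(uuid.uuid4())
        self.stored_generation_id = hypervisor_generation_id
        return True


dc = ToyDomainController(stored_generation_id="gen-1")
print(dc.check_generation("gen-1"))  # False: nothing changed
print(dc.check_generation("gen-2"))  # True: snapshot revert simulated
```

Even with this safeguard in place, the advice above stands: treat it as a safety net, not as permission to snapshot DCs.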

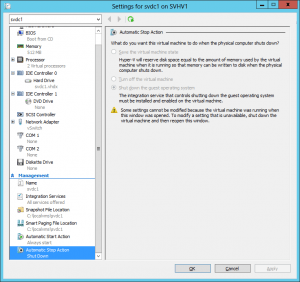

You should also avoid using Saved State with domain controllers. The risks aren’t nearly as severe as with snapshots. Mostly what you need to worry about is a long-saved DC causing tombstoned objects to become reanimated.

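You’ll want to have domain controllers shut down automatically when the host is shut down. In Hyper-V, set each domain controller VM’s Automatic Stop Action to shut down the guest operating system rather than save its state.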

Don’t get fancy with domain controllers. Cloning them is OK starting with 2012, but deploying a new DC from scratch is very simple. If you have a small domain, initial replication doesn’t take long. So, there’s no value in taking risks with DCs.

Try to keep other roles and applications off your DCs. Domain controllers do not have a local security database, which can cause odd problems with applications and services that expect one. Domain controllers have their own group policy settings, and DCs should never be moved from their organizational unit. Also, any application that depends on objects covered by a System State backup/restore (the COM+ repository especially) will be inextricably intertwined with DC backup/restore.

Leave Write Caching Alone

On 2012 and 2008 R2 (2012 R2+ is safe), there was a bug that caused Hyper-V to incorrectly report the status of write caching to guest operating systems using IDE drives. When a domain controller starts, it attempts to disable write caching. Hyper-V doesn’t allow this. When all is well, the domain controller will log Event ID 32: “The driver detected that the device <<IDE DRIVE>> has its write cache enabled. Data corruption may occur.” That lets you know that Hyper-V is correctly telling it that its attempt to disable write caching failed. The message is incomplete. It should be appended with: “, therefore Active Directory will use a non-caching I/O method for updating the directory.” If you do not see this message in your domain controllers’ logs shortly after boot-up and the domain controller is using vIDE, that’s when you have a problem. Ensure that you have KB2853952 installed on the host (again, not for 2012 R2+).

Examples

To help you in your own configurations, I will describe the two methods that I’ve used with Hyper-V.

The first was on Hyper-V Server 2008 R2 with Service Pack 1. There are two nodes in a cluster. Each node runs a non-highly-available DC on internal storage. There is a highly available DC stored on a CSV that holds all five FSMO roles. There is another DC in a remote site (physical). This cluster will start from a complete power-off state without being able to reach the DC in the remote site. There is a delay in accessibility of the CSV until at least one of the DCs is started.

The second is in my test lab and is much like my first. The differences are that it’s been on Hyper-V Server 2012 most of its life, I don’t have any highly available DC, and there is no other DC anywhere. This cluster and domain will also start from a cold power-off state with no problems. To reiterate, this configuration has no physical DC anywhere.

Summary

The primary point of this series is that it’s entirely acceptable to virtualize your domain controllers and eliminate physical DCs entirely. There is never a requirement for a physical DC. You need to carefully plan your deployment and recovery scenarios — just as you would for a completely physical deployment. There is no single correct way, so design a solution that fits your scenario. Due to excellent reader feedback and questions, there will be a third installment to this series that will look at troubleshooting and potential failure scenarios.

69 thoughts on "Demystifying Virtualized Domain Controllers Part 2: Practice"

Excellent article, especially on small systems where having 1 DC almost always makes more sense. It is probably also worth pointing out that many AD issues can be helped by having AD ntdsutil snapshots (especially if available from a regular scheduled task) and using the active directory recycle bin rather than having to revert to backups.

Good article that sums up some of the smoke surrounding placement, operation and recovery.

What about previous recommendations I’ve seen to place the AD database on a separate SCSI disk in the VM and to disable the physical disk cache? Are these still important in 2012 R2?

Hi Jeff,

I have never personally done that and I’m not entirely certain what it would really do for you. The separation was always recommended in the physical realm for performance reasons, but you’d need a pretty large AD database for that to be a concern.

Once you promote a domain controller, it automatically disables the write cache anyway. This behavior was actually one of the larger bugs in Hyper-V Server 2012, as the hypervisor was ignoring the disable request and AD data was being corrupted on graceless shut downs. I still have some research to do to ensure that the bug truly has been rectified in both 2012 and R2 (planned for part 3 of this series), but it’s not supposed to be a concern anymore.

I do recall that bug being fixed with a rollup in 2012, but I don’t remember the exact KB. My question was prompted by this article on virtualizing domain controllers in 2008 and 2008 r2:

http://technet.microsoft.com/en-us/library/virtual_active_directory_domain_controller_virtualization_hyperv(v=WS.10).aspx

The “Storage” section seems to indicate that the SCSI disks are not optional. For example…

“To guarantee the durability of Active Directory writes, the Active Directory database, logs, and SYSVOL must be placed on a virtual SCSI disk. Virtual SCSI disks support Forced Unit Access (FUA). FUA ensures that the operating system writes and reads data directly from the media bypassing any and all caching mechanisms.”

I didn’t realize that a part 3 was coming or I would have waited to see if you addressed this before asking.

http://support.microsoft.com/kb/2853952/en-us

The series was only planned to have two articles. We’ve had such great feedback (now including yours) that a third installment is warranted.

This is literally the first time I’ve ever seen that note about FUA. I will need to do some research to see. My instinct tells me that this is one of those things where the author is absolutely certain that the virtual SCSI chain will obey Windows’ insistence to not use caching where the virtual IDE chain is a little more questionable, and, as a result, is taking the safe route. I also notice as I read through that article that the author tends to use “do not…” in places where “make sure you understand the possible consequences before…” would be much more appropriate.

Never speak in absolutes, right? Looking forward to part 3.

Same here. Both part 1 and 2 are excellent! How can I be informed when part 3 is out?

Also I’ve never heard of Altaro Hyper-V Backup v4 until now. How does it compare to the free offer from Veeam and PHD Virtual?

Hi Tom,

You can subscribe to updates on the blog via email – it’s just blog updates you get by signing up. http://hub.altaro.com/hyper-v/sign-up/

As for Altaro Hyper-V Backup: Unlike Veeam and PHD Virtual, it’s built specifically for Hyper-V – We know the platform inside out and have a thorough understanding of what it takes to make Hyper-V backup/restore reliable, fast and easy to handle.

Here’s a sample of the kind of spontaneous feedback we get: http://www.linkedin.com/company/altaro-software/altaro-hyper-v-backup-974447/product

If you’d like to try it out you can download a copy here: http://www.altaro.com/hyper-v-backup/ (You can get started in a matter of minutes)

Sam

Eric:

As many commenters have suggested in Ben Armstrong’s dated post on time-sync vis-à-vis Hyper-V, his guidance is not “gospel” throughout Microsoft, and many organizations have real-world experience that seems to contradict Ben’s advice.

I have administrated and maintained several organizations’ AD environments while increasingly leveraging Hyper-V since 2010 and have found the easiest and least error-prone approach to time-sync is to (1) disable Hyper-V integration services time-sync, (2) configure an authoritative domain time source that syncs with an external time source, (3) domain-join my Hyper-V servers, and (4) if using Linux VMs, ensure that they sync to the same external time source using NTP. [Note that in my environments, all Windows machines interacting with the domain are domain-joined resources.]

Using this approach, I have never had time sync issues, despite physical Hyper-V host failures, VM failures, etc.

I am not saying my approach is the ONLY correct approach or that it is the best approach, but your reiteration and magnification of Ben Armstrong’s directive to never, never, never disable HIS time-sync seems to run counter to my, and many others’, real-world experience.

Thanks for your articles and I look forward to reading your future contributions.

That is a fair assessment. I will endeavor to make a better effort at looking at my postings from a sideways standpoint before publishing them. Especially since I preach that philosophy.

However, I should, for posterity, point out that disabling time sync in a guest will inevitably guarantee that guest has drift if it is ever saved or paused. If it is in that state beyond the maximum skew limit, then only manual intervention can correct it. “Never” might be a strong phrasing, but “most people who cannot definitively illustrate a problem in their own environment” is probably not too far beyond the pale.

I just recovered the infrastructure from a 10min time offset and it looks like Ben’s recommendation no longer works. While troubleshooting the issue I was referred to this technet article: https://technet.microsoft.com/en-us/library/virtual_active_directory_domain_controller_virtualization_hyperv(v=ws.10).aspx

Under Time Service paragraph the note says that partial disabling of VM IC Provider no longer works.

At first I took this article with a grain of salt and kept on looking for other culprits. Disabling the Time Service under Integration Services fixed the issue.

Here is the important observation: by partially disabling the VM IC Provider (inside the VM via regedit) you will note that the “w32tm /query /source” is showing expected ntp sources (i.e. an external NTP for virtual DC and the DC itself for other virtual member servers). However, the time is still updating from the Hyper-V host.

How I realized it: I had 3 servers: HV joined to domain, virtual DC and virtual FS. All 3 servers with an offset of 10 min (e.g. 5:00PM). VM IC partially disabled (Ben’s article) on virtual DC and virtual FS.

DC (virtual) source shows: pool.ntp.org

FS (virtual) source shows: DC

HV (host) shows: DC

On DC I run “w32tm /config /update” and the time jumps to 4:50PM (correct time). After 3 seconds it jumps back to 5:00PM.

Same thing on the virtual FS.

I was able to fix the issue only by disabling the time sync integration service.

I understand the reasons why Time Sync shouldn’t be disabled. Those are valid implications. And I agree that a workaround should never be labeled as best practice. But this is the only workaround that works. The problem here is that Microsoft needs to fix the bug with the unintended VM IC Provider kick in, when the domain hierarchy should take precedence.

Max

Hi Max,

I’m going to test this out on my systems and see if I get the same behavior. Thanks for bringing me something testable!

My testing seems to be producing the same outcome. I still have a bit more poking to do, but it does look like the Hyper-V Time Synchronization service is overriding the guest settings. Not good.

I have dealt with Time Drift issues in production settings multiple times now. It can really wreak havoc on a network in short order if you are using virtualized domain controllers.

Here are a couple of things I have gleaned…

1. Dynamic Memory Allocation is buggy – it really is – It has caused problems on all of my linux VMs and it has also caused problems on most Windows Server and Windows 7 systems I have virtualized. In this case, it caused a virtualized Domain Controller to RAPIDLY lose time whenever it was shut down. I.E. this was in an office that had some intermittent power issues and the Hyper-V host got shutdown every now and then. When it would come back up, the Domain Controller would be off by several hours. All of the workstations in the office would come online and sync time to the local DC… and now we have a big problem. The fix was to turn off Dynamic Memory Allocation.

2. In addition to that, I have seen circular time sync happen when the Hyper-V host is domain-joined and set to sync its time to the nearest DC, which happens to be virtualized on that Hyper-V host with the Time Sync integration service enabled. They try to sync to one another, and in the end there is a lot of time drift on both the host and the domain controller.

3. Sync to an outside NTP server. This was more for an environment where the PDC was located offsite and there was a site-to-site VPN between that wasn’t particularly reliable. Easiest thing to do was to just use W32tm service and override to sync to a public NTP server. I realize this probably isn’t great practice but it was better than ending up with a few minutes of drift on regular occasion when the PDC couldn’t be reached.

Anyhow, I didn’t realize that Time Drift was a “thing” with Hyper-V 🙂 – thought it was just my own personal hell. Was surprised when I came across your article!

Thanks!

PS – I have written about both the Dynamic Memory and Circular Time Sync issues as well here: http://www.kiloroot.com/hyper-v-dynamic-memory-time-sync-issues-on-the-vm/

and here: http://www.kiloroot.com/ntp-circular-time-sync-windows-server-2012-r2-hyper-v/

Cheers!

I’ve never heard of Dynamic Memory causing anything like that. I don’t even know what hooks it would have to affect time in that way. Not sure if you’re aware, but there was a recent hotfix released for DM: https://support.microsoft.com/en-us/kb/3095308. Hopefully, your experience is very uncommon. I can say that, in my test environment, both of my domain controllers use Dynamic Memory and have always remained perfectly in sync, even though I apparently was not truly synchronized to external time sources the way that I thought I was. That said… persistent bad power problems scream for line-conditioning UPSs. With problems like that, they’d pay for themselves in one outage.

You also might be interested in our more recent article on time and Hyper-V: http://www.altaro.com/hyper-v/hyper-v-time-synchronization/.

With Windows Server 2012 (as hypervisor and as AD VM), you have a VM-Generation-ID that works with the USN and InvocationID.

Before any transaction, AD reads the VM-Generation-ID and compares it to the last value in the directory. If there is a mismatch, an internal mechanism resets the InvocationID.

Check that http://technet.microsoft.com/en-us/library/hh831734.aspx

Hi Eric,

I’m planning to deploy a virtual domain controller (vDC) on a Windows Server 2012 R2 Hyper-V host in a clustered environment. Is it better for the vDC to be created on a CSV and made highly available, or just installed on the host’s DAS?

Thanks,

Jojo

If it’s the only DC you have, then I would almost certainly place it on direct-attached storage and not make it highly available.

If it’s not the only DC, then it’s up to you. I generally incline toward not making them highly available unless I’ve already got non-HA DCs on each node.

Hi Eric,

I do have a physical domain controller as the primary source of AD. I’m thinking (the vDC will act as a secondary DC in case the primary DC fails) if I should make it highly available that if one of my nodes goes offline, it will just transfer to another node.

Thanks,

Jojo

Your rationale makes sense to me.

Eric,

Thanks again for your insight.

Jojo

I’ve tried it in my demo environment with one server running 2012 R2 which has the Hyper-V role.

Within Hyper-V I created the Domain Controller. However, when my Hyper-V host restarts and boots, it logs a lot of failures in my event log, resulting in the Hyper-V host becoming unusable.

For instance:

– The Netlogon service is unable to register with a DC even after a netlogon restart, leading to authentication failures

– The RD Session Host server cannot register ‘TERMSRV’, which results in RDP being unavailable

Any ideas would be appreciated because this setup would be ideal.

Netlogon problems are expected for a time after startup. Restarting the service should do nothing but hasten the inevitable, as it should eventually figure everything out on its own.

RD Session Host is not something I’ve tried in this setup and you may not ever get it to work without some extra intervention. Hyper-V and the base management operating system can work when no DC is reachable, but that isn’t going to be true for every service. But, since it seems like you’ve figured out a few simple steps you have to take to make it all work, you can drop all that into a PowerShell script and set it as a startup script.

Just to add to my previous comment.

To fix the Hyper-V host, the following services need to be restarted in this order:

1. dnscache: to register in DNS

2. netlogon: to set up authentication

3. netprofm: to enable the domain network profile instead of Private

4. nlasvc: to enable the domain network profile instead of Private

To resolve the time sync issue – the solution that I have used is to change the time source for the Hyper-V Host(s) to the external NTP provider of choice (time.nist.gov, us.pool.ntp.org or a local router providing the service).

My thinking here is that since the host hardware will have the correct time, integration services will “reset” any local VMs, including DCs, to the correct time.
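
For the host-side change described above, a minimal sketch might look like the following. It assumes the built-in `w32tm` tool and uses the peers named in the comment as defaults; the function names `ntp_commands` and `apply_commands` are hypothetical. It only covers pointing the host at an external source and says nothing about how the guests should be configured.

```python
import subprocess

# Peers named in the comment above; swap in whichever source you trust.
DEFAULT_PEERS = ["time.nist.gov", "us.pool.ntp.org"]


def ntp_commands(peers=DEFAULT_PEERS):
    """Build the w32tm calls that point this machine at manual NTP peers."""
    if not peers:
        raise ValueError("at least one NTP peer is required")
    # w32tm takes the peers as a single space-delimited list.
    peer_list = " ".join(peers)
    return [
        ["w32tm", "/config", f"/manualpeerlist:{peer_list}",
         "/syncfromflags:manual", "/update"],
        ["w32tm", "/resync"],
    ]


def apply_commands(commands, dry_run=True):
    """Print each command; run it only when dry_run is False."""
    for command in commands:
        print(" ".join(command))
        if not dry_run:
            # check=True raises CalledProcessError if w32tm reports a failure.
            subprocess.run(command, check=True)


if __name__ == "__main__":
    apply_commands(ntp_commands(), dry_run=True)
```

The commands are built separately from the code that runs them so the configuration can be reviewed, or pasted into an elevated prompt by hand, before anything changes on the host.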

Hi Eric,

I’m planning to install a single physical server acting as a Hyper-V host running Windows Server 2012 R2 core (free Hyper-V) with two VMs (one DC and one file server). Since it’s a core server installation, I need to manage it remotely from my laptop. As far as I know, the management PC and the host server have to be joined to the same domain, but I’m worried that joining the host to the domain will lead to another “chicken and egg” scenario because, after a reboot, the host will start before the domain controller. I know that there is a way to manage a server in a workgroup, but I’m not sure if it’s safe to do that in a production environment. Does it mean that I have to have a separate machine running an additional DC to avoid issues?

Thank you

Konrad

Part 1 of this series explains that there is no “chicken and egg” scenario.

Thanks for this article. I have been installing AD domain controllers along with Hyper-V services and now see the error of my ways. Since reading this about a month ago, I have started making the physical server Hyper-V only and putting the AD DC on [one of] the VMs. My question now is about servers where I had combined the two in the past. If I go back and add a DC onto a VM and remove/demote the DC from the physical server (leaving just Hyper-V), will demoting the DC RE-ENABLE the write caching on the physical Hyper-V server? If not, how do I turn that back on?

Thanks again!

Bob

Bob, that is a fantastic question, and not least because I do not know the answer. The act of disabling the cache is part of the functionality of ADDS and is carried out at each boot. My suspicion is that once ADDS is gone, the component that performs that action will go with it and therefore the cache will remain enabled.

Hi Eric,

Thanks for that excellent post; it convinced me. There is one little point that I think should be mentioned because we sometimes take it for granted: for your credentials to be cached, you have to log in once while a DC is reachable. So I would add that you should log in at least once to the Hyper-V server hosting your DC so that your credentials are cached, and log in again whenever your password changes to make sure. I learned that lesson the hard way…

The old password is still cached if the DC is unreachable. The most important thing is keeping track of the local admin password because that will always work.

Hi Eric,

After reading this blog, my confidence in deploying a virtual DC went up, but I have a question about a 2008 DC on Hyper-V 2012 R2.

I have a two-node Windows Hyper-V 2012 R2 cluster with a few Windows 2012 R2 VMs running on it. The current domain functional level is 2008.

I want to install a Windows 2008 DC VM for a legacy application. My question is:

1. Is a 2008 DC supported on Windows 2012 R2 Hyper-V? Most blogs I read suggested using a Gen 1 VM for the 2008 boot disk and a SCSI Gen 2 VHDX for the AD data store. Is this the correct option?

Hi Vic,

2008 is on the supported list for 2012 R2, so that won’t be a problem. 2008 cannot be used inside a generation 2 virtual machine because it is not UEFI aware. It can be inside a VHDX because it is not aware of the difference. Whether or not you choose to use an additional vSCSI disk for the data store is up to you. I have not had the problems that others have had on the vIDE chain. I suspect that it has something to do with overall system stability. If you’re comfortable with the extra work, go ahead and add a vSCSI disk and place your AD store there.

I do have one question, though. It seems odd to have an application that requires a 2008 domain controller. Can it not work with a newer DC but with the domain left at 2008 functional level? The reason I ask is that 2012 has superior behavior characteristics when virtualized.

Eric,

Thanks for the response. The reason I need to keep the DC at the 2008 level is that we still have a Windows 2000 server running an application written in COBOL.

My concern is that it would break if I added a Windows 2012 R2 DC.

As soon as I have moved all my critical servers to the virtual environment, I will have time to test the legacy application and then upgrade the DC to 2012 R2.

Are you saying I can use a VHDX as the boot disk for Windows 2008?

Yes, you can use a VHDX with any guest at all. VHD vs. VHDX is a concern for the hypervisor only. The guest has no idea. What you can’t do is install Server 2008 in a generation 2 virtual machine.

What you’re reading on those other blogs is about keeping your Active Directory database on a VHD or VHDX attached to the virtual SCSI controller instead of the virtual IDE controller. The virtual IDE controller had a bug in the past in which it did not properly notify the guest OS that it was unable to disable the write cache. That caused domain controllers stored on them to lose data if there was an unclean shutdown. That particular problem has been patched, but it is still not possible to disable the write cache on drives on a vIDE controller. Since the guest OS is now aware of that, data loss shouldn’t be the problem that it was then. However, it still doesn’t hurt to put the AD DB on a disk on a vSCSI controller.

Thank you for the clarification. I really appreciate the time you spent helping us.

I think you missed something sort of critical. If you set up only virtualized domain controllers in a Windows 2008 R2 environment, you will create the chicken and egg problem. If you shut down the Hyper-V host, it will never be able to boot the guests back up because it needs to contact a domain controller in order to do so, and the only domain controllers are the guests.

They fixed this in Windows 2012 and beyond, where the Hyper-V host no longer needs to contact a domain controller in order to authorize starting the guest VMs.

Except that, again, I’ve been doing this for years. There is no “chicken and egg” problem. It is a myth. There has never been a Microsoft-based operating system that will fail to boot in the absence of a domain controller. In 2008 R2 and prior, if a virtualized domain controller was clustered and no other DCs were reachable, then the cluster service would fail to start. However, the guest would still be present and could still be started through manual intervention. The only possible “chicken and egg” problem is if ALL virtualized domain controllers are on SMB 3 storage and ALL are down simultaneously. Even then, recovery is possible.

Again. I’ve been doing this for years.

“The only possible “chicken and egg” problem is if ALL virtualized domain controllers are on SMB 3 storage and ALL are down simultaneously. Even then, recovery is possible.”

Just out of curiosity, what is the general process to recover from that? I have seen quite a few articles of people putting the DC on the host storage, but I have not found a solution online to this issue with SMB storage.

Log in to the system with the DC’s files using local credentials to recover them. They could be placed on removable storage or copied via UNC to another machine using its local credentials. If an existing Hyper-V host has sufficient local storage it could be built there, or another Hyper-V system could be stood up and the files imported to a virtual machine locally.

I moved all my client’s existing servers to 2012 R2 Hyper-V. The last phase was retiring an SBS 2011 server in favor of a 2012 R2 DC VM. The old SBS server is now a secondary Hyper-V host used for replication. What are the pros/cons of replicating the current DC VM versus standing up a secondary DC VM? Note: there are fewer than 30 workstations, and the current DC is hosting some files/shares from the old SBS server. I know this is not the optimal setup, but I am working within budget/resource limits.

There is no value in replicating a domain controller. Make another one.

Time Drift issue.

Update: This is now an outdated practice. Completely disable time synchronization for virtualized domain controllers.

Is this a non-issue now? And why? Can you explain?

See if this helps: http://www.altaro.com/hyper-v/hyper-v-time-synchronization/.

Hi Eric,

I have a small question. I ran my domain on 2 Hyper-V hosts. When I restart the whole environment, I have “unidentified network” on the host. I usually have to activate the firewall, so is there a trick to make the hosts get the domain network?

Thanks in advance

“Unidentified network” is an issue with the Network Location Awareness service, often related to timeouts. There are many possible causes. Teaming seems to have an effect (http://www.altaro.com/hyper-v/troubleshooting-hyper-v-webinar-q-a-follow-up/).

Would it be easier to duplicate your domain firewall settings to the other profiles?
